
Answered by rusty1s on Apr 23, 2021

Replies: 1 comment 7 replies

-

|

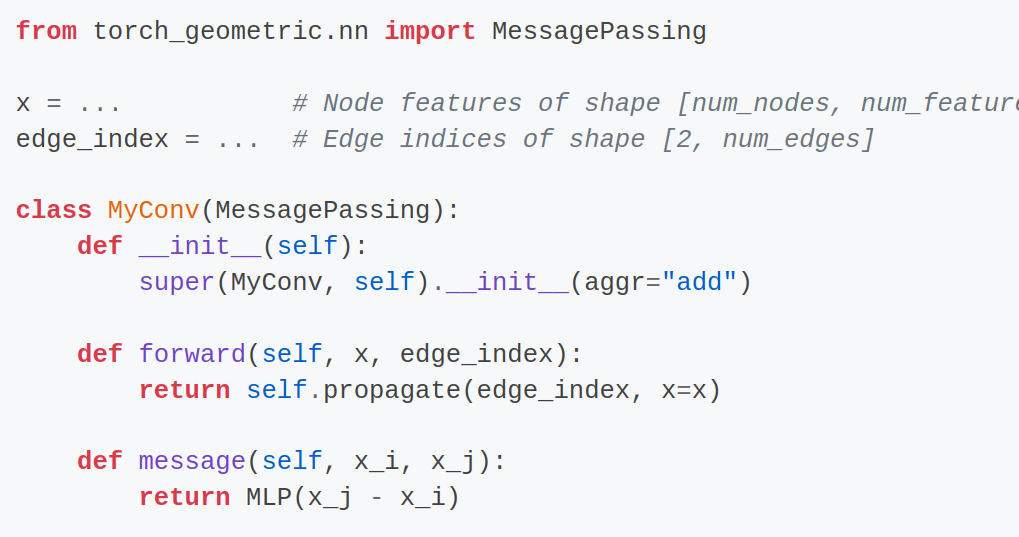

Yes, that does not make a real difference. We do it this way here because it is more memory-friendly to perform the transformation node-wise instead of edge-wise, i.e.:

```python
def forward(self, x, edge_index):
    x = x @ self.weight  # [num_nodes, out_channels]
    return self.propagate(edge_index, x=x)
```

is faster than doing

```python
def forward(self, x, edge_index):
    return self.propagate(edge_index, x=x)

def message(self, x_j):
    return x_j @ self.weight  # [num_edges, out_channels]
```

since usually |E| >> |N|, so the edge-wise version runs the matrix multiplication once per edge instead of once per node.
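To make the trade-off concrete, here is a minimal, framework-free sketch in plain Python (no PyTorch or PyTorch Geometric). It assumes sum aggregation (like `aggr="add"`), and the helper names `matmul_rows`, `scatter_sum`, `node_wise` and `edge_wise` are hypothetical, chosen only for illustration. Both variants give the same output because selecting a row and then multiplying by the weight equals multiplying first and then selecting, i.e. (XW)_j = x_j W. The sketch counts scalar multiplications as a proxy for work; it does not measure memory.

```python
# Minimal, framework-free sketch (plain Python lists, no PyTorch) comparing the
# node-wise and edge-wise placement of a linear transform in a sum-aggregation
# message-passing step. All function names here are hypothetical.
from typing import List, Tuple

Matrix = List[List[float]]
EdgeIndex = Tuple[List[int], List[int]]  # (source nodes j, target nodes i)


def matmul_rows(rows: Matrix, weight: Matrix) -> Tuple[Matrix, int]:
    """Multiply every row by `weight`; also return the scalar-multiplication count."""
    in_dim, out_dim = len(weight), len(weight[0])
    out = [
        [sum(r[k] * weight[k][c] for k in range(in_dim)) for c in range(out_dim)]
        for r in rows
    ]
    return out, len(rows) * in_dim * out_dim


def scatter_sum(messages: Matrix, dst: List[int], num_nodes: int, out_dim: int) -> Matrix:
    """Sum the messages arriving at each target node (like aggr="add")."""
    out = [[0.0] * out_dim for _ in range(num_nodes)]
    for msg, i in zip(messages, dst):
        for c in range(out_dim):
            out[i][c] += msg[c]
    return out


def node_wise(x: Matrix, weight: Matrix, edge_index: EdgeIndex) -> Tuple[Matrix, int]:
    """Transform once per node, then gather x_j per edge and aggregate."""
    src, dst = edge_index
    h, mults = matmul_rows(x, weight)  # [num_nodes, out_channels]
    messages = [h[j] for j in src]  # [num_edges, out_channels]
    return scatter_sum(messages, dst, len(x), len(weight[0])), mults


def edge_wise(x: Matrix, weight: Matrix, edge_index: EdgeIndex) -> Tuple[Matrix, int]:
    """Gather x_j per edge first, then transform once per edge (as in message())."""
    src, dst = edge_index
    x_j = [x[j] for j in src]  # [num_edges, in_channels]
    messages, mults = matmul_rows(x_j, weight)  # [num_edges, out_channels]
    return scatter_sum(messages, dst, len(x), len(weight[0])), mults


# Toy graph: 3 nodes with 2 features each, all 6 directed edges between them.
x = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
weight = [[1.0, 0.0, 2.0], [0.0, 1.0, 1.0]]  # in_channels=2 -> out_channels=3
edge_index = ([0, 1, 2, 0, 1, 2], [1, 2, 0, 2, 0, 1])

out_n, cost_n = node_wise(x, weight, edge_index)
out_e, cost_e = edge_wise(x, weight, edge_index)
print(out_n == out_e)  # True
print(cost_n, cost_e)  # 18 36
```

With |N| = 3 and |E| = 6 the edge-wise version already does twice the multiplications, and it also builds the gathered `[num_edges, in_channels]` input before multiplying; on real graphs, where |E| is typically much larger than |N|, the gap grows accordingly.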


Answer selected by mdanb

