
Answered by rusty1s on Jul 29, 2021



Answer selected by dhorka


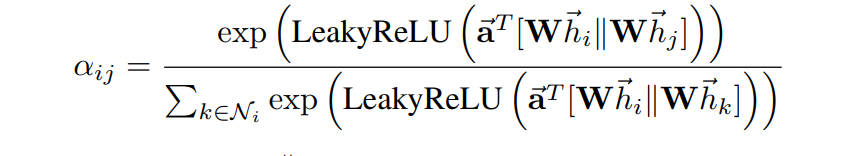

We concatenate implicitly, which leads to a smaller memory footprint. In particular, we hold two versions of the `a` parameter vector, one for `W @ h_i` (named `att_r`) and one for `W @ h_j` (named `att_l`). We then multiply the source and destination node features with these parameters and sum the resulting parts together. This is equivalent to first concatenating source and destination node features and multiplying with a single attention parameter vector afterwards. Hope this is understandable :)
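To make the equivalence concrete, here is a small plain-Python sketch. It is not PyG's actual `GATConv` code. It assumes a single attention head and toy data, and it skips the LeakyReLU and softmax that GAT applies afterwards. Those come after the sum, so they do not affect the split. Only `att_l` and `att_r` come from the answer above; `dot`, `matvec`, `alpha_src`, `alpha_dst`, and the toy values are hypothetical. The key identity is `a^T [W h_i || W h_j] = att_r^T (W h_i) + att_l^T (W h_j)`. Because of this, each node needs only one scalar per half of `a`, and each edge only needs a gather plus an add. Nothing of size `2F'` has to be materialized per edge.

```python
# Minimal sketch (assumption): plain-Python lists stand in for tensors,
# single attention head, no LeakyReLU/softmax. Helper names are hypothetical.

def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def matvec(W, h):
    """Compute W @ h for a matrix W given as a list of rows."""
    return [dot(row, h) for row in W]

# Hypothetical toy data: 3 nodes with 2 input features, W maps 2 -> 2.
W = [[1.0, 2.0], [0.5, -1.0]]
h = [[1.0, 0.0], [0.0, 1.0], [2.0, 3.0]]
att_r = [0.3, -0.2]  # applied to W @ h_i (target node)
att_l = [0.7, 0.1]   # applied to W @ h_j (source / neighbor node)
edges = [(0, 1), (2, 1), (1, 0)]  # (source j, target i) pairs

Wh = [matvec(W, x) for x in h]  # transformed node features

# Explicit: build [W h_i || W h_j] for every edge (list "+" concatenates),
# then take the dot product with a = [att_r || att_l].
a = att_r + att_l
explicit = [dot(a, Wh[i] + Wh[j]) for j, i in edges]

# Implicit: one scalar per node for each half of `a`,
# then a gather and an add per edge.
alpha_dst = [dot(att_r, x) for x in Wh]
alpha_src = [dot(att_l, x) for x in Wh]
implicit = [alpha_dst[i] + alpha_src[j] for j, i in edges]

assert all(abs(e - m) < 1e-9 for e, m in zip(explicit, implicit))
print(implicit)
```

In the explicit version, the per-edge storage grows with the number of edges times `2F'`. The implicit version stores only two scalars per node (per head) before the per-edge gather. This is where the smaller memory footprint comes from.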