Why torch-geom doesnt need fixed number of points for PointNetConv ? #3051

-

|

I was wondering, in the PointNet paper as well as in tutorials the convolution part of PointNet needs a fixed number of points thus the subsampling or upsampling in somes cases. How come |

Beta Was this translation helpful? Give feedback.

Replies: 2 comments 2 replies

-

|

Most GNN operators such as PointNet++ share weights across the node/point dimension, so in theory there is no limitation in applying GNNs to a varying number of nodes. PyG makes use of sparse tensor arithmetics and can therefore handle point clouds of varying size. In particular, we do this by concatenating points from different examples in the point dimension, and keep track of which point belongs to which example via an additional |

Beta Was this translation helpful? Give feedback.

-

|

Sorry but I don't get it. Well I think I understand the idea of sharing weights a bit, but on the other hand I can't get to represent it with perceptrons.

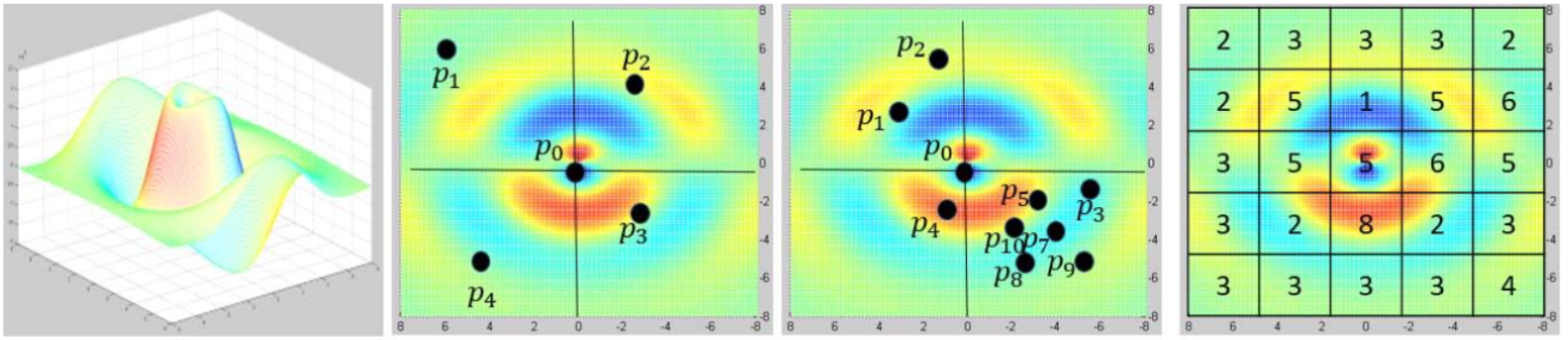

If I understand well, we are learning a continuous convolution 3D operator which explains why whether there are five points or a hundred (like in the second and third images, from PointConv paper), the operation is the same. But while convolutions on 2D images is done in a fixed structure of pixels which allows to use a MLP equivalent to a 3x3 matrix over and over for instance, points clouds are unstructured, so how is it handled by an MLP ? You keep track of which point belongs to which example via an additional |

Beta Was this translation helpful? Give feedback.

Most GNN operators such as PointNet++ share weights across the node/point dimension, so in theory there is no limitation in applying GNNs to a varying number of nodes. PyG makes use of sparse tensor arithmetics and can therefore handle point clouds of varying size. In particular, we do this by concatenating points from different examples in the point dimension, and keep track of which point belongs to which example via an additional

batchvector. As a result, we do not have a dedicated batch dimension, which is in contrast to most other implementations that batch point clouds via a[batch_size, num_points, 3]representation. This explains why alternative implementations can only work on f…