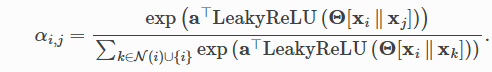

In heterogeneous graph learning, let's say there is an edge such as type1_n1 -> target. Is attention performed on type1 and type2 separately, with the results aggregated afterwards?


Replies: 1 comment 2 replies

Yes, attention is computed independently for every edge type, i.e., attention is performed on type1 and type2 individually. If you want to compute attention jointly across both edge types, it's probably best to merge them into a single edge type.
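
To make the difference concrete, here is a minimal sketch in plain Python. It is not the library's implementation. It only illustrates the scope of the softmax normalization. The raw scores and the names `scores_by_type`, `per_type_attention`, and `merged_attention` are made up for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of raw attention scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw attention scores for the neighbors of ONE target node,
# grouped by the edge type that connects each neighbor to the target.
scores_by_type = {
    "type1": [2.0, 1.0],
    "type2": [0.5, 0.5, 3.0],
}

# Separate edge types: the softmax is normalized within each edge type,
# so the weights of every edge type sum to 1 on their own.
per_type_attention = {t: softmax(s) for t, s in scores_by_type.items()}

# Merged edge type: all neighbors compete in a single softmax,
# so the weights sum to 1 across both former edge types together.
merged_scores = [s for scores in scores_by_type.values() for s in scores]
merged_attention = softmax(merged_scores)

print(per_type_attention)
print(merged_attention)
```

With separate edge types, a type1 neighbor only competes with other type1 neighbors for attention. After merging, it also competes with the type2 neighbors, so its weight can only shrink or stay the same.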
