Node feature embeddings are identical. #3747

Unanswered

lingchen1991 asked this question in Q&A

Replies: 1 comment 6 replies

This is hard to tell without knowing your input feature matrix and PyG splits the weight vector

I used GATConv and SAGPooling to classify an undirected complete graph with 48 nodes (torch-geometric version 1.6.1).

However, the output of GATConv(512, 3) shows that all 48 node feature embeddings are the same:

tensor([[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237],

[-43.2148, -0.8637, -0.2237]], device='cuda:0',

grad_fn=)

I then looked into the source code of the GATConv class and found that the following line is the relevant one:

    out = self.propagate(edge_index, x=(x_l, x_r), alpha=(alpha_l, alpha_r), size=size)

The inputs 'x_l', 'x_r', 'alpha_l', and 'alpha_r' take different values for different nodes. However, 'out' contains the same 3-dimensional feature vector for every node, as in the tensor above.

I also looked at the self.propagate function in the MessagePassing class, but its signature does not match the arguments passed to it in the GATConv class.

Finally, I inspected the value of 'alpha' in the message function of the GATConv class. All entries are exactly the same, with the value 1 / 48 = 0.0208.
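To illustrate why a uniform alpha would produce identical rows, here is a minimal NumPy sketch (not the PyG implementation). It assumes a complete graph in which every node attends to all 48 nodes, itself included, and uses random stand-in values for the transformed features; the names `x_l`, `alpha`, and `out` only mirror those in the question.

```python
import numpy as np

rng = np.random.default_rng(0)
num_nodes, out_channels = 48, 3

# Hypothetical transformed node features (stand-in for x_l); every row differs.
x_l = rng.normal(size=(num_nodes, out_channels))

# Uniform attention: each target node i weights each source node j by 1/48.
alpha = np.full((num_nodes, num_nodes), 1.0 / num_nodes)

# Weighted aggregation: out[i] = sum_j alpha[i, j] * x_l[j]
out = alpha @ x_l

# Every row collapses to the mean of x_l, so all 48 embeddings coincide.
print(np.allclose(out, x_l.mean(axis=0)))
```

With uniform weights, each node's aggregate is simply the average over the same set of neighbors, so the per-node differences in `x_l` cannot survive the aggregation step.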

Do you have any clues about what is going on here?

Also, how does the self.propagate function work? Why is the dimension of alpha_l/r 48 while the dimension of alpha_i/j is 48*48?
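The jump from 48 to 48*48 can be sketched as a per-edge gather. The NumPy snippet below is an illustration under assumptions, not PyG's actual code: it assumes the default source-to-target flow, where row 0 of `edge_index` holds source nodes and row 1 holds target nodes, that keyword arguments ending in `_j` are gathered at the source and those ending in `_i` at the target, and that the graph has one edge for every ordered pair of nodes including self-loops.

```python
import numpy as np

num_nodes = 48

# Complete graph with self-loops: one edge (j -> i) for every ordered pair.
src, dst = np.meshgrid(np.arange(num_nodes), np.arange(num_nodes))
edge_index = np.stack([src.ravel(), dst.ravel()])  # shape [2, 48 * 48]

rng = np.random.default_rng(0)
alpha_l = rng.normal(size=num_nodes)  # one score per node, used in the source role
alpha_r = rng.normal(size=num_nodes)  # one score per node, used in the target role

# Per-edge gather: "_j" picks the source node of each edge, "_i" the target node.
alpha_j = alpha_l[edge_index[0]]
alpha_i = alpha_r[edge_index[1]]

print(alpha_l.shape, alpha_j.shape)  # (48,) (2304,)
```

Under these assumptions the per-node tensors have one entry per node, while the `_i`/`_j` tensors seen inside the message function have one entry per edge, which is 48*48 = 2304 here.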

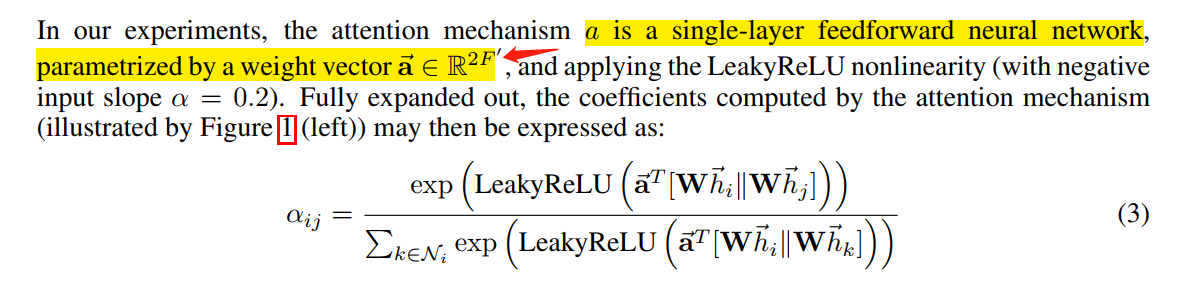

BTW, in the original paper there is a weight vector a that computes the alphas from the concatenation of two node embeddings. Hence, the dimension of a should be twice out_channels. However, I cannot find any learnable parameter with this dimension.
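The reply above notes that PyG splits the weight vector. A small NumPy check shows why splitting loses nothing: a dot product with a concatenated vector equals the sum of two dot products with the halves. The names `a_first`, `a_second`, `wh_i`, and `wh_j` are hypothetical and chosen only for this sketch; how the two halves map onto PyG's own parameters is not asserted here.

```python
import numpy as np

rng = np.random.default_rng(0)
out_channels = 3

# Paper-style attention vector of size 2 * out_channels, split into two halves.
a = rng.normal(size=2 * out_channels)
a_first, a_second = a[:out_channels], a[out_channels:]

# Hypothetical transformed embeddings of a target node i and a source node j.
wh_i = rng.normal(size=out_channels)
wh_j = rng.normal(size=out_channels)

# Score computed on the concatenation, as written in the paper.
score_concat = a @ np.concatenate([wh_i, wh_j])

# Same score computed from two separate vectors of size out_channels.
score_split = a_first @ wh_i + a_second @ wh_j

print(np.isclose(score_concat, score_split))
```

So two learnable vectors of size out_channels carry the same information as one vector of size 2 * out_channels, and the per-node halves can be computed once and then combined per edge.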

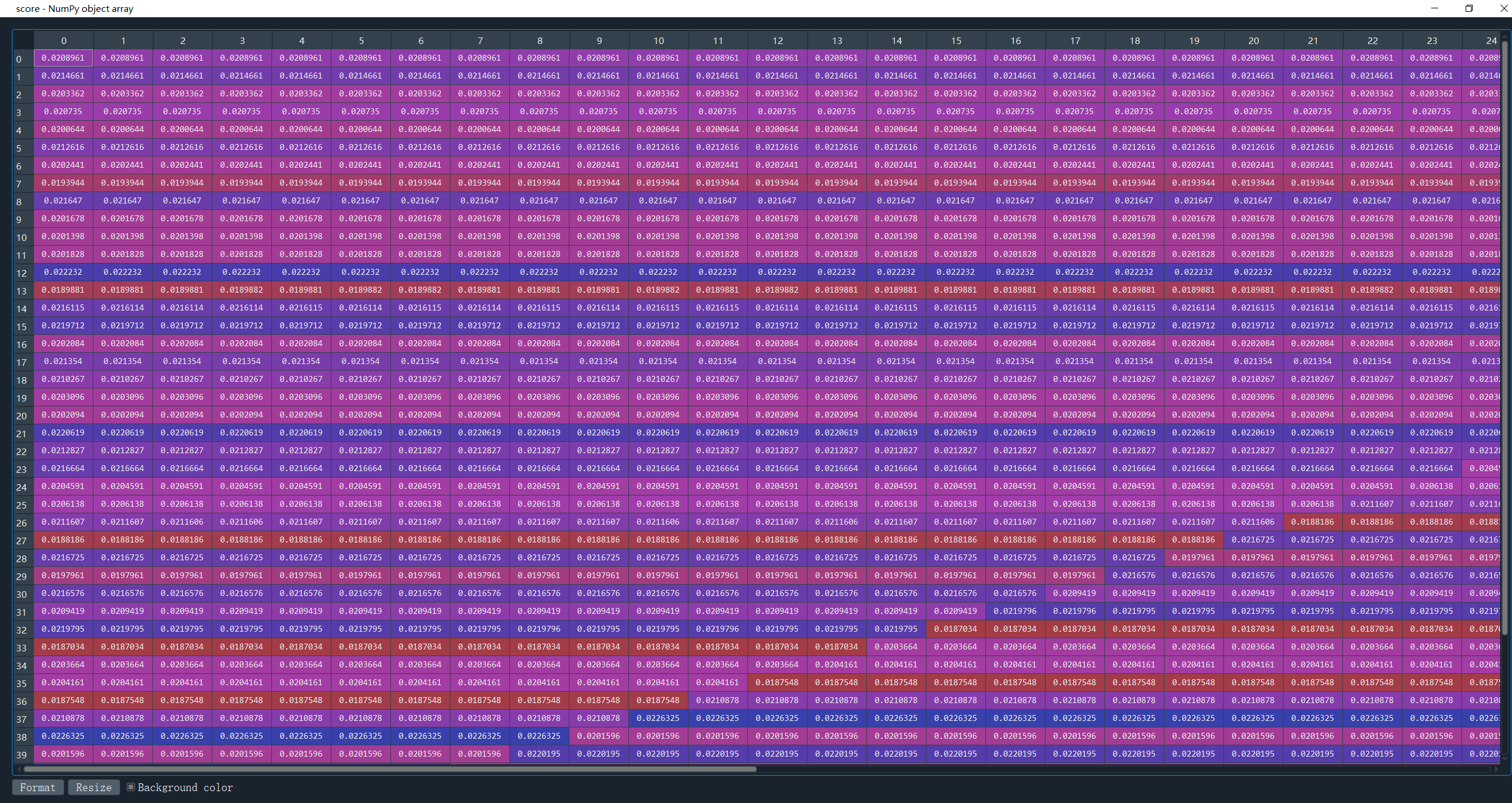

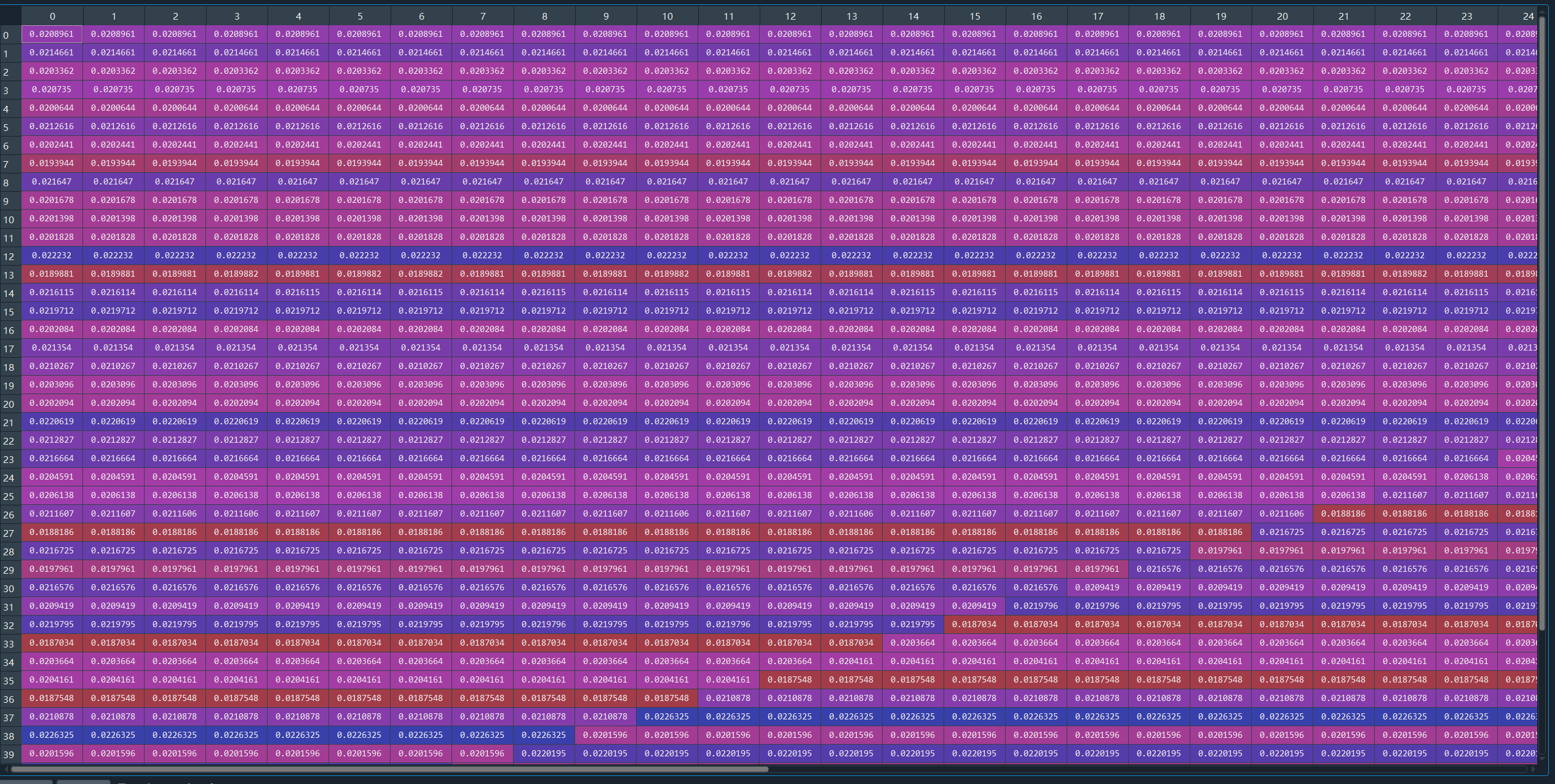

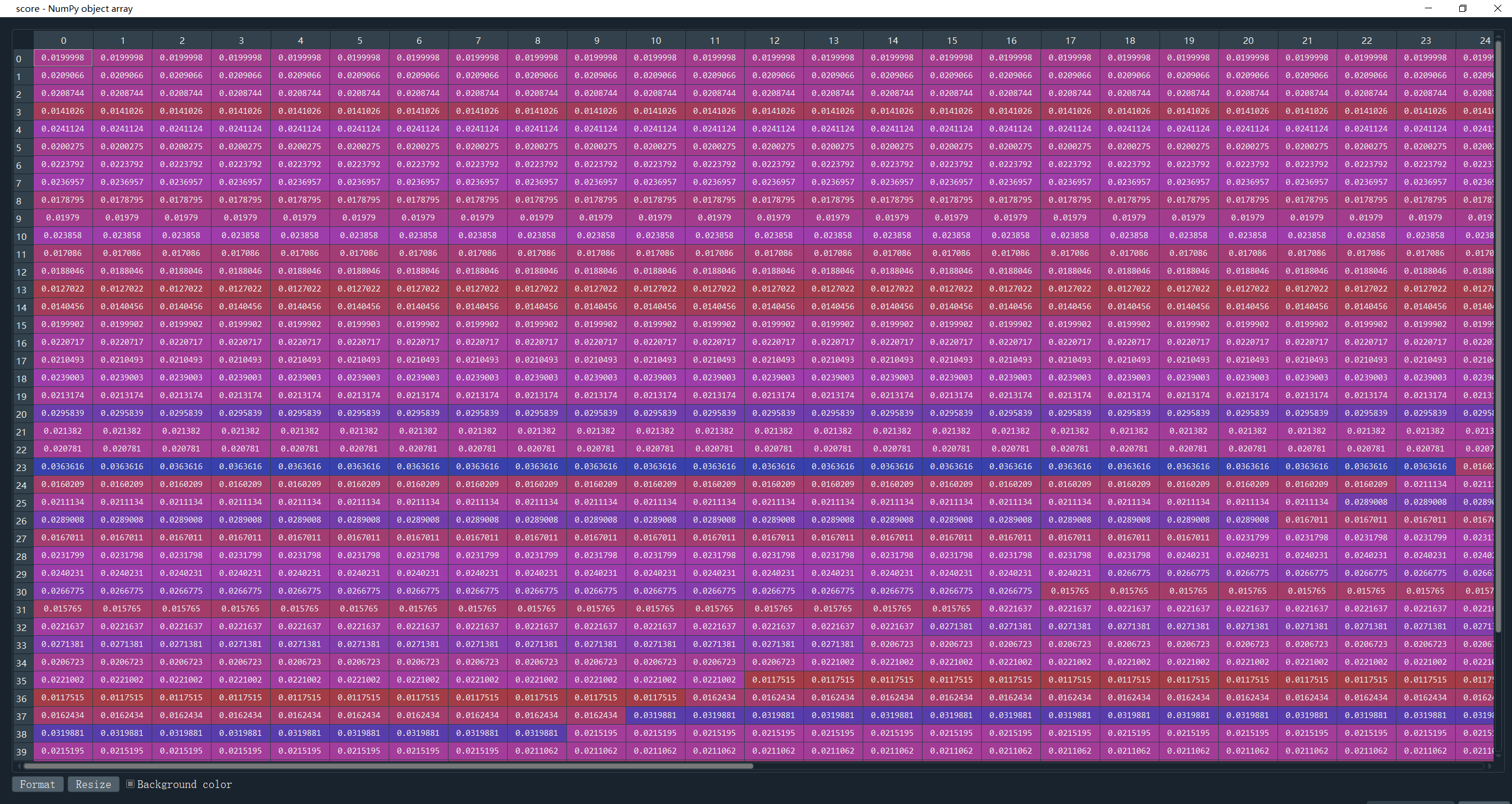

The output attention weights alpha from the above equation are shown below; they lead to the identical node feature embeddings.
