DataLoader with variable sequence-lengths as target for Data objects #4226

Asked by DanielFPerez in Q&A. Answered by rusty1s.


Answered by rusty1s on Mar 10, 2022

Replies: 1 comment 3 replies

-

|

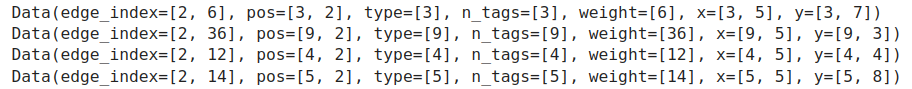

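Yeah, the standard approach to solve this (as utilized in NLP) is to pad the label dimension to a common length before the `torch.cat` call. You can implement that as a transform on your data, e.g.:

```python
import torch

def my_pad_transform(data):
    # `max_seq` (the longest target length in the dataset) must be defined beforehand.
    size = [data.y.size(0), max_seq - data.y.size(1)]
    data.y = torch.cat([data.y, data.y.new_zeros(size)], dim=-1)
    return data
```

Does that work for you?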
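A minimal sketch of the same padding idea on plain tensors rather than `Data` objects. The `pad_target` helper, the example targets, and the masked loss are assumptions for illustration only. It assumes each target has a single row of shape `[1, seq_len]`, and it shows one common way to keep the zero padding out of the loss by recording the original lengths.

```python
import torch

def pad_target(y, max_seq):
    """Right-pad a [rows, seq_len] target with zeros to [rows, max_seq]."""
    size = [y.size(0), max_seq - y.size(1)]
    return torch.cat([y, y.new_zeros(size)], dim=-1)

# Two hypothetical targets with different sequence lengths.
targets = [torch.tensor([[1., 2., 3.]]),
           torch.tensor([[4., 5., 6., 7., 8.]])]
lengths = torch.tensor([t.size(1) for t in targets])
max_seq = int(lengths.max())

# After padding, concatenation along dim 0 (as done when batching) succeeds.
batch_y = torch.cat([pad_target(t, max_seq) for t in targets], dim=0)

# Boolean mask: True on real entries, False on padded positions.
mask = torch.arange(max_seq).unsqueeze(0) < lengths.unsqueeze(1)

# Example loss that ignores padded positions (dummy all-zero predictions).
pred = torch.zeros_like(batch_y)
loss = ((pred - batch_y) ** 2)[mask].mean()
```

Without the mask, the padded zeros would be treated as real labels and would bias the loss for the shorter sequences.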


Answer selected by DanielFPerez

