Activation function in GCNConv layer. #4389

errhernandez asked this question in Q&A.


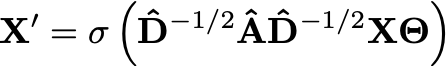

Just to check that I understand: in the Kipf and Welling paper (https://arxiv.org/abs/1609.02907) describing GCNConv, an activation function is applied, as shown here (the sigma). But the PyG implementation does not include the activation function. I presume this is because one can just apply it to the output of the GCNConv layer, right? Sorry if this is a stupid question. I'm a slow learner ;-)
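For reference, the layer-wise propagation rule from the paper (presumably the formula in the screenshot above) is given below. Here $\tilde{A} = A + I_N$ is the adjacency matrix with added self-loops, $\tilde{D}_{ii} = \sum_j \tilde{A}_{ij}$ is its degree matrix, $W^{(l)}$ is the trainable weight matrix, and $\sigma$ is the non-linearity. In PyG terms, `GCNConv` computes the expression inside $\sigma$ (plus a learnable bias by default), so $\sigma$ has to be applied separately.

$$
H^{(l+1)} = \sigma\left(\tilde{D}^{-\frac{1}{2}} \tilde{A} \tilde{D}^{-\frac{1}{2}} H^{(l)} W^{(l)}\right)
$$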


Answered by rusty1s on Apr 1, 2022.



Answer selected by errhernandez.


Yes, activation functions are not part of our `nn.conv` layers. There are two main reasons for this: … `Conv2d`, which also do not apply a non-linearity.