
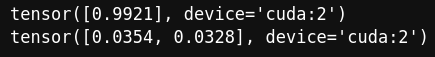

Hello! First of all, thanks for all the great work you're doing here :). I'm having a tricky issue (similar to #2665) where the results and embeddings produced for the same node differ drastically depending on the batch size.

I'm doing link prediction on a heterogeneous graph with the new `LinkNeighborLoader`. The graph is actually bipartite, with two node types (type A and B) and two edge types (plus the reverse edges), where each node type only interacts with the other type. The objective is simply to predict whether there is an edge between two nodes.

I don't think this is related to the issue, but there is somewhat of a temporal component: nodes of type B change across snapshots, and during inference I add a bunch of new edges with new nodes of type B by appending them to the original graph and remapping the IDs, something similar to:

I then want to perform link prediction for each of these new nodes on the other edge type. Let's assume the following scenario:

With this code we get these results:

The output for edge 717607 -> 18003125 changes from 0.99 to 0.03. The sampled subgraph is identical in both scenarios, since there are fewer connections than the number of specified neighbors. Furthermore, both edges in `l2` have exactly the same features and subgraphs, yet they get different results.

Using a `negative_sampling_ratio` > 0 also changes the results: depending on which negative edge is sampled, the score for the positive edge fluctuates all over the place. The output embeddings of the very first conv layers already differ, so the issue seems to lie outside the model.

Any ideas as to what might be happening?
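One way to turn this symptom into a small, self-contained reproduction is to score a fixed list of edges several times with different batch sizes and flag every edge whose score changes. The sketch below is plain Python and does not use PyG; `score_in_batches`, `batch_size_mismatches` and the two toy scorers are hypothetical stand-ins for a model's inference loop. `batch_normalized_scorer` only illustrates one generic way batch dependence can arise (an output that depends on statistics of the whole batch); it is not a claim about what causes the behavior described above.

```python
from typing import Callable, List, Sequence, Tuple

Edge = Tuple[int, int]
# Hypothetical scorer interface: a batch of (src, dst) edges in, one score per edge out.
Scorer = Callable[[Sequence[Edge]], List[float]]


def score_in_batches(scorer: Scorer, edges: Sequence[Edge], batch_size: int) -> List[float]:
    """Score every edge, feeding the scorer `batch_size` edges at a time."""
    scores: List[float] = []
    for start in range(0, len(edges), batch_size):
        scores.extend(scorer(edges[start:start + batch_size]))
    return scores


def batch_size_mismatches(scorer: Scorer, edges: Sequence[Edge],
                          batch_sizes: Sequence[int], tol: float = 1e-6):
    """Return (edge, per-run scores) for every edge whose score depends on batch size."""
    runs = [score_in_batches(scorer, edges, bs) for bs in batch_sizes]
    mismatches = []
    for i, edge in enumerate(edges):
        per_run = [run[i] for run in runs]
        if max(per_run) - min(per_run) > tol:
            mismatches.append((edge, per_run))
    return mismatches


def per_edge_scorer(batch: Sequence[Edge]) -> List[float]:
    # Toy scorer: depends only on the edge itself, so it is batch-size invariant.
    return [((src * 31 + dst) % 100) / 100 for src, dst in batch]


def batch_normalized_scorer(batch: Sequence[Edge]) -> List[float]:
    # Toy scorer: subtracts the batch mean, so each edge's score
    # depends on which other edges share its batch.
    raw = per_edge_scorer(batch)
    mean = sum(raw) / len(raw)
    return [r - mean for r in raw]


edges = [(717607, 18003125), (1, 2), (3, 4)]
print(batch_size_mismatches(per_edge_scorer, edges, [1, 2, 3]))  # []
print(len(batch_size_mismatches(batch_normalized_scorer, edges, [1, 3])))  # 3
```

Swapping a real inference loop in for the toy scorers (with the model in eval mode and sampling seeded) would give a compact script that either passes or lists exactly which edges change, which is the kind of minimal example that makes such a bug easier to track down.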



Hey @aaran2, thanks for the question. Do you mind providing a small example with your model and a dataset to reproduce this? Unfortunately, I couldn't reproduce the bug with a very simple model.
