Point cloud classification/regression with 400-400K points per input #4834

Replies: 4 comments 26 replies

I would recommend starting small and then improving gradually. Importantly, I think you should pre-process your dataset to have a uniform size, e.g., by slicing big point clouds into smaller chunks and saving them as individual examples. You can then start by applying
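One way to read the pre-processing advice above is sketched below with plain NumPy. It assumes that "slicing" means a random partition of each cloud into equally sized chunks (spatial tiling would be another valid reading), and that each chunk would presumably inherit the label of its parent cloud. The function name `split_into_chunks` and the chunk size of 1024 are hypothetical choices for illustration, not something taken from PyG.

```python
import numpy as np


def split_into_chunks(points, chunk_size, rng=None):
    """Randomly partition an (N, F) point cloud into chunks of exactly chunk_size points."""
    rng = np.random.default_rng() if rng is None else rng
    n = points.shape[0]
    order = rng.permutation(n)
    # Pad the shuffled index list by resampling existing points so that its
    # length becomes a multiple of chunk_size (this also covers clouds that
    # are smaller than a single chunk).
    remainder = (-n) % chunk_size
    if remainder:
        extra = rng.choice(n, size=remainder, replace=True)
        order = np.concatenate([order, extra])
    return [points[order[i:i + chunk_size]] for i in range(0, len(order), chunk_size)]


# Illustrative data: columns are X, Y, Z, ReturnIntensity.
rng = np.random.default_rng(0)
big_cloud = rng.normal(size=(2500, 4))
small_cloud = rng.normal(size=(400, 4))

big_chunks = split_into_chunks(big_cloud, 1024, rng)
small_chunks = split_into_chunks(small_cloud, 1024, rng)
```

Every chunk then has the same shape, so chunks can be saved as individual examples and batched without special handling for the 400-point and 400K-point extremes.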

Thanks. So if I min-max scale all 3 labels to 0-100 (with MinMaxScaler), will that suffice?
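For reference, the scaling being asked about could look roughly like the sketch below. It reimplements per-column min-max scaling in NumPy so that it stays self-contained; scikit-learn's `MinMaxScaler(feature_range=(0, 100))` performs the same per-column mapping. The helper names and the label values are made up for illustration.

```python
import numpy as np


def fit_min_max(labels):
    """Compute per-column minimum and range from an (n_samples, n_labels) array."""
    labels = np.asarray(labels, dtype=np.float64)
    col_min = labels.min(axis=0)
    col_range = labels.max(axis=0) - col_min
    col_range[col_range == 0] = 1.0  # guard against constant columns
    return col_min, col_range


def scale(labels, col_min, col_range, low=0.0, high=100.0):
    """Map each label column linearly onto [low, high]."""
    return (np.asarray(labels, dtype=np.float64) - col_min) / col_range * (high - low) + low


def unscale(scaled, col_min, col_range, low=0.0, high=100.0):
    """Invert scale() so that predictions can be reported in original units."""
    return (np.asarray(scaled, dtype=np.float64) - low) / (high - low) * col_range + col_min


# Hypothetical labels: three regression targets per point cloud.
train_labels = np.array([
    [12.0, 0.3, 150.0],
    [30.0, 0.9, 400.0],
    [21.0, 0.6, 275.0],
])

col_min, col_range = fit_min_max(train_labels)
scaled = scale(train_labels, col_min, col_range)
restored = unscale(scaled, col_min, col_range)
```

The statistics would normally be fitted on the training labels only and reused to invert the model's predictions at evaluation time.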

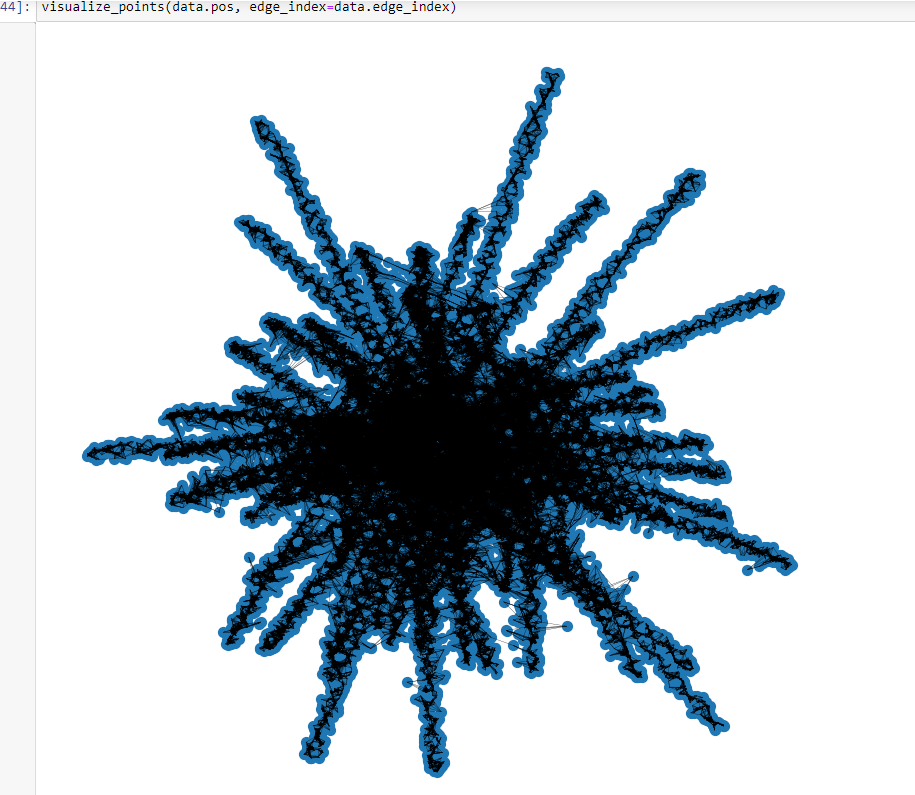

Hi @rusty1s, this is the top-down view of the tree point cloud, with KNNGraph used to create the edges. Do you think it's useful for learning tree parameters, or is it too complex and a terrible representation?

@davodogster Hi!

Hi, I successfully wrote the PyG dataloader to iteratively read in point clouds ranging from 400 points to 400,000 points (but most have >100K points!). I have X, Y, Z coords + ReturnIntensity. Number of point clouds = 1000-10000. I have multiple parameters (multi-task regression) to estimate per point cloud. I will start with just single-parameter regression. Which architecture/network would you recommend?

Any advice is much appreciated. Ideally it would be something that can learn both global and local features. The network needs to be quite computationally efficient and able to train on my 3090 GPU :)

I will probably just use the KNNGraph function to convert the PCDs into graphs (on the fly?).
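To make the graph-construction step concrete, here is a minimal brute-force sketch of what a k-nearest-neighbour graph over the XYZ coordinates looks like. It is a NumPy illustration, not PyG's `KNNGraph` implementation: the function name `knn_edges`, the value `k=8`, and the edge direction (neighbour to query point) are assumptions. Because it builds the full N x N distance matrix, it is only practical for small or pre-chunked clouds, not for inputs with 100K+ points.

```python
import numpy as np


def knn_edges(pos, k):
    """Brute-force k-nearest-neighbour edges for an (N, 3) coordinate array.

    Returns a (2, N * k) integer array: row 0 holds neighbour indices,
    row 1 holds the query point each neighbour belongs to.
    """
    n = pos.shape[0]
    # Pairwise squared distances, shape (N, N); memory grows as N^2.
    diff = pos[:, None, :] - pos[None, :, :]
    dist2 = np.einsum("ijk,ijk->ij", diff, diff)
    np.fill_diagonal(dist2, np.inf)  # exclude self-loops
    # Unordered indices of the k closest points per query point, shape (N, k).
    neighbors = np.argpartition(dist2, k - 1, axis=1)[:, :k]
    targets = np.repeat(np.arange(n), k)
    sources = neighbors.reshape(-1)
    return np.stack([sources, targets])


# Illustrative chunk of 512 points with random coordinates.
rng = np.random.default_rng(0)
pos = rng.uniform(size=(512, 3))
edge_index = knn_edges(pos, k=8)

# Tiny deterministic case: three points on a line, one neighbour each.
line = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [3.0, 0.0, 0.0]])
line_edges = knn_edges(line, k=1)
```

Computing the edges on the fly costs this work on every epoch, whereas precomputing them once per saved example trades disk space for loading speed.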

Thanks everyone!! Sam
