GCN-based Autoencoder not training as intended #6917

Unanswered

sivaramkrishnan101 asked this question in Q&A


A GCN cannot operate meaningfully on a fully connected graph: every node aggregates over the same neighborhood, so it produces identical node embeddings (that's exactly what you observe). Try replacing with
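To make the point above concrete, here is a minimal sketch in plain Python (no PyTorch Geometric, so it is not the actual `GCNConv` code) of the symmetric-normalized aggregation a GCN layer uses, with self-loops added. The function name `gcn_propagate` and the sample coordinates are made up for illustration. On a fully connected 3-node graph every coefficient equals 1/3, so every node ends up with the mean of all node features. A shared weight matrix and ReLU applied afterwards act row by row, so they cannot separate the rows again.

```python
import math

def gcn_propagate(features, edges):
    """Apply D^-1/2 (A + I) D^-1/2 to the node features (weights omitted)."""
    n = len(features)
    # Adjacency matrix with self-loops on the diagonal.
    adj = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for src, dst in edges:
        adj[dst][src] = 1.0
    deg = [sum(row) for row in adj]
    dim = len(features[0])
    out = []
    for i in range(n):
        row = [0.0] * dim
        for j in range(n):
            coeff = adj[i][j] / math.sqrt(deg[i] * deg[j])
            for k in range(dim):
                row[k] += coeff * features[j][k]
        out.append(row)
    return out

# Hypothetical (x, y) coordinates for one 3-node graph.
coords = [[0.0, 0.0], [1.0, 0.0], [0.0, 3.0]]

# Fully connected: every ordered pair of distinct nodes is an edge.
full_edges = [(i, j) for i in range(3) for j in range(3) if i != j]
full_out = gcn_propagate(coords, full_edges)

# Path graph 0 - 1 - 2 for comparison: neighborhoods differ per node.
path_edges = [(0, 1), (1, 0), (1, 2), (2, 1)]
path_out = gcn_propagate(coords, path_edges)

print("fully connected:", full_out)
print("path graph:     ", path_out)
```

On the fully connected graph all three output rows are the same vector, while the path graph keeps them distinct, which suggests the collapse comes from the graph structure rather than from the training setup.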


Hi everyone,

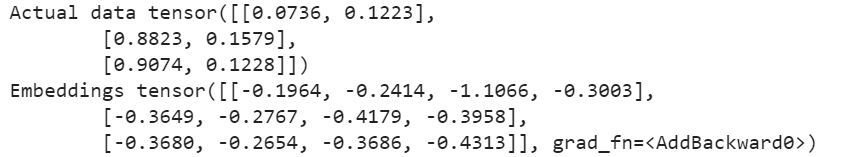

Dataset - I have a dataset of 10000 graphs. Each graph consists of 3 nodes, and each node has a feature vector holding its Euclidean (x, y) coordinates. The graphs are fully connected.
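For reference, one graph from such a dataset could be laid out roughly as follows. This is only a sketch: the helper name `fully_connected_edge_index` and the coordinates are hypothetical, and the edge list is written in the two-row COO layout (source row, target row) that PyTorch Geometric uses for `edge_index`, here as plain Python lists rather than tensors.

```python
from itertools import permutations

def fully_connected_edge_index(num_nodes):
    """Return [sources, targets] for all ordered pairs of distinct nodes."""
    pairs = list(permutations(range(num_nodes), 2))
    sources = [s for s, _ in pairs]
    targets = [t for _, t in pairs]
    return [sources, targets]

# Hypothetical (x, y) coordinates for the 3 nodes of one graph.
node_features = [[0.0, 0.0], [1.0, 0.0], [0.0, 3.0]]
edge_index = fully_connected_edge_index(len(node_features))

print(edge_index)
```

With 3 nodes this gives 6 directed edges and no self-loops.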

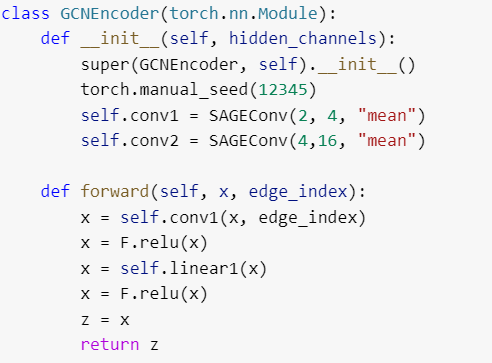

Problem statement - I want to train a simple graph autoencoder (GAE) and backpropagate a reconstruction loss, loss(node_features, predicted node_features). The proposed encoder consists of 2 GCN layers, each followed by a ReLU activation, very similar to the one in the examples.

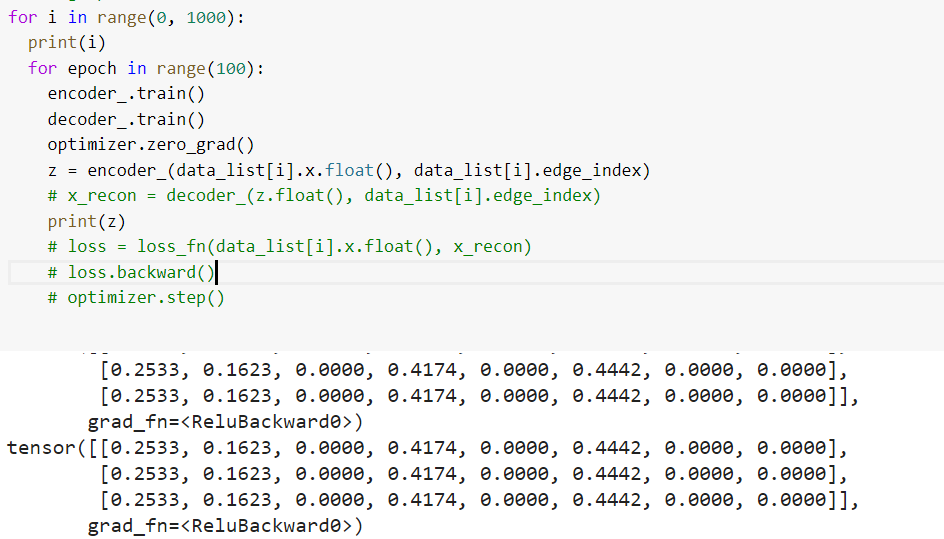

Issue - When I look at the output of the encoder, i.e. the node embeddings, every node has the same embedding vector. For example, see the image below, where all I have done is generate the embeddings with the GCN layer for a single graph.

Conclusion - I am not sure what is causing this problem. I implemented the same GAE architecture on the Citeseer dataset and the results seem to be okay. On my own dataset, however, training does not work well, and the results are better when I remove the GCN layers completely and keep only the FCN layers.

I would love to hear your feedback on this and on why this might be happening.

Thank you!
