|

115 | 115 | "name": "stderr", |

116 | 116 | "output_type": "stream", |

117 | 117 | "text": [ |

118 | | - "2023-07-13 16:32:44.194110: I tensorflow/core/platform/cpu_feature_guard.cc:182] This TensorFlow binary is optimized to use available CPU instructions in performance-critical operations.\n", |

| 118 | + "2023-07-14 16:36:31.417479: I tensorflow/core/platform/cpu_feature_guard.cc:182] This TensorFlow binary is optimized to use available CPU instructions in performance-critical operations.\n", |

119 | 119 | "To enable the following instructions: AVX2 FMA, in other operations, rebuild TensorFlow with the appropriate compiler flags.\n", |

120 | | - "2023-07-13 16:32:44.646582: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Could not find TensorRT\n", |

121 | | - "2023-07-13 16:32:45.301857: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:996] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355\n", |

122 | | - "2023-07-13 16:32:45.315705: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:996] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355\n", |

123 | | - "2023-07-13 16:32:45.315853: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:996] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355\n" |

| 120 | + "2023-07-14 16:36:31.847184: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Could not find TensorRT\n", |

| 121 | + "2023-07-14 16:36:32.502173: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:996] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355\n", |

| 122 | + "2023-07-14 16:36:32.515143: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:996] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355\n", |

| 123 | + "2023-07-14 16:36:32.515309: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:996] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355\n" |

124 | 124 | ] |

125 | 125 | } |

126 | 126 | ], |

|

182 | 182 | "name": "stderr", |

183 | 183 | "output_type": "stream", |

184 | 184 | "text": [ |

185 | | - "2023-07-13 16:32:56.568097: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:996] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355\n", |

186 | | - "2023-07-13 16:32:56.568260: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:996] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355\n", |

187 | | - "2023-07-13 16:32:56.568369: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:996] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355\n", |

188 | | - "2023-07-13 16:32:56.941768: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:996] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355\n", |

189 | | - "2023-07-13 16:32:56.941932: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:996] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355\n", |

190 | | - "2023-07-13 16:32:56.942048: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:996] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355\n", |

191 | | - "2023-07-13 16:32:56.942142: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1635] Created device /job:localhost/replica:0/task:0/device:GPU:0 with 17652 MB memory: -> device: 0, name: NVIDIA GeForce RTX 3090, pci bus id: 0000:2b:00.0, compute capability: 8.6\n" |

| 185 | + "2023-07-14 16:36:32.683864: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:996] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355\n", |

| 186 | + "2023-07-14 16:36:32.684043: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:996] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355\n", |

| 187 | + "2023-07-14 16:36:32.684149: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:996] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355\n", |

| 188 | + "2023-07-14 16:36:33.059900: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:996] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355\n", |

| 189 | + "2023-07-14 16:36:33.060059: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:996] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355\n", |

| 190 | + "2023-07-14 16:36:33.060173: I tensorflow/compiler/xla/stream_executor/cuda/cuda_gpu_executor.cc:996] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355\n", |

| 191 | + "2023-07-14 16:36:33.060265: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1635] Created device /job:localhost/replica:0/task:0/device:GPU:0 with 17421 MB memory: -> device: 0, name: NVIDIA GeForce RTX 3090, pci bus id: 0000:2b:00.0, compute capability: 8.6\n" |

192 | 192 | ] |

193 | 193 | }, |

194 | 194 | { |

|

588 | 588 | "name": "stderr", |

589 | 589 | "output_type": "stream", |

590 | 590 | "text": [ |

591 | | - "2023-07-13 16:33:49.284195: I tensorflow/compiler/xla/stream_executor/cuda/cuda_blas.cc:637] TensorFloat-32 will be used for the matrix multiplication. This will only be logged once.\n", |

592 | | - "2023-07-13 16:33:49.391670: I tensorflow/compiler/xla/stream_executor/cuda/cuda_dnn.cc:424] Loaded cuDNN version 8600\n" |

| 591 | + "2023-07-14 16:36:34.267761: I tensorflow/compiler/xla/stream_executor/cuda/cuda_blas.cc:637] TensorFloat-32 will be used for the matrix multiplication. This will only be logged once.\n", |

| 592 | + "2023-07-14 16:36:34.365535: I tensorflow/compiler/xla/stream_executor/cuda/cuda_dnn.cc:424] Loaded cuDNN version 8600\n" |

593 | 593 | ] |

594 | 594 | } |

595 | 595 | ], |

|

645 | 645 | }, |

646 | 646 | { |

647 | 647 | "cell_type": "code", |

648 | | - "execution_count": 10, |

| 648 | + "execution_count": 9, |

649 | 649 | "metadata": {}, |

650 | 650 | "outputs": [], |

651 | 651 | "source": [ |

|

694 | 694 | }, |

695 | 695 | { |

696 | 696 | "cell_type": "code", |

697 | | - "execution_count": null, |

| 697 | + "execution_count": 10, |

698 | 698 | "metadata": {}, |

699 | | - "outputs": [], |

| 699 | + "outputs": [ |

| 700 | + { |

| 701 | + "name": "stdout", |

| 702 | + "output_type": "stream", |

| 703 | + "text": [ |

| 704 | + "encoder_embeddings shape (1, 100, 512)\n" |

| 705 | + ] |

| 706 | + }, |

| 707 | + { |

| 708 | + "ename": "TypeError", |

| 709 | + "evalue": "GlobalSelfAttention.call() takes 2 positional arguments but 3 were given", |

| 710 | + "output_type": "error", |

| 711 | + "traceback": [ |

| 712 | + "\u001b[0;31m---------------------------------------------------------------------------\u001b[0m", |

| 713 | + "\u001b[0;31mTypeError\u001b[0m Traceback (most recent call last)", |

| 714 | + "Cell \u001b[0;32mIn[10], line 13\u001b[0m\n\u001b[1;32m 10\u001b[0m \u001b[39mprint\u001b[39m(\u001b[39m\"\u001b[39m\u001b[39mencoder_embeddings shape\u001b[39m\u001b[39m\"\u001b[39m, encoder_embeddings\u001b[39m.\u001b[39mshape)\n\u001b[1;32m 12\u001b[0m cross_attention_layer \u001b[39m=\u001b[39m GlobalSelfAttention(num_heads\u001b[39m=\u001b[39m\u001b[39m2\u001b[39m, key_dim\u001b[39m=\u001b[39m\u001b[39m512\u001b[39m)\n\u001b[0;32m---> 13\u001b[0m cross_attention_output \u001b[39m=\u001b[39m cross_attention_layer(decoder_embeddings, encoder_embeddings)\n\u001b[1;32m 15\u001b[0m \u001b[39mprint\u001b[39m(\u001b[39m\"\u001b[39m\u001b[39mglobal_self_attention_output shape\u001b[39m\u001b[39m\"\u001b[39m, cross_attention_output\u001b[39m.\u001b[39mshape)\n", |

| 715 | + "File \u001b[0;32m~/Personal/mltu/venv/lib/python3.10/site-packages/keras/utils/traceback_utils.py:70\u001b[0m, in \u001b[0;36mfilter_traceback.<locals>.error_handler\u001b[0;34m(*args, **kwargs)\u001b[0m\n\u001b[1;32m 67\u001b[0m filtered_tb \u001b[39m=\u001b[39m _process_traceback_frames(e\u001b[39m.\u001b[39m__traceback__)\n\u001b[1;32m 68\u001b[0m \u001b[39m# To get the full stack trace, call:\u001b[39;00m\n\u001b[1;32m 69\u001b[0m \u001b[39m# `tf.debugging.disable_traceback_filtering()`\u001b[39;00m\n\u001b[0;32m---> 70\u001b[0m \u001b[39mraise\u001b[39;00m e\u001b[39m.\u001b[39mwith_traceback(filtered_tb) \u001b[39mfrom\u001b[39;00m \u001b[39mNone\u001b[39;00m\n\u001b[1;32m 71\u001b[0m \u001b[39mfinally\u001b[39;00m:\n\u001b[1;32m 72\u001b[0m \u001b[39mdel\u001b[39;00m filtered_tb\n", |

| 716 | + "File \u001b[0;32m~/Personal/mltu/venv/lib/python3.10/site-packages/keras/utils/traceback_utils.py:96\u001b[0m, in \u001b[0;36minject_argument_info_in_traceback.<locals>.error_handler\u001b[0;34m(*args, **kwargs)\u001b[0m\n\u001b[1;32m 94\u001b[0m bound_signature \u001b[39m=\u001b[39m \u001b[39mNone\u001b[39;00m\n\u001b[1;32m 95\u001b[0m \u001b[39mtry\u001b[39;00m:\n\u001b[0;32m---> 96\u001b[0m \u001b[39mreturn\u001b[39;00m fn(\u001b[39m*\u001b[39margs, \u001b[39m*\u001b[39m\u001b[39m*\u001b[39mkwargs)\n\u001b[1;32m 97\u001b[0m \u001b[39mexcept\u001b[39;00m \u001b[39mException\u001b[39;00m \u001b[39mas\u001b[39;00m e:\n\u001b[1;32m 98\u001b[0m \u001b[39mif\u001b[39;00m \u001b[39mhasattr\u001b[39m(e, \u001b[39m\"\u001b[39m\u001b[39m_keras_call_info_injected\u001b[39m\u001b[39m\"\u001b[39m):\n\u001b[1;32m 99\u001b[0m \u001b[39m# Only inject info for the innermost failing call\u001b[39;00m\n", |

| 717 | + "\u001b[0;31mTypeError\u001b[0m: GlobalSelfAttention.call() takes 2 positional arguments but 3 were given" |

| 718 | + ] |

| 719 | + } |

| 720 | + ], |

700 | 721 | "source": [ |

701 | 722 | "encoder_vocab_size = 1000\n", |

702 | 723 | "d_model = 512\n", |

|

726 | 747 | "cell_type": "markdown", |

727 | 748 | "metadata": {}, |

728 | 749 | "source": [ |

729 | | - "### 3.5" |

| 750 | + "### 3.5 FeedForward layer\n", |

| 751 | + "\n", |

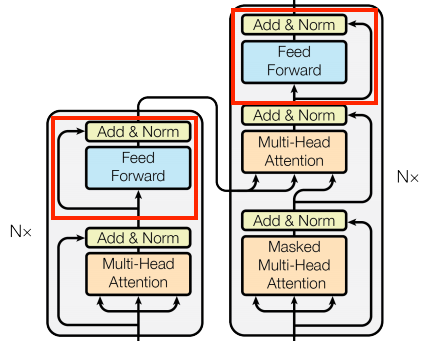

| 752 | + "Looking closer at the encoder and decoder layers, we can see that there is a `FeedForward` layer after each attention layer:\n", |

| 753 | + "\n", |

| 754 | + "\n", |

| 755 | + "\n", |

| 756 | + "The `FeedForward` layer consists of two dense layers that are applied to each position separately and identically. The `FeedForward` layer is primarily used to transform the representation of the input sequence into a form that is more suitable for the task at hand. This is achieved by applying a linear transformation, followed by a non-linear activation function. The output of the `FeedForward` layer has the same shape as the input, which is then added to the original input.\n", |

| 757 | + "\n", |

| 758 | + "Let's implement this layer:" |

| 759 | + ] |

| 760 | + }, |

| 761 | + { |

| 762 | + "cell_type": "code", |

| 763 | + "execution_count": 12, |

| 764 | + "metadata": {}, |

| 765 | + "outputs": [], |

| 766 | + "source": [ |

| 767 | + "class FeedForward(tf.keras.layers.Layer):\n", |

| 768 | + " \"\"\"\n", |

| 769 | + " A class that implements the feed-forward layer.\n", |

| 770 | + "\n", |

| 771 | + " Methods:\n", |

| 772 | + " call: Performs the forward pass of the layer.\n", |

| 773 | + "\n", |

| 774 | + " Attributes:\n", |

| 775 | + " seq (tf.keras.Sequential): The sequential layer that contains the feed-forward layers. It applies the two feed-forward layers and the dropout layer.\n", |

| 776 | + " add (tf.keras.layers.Add): The Add layer.\n", |

| 777 | + " layer_norm (tf.keras.layers.LayerNormalization): The LayerNormalization layer.\n", |

| 778 | + " \"\"\"\n", |

| 779 | + " def __init__(self, d_model: int, dff: int, dropout_rate: float=0.1):\n", |

| 780 | + " \"\"\"\n", |

| 781 | + " Constructor of the FeedForward layer.\n", |

| 782 | + "\n", |

| 783 | + " Args:\n", |

| 784 | + " d_model (int): The dimensionality of the model.\n", |

| 785 | + " dff (int): The dimensionality of the feed-forward layer.\n", |

| 786 | + " dropout_rate (float): The dropout rate.\n", |

| 787 | + " \"\"\"\n", |

| 788 | + " super().__init__()\n", |

| 789 | + " self.seq = tf.keras.Sequential([\n", |

| 790 | + " tf.keras.layers.Dense(dff, activation='relu'),\n", |

| 791 | + " tf.keras.layers.Dense(d_model),\n", |

| 792 | + " tf.keras.layers.Dropout(dropout_rate)\n", |

| 793 | + " ])\n", |

| 794 | + " self.add = tf.keras.layers.Add()\n", |

| 795 | + " self.layer_norm = tf.keras.layers.LayerNormalization()\n", |

| 796 | + "\n", |

| 797 | + " def call(self, x: tf.Tensor) -> tf.Tensor:\n", |

| 798 | + " \"\"\"\n", |

| 799 | + " The call function that performs the feed-forward operation. \n", |

| 800 | + "\n", |

| 801 | + " Args:\n", |

| 802 | + " x (tf.Tensor): The input sequence of shape (batch_size, seq_length, d_model).\n", |

| 803 | + "\n", |

| 804 | + " Returns:\n", |

| 805 | + " tf.Tensor: The output sequence of shape (batch_size, seq_length, d_model).\n", |

| 806 | + " \"\"\"\n", |

| 807 | + " x = self.add([x, self.seq(x)])\n", |

| 808 | + " x = self.layer_norm(x) \n", |

| 809 | + " return x" |

730 | 810 | ] |

731 | 811 | }, |

732 | 812 | { |

733 | 813 | "attachments": {}, |

734 | 814 | "cell_type": "markdown", |

735 | 815 | "metadata": {}, |

736 | | - "source": [] |

| 816 | + "source": [ |

| 817 | + "Let's test the FeedForward layer. We will use the same random input as before. The output shape should be the same as the input shape." |

| 818 | + ] |

| 819 | + }, |

| 820 | + { |

| 821 | + "cell_type": "code", |

| 822 | + "execution_count": 13, |

| 823 | + "metadata": {}, |

| 824 | + "outputs": [ |

| 825 | + { |

| 826 | + "name": "stdout", |

| 827 | + "output_type": "stream", |

| 828 | + "text": [ |

| 829 | + "encoder_embeddings shape (1, 100, 512)\n", |

| 830 | + "feed_forward_output shape (1, 100, 512)\n" |

| 831 | + ] |

| 832 | + } |

| 833 | + ], |

| 834 | + "source": [ |

| 835 | + "encoder_vocab_size = 1000\n", |

| 836 | + "d_model = 512\n", |

| 837 | + "\n", |

| 838 | + "encoder_embedding_layer = PositionalEmbedding(vocab_size, d_model)\n", |

| 839 | + "\n", |

| 840 | + "random_encoder_input = np.random.randint(0, encoder_vocab_size, size=(1, 100))\n", |

| 841 | + "\n", |

| 842 | + "encoder_embeddings = encoder_embedding_layer(random_encoder_input)\n", |

| 843 | + "\n", |

| 844 | + "print(\"encoder_embeddings shape\", encoder_embeddings.shape)\n", |

| 845 | + "\n", |

| 846 | + "feed_forward_layer = FeedForward(d_model, dff=2048)\n", |

| 847 | + "feed_forward_output = feed_forward_layer(encoder_embeddings)\n", |

| 848 | + "\n", |

| 849 | + "print(\"feed_forward_output shape\", feed_forward_output.shape)" |

| 850 | + ] |

| 851 | + }, |

| 852 | + { |

| 853 | + "attachments": {}, |

| 854 | + "cell_type": "markdown", |

| 855 | + "metadata": {}, |

| 856 | + "source": [ |

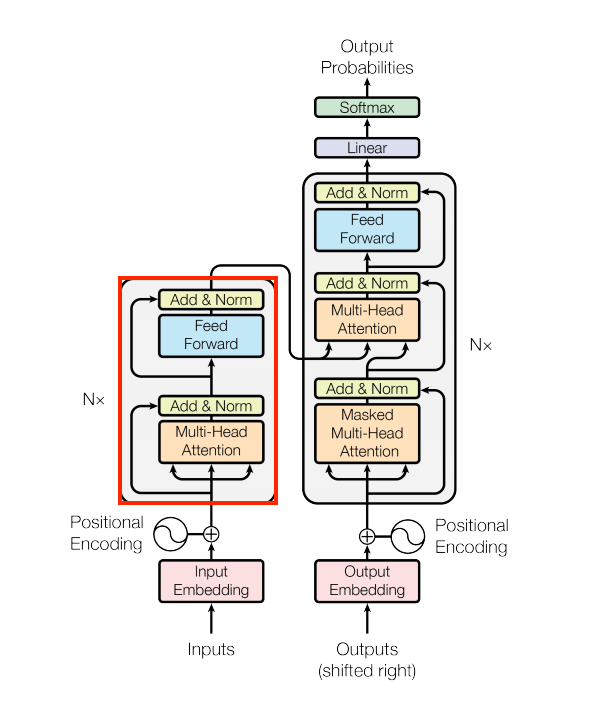

| 857 | + "## 4. Implementing Encoder and Decoder layers\n", |

| 858 | + "### 4.1. EncoderLayer layer\n", |

| 859 | + "\n", |

| 860 | + "Great, now we have all the layers we need to implement the Encoder and Decoder layers. Let's start with the EncoderLayer layer. Why it called `EncoderLayer`? Because it is a single layer of the Encoder. The Encoder is composed of multiple EncoderLayers. The same goes for the Decoder:\n", |

| 861 | + "\n", |

| 862 | + "\n", |

| 863 | + "\n", |

| 864 | + "The EncoderLayer consists of two sublayers: a `MultiHeadAttention` layer, more specifically `GlobalSelfAttention` layer and a `FeedForward` layer. Each of these sublayers has a residual connection around it, followed by a layer normalization. Residual connections help in avoiding the vanishing gradient problem in deep networks. Let's implement this layer:" |

| 865 | + ] |

| 866 | + }, |

| 867 | + { |

| 868 | + "cell_type": "code", |

| 869 | + "execution_count": null, |

| 870 | + "metadata": {}, |

| 871 | + "outputs": [], |

| 872 | + "source": [ |

| 873 | + "class EncoderLayer(tf.keras.layers.Layer):\n", |

| 874 | + " \"\"\"\n", |

| 875 | + " A single layer of the Encoder. Usually there are multiple layers stacked on top of each other.\n", |

| 876 | + "\n", |

| 877 | + " Methods:\n", |

| 878 | + " call: Performs the forward pass of the layer.\n", |

| 879 | + "\n", |

| 880 | + " Attributes:\n", |

| 881 | + " self_attention (GlobalSelfAttention): The global self-attention layer.\n", |

| 882 | + " ffn (FeedForward): The feed-forward layer.\n", |

| 883 | + " \"\"\"\n", |

| 884 | + " def __init__(self, d_model: int, num_heads: int, dff: int, dropout_rate: float=0.1):\n", |

| 885 | + " \"\"\"\n", |

| 886 | + " Constructor of the EncoderLayer.\n", |

| 887 | + "\n", |

| 888 | + " Args:\n", |

| 889 | + " d_model (int): The dimensionality of the model.\n", |

| 890 | + " num_heads (int): The number of heads in the multi-head attention layer.\n", |

| 891 | + " dff (int): The dimensionality of the feed-forward layer.\n", |

| 892 | + " dropout_rate (float): The dropout rate.\n", |

| 893 | + " \"\"\"\n", |

| 894 | + " super().__init__()\n", |

| 895 | + "\n", |

| 896 | + " self.self_attention = GlobalSelfAttention(\n", |

| 897 | + " num_heads=num_heads,\n", |

| 898 | + " key_dim=d_model,\n", |

| 899 | + " dropout=dropout_rate\n", |

| 900 | + " )\n", |

| 901 | + "\n", |

| 902 | + " self.ffn = FeedForward(d_model, dff)\n", |

| 903 | + "\n", |

| 904 | + " def call(self, x: tf.Tensor) -> tf.Tensor:\n", |

| 905 | + " \"\"\"\n", |

| 906 | + " The call function that performs the forward pass of the layer.\n", |

| 907 | + "\n", |

| 908 | + " Args:\n", |

| 909 | + " x (tf.Tensor): The input sequence of shape (batch_size, seq_length, d_model).\n", |

| 910 | + "\n", |

| 911 | + " Returns:\n", |

| 912 | + " tf.Tensor: The output sequence of shape (batch_size, seq_length, d_model).\n", |

| 913 | + " \"\"\"\n", |

| 914 | + " x = self.self_attention(x)\n", |

| 915 | + " x = self.ffn(x)\n", |

| 916 | + " return x" |

| 917 | + ] |

737 | 918 | }, |

738 | 919 | { |

739 | 920 | "attachments": {}, |

|

0 commit comments