+

+


+│ ├── tensor - Tensor maker and TensorPtr, details in this documentation. For how to use TensorPtr and Module, please refer to the "Using ExecuTorch with C++" doc.

│ ├── testing_util - Helpers for writing C++ tests.

│ ├── threadpool - Threadpool.

│ └── training - Experimental libraries for on-device training.

@@ -85,7 +85,7 @@ executorch

├── runtime - Core C++ runtime. These components are used to execute the ExecuTorch program. Please refer to the runtime documentation for more information.

│ ├── backend - Backend delegate runtime APIs.

│ ├── core - Core structures used across all levels of the runtime. Basic components such as Tensor, EValue, Error and Result etc.

-│ ├── executor - Model loading, initialization, and execution. Runtime components that execute the ExecuTorch program, such as Program, Method. Refer to the runtime API documentation for more information.

+│ ├── executor - Model loading, initialization, and execution. Runtime components that execute the ExecuTorch program, such as Program, Method. Refer to the runtime API documentation for more information.

│ ├── kernel - Kernel registration and management.

│ └── platform - Layer between architecture specific code and portable C++.

├── schema - ExecuTorch PTE file format flatbuffer schemas.

@@ -102,7 +102,7 @@ executorch

## Contributing workflow

We actively welcome your pull requests (PRs).

-If you're completely new to open-source projects, GitHub, or ExecuTorch, please see our [New Contributor Guide](./docs/source/new-contributor-guide.md) for a step-by-step walkthrough on making your first contribution. Otherwise, read on.

+If you're completely new to open-source projects, GitHub, or ExecuTorch, please see our [New Contributor Guide](docs/source/new-contributor-guide.md) for a step-by-step walkthrough on making your first contribution. Otherwise, read on.

1. [Claim an issue](#claiming-issues), if present, before starting work. If an

issue doesn't cover the work you plan to do, consider creating one to provide

@@ -245,7 +245,7 @@ modifications to the Google C++ style guide.

### C++ Portability Guidelines

-See also [Portable C++ Programming](/docs/source/portable-cpp-programming.md)

+See also [Portable C++ Programming](docs/source/portable-cpp-programming.md)

for detailed advice.

#### C++ language version

@@ -417,9 +417,9 @@ for basics.

## For Backend Delegate Authors

-- Use [this](/docs/source/backend-delegates-integration.md) guide when

+- Use [this](docs/source/backend-delegates-integration.md) guide when

integrating your delegate with ExecuTorch.

-- Refer to [this](/docs/source/backend-delegates-dependencies.md) set of

+- Refer to [this](docs/source/backend-delegates-dependencies.md) set of

guidelines when including a third-party depenency for your delegate.

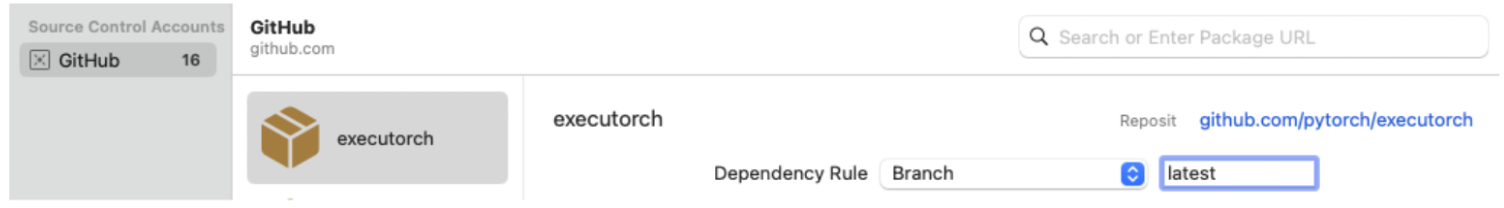

diff --git a/Package.swift b/Package.swift

index 1322b918c07..b8a8b7d064b 100644

--- a/Package.swift

+++ b/Package.swift

@@ -15,7 +15,7 @@

//

// For details on building frameworks locally or using prebuilt binaries,

// see the documentation:

-// https://pytorch.org/executorch/main/using-executorch-ios.html

+// https://pytorch.org/executorch/main/using-executorch-ios

import PackageDescription

diff --git a/README-wheel.md b/README-wheel.md

index 9f074ab5ee3..12752bcabfa 100644

--- a/README-wheel.md

+++ b/README-wheel.md

@@ -10,32 +10,21 @@ The `executorch` pip package is in beta.

The prebuilt `executorch.runtime` module included in this package provides a way

to run ExecuTorch `.pte` files, with some restrictions:

-* Only [core ATen

- operators](https://pytorch.org/executorch/stable/ir-ops-set-definition.html)

- are linked into the prebuilt module

-* Only the [XNNPACK backend

- delegate](https://pytorch.org/executorch/main/native-delegates-executorch-xnnpack-delegate.html)

- is linked into the prebuilt module.

-* \[macOS only] [Core ML](https://pytorch.org/executorch/main/build-run-coreml.html)

- and [MPS](https://pytorch.org/executorch/main/build-run-mps.html) backend

- delegates are also linked into the prebuilt module.

+* Only [core ATen operators](docs/source/ir-ops-set-definition.md) are linked into the prebuilt module

+* Only the [XNNPACK backend delegate](docs/source/backends-xnnpack.md) is linked into the prebuilt module.

+* \[macOS only] [Core ML](docs/source/backends-coreml.md) and [MPS](docs/source/backends-mps.md) backend

+ delegates are also linked into the prebuilt module.

-Please visit the [ExecuTorch website](https://pytorch.org/executorch/) for

+Please visit the [ExecuTorch website](https://pytorch.org/executorch) for

tutorials and documentation. Here are some starting points:

-* [Getting

- Started](https://pytorch.org/executorch/stable/getting-started-setup.html)

+* [Getting Started](https://pytorch.org/executorch/main/getting-started-setup)

* Set up the ExecuTorch environment and run PyTorch models locally.

-* [Working with

- local LLMs](https://pytorch.org/executorch/stable/llm/getting-started.html)

+* [Working with local LLMs](docs/source/llm/getting-started.md)

* Learn how to use ExecuTorch to export and accelerate a large-language model

from scratch.

-* [Exporting to

- ExecuTorch](https://pytorch.org/executorch/main/tutorials/export-to-executorch-tutorial.html)

+* [Exporting to ExecuTorch](https://pytorch.org/executorch/main/tutorials/export-to-executorch-tutorial)

* Learn the fundamentals of exporting a PyTorch `nn.Module` to ExecuTorch, and

optimizing its performance using quantization and hardware delegation.

-* Running LLaMA on

- [iOS](https://pytorch.org/executorch/stable/llm/llama-demo-ios.html) and

- [Android](https://pytorch.org/executorch/stable/llm/llama-demo-android.html)

- devices.

+* Running LLaMA on [iOS](docs/source/llm/llama-demo-ios.md) and [Android](docs/source/llm/llama-demo-android.md) devices.

* Build and run LLaMA in a demo mobile app, and learn how to integrate models

with your own apps.
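In this hunk several absolute URLs are replaced with repository-relative paths under `docs/source/`. GitHub resolves such links to files in the repository, so each path presumably needs the target file's `.md` extension, as most of the new links here include. The following is a minimal sketch of a check for that, assuming inline `[text](target)` links and a `docs/source/` prefix; the helper name `relative_links_missing_md` is hypothetical and not part of ExecuTorch.

```python
import re
from pathlib import PurePosixPath
from typing import List

# Inline Markdown links of the form [text](target); reference-style links are ignored.
_MD_LINK = re.compile(r"\[[^\]]*\]\(([^)\s]+)\)")


def relative_links_missing_md(markdown: str, prefix: str = "docs/source/") -> List[str]:
    """Return link targets under `prefix` whose file path lacks a .md suffix."""
    missing = []
    for target in _MD_LINK.findall(markdown):
        path = target.split("#", 1)[0]  # ignore any #anchor when checking the suffix
        if path.startswith(prefix) and PurePosixPath(path).suffix != ".md":
            missing.append(target)
    return missing
```

Running it over the new `README-wheel.md` text and getting an empty list back is a cheap sanity check before merging; absolute `https://` links are skipped because they do not start with the prefix.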

diff --git a/README.md b/README.md

index 025a8780739..c0d594e7733 100644

--- a/README.md

+++ b/README.md

@@ -1,5 +1,5 @@

+

+

-

-  +

+

diff --git a/examples/devtools/README.md b/examples/devtools/README.md

index e4fbadfcca0..0b516ad629e 100644

--- a/examples/devtools/README.md

+++ b/examples/devtools/README.md

@@ -17,7 +17,7 @@ examples/devtools

We will use an example model (in `torch.nn.Module`) and its representative inputs, both from [`models/`](../models) directory, to generate a [BundledProgram(`.bpte`)](../../docs/source/bundled-io.md) file using the [script](scripts/export_bundled_program.py). Then we will use [devtools/example_runner](example_runner/example_runner.cpp) to execute the `.bpte` model on the ExecuTorch runtime and verify the model on BundledProgram API.

-1. Sets up the basic development environment for ExecuTorch by [Setting up ExecuTorch from GitHub](https://pytorch.org/executorch/stable/getting-started-setup).

+1. Set up the basic development environment for ExecuTorch by [Setting up ExecuTorch from GitHub](https://pytorch.org/executorch/main/getting-started-setup).

2. Using the [script](scripts/export_bundled_program.py) to generate a BundledProgram binary file by retreiving a `torch.nn.Module` model and its representative inputs from the list of available models in the [`models/`](../models) dir.

diff --git a/examples/models/llama/UTILS.md b/examples/models/llama/UTILS.md

index dd014240ace..2d4d4dfd788 100644

--- a/examples/models/llama/UTILS.md

+++ b/examples/models/llama/UTILS.md

@@ -25,7 +25,7 @@ From `executorch` root:

## Smaller model delegated to other backends

Currently we supported lowering the stories model to other backends, including, CoreML, MPS and QNN. Please refer to the instruction

-for each backend ([CoreML](https://pytorch.org/executorch/main/build-run-coreml.html), [MPS](https://pytorch.org/executorch/main/build-run-mps.html), [QNN](https://pytorch.org/executorch/main/build-run-qualcomm-ai-engine-direct-backend.html)) before trying to lower them. After the backend library is installed, the script to export a lowered model is

+for each backend ([CoreML](https://pytorch.org/executorch/main/backends-coreml), [MPS](https://pytorch.org/executorch/main/backends-mps), [QNN](https://pytorch.org/executorch/main/backends-qualcomm)) before trying to lower them. After the backend library is installed, the script to export a lowered model is

- Lower to CoreML: `python -m examples.models.llama.export_llama -kv --disable_dynamic_shape --coreml -c stories110M.pt -p params.json `

- MPS: `python -m examples.models.llama.export_llama -kv --disable_dynamic_shape --mps -c stories110M.pt -p params.json `

diff --git a/examples/models/llama/non_cpu_backends.md b/examples/models/llama/non_cpu_backends.md

index 1ee594ebd83..f414582a3c1 100644

--- a/examples/models/llama/non_cpu_backends.md

+++ b/examples/models/llama/non_cpu_backends.md

@@ -2,7 +2,7 @@

# Running Llama 3/3.1 8B on non-CPU backends

### QNN

-Please follow [the instructions](https://pytorch.org/executorch/stable/llm/build-run-llama3-qualcomm-ai-engine-direct-backend.html) to deploy Llama 3 8B to an Android smartphone with Qualcomm SoCs.

+Please follow [the instructions](https://pytorch.org/executorch/main/llm/build-run-llama3-qualcomm-ai-engine-direct-backend) to deploy Llama 3 8B to an Android smartphone with Qualcomm SoCs.

### MPS

Export:

@@ -10,7 +10,7 @@ Export:

python -m examples.models.llama2.export_llama --checkpoint llama3.pt --params params.json -kv --disable_dynamic_shape --mps --use_sdpa_with_kv_cache -d fp32 -qmode 8da4w -G 32 --embedding-quantize 4,32

```

-After exporting the MPS model .pte file, the [iOS LLAMA](https://pytorch.org/executorch/main/llm/llama-demo-ios.html) app can support running the model. ` --embedding-quantize 4,32` is an optional args for quantizing embedding to reduce the model size.

+After exporting the MPS model .pte file, the [iOS LLAMA](https://pytorch.org/executorch/main/llm/llama-demo-ios) app can run the model. `--embedding-quantize 4,32` is an optional argument that quantizes the embedding to reduce the model size.

### CoreML

Export:

diff --git a/examples/models/phi-3-mini-lora/README.md b/examples/models/phi-3-mini-lora/README.md

index 2b7cc0ba401..5bc48bd48f4 100644

--- a/examples/models/phi-3-mini-lora/README.md

+++ b/examples/models/phi-3-mini-lora/README.md

@@ -16,7 +16,7 @@ To see how you can use the model exported for training in a fully involved finet

python export_model.py

```

-2. Run the inference model using an example runtime. For more detailed steps on this, check out [Build & Run](https://pytorch.org/executorch/stable/getting-started-setup.html#build-run).

+2. Run the inference model using an example runtime. For more detailed steps on this, check out [Build & Run](https://pytorch.org/executorch/main/getting-started-setup#build-run).

```

# Clean and configure the CMake build system. Compiled programs will appear in the executorch/cmake-out directory we create here.

./install_executorch.sh --clean

diff --git a/examples/portable/README.md b/examples/portable/README.md

index a6658197da3..6bfc9aa7281 100644

--- a/examples/portable/README.md

+++ b/examples/portable/README.md

@@ -20,7 +20,7 @@ We will walk through an example model to generate a `.pte` file in [portable mod

from the [`models/`](../models) directory using scripts in the `portable/scripts` directory. Then we will run on the `.pte` model on the ExecuTorch runtime. For that we will use `executor_runner`.

-1. Following the setup guide in [Setting up ExecuTorch](https://pytorch.org/executorch/stable/getting-started-setup)

+1. Following the setup guide in [Setting up ExecuTorch](https://pytorch.org/executorch/main/getting-started-setup)

you should be able to get the basic development environment for ExecuTorch working.

2. Using the script `portable/scripts/export.py` generate a model binary file by selecting a

diff --git a/examples/portable/custom_ops/README.md b/examples/portable/custom_ops/README.md

index db517e84a0c..bf17d6a6753 100644

--- a/examples/portable/custom_ops/README.md

+++ b/examples/portable/custom_ops/README.md

@@ -3,7 +3,7 @@ This folder contains examples to register custom operators into PyTorch as well

## How to run

-Prerequisite: finish the [setting up wiki](https://pytorch.org/executorch/stable/getting-started-setup).

+Prerequisite: finish the [setting up wiki](https://pytorch.org/executorch/main/getting-started-setup).

Run:

diff --git a/examples/qualcomm/README.md b/examples/qualcomm/README.md

index bdac58d2bfc..1f7e2d1e476 100644

--- a/examples/qualcomm/README.md

+++ b/examples/qualcomm/README.md

@@ -22,7 +22,7 @@ Here are some general information and limitations.

## Prerequisite

-Please finish tutorial [Setting up executorch](https://pytorch.org/executorch/stable/getting-started-setup).

+Please finish tutorial [Setting up executorch](https://pytorch.org/executorch/main/getting-started-setup).

Please finish [setup QNN backend](../../docs/source/build-run-qualcomm-ai-engine-direct-backend.md).

diff --git a/examples/qualcomm/oss_scripts/llama/README.md b/examples/qualcomm/oss_scripts/llama/README.md

index 9b6ec9574eb..27abd5689a0 100644

--- a/examples/qualcomm/oss_scripts/llama/README.md

+++ b/examples/qualcomm/oss_scripts/llama/README.md

@@ -28,7 +28,7 @@ Hybrid Mode: Hybrid mode leverages the strengths of both AR-N model and KV cache

### Step 1: Setup

1. Follow the [tutorial](https://pytorch.org/executorch/main/getting-started-setup) to set up ExecuTorch.

-2. Follow the [tutorial](https://pytorch.org/executorch/stable/build-run-qualcomm-ai-engine-direct-backend.html) to build Qualcomm AI Engine Direct Backend.

+2. Follow the [tutorial](https://pytorch.org/executorch/main/build-run-qualcomm-ai-engine-direct-backend) to build Qualcomm AI Engine Direct Backend.

### Step 2: Prepare Model

diff --git a/examples/qualcomm/qaihub_scripts/llama/README.md b/examples/qualcomm/qaihub_scripts/llama/README.md

index 0fec6ea867f..4d010b5d474 100644

--- a/examples/qualcomm/qaihub_scripts/llama/README.md

+++ b/examples/qualcomm/qaihub_scripts/llama/README.md

@@ -12,7 +12,7 @@ Note that the pre-compiled context binaries could not be futher fine-tuned for o

### Instructions

#### Step 1: Setup

1. Follow the [tutorial](https://pytorch.org/executorch/main/getting-started-setup) to set up ExecuTorch.

-2. Follow the [tutorial](https://pytorch.org/executorch/stable/build-run-qualcomm-ai-engine-direct-backend.html) to build Qualcomm AI Engine Direct Backend.

+2. Follow the [tutorial](https://pytorch.org/executorch/main/build-run-qualcomm-ai-engine-direct-backend) to build Qualcomm AI Engine Direct Backend.

#### Step2: Prepare Model

1. Create account for https://aihub.qualcomm.com/

@@ -40,7 +40,7 @@ Note that the pre-compiled context binaries could not be futher fine-tuned for o

### Instructions

#### Step 1: Setup

1. Follow the [tutorial](https://pytorch.org/executorch/main/getting-started-setup) to set up ExecuTorch.

-2. Follow the [tutorial](https://pytorch.org/executorch/stable/build-run-qualcomm-ai-engine-direct-backend.html) to build Qualcomm AI Engine Direct Backend.

+2. Follow the [tutorial](https://pytorch.org/executorch/main/build-run-qualcomm-ai-engine-direct-backend) to build Qualcomm AI Engine Direct Backend.

#### Step2: Prepare Model

1. Create account for https://aihub.qualcomm.com/

diff --git a/examples/qualcomm/qaihub_scripts/stable_diffusion/README.md b/examples/qualcomm/qaihub_scripts/stable_diffusion/README.md

index b008d3135d4..998c97d78e3 100644

--- a/examples/qualcomm/qaihub_scripts/stable_diffusion/README.md

+++ b/examples/qualcomm/qaihub_scripts/stable_diffusion/README.md

@@ -11,7 +11,7 @@ The model architecture, scheduler, and time embedding are from the [stabilityai/

### Instructions

#### Step 1: Setup

1. Follow the [tutorial](https://pytorch.org/executorch/main/getting-started-setup) to set up ExecuTorch.

-2. Follow the [tutorial](https://pytorch.org/executorch/stable/build-run-qualcomm-ai-engine-direct-backend.html) to build Qualcomm AI Engine Direct Backend.

+2. Follow the [tutorial](https://pytorch.org/executorch/main/build-run-qualcomm-ai-engine-direct-backend) to build Qualcomm AI Engine Direct Backend.

#### Step2: Prepare Model

1. Download the context binaries for TextEncoder, UNet, and VAEDecoder under https://huggingface.co/qualcomm/Stable-Diffusion-v2.1/tree/main

diff --git a/examples/selective_build/README.md b/examples/selective_build/README.md

index 6c655e18a3d..97706d70c48 100644

--- a/examples/selective_build/README.md

+++ b/examples/selective_build/README.md

@@ -3,7 +3,7 @@ To optimize binary size of ExecuTorch runtime, selective build can be used. This

## How to run

-Prerequisite: finish the [setting up wiki](https://pytorch.org/executorch/stable/getting-started-setup).

+Prerequisite: finish the [setting up wiki](https://pytorch.org/executorch/main/getting-started-setup).

Run:

diff --git a/examples/xnnpack/README.md b/examples/xnnpack/README.md

index 179e47004a1..56deff928af 100644

--- a/examples/xnnpack/README.md

+++ b/examples/xnnpack/README.md

@@ -1,8 +1,8 @@

# XNNPACK Backend

[XNNPACK](https://github.com/google/XNNPACK) is a library of optimized neural network operators for ARM and x86 CPU platforms. Our delegate lowers models to run using these highly optimized CPU operators. You can try out lowering and running some example models in the demo. Please refer to the following docs for information on the XNNPACK Delegate

-- [XNNPACK Backend Delegate Overview](https://pytorch.org/executorch/stable/native-delegates-executorch-xnnpack-delegate.html)

-- [XNNPACK Delegate Export Tutorial](https://pytorch.org/executorch/stable/tutorial-xnnpack-delegate-lowering.html)

+- [XNNPACK Backend Delegate Overview](https://pytorch.org/executorch/main/native-delegates-executorch-xnnpack-delegate)

+- [XNNPACK Delegate Export Tutorial](https://pytorch.org/executorch/main/tutorial-xnnpack-delegate-lowering)

## Directory structure

@@ -60,7 +60,7 @@ Now finally you should be able to run this model with the following command

```

## Quantization

-First, learn more about the generic PyTorch 2 Export Quantization workflow in the [Quantization Flow Docs](https://pytorch.org/executorch/stable/quantization-overview.html), if you are not familiar already.

+First, learn more about the generic PyTorch 2 Export Quantization workflow in the [Quantization Flow Docs](https://pytorch.org/executorch/main/quantization-overview), if you are not familiar already.

Here we will discuss quantizing a model suitable for XNNPACK delegation using XNNPACKQuantizer.
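Most hunks in this diff apply the same mechanical change: a `https://pytorch.org/executorch/stable/<page>.html` URL becomes `https://pytorch.org/executorch/main/<page>`, keeping any `#anchor`. A minimal sketch of that rewrite as a regular expression is below; `migrate_docs_url` is a hypothetical helper, and it deliberately does not handle pages that were renamed (for example `build-run-coreml` becoming `backends-coreml`) or links switched to repository-relative paths, which need separate handling.

```python
import re

# Matches a versioned ExecuTorch docs URL, e.g.
#   https://pytorch.org/executorch/stable/getting-started-setup.html#build-run
# The page path only contains word characters, '-' and '/', so it stops before
# a trailing ".html", "#anchor", ")" or whitespace.
_DOCS_URL = re.compile(
    r"https://pytorch\.org/executorch/(?:stable|main)/"  # version segment
    r"(?P<page>[\w\-/]+)"                                 # page path, e.g. llm/getting-started
    r"(?:\.html)?"                                        # optional legacy .html suffix
    r"(?P<anchor>#[\w\-]+)?"                              # optional #anchor
)


def migrate_docs_url(text: str) -> str:
    """Rewrite every versioned docs URL in `text` to the main/ form without .html."""

    def _replace(match):
        anchor = match.group("anchor") or ""
        return f"https://pytorch.org/executorch/main/{match.group('page')}{anchor}"

    return _DOCS_URL.sub(_replace, text)
```

Because already-migrated `main/` URLs map to themselves and the bare site root (`https://pytorch.org/executorch/`) does not match, the function is safe to run repeatedly over the same files.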

diff --git a/exir/program/_program.py b/exir/program/_program.py

index ef857ffd011..da5ca06c927 100644

--- a/exir/program/_program.py

+++ b/exir/program/_program.py

@@ -1325,7 +1325,7 @@ def to_edge(

class EdgeProgramManager:

"""

Package of one or more `ExportedPrograms` in Edge dialect. Designed to simplify

- lowering to ExecuTorch. See: https://pytorch.org/executorch/stable/ir-exir.html

+ lowering to ExecuTorch. See: https://pytorch.org/executorch/main/ir-exir

Allows easy applications of transforms across a collection of exported programs

including the delegation of subgraphs.

@@ -1565,7 +1565,7 @@ def to_executorch(

class ExecutorchProgramManager:

"""

Package of one or more `ExportedPrograms` in Execution dialect. Designed to simplify

- lowering to ExecuTorch. See: https://pytorch.org/executorch/stable/ir-exir.html

+ lowering to ExecuTorch. See: https://pytorch.org/executorch/main/ir-exir

When the ExecutorchProgramManager is constructed the ExportedPrograms in execution dialect

are used to form the executorch binary (in a process called emission) and then serialized

diff --git a/extension/android/README.md b/extension/android/README.md

index 8972e615173..1274a18e447 100644

--- a/extension/android/README.md

+++ b/extension/android/README.md

@@ -28,7 +28,7 @@ export ANDROID_NDK=/path/to/ndk

sh scripts/build_android_library.sh

```

-Please see [Android building from source](https://pytorch.org/executorch/main/using-executorch-android.html#building-from-source) for details

+Please see [Android building from source](https://pytorch.org/executorch/main/using-executorch-android#building-from-source) for details

## Test

diff --git a/extension/pybindings/pybindings.pyi b/extension/pybindings/pybindings.pyi

index 64ea14f08ff..7aede1c29a9 100644

--- a/extension/pybindings/pybindings.pyi

+++ b/extension/pybindings/pybindings.pyi

@@ -161,7 +161,7 @@ def _load_for_executorch(

Args:

path: File path to the ExecuTorch program as a string.

enable_etdump: If true, enables an ETDump which can store profiling information.

- See documentation at https://pytorch.org/executorch/stable/etdump.html

+ See documentation at https://pytorch.org/executorch/main/etdump

for how to use it.

debug_buffer_size: If non-zero, enables a debug buffer which can store

intermediate results of each instruction in the ExecuTorch program.

@@ -192,7 +192,7 @@ def _load_for_executorch_from_bundled_program(

) -> ExecuTorchModule:

"""Same as _load_for_executorch, but takes a bundled program instead of a file path.

- See https://pytorch.org/executorch/stable/bundled-io.html for documentation.

+ See https://pytorch.org/executorch/main/bundled-io for documentation.

.. warning::

diff --git a/runtime/COMPATIBILITY.md b/runtime/COMPATIBILITY.md

index 7d9fd47c590..583dab172cc 100644

--- a/runtime/COMPATIBILITY.md

+++ b/runtime/COMPATIBILITY.md

@@ -1,7 +1,7 @@

# Runtime Compatibility Policy

This document describes the compatibility guarantees between the [PTE file

-format](https://pytorch.org/executorch/stable/pte-file-format.html) and the

+format](https://pytorch.org/executorch/main/pte-file-format) and the

ExecuTorch runtime.

> [!IMPORTANT]


```

## Quantization

-First, learn more about the generic PyTorch 2 Export Quantization workflow in the [Quantization Flow Docs](https://pytorch.org/executorch/stable/quantization-overview.html), if you are not familiar already.

+First, learn more about the generic PyTorch 2 Export Quantization workflow in the [Quantization Flow Docs](https://pytorch.org/executorch/main/quantization-overview), if you are not familiar already.

Here we will discuss quantizing a model suitable for XNNPACK delegation using XNNPACKQuantizer.

diff --git a/exir/program/_program.py b/exir/program/_program.py

index ef857ffd011..da5ca06c927 100644

--- a/exir/program/_program.py

+++ b/exir/program/_program.py

@@ -1325,7 +1325,7 @@ def to_edge(

class EdgeProgramManager:

"""

Package of one or more `ExportedPrograms` in Edge dialect. Designed to simplify

- lowering to ExecuTorch. See: https://pytorch.org/executorch/stable/ir-exir.html

+ lowering to ExecuTorch. See: https://pytorch.org/executorch/main/ir-exir

Allows easy applications of transforms across a collection of exported programs

including the delegation of subgraphs.

@@ -1565,7 +1565,7 @@ def to_executorch(

class ExecutorchProgramManager:

"""

Package of one or more `ExportedPrograms` in Execution dialect. Designed to simplify

- lowering to ExecuTorch. See: https://pytorch.org/executorch/stable/ir-exir.html

+ lowering to ExecuTorch. See: https://pytorch.org/executorch/main/ir-exir

When the ExecutorchProgramManager is constructed the ExportedPrograms in execution dialect

are used to form the executorch binary (in a process called emission) and then serialized

diff --git a/extension/android/README.md b/extension/android/README.md

index 8972e615173..1274a18e447 100644

--- a/extension/android/README.md

+++ b/extension/android/README.md

@@ -28,7 +28,7 @@ export ANDROID_NDK=/path/to/ndk

sh scripts/build_android_library.sh

```

-Please see [Android building from source](https://pytorch.org/executorch/main/using-executorch-android.html#building-from-source) for details

+Please see [Android building from source](https://pytorch.org/executorch/main/using-executorch-android#building-from-source) for details

## Test

diff --git a/extension/pybindings/pybindings.pyi b/extension/pybindings/pybindings.pyi

index 64ea14f08ff..7aede1c29a9 100644

--- a/extension/pybindings/pybindings.pyi

+++ b/extension/pybindings/pybindings.pyi

@@ -161,7 +161,7 @@ def _load_for_executorch(

Args:

path: File path to the ExecuTorch program as a string.

enable_etdump: If true, enables an ETDump which can store profiling information.

- See documentation at https://pytorch.org/executorch/stable/etdump.html

+ See documentation at https://pytorch.org/executorch/main/etdump

for how to use it.

debug_buffer_size: If non-zero, enables a debug buffer which can store

intermediate results of each instruction in the ExecuTorch program.

@@ -192,7 +192,7 @@ def _load_for_executorch_from_bundled_program(

) -> ExecuTorchModule:

"""Same as _load_for_executorch, but takes a bundled program instead of a file path.

- See https://pytorch.org/executorch/stable/bundled-io.html for documentation.

+ See https://pytorch.org/executorch/main/bundled-io for documentation.

.. warning::

diff --git a/runtime/COMPATIBILITY.md b/runtime/COMPATIBILITY.md

index 7d9fd47c590..583dab172cc 100644

--- a/runtime/COMPATIBILITY.md

+++ b/runtime/COMPATIBILITY.md

@@ -1,7 +1,7 @@

# Runtime Compatibility Policy

This document describes the compatibility guarantees between the [PTE file

-format](https://pytorch.org/executorch/stable/pte-file-format.html) and the

+format](https://pytorch.org/executorch/main/pte-file-format) and the

ExecuTorch runtime.

> [!IMPORTANT]