Replies: 11 comments 13 replies

-

That's unexpected (we fixed a similar issue in 3.9.8 where connection-related memory usage would be classified as Also, if you have the Grafana/Prometheus deployed, at least some screenshots from what's going on in the cluster would be helpful.

-

|

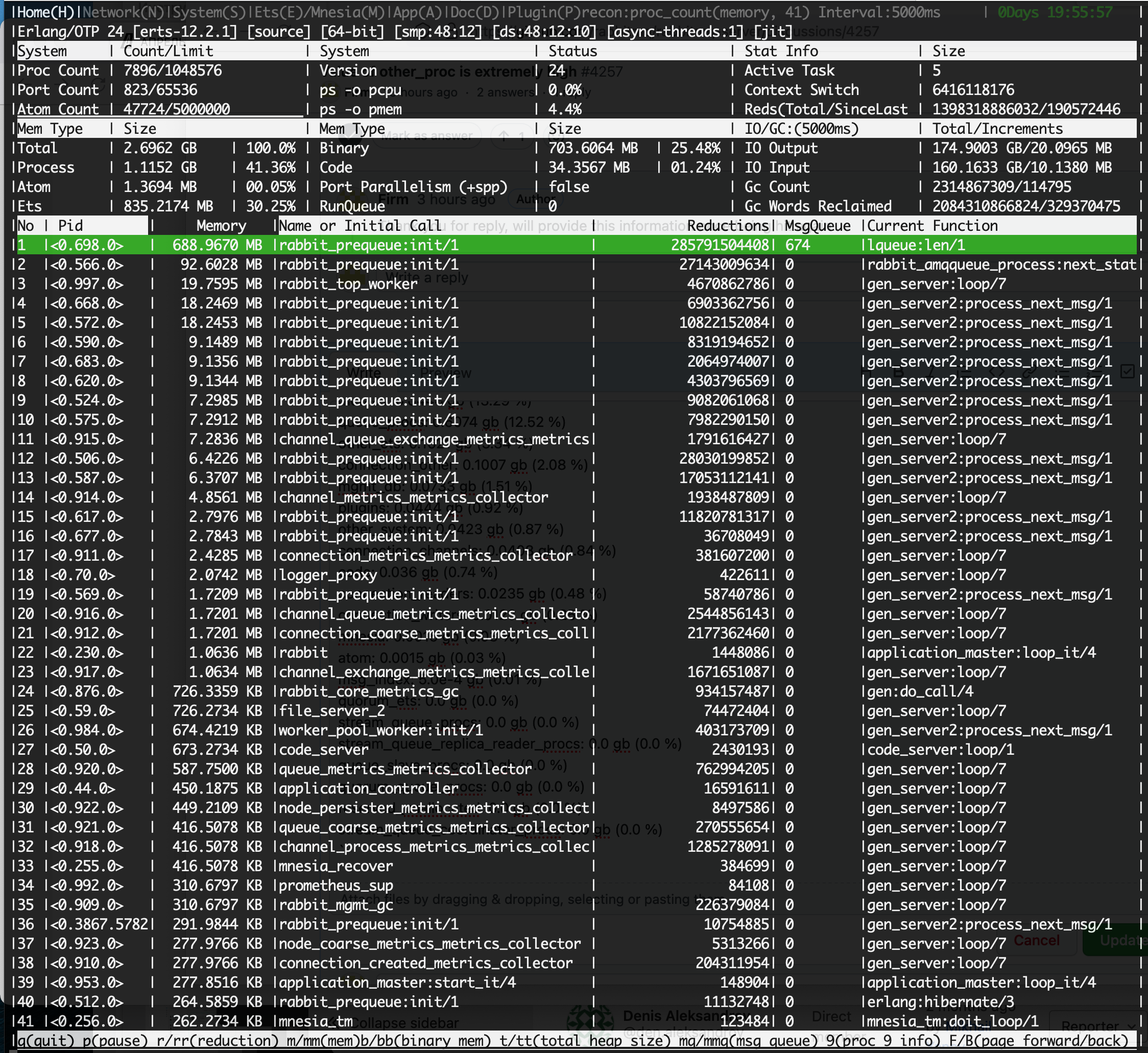

System is somewhere unresponsive: |

-

One more detail. When it (rabbitmq-server) hangs and is restarted, then it's very slow/unresponsive on startup with log messages like

-

|

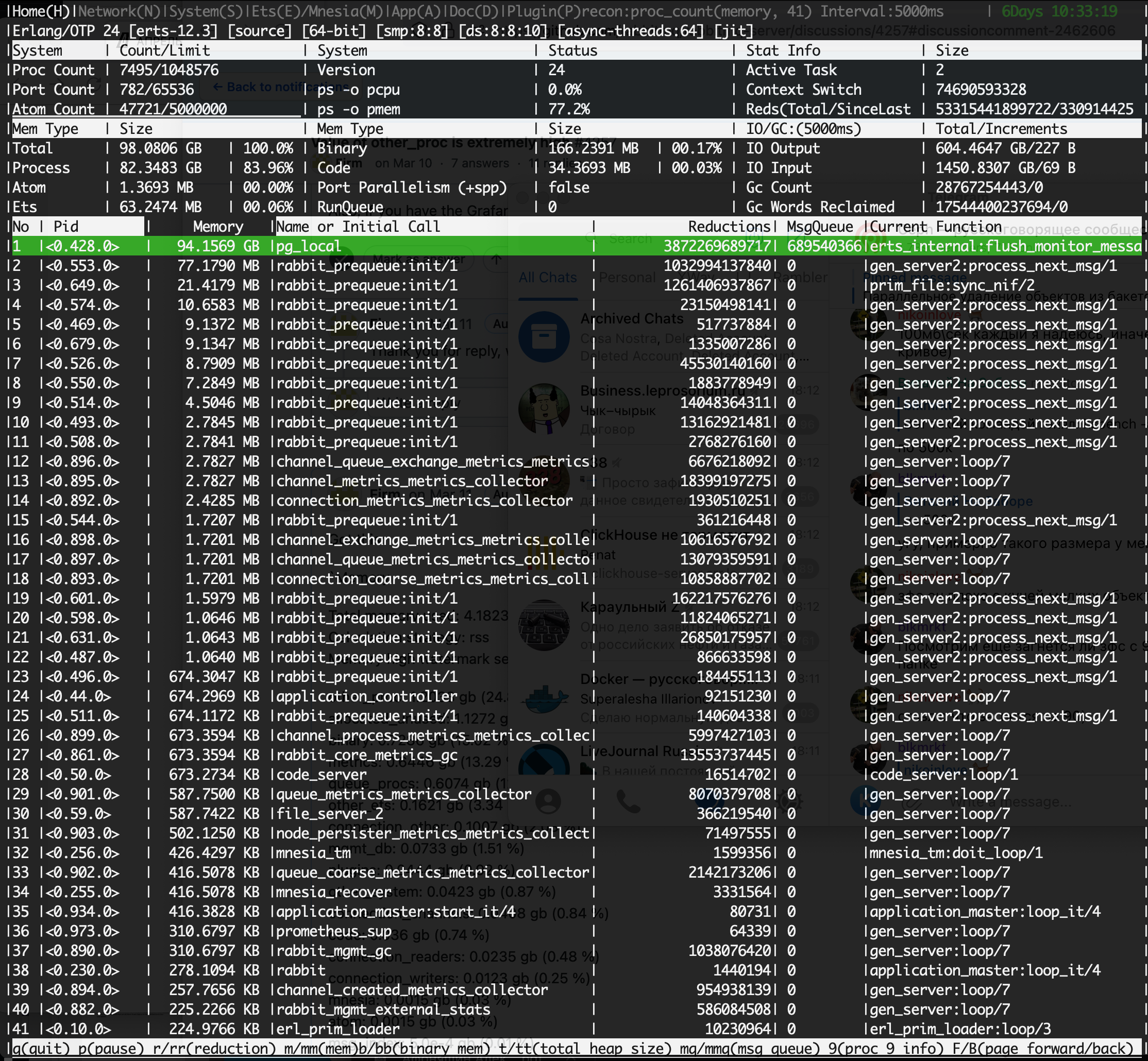

Was able to catch diagnostic data from almost unresponsive server. |

-

|

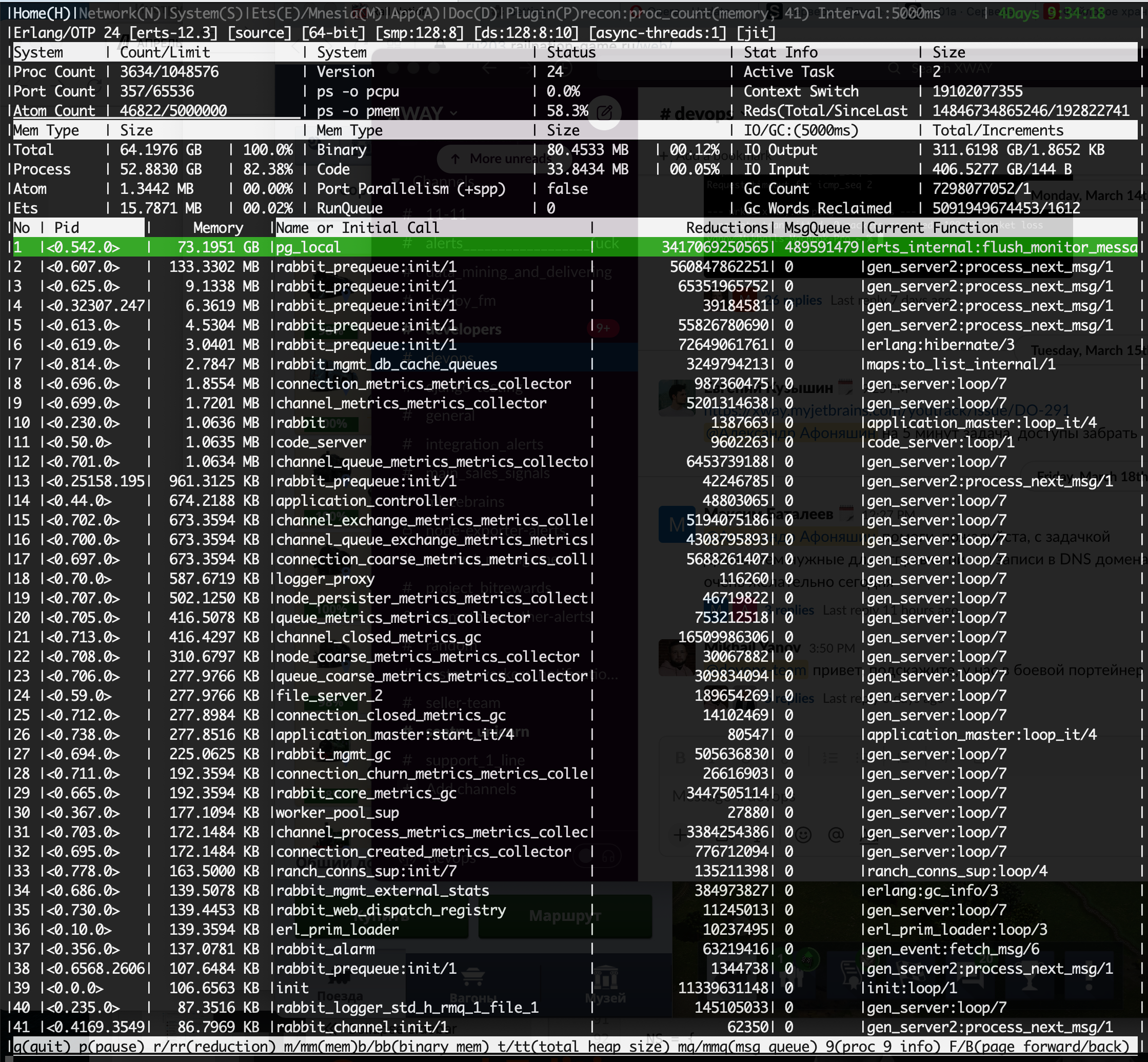

Btw, interesting thing: number of async-threads is 1, although it was set to 64 in #212 (almost 7 years ago). Currently I'm running 3.9.13-1 with 24.3.2 erlang package version. |

-

Questions / asks:

-

Another screenshot. 30 channels, 80 queues, around 40M messages total.

-

rabbitmqctl eval 'recon:info(pg_local).'
[{meta,

-

rabbitmqctl eval 'ets:info(pg_local_table, size).'
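The `rabbitmqctl eval` calls in this thread look at one process or one table at a time. For the broader question of which processes hold the memory, a minimal sketch like the one below can assemble two wider commands for an operator to run by hand. It assumes `recon` is available on the node (the `recon:info` call earlier in the thread suggests it is) and that `rabbitmq-diagnostics` is on the PATH; the Python helper names are hypothetical and exist only for this illustration.

```python
import shlex


def memory_breakdown_cmd(unit="gb"):
    """Build the CLI call that prints the node's memory breakdown by category."""
    return ["rabbitmq-diagnostics", "memory_breakdown", "--unit", unit]


def top_memory_processes_cmd(count=10):
    """Build a `rabbitmqctl eval` call listing the Erlang processes using the most memory.

    Assumes the recon library is loaded on the node.
    """
    if count < 1:
        raise ValueError("count must be a positive integer")
    expression = f"recon:proc_count(memory, {count})."
    return ["rabbitmqctl", "eval", expression]


if __name__ == "__main__":
    # Only print the commands; nothing is executed against a node here.
    for cmd in (memory_breakdown_cmd(), top_memory_processes_cmd(10)):
        print(shlex.join(cmd))
```

The script only prints shell-quoted commands, so it is safe to run on a machine without RabbitMQ installed; the output can then be pasted into a shell on the affected node.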

-

rabbitmqctl list_policies
Listing policies for vhost "/" ...

-

Hi,

I'm running version 3.9.13 with 5-10K rps. Watermarks are set both for memory (0.7) and disk. When the other_proc value is more than 60-70% (of 72GB), I see an unresponsive server process. How can I determine what eats the memory, and how do I fix that?

Regards,
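As a rough worked check of the numbers in this post, the sketch below compares the configured memory watermark with the reported other_proc share. It assumes that the 72GB figure is the node's total RAM and that the 0.7 watermark is a relative `vm_memory_high_watermark`; the function names are illustrative only and are not part of any RabbitMQ tooling.

```python
TOTAL_RAM_GB = 72.0             # assumption: "72GB" is the node's total RAM
HIGH_WATERMARK = 0.7            # relative memory watermark mentioned in the post
OTHER_PROC_SHARE = (0.60, 0.70)  # reported other_proc share of total memory


def alarm_threshold_gb(total_ram_gb, watermark):
    """Memory usage (in GB) at which a relative watermark raises the memory alarm."""
    if not 0 < watermark <= 1:
        raise ValueError("a relative watermark must be in (0, 1]")
    return total_ram_gb * watermark


def share_range_gb(total_ram_gb, share):
    """Convert a (low, high) fraction of total RAM into a (low, high) range in GB."""
    low, high = share
    return total_ram_gb * low, total_ram_gb * high


if __name__ == "__main__":
    threshold = alarm_threshold_gb(TOTAL_RAM_GB, HIGH_WATERMARK)
    low, high = share_range_gb(TOTAL_RAM_GB, OTHER_PROC_SHARE)
    print(f"memory alarm threshold: {threshold:.1f} GB")
    print(f"other_proc range:       {low:.1f} to {high:.1f} GB")
```

Under those assumptions the alarm threshold is 50.4 GB, while 60-70% of 72 GB is 43.2 to 50.4 GB, so other_proc alone would sit at or near the point where the memory alarm blocks publishers.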
