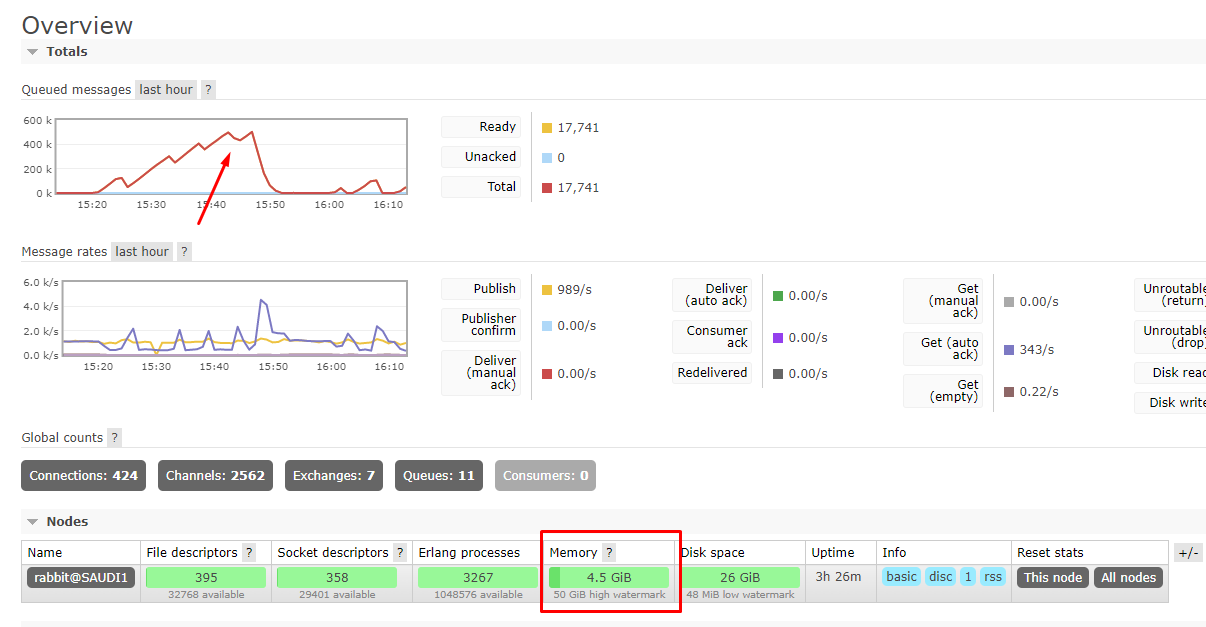

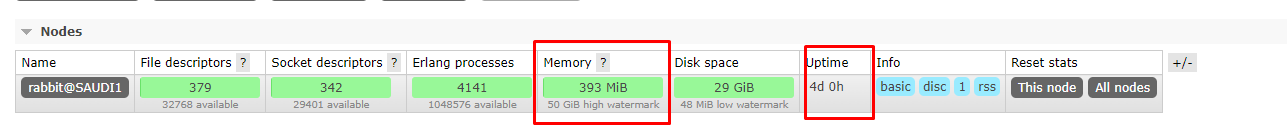

other_proc consumes 57% of memory on the node #5093


There is no evidence of a memory leak. Most likely this is the result of a few factors, or some combination of them:

Increasing the number of CPU cores available to the node and reducing the churn applications produce


It's not the number of entities (connections or queues) that's important here. It's the number of internally emitted events, which is [...]

RabbitMQ 3.6 had few types of events, so high churn probably did not affect it as much. For example, it did not have an event for failed authentication attempts. Do that 10K times a second and the [...]

Logs and churn metrics available via the management UI and Prometheus/Grafana will help narrow things down. I doubt this can be reproduced with PerfTest because it's not the message rates that are important here. Something heavily floods [...]
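To put a number on churn, one option is to take two snapshots of the cumulative churn counters and turn the difference into per-second rates. The sketch below is only an illustration: the counter names (`connection_created`, `connection_closed`) and the idea of reading them from the `churn_rates` object of the management HTTP API's `GET /api/overview` response are assumptions, so check them against what your RabbitMQ version actually returns. The helper `churn_per_second` is hypothetical and not part of any RabbitMQ tooling.

```python
from typing import Dict


def churn_per_second(
    before: Dict[str, int],
    after: Dict[str, int],
    interval_s: float,
) -> Dict[str, float]:
    """Convert two snapshots of cumulative counters into per-second rates.

    `before` and `after` map a counter name (assumed names, e.g.
    "connection_created") to its cumulative value at each sample time.
    """
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")

    rates: Dict[str, float] = {}
    for name, end in after.items():
        start = before.get(name, 0)
        # Counters restart from zero when the node restarts; clamp at 0
        # so a reset does not show up as a negative rate.
        delta = max(end - start, 0)
        rates[name] = delta / interval_s
    return rates


if __name__ == "__main__":
    # Hypothetical snapshots taken 60 seconds apart.
    first = {"connection_created": 1_000, "connection_closed": 990}
    second = {"connection_created": 601_000, "connection_closed": 600_990}
    for counter, rate in churn_per_second(first, second, 60.0).items():
        print(f"{counter}: {rate:.0f}/s")
```

Rates in the thousands per second for connection or channel counters would point at the kind of churn discussed above rather than at message volume.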


Is there any way to clear this backlog "on the fly"? Or maybe a setting to not generate these events at all? This is not what I want from RabbitMQ. I only want it to receive messages quickly and pass them on quickly, and to use memory only for storing messages, not for some unknown events.


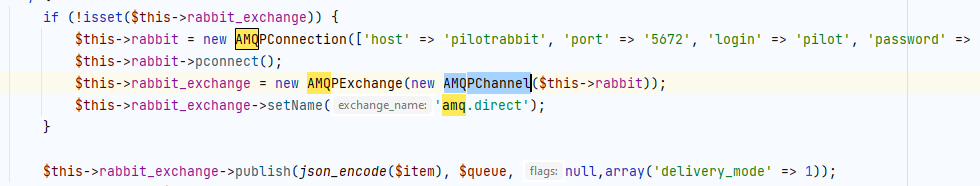

It looks like you're using PHP. I suggest using this proxy: https://github.com/cloudamqp/amqproxy
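On the client side, switching to a proxy is usually just a matter of pointing the connection URL at the proxy instead of the broker. The sketch below shows that rewrite in Python for illustration only (the same idea applies to a PHP client). The helper `via_proxy` is hypothetical, and the proxy address `127.0.0.1:5673` is an assumption: use whatever host and port your proxy instance actually listens on.

```python
from urllib.parse import urlsplit, urlunsplit


def via_proxy(
    amqp_url: str,
    proxy_host: str = "127.0.0.1",  # assumed: proxy runs next to the app
    proxy_port: int = 5673,         # assumed listen port, check your setup
) -> str:
    """Return amqp_url with host and port replaced by the proxy's.

    Credentials, virtual host (path) and query parameters are kept as-is,
    so the proxy can pass them through to the upstream broker.
    """
    parts = urlsplit(amqp_url)

    # Rebuild the "user:password@" prefix only if credentials were given.
    userinfo = ""
    if parts.username is not None:
        userinfo = parts.username
        if parts.password is not None:
            userinfo += ":" + parts.password
        userinfo += "@"

    netloc = f"{userinfo}{proxy_host}:{proxy_port}"
    return urlunsplit(
        (parts.scheme, netloc, parts.path, parts.query, parts.fragment)
    )


if __name__ == "__main__":
    # Hypothetical broker URL; "%2f" is the URL-encoded default vhost "/".
    print(via_proxy("amqp://guest:guest@rabbit.internal:5672/%2f"))
```

With short-lived client processes, each request then opens a cheap connection to the local proxy, which is how a proxy can reduce connection churn on the broker.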
