
Thanks for the report. I used your steps to try out some scenarios, all using the

Scenario 1

During publishing, I can see memory use of each node grow, as expected, because there is sufficient RAM to keep them in memory without triggering paging to disk. After clearing

Scenario 2

Same as 1, with

During publishing I can see memory stay well below the high watermark as RabbitMQ pages messages to disk. When publishing completes, almost all of the messages are paged to disk (there is only about 10-20 MiB in RAM) and memory usage is between 300 and 400 MiB per node. When I clear the policy, nothing exciting happens at all, and I can see that the queues are fully "lazy", i.e. only 2K messages in RAM.

So it seems that I can't immediately reproduce this issue. I will return to it tomorrow using RabbitMQ 3.9.20. What version of Erlang are you using?


Thanks for looking into this. To reproduce, I used:

I'll also add that I'm reproducing this using

Edit: you may also try increasing the number of messages. I found it reproduced fairly reliably at 1 million messages, but it seems that the fewer messages there are, the less reliably it reproduces.


Thanks for the reproduction repo and scripts. I was able to reproduce the issue using your repo (with some adjustments), with only the 1 million messages and 3 nodes. The adjustments are:


Hi there,

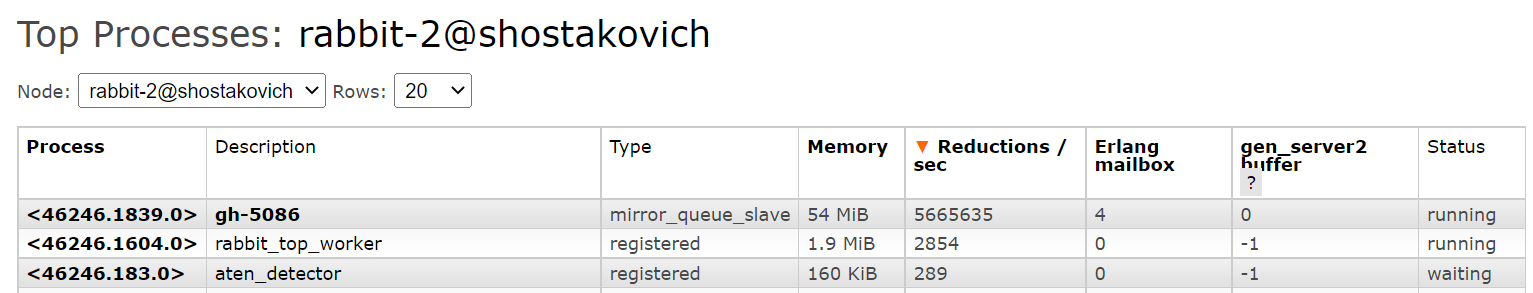

I am experiencing a stuck classic mirrored queue on my test RabbitMQ 3.9.20 cluster broker due to a queue’s effective policy change. While stuck, the queue blocks both publishing and consumption of messages and is listed as unresponsive by `rabbitmqctl list_unresponsive_queues`. Otherwise I can still query the queue metadata from the management API, which reports the queue as having “running” state.

When this happens, scheduler CPU utilization increases and stays elevated. Observing rabbitmq_top, it appears that RabbitMQ has a high number of reductions on the queue’s mirror_queue_slave process and is unable to complete `rabbit_variable_queue:convert_to_lazy`.

Reproduction

It can be reproduced by deleting the policy in use by a queue, causing the queue to apply a lower priority policy that has lazy queues enabled:

`rabbitmqctl -n node1@localhost set_policy policy-0 ".*" '{"ha-mode":"all", "ha-sync-mode": "automatic", "queue-mode": "lazy"}' --priority 0`

`rabbitmqctl -n node1@localhost set_policy policy-1 ".*" '{"ha-mode":"all", "ha-sync-mode": "automatic"}' --priority 1`

`rabbitmqctl -n node1@localhost clear_policy policy-1`

Node1 configuration:

Node2 configuration:

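For anyone trying to repeat the reproduction, the three policy commands can be wrapped in a small shell sketch like the one below. It is only an illustration: the function names, the `run` helper, and the `DRY_RUN` switch are hypothetical and not part of RabbitMQ or of the scripts discussed in this thread, and the node name is simply the one used in the commands above. By default it only prints the commands; the printed form drops the shell quoting, so it is meant for inspection rather than copy-paste.

```shell
# Sketch of the reproduction steps. Function names, NODE and DRY_RUN are
# illustrative assumptions; only the rabbitmqctl commands come from the post.

# Print the command when DRY_RUN is 1 (the default), otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Step 1: declare both policies. policy-1 has the higher priority, so the
# queues start out mirrored but not lazy.
setup_policies() {
  run rabbitmqctl -n "${NODE:-node1@localhost}" set_policy policy-0 ".*" \
    '{"ha-mode":"all", "ha-sync-mode": "automatic", "queue-mode": "lazy"}' \
    --priority 0
  run rabbitmqctl -n "${NODE:-node1@localhost}" set_policy policy-1 ".*" \
    '{"ha-mode":"all", "ha-sync-mode": "automatic"}' \
    --priority 1
}

# Step 2 (after publishing a large backlog): clear policy-1 so that policy-0
# becomes effective and the queues switch to lazy mode, then list any queues
# that have stopped responding.
trigger_conversion() {
  run rabbitmqctl -n "${NODE:-node1@localhost}" clear_policy policy-1
  run rabbitmqctl -n "${NODE:-node1@localhost}" list_unresponsive_queues
}
```

A possible way to use it: source the file, call `setup_policies`, publish the backlog (around 1 million messages was reported as fairly reliable), then call `trigger_conversion`. Set `DRY_RUN=0` to actually execute the commands against a test cluster.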