tls_sender processes holding on to memory during memory alarm #5346


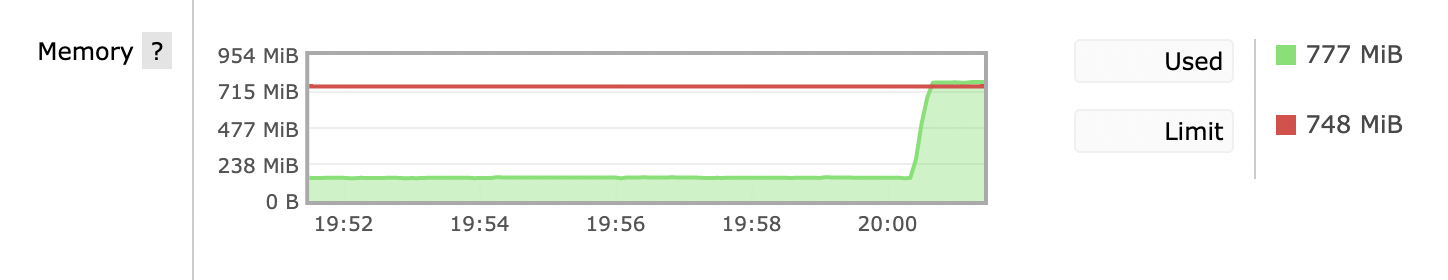

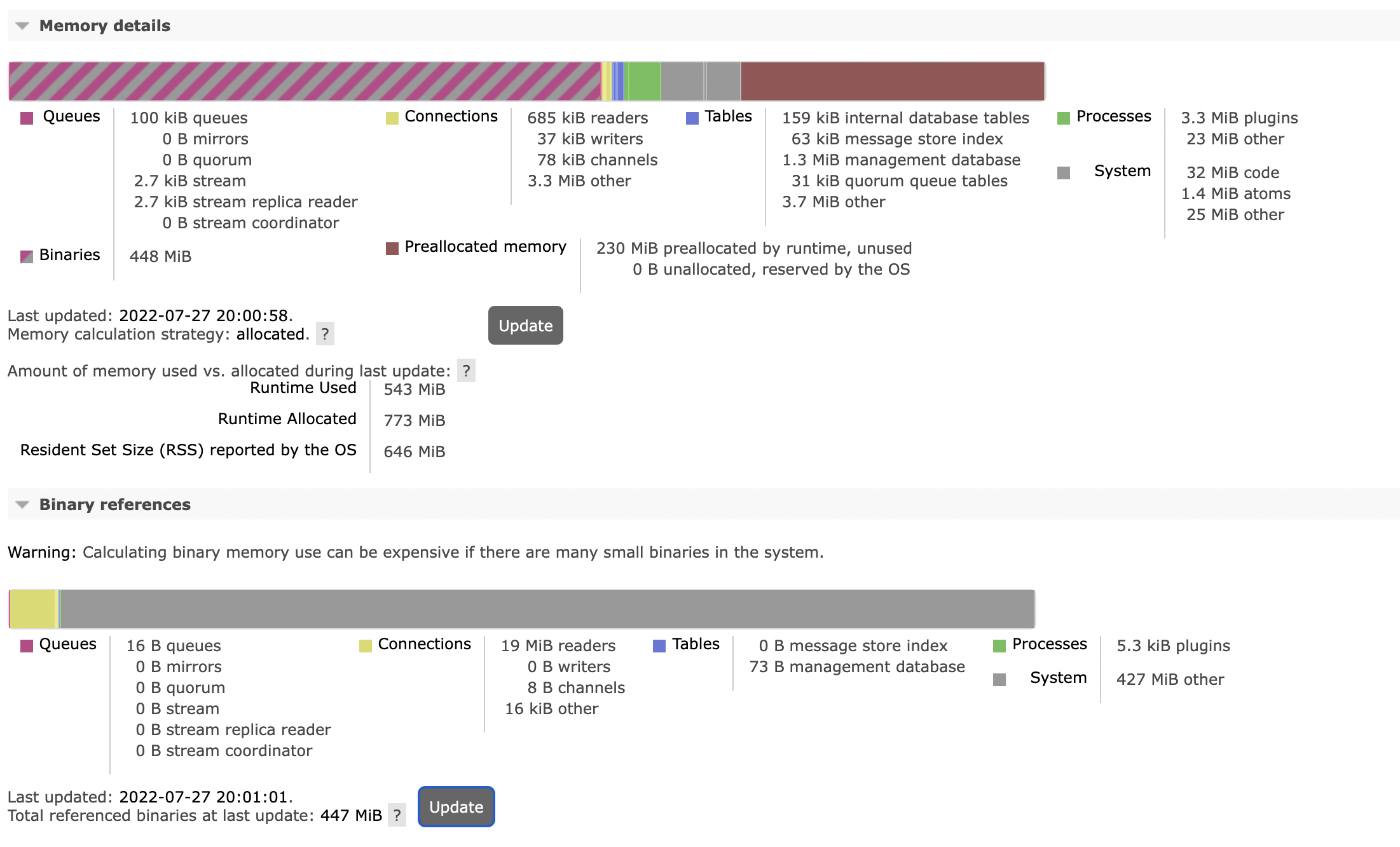

We noticed that after a spike of traffic, BEAM memory grew to a level that set the memory resource limit alarm. At that point publishers were blocked and consumers consumed all messages, yet memory still did not decrease. A large share of it was attributed to binaries > other. Memory did not drop until a GC of all processes was executed.

The issue here is that, because the node is inactive, GC is never triggered automatically, so RabbitMQ cannot recover from the memory alarm. As it turns out, the large binaries are referenced by tls_sender processes. RabbitMQ has a smart way to trigger GC of its own processes (https://github.com/rabbitmq/rabbitmq-server/blob/master/deps/rabbit_common/src/rabbit_writer.erl#L412); however, if the connection uses TLS instead of plain TCP, there are extra OTP proxy processes that are prone to the same issue.

Question

I wonder if there is anything to do about this on the RabbitMQ side (eg could

To reproduce

The issue needs a TLS connection and a few large messages instead of many smaller ones. The customer had a few 100 MB messages (I know, anti-pattern :( ). Also, if a connection is closed, the associated tls_sender process terminates, which obviously frees up the binaries. Screenshots from the reproduction are at the top of this post.
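For anyone reproducing this, the "GC of all processes" step can be approximated with a few expressions in a remote shell on the affected node. This is only a minimal sketch using standard `erlang` BIFs; it is not what RabbitMQ does internally, and the before/after comparison is just an illustration of how the effect could be observed.

```erlang
%% Run on the affected node, for example from a remote shell.
%% Binary memory before the sweep, in bytes.
Before = erlang:memory(binary),
%% Force a garbage collection of every process on the node
%% (this includes the tls_sender processes).
_ = [erlang:garbage_collect(Pid) || Pid <- erlang:processes()],
%% Binary memory after the sweep, in bytes.
After = erlang:memory(binary),
io:format("binary memory: ~p -> ~p bytes~n", [Before, After]).
```

If the binaries are indeed held only by idle processes, the second number should be noticeably lower than the first.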


Replies: 2 comments 7 replies



NOTE: see the following Erlang SSL application setting that may help in this situation: https://www.erlang.org/doc/man/ssl.html#type-hibernate_after
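As a rough sketch of how that option might be set for RabbitMQ's TLS listeners, assuming it is passed through `ssl_options` in `advanced.config` and that the existing certificate and key settings are configured elsewhere. The 5000 ms value is purely illustrative, and whether the tls_sender process itself hibernates may depend on the Erlang/OTP version, so this is worth verifying on the release in use.

```erlang
%% advanced.config (sketch; only the added key is shown)
[
  {rabbit, [
    {ssl_options, [
      %% Put an idle TLS connection into hibernation after 5 seconds
      %% of inactivity; hibernation garbage-collects the process.
      {hibernate_after, 5000}
    ]}
  ]}
].
```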


I am not aware of a way to obtain a reference to their tls_sender. You can enable background GC which runs periodically for such environments. 20 MB messages arguably belong to a blob store.
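For reference, a minimal sketch of enabling background GC through `advanced.config`. The interval is in milliseconds and the value here is only an example. The same two keys, `background_gc_enabled` and `background_gc_target_interval`, can also be set in `rabbitmq.conf`.

```erlang
%% advanced.config (sketch; the interval is illustrative)
[
  {rabbit, [
    %% Periodically force a GC of all processes on the node.
    {background_gc_enabled, true},
    %% Target interval between background GC runs, in milliseconds.
    {background_gc_target_interval, 60000}
  ]}
].
```

Background GC trades some CPU time for lower memory retention, so the interval may need tuning for the workload.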