
-


@vjvel I personally find it outright rude when you instantly @-mention individual contributors you have never chatted with before. It seems you found the author of the last commit and decided that your issue is so important that you can demand their attention, and then completely ignored the issue template, which mentions that our team does not use GitHub issues for questions. Start with the metrics from the Runtime guide. We also cannot tell whether the nodes are being OOM-killed; check the pod status and events. Nor do we know the number of queues and their types. Tens of thousands of queues will have a noticeable memory footprint even if they are empty. Finally, we have no idea what limits or resource quotas [1] are applied to the pods. We do not guess in this community. So before you start mentioning individual contributors asking for free help, consider collecting some data for them to work with.
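A minimal sketch of collecting the data asked for above, assuming `kubectl` access to the namespace and the pod naming seen later in this thread (`rabbitmq-0`). The function names, the namespace argument and the `DRY_RUN` switch are hypothetical; the `kubectl`, `rabbitmq-diagnostics` and `rabbitmqctl` subcommands are standard, but check them against the versions you run.

```shell
#!/usr/bin/env bash
# Hypothetical helper: gather the data requested in this thread for one pod.
# Assumes kubectl can reach the namespace; all names here are illustrative.

run() {
  # Echo each command; execute it unless DRY_RUN=1 is set.
  echo "+ $*"
  if [ "${DRY_RUN:-0}" != "1" ]; then
    "$@"
  fi
}

collect_rabbitmq_data() {
  local pod="$1" ns="${2:-default}"
  # Pod status, restart count and last termination reason (e.g. OOMKilled)
  run kubectl -n "$ns" describe pod "$pod"
  # Recent events in the namespace, sorted by last occurrence
  run kubectl -n "$ns" get events --sort-by=.lastTimestamp
  # Resource quotas applied to the namespace
  run kubectl -n "$ns" get resourcequota
  # Runtime thread (scheduler) activity, sampled over 60 seconds
  run kubectl -n "$ns" exec "$pod" -- rabbitmq-diagnostics runtime_thread_stats --sample-interval 60
  # Number of queues, their types and message counts
  run kubectl -n "$ns" exec "$pod" -- rabbitmqctl list_queues name type messages
}
```

For example, `DRY_RUN=1 collect_rabbitmq_data rabbitmq-0 my-namespace` only prints the commands; without `DRY_RUN=1` they are executed and their output can be attached to the discussion.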


-


A workload with 100K queues holding 10 messages each requires a very different resource allocation than one with 10 streams holding 100K messages each, in particular if quorum queues are used in RabbitMQ versions before 3.11.
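The difference is in shape, not volume: both hypothetical workloads hold the same total number of messages, but one has 10,000 times more queues, each carrying its own fixed overhead. A quick check with shell arithmetic (the function name is illustrative):

```shell
#!/usr/bin/env bash
# Total messages held by N queues (or streams) with M messages each.
total_messages() {
  echo $(( $1 * $2 ))
}

total_messages 100000 10    # 100K queues x 10 messages: prints 1000000
total_messages 10 100000    # 10 streams x 100K messages: prints 1000000
echo $(( 100000 / 10 ))     # ratio of queue counts: prints 10000
```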


-


CPU resource request/limit: 6/8. None of the 3 pods has an OOM issue; only the CPU is heavily used. We have 160 queues in total, all of them classic. Around 30 to 50 queues are in the running state at any time, each with 2k to 15k messages. As I mentioned, for 12 hours the application rejects the messages from these queues and they get redelivered. During that time we have a lot of CPU issues. We are also changing the logic on the application side, but we need to understand the recommended Erlang parameters for CPU usage. I will go through the Runtime guide. I really apologize if this came across badly; I am new to RabbitMQ and thought you could help with this matter. Let me know what other information you need to make a suggestion.
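To back figures like these with data, the output of `rabbitmqctl list_queues name type messages` can be summarized per queue type. A minimal sketch, assuming the default tab-separated output; the function name is hypothetical, and header or notice lines are skipped because their third field is not a number.

```shell
#!/usr/bin/env bash
# Hypothetical helper: summarize "name<TAB>type<TAB>messages" lines per type.
# Lines whose third field is not a non-negative integer (headers, notices)
# are ignored. Prints one line per queue type, sorted by type name.
summarize_queues() {
  awk -F '\t' '
    $3 ~ /^[0-9]+$/ {
      count[$2]++            # queues of this type
      total[$2] += $3        # messages across those queues
      if ($3 > 0) nonempty[$2]++
    }
    END {
      for (t in count)
        printf "%s queues=%d non_empty=%d messages=%d\n", t, count[t], nonempty[t] + 0, total[t]
    }' | sort
}
```

Piping the `rabbitmqctl list_queues name type messages` output from one of the pods into `summarize_queues` prints, per queue type, the number of queues, how many of them are non-empty and the total message count.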


-


160 queues is not a lot, but you can still consider following these recommendations on reducing the per-queue CPU footprint. It can be reduced linearly by increasing the metric emission interval, e.g. from 5s to 30s, which results in roughly 1/6 of the original load. In 3.11, quorum queues have a smaller memory footprint under active operation, and you don't have to configure anything for that. Streams, and possibly super streams in 3.11, sound like a good fit for this workload with a retention period of 12+ hours. I don't see any of the runtime thread metrics we requested, so I cannot really suggest much more.
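A minimal sketch of both suggestions, under stated assumptions. The metric emission interval most likely corresponds to the `collect_statistics_interval` key in `rabbitmq.conf` (in milliseconds, default 5000); the helper below edits a plain `rabbitmq.conf` file, so adapt it to however your deployment renders that file. The stream part only builds the queue arguments (`x-queue-type` set to `stream`, retention via `x-max-age`) to pass to whichever client declares the queue; streams must be declared as durable. All function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the two suggestions above.

# Set collect_statistics_interval (milliseconds) in a rabbitmq.conf file,
# replacing an existing setting or appending one if none is present.
set_stats_interval() {
  local conf="$1" interval_ms="$2"
  if grep -q '^collect_statistics_interval[[:space:]]*=' "$conf" 2>/dev/null; then
    sed -i.bak "s/^collect_statistics_interval[[:space:]]*=.*/collect_statistics_interval = ${interval_ms}/" "$conf"
    rm -f "$conf.bak"
  else
    echo "collect_statistics_interval = ${interval_ms}" >> "$conf"
  fi
}

# Statistics emissions per minute for a given interval in milliseconds.
emissions_per_minute() {
  echo $(( 60000 / $1 ))
}

# JSON queue arguments for a stream that retains messages for N hours.
stream_args() {
  printf '{"x-queue-type":"stream","x-max-age":"%sh"}\n' "$1"
}

emissions_per_minute 5000    # prints 12
emissions_per_minute 30000   # prints 2, i.e. 1/6 of the original rate
stream_args 12               # prints {"x-queue-type":"stream","x-max-age":"12h"}
```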


-

```
I have no name!@rabbitmq-0:/$ rabbitmqctl version
3.10.5
```

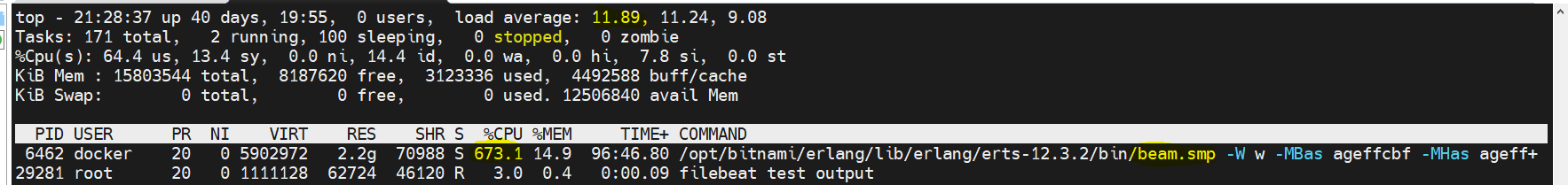

The `beam.smp` process (the Erlang VM running RabbitMQ) is using a lot of CPU.

@michaelklishin We have a production RabbitMQ deployment used to queue millions of messages that, per the CITC rule, are not delivered/acknowledged for 12 hours. We have to keep these messages in the queue until the application acknowledges them after 12 hours. During this time we run into a lot of issues, including crashing RabbitMQ pods.

We need to apply a recommended configuration for this. Currently it runs with 8 vCPU / 12 GB of memory (memory high watermark at 70%). Please suggest what else we can apply to minimize CPU usage while keeping the queues durable.
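For context on the 70% figure: with a relative memory high watermark (`vm_memory_high_watermark.relative` in `rabbitmq.conf`), RabbitMQ raises a memory alarm and blocks publishers once its memory use crosses that fraction of the memory limit it detects. A sketch of the arithmetic, assuming the 12 GB limit means 12 GiB (12288 MiB) and that RabbitMQ detects the container limit rather than the host's total memory; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Memory (MiB, truncated) at which the memory alarm would fire for a given
# memory limit in MiB and a relative watermark such as 0.7.
watermark_threshold_mib() {
  awk -v limit="$1" -v ratio="$2" 'BEGIN { printf "%d\n", limit * ratio }'
}

watermark_threshold_mib 12288 0.7   # prints 8601 (about 8.4 GiB)
```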
