Growing memory footprint of a node while using streams #7362

-


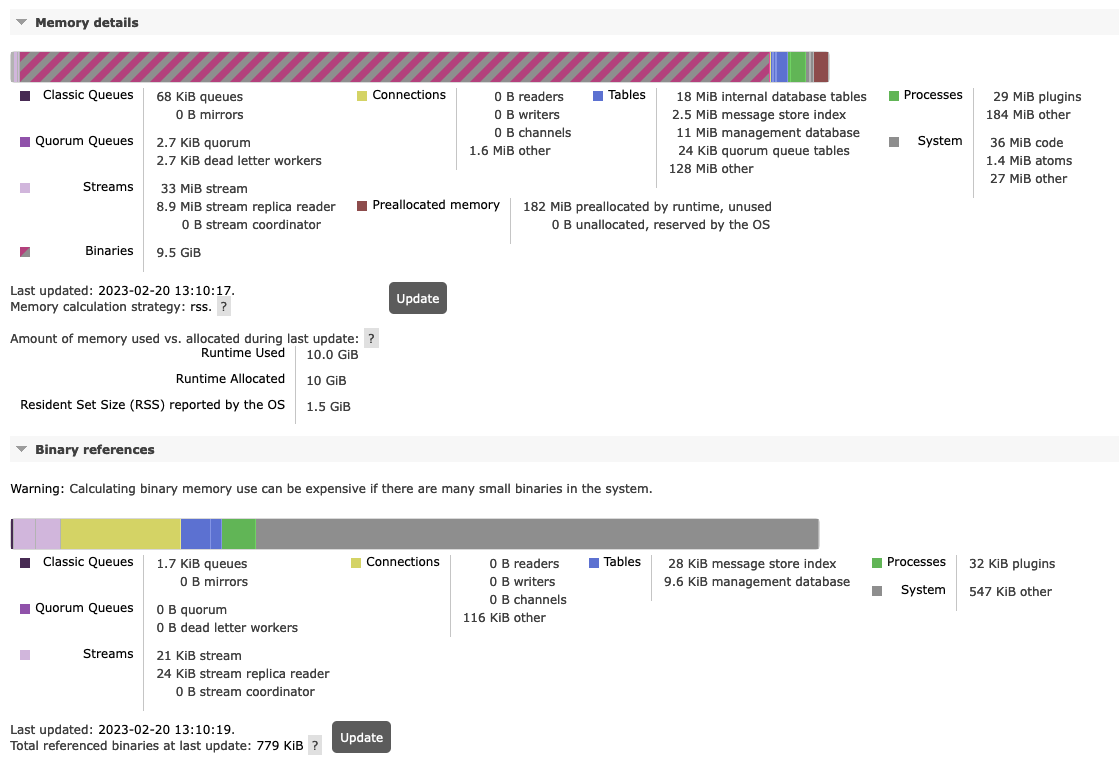

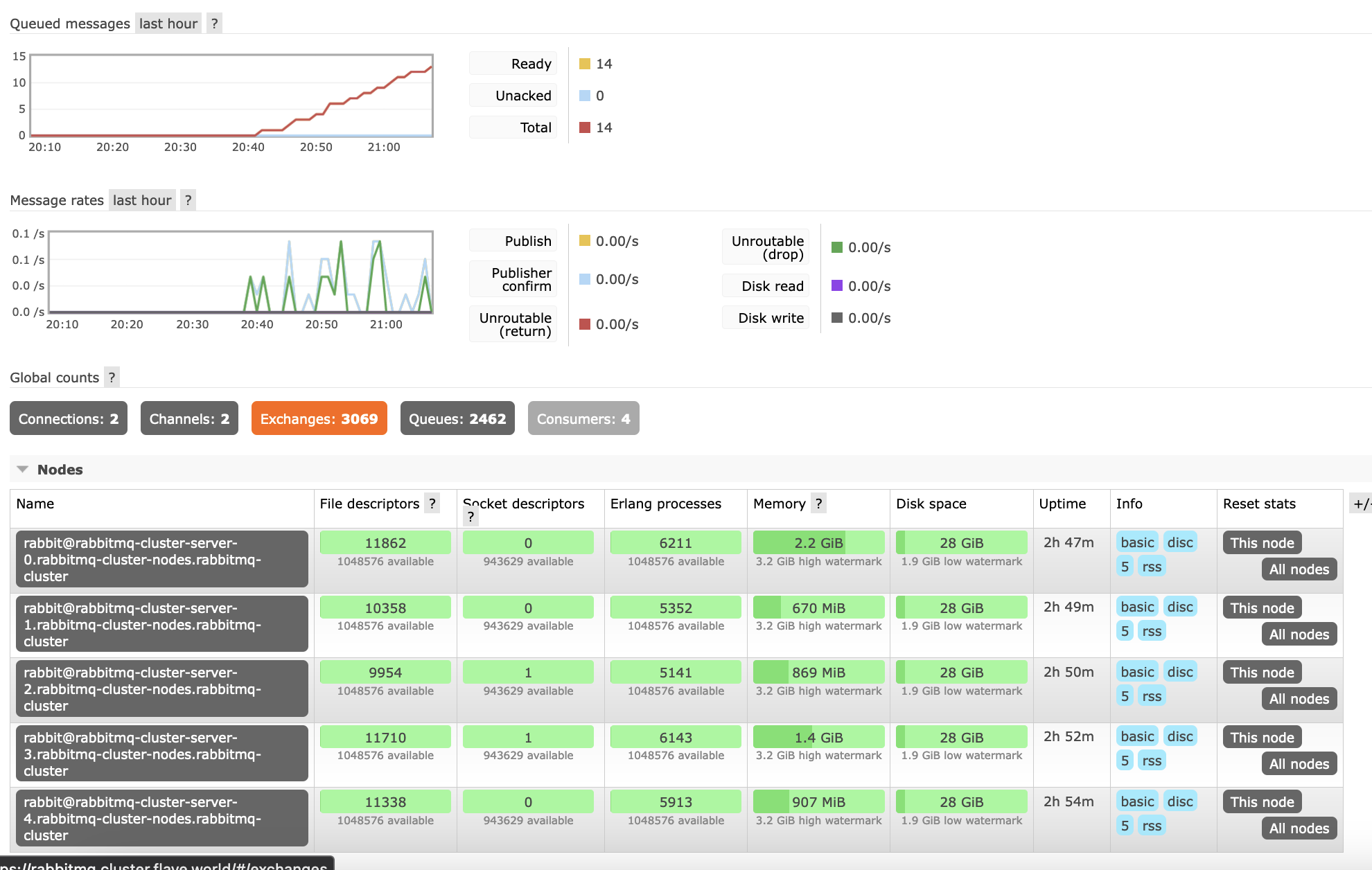

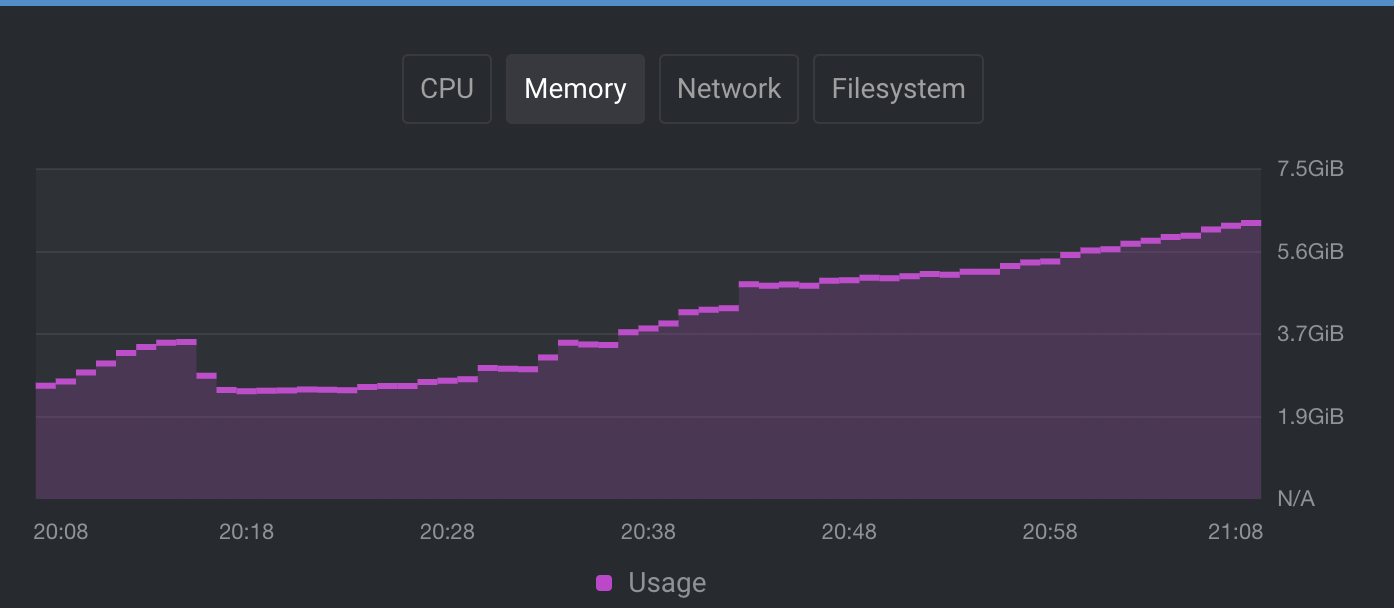

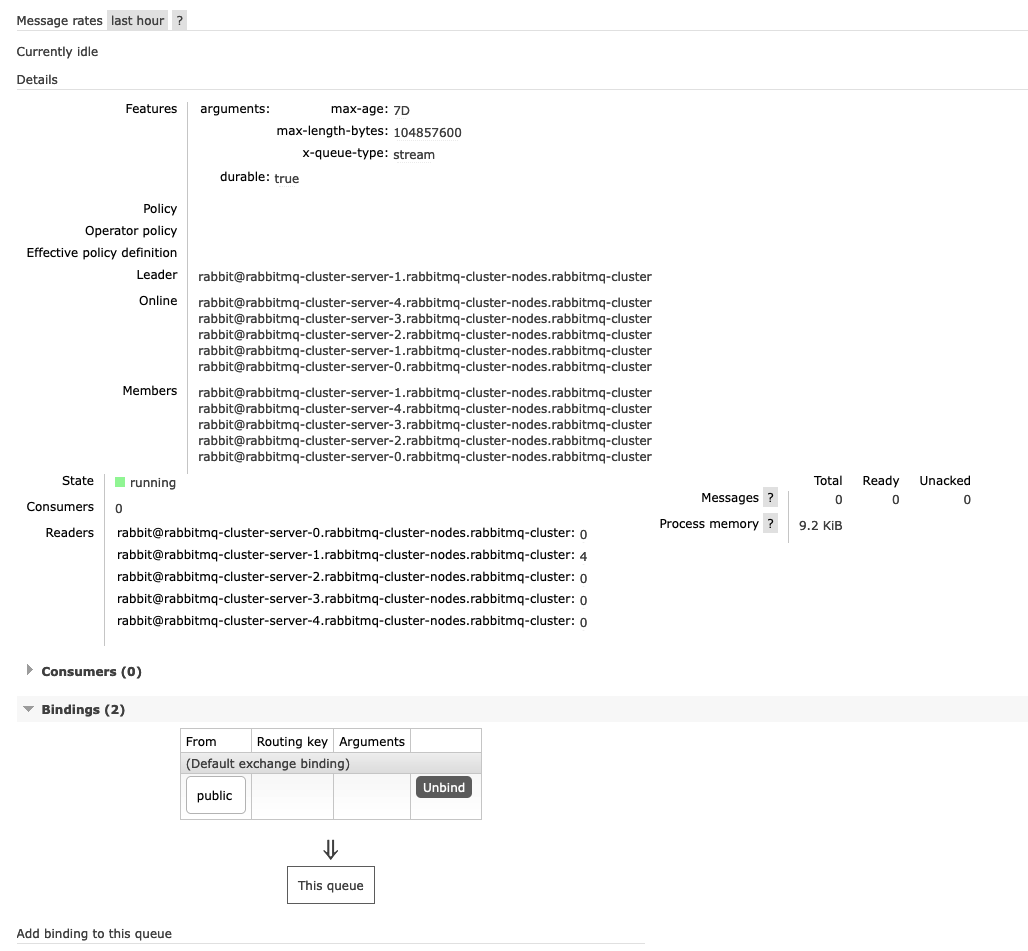

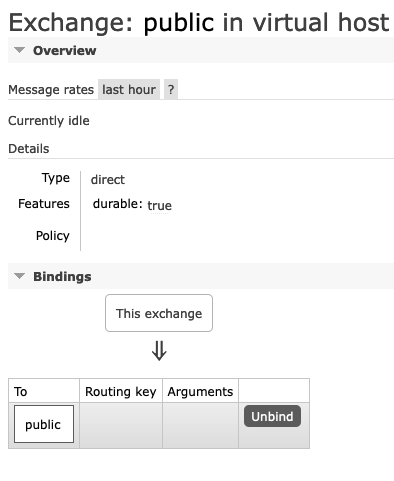

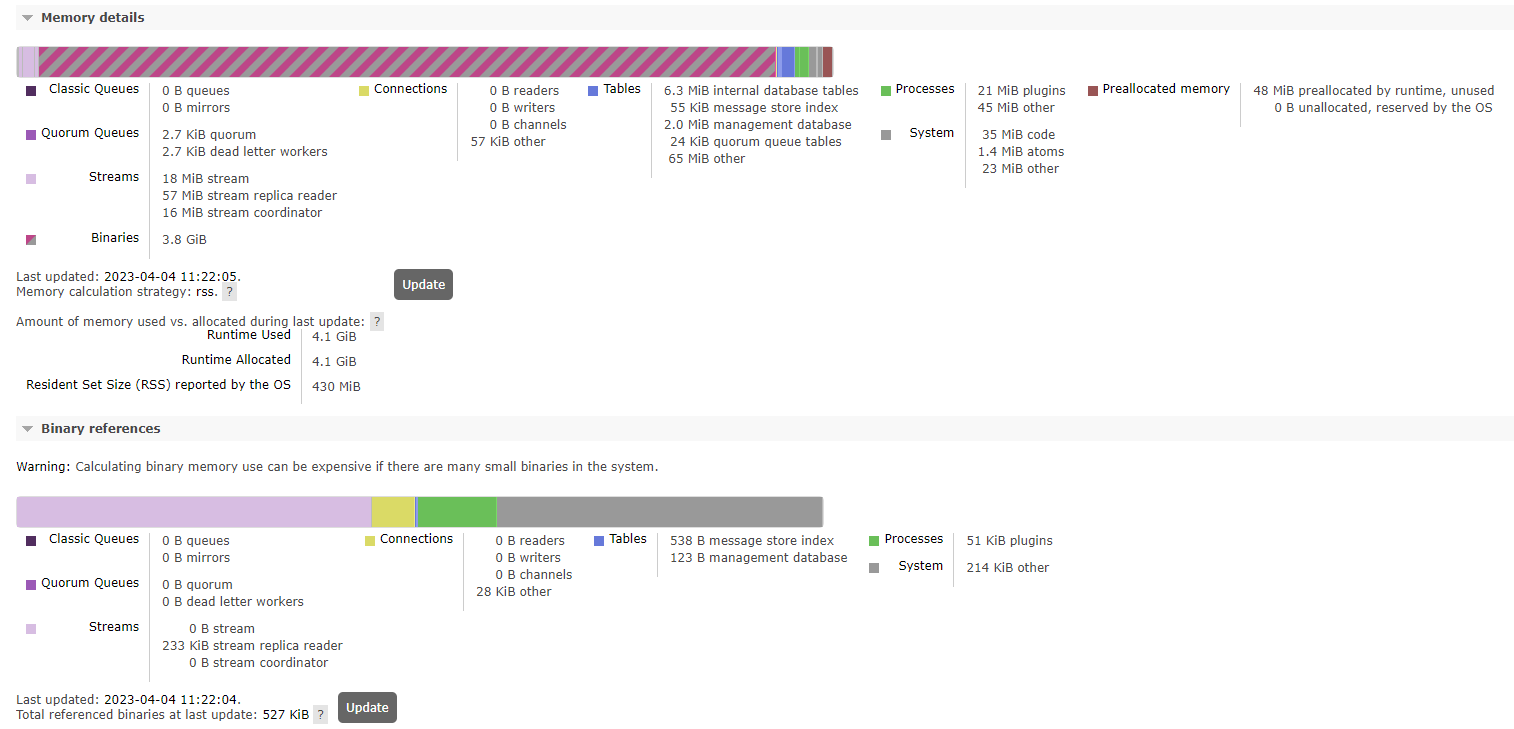

Hi, we rolled out RabbitMQ with Web STOMP and stream queues for our vhosts (around 50 customers). It seems there is a memory leak somewhere: the memory usage of our cluster keeps growing, and after about 120 minutes I have to restart the nodes, but I can't really understand why. We only have 13 connections, yet the nodes run out of RAM after 1-2 hours. There are also not many messages being sent (the biggest queue has 150 messages in total). The memory inspection shows that the growth is attributed to binaries (message metadata etc.), but how can that be if these are stream queues? It would be great if anyone could give me a hint... :( RabbitMQ 3.11.8


Replies: 27 comments 150 replies

-


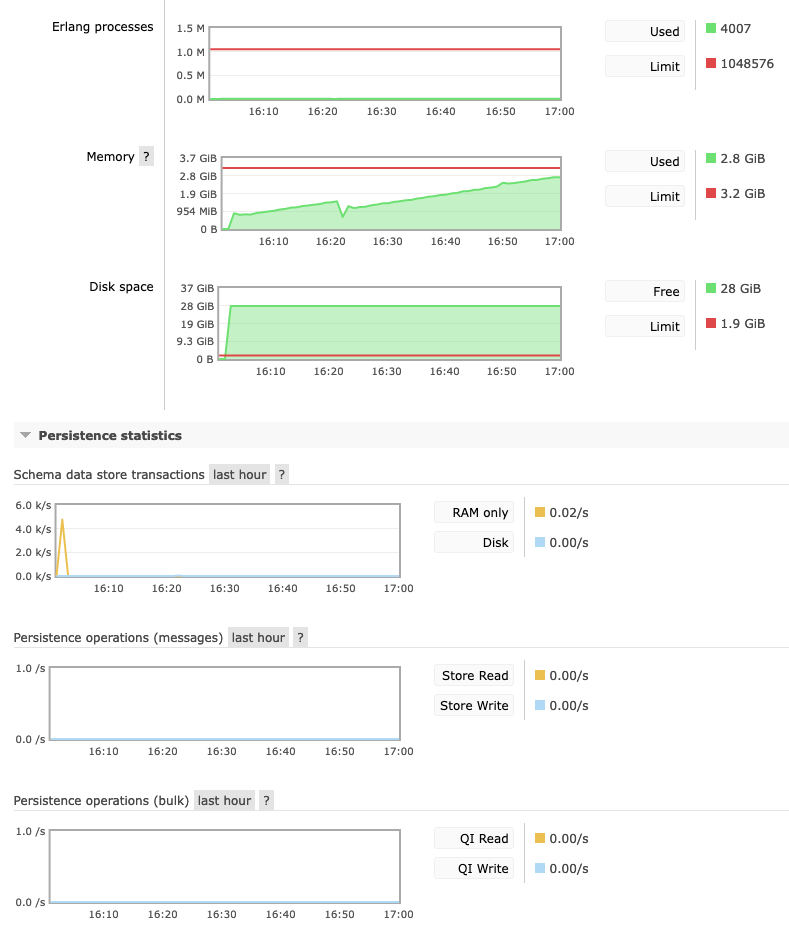

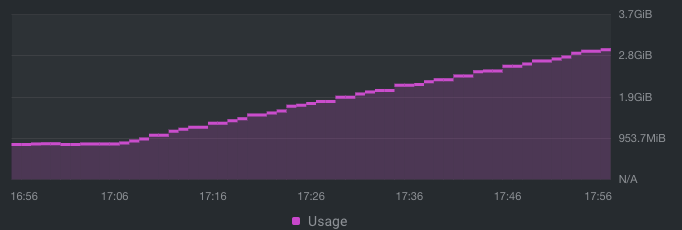

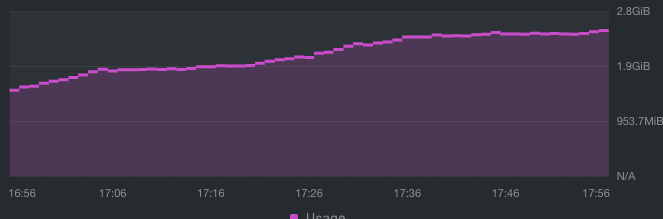

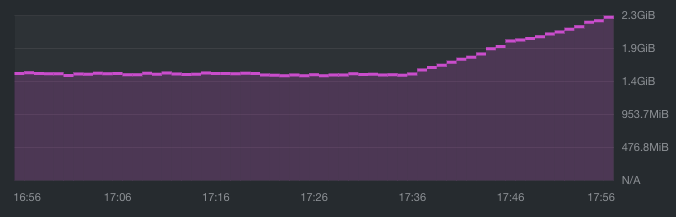

I really don't understand it: the memory consumption grows linearly, even though almost nothing is happening on the cluster.

-

Also, the connection churn stats are not bad.

-

Which version of RabbitMQ are you using?

…On Mon, 20 Feb 2023 at 15:26, h0jeZvgoxFepBQ2C ***@***.***> wrote:

[image: Screenshot 2023-02-20 at 16 25 15]

<https://user-images.githubusercontent.com/10075/220145997-18d09d76-ce06-41b0-80b1-3f63d715cda9.png>

Also, the connection churn stats are not bad.


--

*Karl Nilsson*

-

Streams don't record persistence operations in the RabbitMQ node metrics. Use OS-level counters to see what I/O is actually being performed.
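Here is a minimal sketch of reading such OS-level counters on Linux. It assumes the RabbitMQ node runs as a beam.smp process whose PID you know (for example from pgrep beam.smp). It parses /proc/<pid>/io, where write_bytes counts the bytes the process caused to be sent to the storage layer. Sampling twice and taking the difference gives the I/O performed in between. The function names are illustrative and not part of any RabbitMQ tool.

```python
import time
from pathlib import Path


def parse_proc_io(text: str) -> dict:
    """Parse the 'name: value' lines of a Linux /proc/<pid>/io file."""
    counters = {}
    for line in text.splitlines():
        name, sep, value = line.partition(":")
        if sep:
            counters[name.strip()] = int(value.strip())
    return counters


def read_proc_io(pid: int) -> dict:
    # Linux only; reading another user's process usually requires running
    # as the same user or as root.
    return parse_proc_io(Path(f"/proc/{pid}/io").read_text())


def io_delta(pid: int, interval_s: float = 60.0) -> dict:
    """Sample the counters twice and return how much each one grew."""
    before = read_proc_io(pid)
    time.sleep(interval_s)
    after = read_proc_io(pid)
    return {name: after[name] - before.get(name, 0) for name in after}
```

For example, io_delta(pid)["write_bytes"] with the node's PID shows how much was actually written during the last minute. This can be compared against the near-zero persistence rates in the management UI.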

Question: do your clients create a new connection per message, or very often?

…On Mon, 20 Feb 2023 at 16:02, h0jeZvgoxFepBQ2C ***@***.***> wrote:

It's also strange that there seem to be no persistence operations, even though, as far as I've read, streams are inherently durable and lazy?

[image: Screenshot 2023-02-20 at 17 01 57]

<https://user-images.githubusercontent.com/10075/220153667-5482d1b1-3814-4a39-852b-58b17d5f6869.png>

[image: Screenshot 2023-02-20 at 17 02 38]

<https://user-images.githubusercontent.com/10075/220153807-e2e61250-cc9c-416c-a011-8f8e121f938e.png>


--

*Karl Nilsson*

-

OK, I have a suspicion as to what it could be. It may be that with your pattern of use we end up creating a lot of writer de-duplication IDs, which would show up as binary data. These IDs are only trimmed when a stream segment fills up, which would not happen very often if the throughput is low.

I will take a look to see if this is the case, but a quick look at the code suggests it is.

What you can do for now is to adjust your publisher to retain a long-lived channel and connection rather than creating a new one for each message. You could also configure a smaller max segment size for these streams.
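The long-lived publisher recommended here can be sketched as a small wrapper. It opens one channel lazily and reuses it for every message, instead of connecting, publishing and closing each time. This is a client-agnostic sketch: open_channel is a hypothetical factory you would implement with your AMQP client. With pika, for instance, it could create a BlockingConnection once and return an object whose publish method calls basic_publish on that connection's channel.

```python
from typing import Callable, Optional, Protocol


class Channel(Protocol):
    """Minimal interface this sketch needs from a client channel (hypothetical)."""

    def publish(self, body: bytes) -> None: ...

    def close(self) -> None: ...


class LongLivedPublisher:
    """Opens a channel on first use and reuses it for every later publish."""

    def __init__(self, open_channel: Callable[[], Channel]) -> None:
        self._open_channel = open_channel
        self._channel: Optional[Channel] = None
        self.opened = 0  # number of channels opened so far

    def publish(self, body: bytes) -> None:
        if self._channel is None:
            self._channel = self._open_channel()
            self.opened += 1
        try:
            self._channel.publish(body)
        except ConnectionError:
            # Forget the broken channel so the next publish opens a fresh one.
            self._channel = None
            raise

    def close(self) -> None:
        if self._channel is not None:
            self._channel.close()
            self._channel = None
```

Create one LongLivedPublisher at application start-up and call close() only on shutdown. Ten publishes then reuse a single channel instead of opening ten connections.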

…On Mon, 20 Feb 2023 at 16:22, h0jeZvgoxFepBQ2C ***@***.***> wrote:

We are using RabbitMQ almost as a read-only websocket connection (so only one publisher and many read-only consumers).

The publisher connects, publishes and then closes the channel and connection again correctly. In general we don't have many messages (about 10 published messages per hour), so it shouldn't be related to these connections?


--

*Karl Nilsson*

-

Yes, you can update it with a policy, but it may not take effect until the next time the stream is restarted.

The best option is to retain a long-lived channel/connection.
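As a sketch of the policy route, the management plugin's HTTP API accepts PUT /api/policies/{vhost}/{name}. The example below only builds the request. The policy name, name pattern and credentials are placeholders, and it assumes your version accepts the stream-max-segment-size-bytes policy key. Keep in mind that only one policy applies to a queue at a time. If another policy already matches these streams, add the key to that policy instead.

```python
import base64
import json
import urllib.parse
import urllib.request


def build_policy_request(base_url, vhost, name, pattern, segment_bytes,
                         user, password):
    """Build (but do not send) a PUT request that creates or updates a policy."""
    path = "/api/policies/{}/{}".format(
        urllib.parse.quote(vhost, safe=""),  # the default vhost "/" becomes %2F
        urllib.parse.quote(name, safe=""),
    )
    body = {
        "pattern": pattern,  # regular expression matched against queue names
        "definition": {"stream-max-segment-size-bytes": segment_bytes},
        "apply-to": "queues",
        "priority": 0,
    }
    credentials = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        base_url.rstrip("/") + path,
        data=json.dumps(body).encode(),
        method="PUT",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {credentials}",
        },
    )


# Placeholder values: 4 MB segments for streams whose names start with "stream.".
request = build_policy_request("http://localhost:15672", "/", "stream-segments",
                               r"^stream\.", 4_000_000, "guest", "guest")
# urllib.request.urlopen(request)  # uncomment to actually send it
```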

…On Mon, 20 Feb 2023 at 16:39, h0jeZvgoxFepBQ2C ***@***.***> wrote:

Oh OK, it sounds great that you may have an idea about this. Any recommendations for the segment size? As I mentioned, we don't have that many updates, so I guess the publish traffic is at most something like 10 MB per hour.

Do you mean this configuration key: x-stream-max-segment-size-bytes?


--

*Karl Nilsson*
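The explanation above can be put into rough numbers. De-duplication IDs are only trimmed when a segment fills, so the fill time bounds how long they can pile up. This back-of-the-envelope sketch makes two assumptions. First, the default segment size is 500,000,000 bytes; check the streams documentation for your version. Second, the whole ~10 MB/hour estimate quoted above goes into a single stream. Spread across many streams, each one fills even more slowly.

```python
DEFAULT_SEGMENT_BYTES = 500_000_000  # assumed default for x-stream-max-segment-size-bytes


def hours_to_fill(segment_bytes: int, bytes_per_hour: float) -> float:
    """Hours until one segment fills at a steady publish rate into one stream."""
    if bytes_per_hour <= 0:
        raise ValueError("bytes_per_hour must be positive")
    return segment_bytes / bytes_per_hour


rate = 10_000_000  # ~10 MB/hour, the rough upper estimate quoted above
for size in (DEFAULT_SEGMENT_BYTES, 4_000_000):
    print(f"{size:,} byte segments: about {hours_to_fill(size, rate):.1f} hours to fill")
```

At 10 MB/hour, a default-sized segment takes about 50 hours to fill. A 4 MB segment fills in about 0.4 hours (24 minutes), so smaller segments would let the IDs be trimmed far more often.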

-

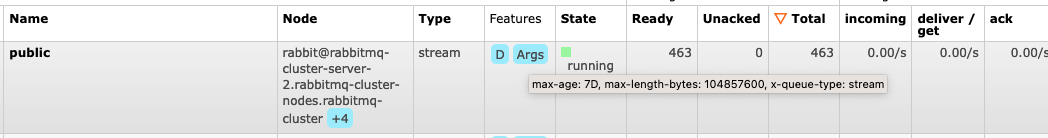

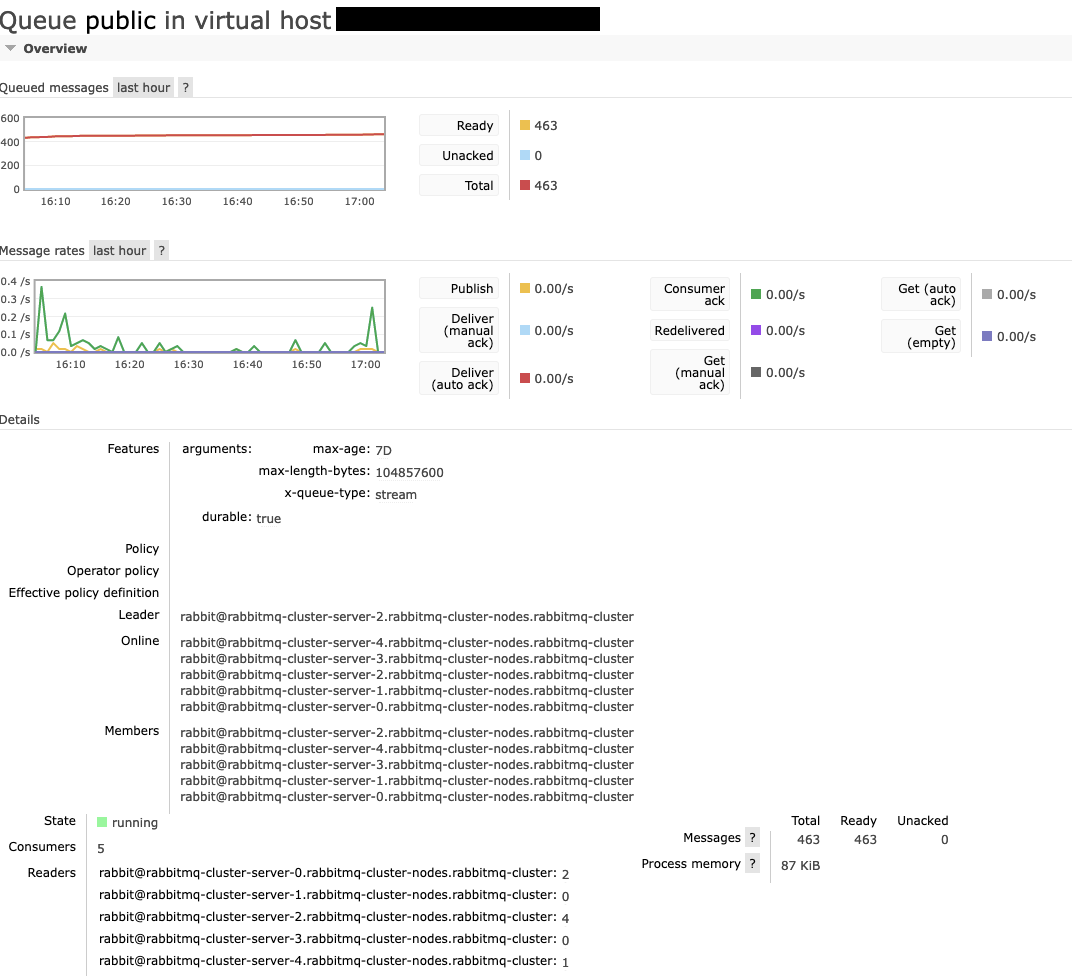

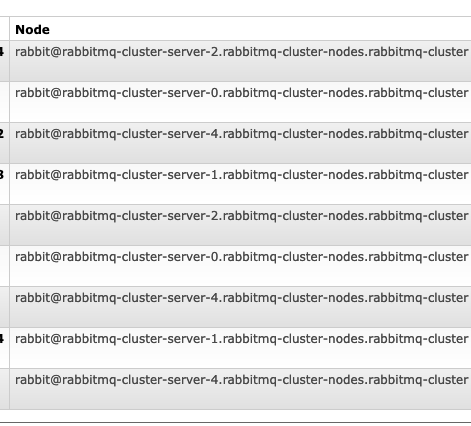

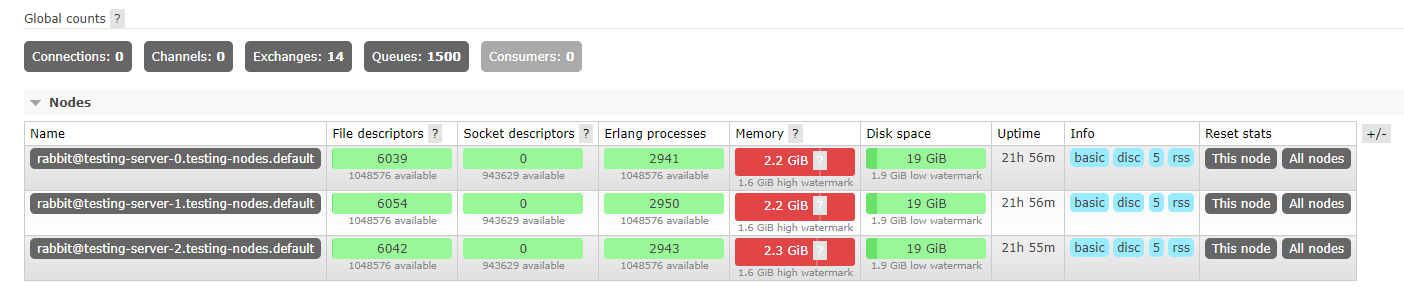

Another strange thing: it looks like this is happening on only 2 of the 5 nodes (server 0 and server 2), even though there are connections to all the other nodes too?

-

It's the stream leader node that hangs on to these IDs, not the connection nodes.

…On Mon, 20 Feb 2023 at 17:04, h0jeZvgoxFepBQ2C ***@***.***> wrote:

[image: Screenshot 2023-02-20 at 18 03 06]

<https://user-images.githubusercontent.com/10075/220165923-42dc1105-bcd2-4256-b6f2-ba0e38d24d45.png>

The strange thing is also that it looks like this is happening only on 2 of the 5 nodes (server 0 and 2), whereas connections are to all others too?

[image: Screenshot 2023-02-20 at 18 03 56]

<https://user-images.githubusercontent.com/10075/220166050-6c8fe701-099f-412e-9a0d-a78773562f70.png>


--

*Karl Nilsson*

-

That said, I just did a test, and my scenario does result in increased memory use, but not for binaries, so there is something else going on here.

Can you tell me more about your consumers? How do they connect, are they long-running, etc.? Also, what message sizes are used?

…On Mon, 20 Feb 2023 at 17:29, Karl Nilsson ***@***.***> wrote:

It's the stream leader node that hangs on to these IDs, not the connection nodes.


--

*Karl Nilsson*

-

I recreated our cluster and set the segment size to 4 MB, but unfortunately it didn't change anything: only 10 messages have been published and memory is already growing and growing.

-

Another strange thing is that I only have 2 connections, not more. So I'm not sure it's really related to our publisher connecting. Even with our connect-and-reconnect pattern, only 10 connections were made in the last hour. I don't think 10 single sequential connections would lead to such a memory leak?

-

Is there any chance you could provide an application, as small as possible, that reproduces the problem so we can test it here?

On Mon, 20 Feb 2023 at 20:14, h0jeZvgoxFepBQ2C ***@***.***> wrote:

Another strange thing is that I only have 2 connections, not more. So I'm not sure if it's really related to our publisher connecting. Since we connect and reconnect, only 10 connections happened in the last hour. I don't think that 10 single sequential connections lead to such a memory leak?


--

*Karl Nilsson*

-

If your version of RabbitMQ ships with the Also outputs of You can also try

-

Did you try rabbitmqctl force_gc?

…On Tue, 21 Feb 2023 at 10:22, h0jeZvgoxFepBQ2C ***@***.***> wrote:

I already tried forcing garbage collection, but it didn't change anything.

I also removed the ws binary frames setting and recreated everything, but it didn't change anything, so I guess it's not related to this option.

Memory breakdown:

Node 0

rabbitmq-cluster-server-0:/$ rabbitmq-diagnostics memory_breakdown

Reporting memory breakdown on node ***@***.***

binary: 7.9682 gb (91.69%)

other_proc: 0.2033 gb (2.34%)

allocated_unused: 0.1656 gb (1.91%)

other_ets: 0.0909 gb (1.05%)

stream_queue_replica_reader_procs: 0.0779 gb (0.9%)

code: 0.0381 gb (0.44%)

stream_queue_procs: 0.0366 gb (0.42%)

plugins: 0.0357 gb (0.41%)

other_system: 0.0317 gb (0.36%)

mgmt_db: 0.0189 gb (0.22%)

mnesia: 0.0179 gb (0.21%)

msg_index: 0.0025 gb (0.03%)

atom: 0.0015 gb (0.02%)

metrics: 0.0014 gb (0.02%)

connection_other: 0.0002 gb (0.0%)

connection_readers: 0.0 gb (0.0%)

quorum_ets: 0.0 gb (0.0%)

connection_channels: 0.0 gb (0.0%)

quorum_queue_procs: 0.0 gb (0.0%)

quorum_queue_dlx_procs: 0.0 gb (0.0%)

connection_writers: 0.0 gb (0.0%)

queue_procs: 0.0 gb (0.0%)

queue_slave_procs: 0.0 gb (0.0%)

stream_queue_coordinator_procs: 0.0 gb (0.0%)

reserved_unallocated: 0.0 gb (0.0%)

Node 1

rabbitmq-cluster-server-1:/$ rabbitmq-diagnostics memory_breakdown

Reporting memory breakdown on node ***@***.***

binary: 7.4785 gb (90.95%)

other_proc: 0.1916 gb (2.33%)

allocated_unused: 0.1551 gb (1.89%)

other_ets: 0.1211 gb (1.47%)

stream_queue_replica_reader_procs: 0.1036 gb (1.26%)

code: 0.0381 gb (0.46%)

stream_queue_procs: 0.0367 gb (0.45%)

other_system: 0.0338 gb (0.41%)

plugins: 0.0295 gb (0.36%)

mnesia: 0.0179 gb (0.22%)

mgmt_db: 0.0107 gb (0.13%)

msg_index: 0.0025 gb (0.03%)

metrics: 0.0015 gb (0.02%)

atom: 0.0015 gb (0.02%)

connection_other: 0.0008 gb (0.01%)

quorum_ets: 0.0 gb (0.0%)

queue_procs: 0.0 gb (0.0%)

quorum_queue_procs: 0.0 gb (0.0%)

quorum_queue_dlx_procs: 0.0 gb (0.0%)

connection_readers: 0.0 gb (0.0%)

connection_writers: 0.0 gb (0.0%)

connection_channels: 0.0 gb (0.0%)

queue_slave_procs: 0.0 gb (0.0%)

stream_queue_coordinator_procs: 0.0 gb (0.0%)

reserved_unallocated: 0.0 gb (0.0%)

Node 2

rabbitmq-cluster-server-2:/$ rabbitmq-diagnostics memory_breakdown

Reporting memory breakdown on node ***@***.***

binary: 8.4574 gb (91.55%)

other_proc: 0.1975 gb (2.14%)

allocated_unused: 0.1769 gb (1.91%)

other_ets: 0.1337 gb (1.45%)

stream_queue_replica_reader_procs: 0.0589 gb (0.64%)

plugins: 0.0552 gb (0.6%)

code: 0.0381 gb (0.41%)

stream_queue_procs: 0.0345 gb (0.37%)

other_system: 0.0319 gb (0.35%)

mgmt_db: 0.0301 gb (0.33%)

mnesia: 0.0179 gb (0.19%)

msg_index: 0.0025 gb (0.03%)

atom: 0.0015 gb (0.02%)

metrics: 0.0012 gb (0.01%)

connection_other: 0.0003 gb (0.0%)

connection_readers: 0.0 gb (0.0%)

quorum_ets: 0.0 gb (0.0%)

connection_channels: 0.0 gb (0.0%)

connection_writers: 0.0 gb (0.0%)

quorum_queue_procs: 0.0 gb (0.0%)

quorum_queue_dlx_procs: 0.0 gb (0.0%)

queue_procs: 0.0 gb (0.0%)

queue_slave_procs: 0.0 gb (0.0%)

stream_queue_coordinator_procs: 0.0 gb (0.0%)

reserved_unallocated: 0.0 gb (0.0%)

Node 3

rabbitmq-cluster-server-3:/$ rabbitmq-diagnostics memory_breakdown

Reporting memory breakdown on node ***@***.***

binary: 7.7231 gb (90.03%)

other_proc: 0.203 gb (2.37%)

allocated_unused: 0.1799 gb (2.1%)

other_ets: 0.172 gb (2.01%)

stream_queue_replica_reader_procs: 0.0958 gb (1.12%)

plugins: 0.0458 gb (0.53%)

code: 0.0381 gb (0.44%)

stream_queue_procs: 0.0374 gb (0.44%)

other_system: 0.0329 gb (0.38%)

mgmt_db: 0.026 gb (0.3%)

mnesia: 0.0179 gb (0.21%)

msg_index: 0.0025 gb (0.03%)

atom: 0.0015 gb (0.02%)

metrics: 0.0014 gb (0.02%)

connection_other: 0.0007 gb (0.01%)

connection_readers: 0.0 gb (0.0%)

quorum_ets: 0.0 gb (0.0%)

connection_channels: 0.0 gb (0.0%)

quorum_queue_procs: 0.0 gb (0.0%)

quorum_queue_dlx_procs: 0.0 gb (0.0%)

connection_writers: 0.0 gb (0.0%)

queue_procs: 0.0 gb (0.0%)

queue_slave_procs: 0.0 gb (0.0%)

stream_queue_coordinator_procs: 0.0 gb (0.0%)

reserved_unallocated: 0.0 gb (0.0%)

Node 4

rabbitmq-cluster-server-4:/$ rabbitmq-diagnostics memory_breakdown

Reporting memory breakdown on node ***@***.***

binary: 8.0914 gb (91.7%)

allocated_unused: 0.2626 gb (2.98%)

other_proc: 0.1243 gb (1.41%)

other_ets: 0.0844 gb (0.96%)

stream_queue_replica_reader_procs: 0.0834 gb (0.95%)

code: 0.0381 gb (0.43%)

stream_queue_procs: 0.0348 gb (0.39%)

plugins: 0.0335 gb (0.38%)

other_system: 0.0329 gb (0.37%)

mnesia: 0.0179 gb (0.2%)

mgmt_db: 0.014 gb (0.16%)

msg_index: 0.0025 gb (0.03%)

atom: 0.0015 gb (0.02%)

metrics: 0.0014 gb (0.02%)

connection_other: 0.0005 gb (0.01%)

connection_readers: 0.0001 gb (0.0%)

quorum_ets: 0.0 gb (0.0%)

queue_procs: 0.0 gb (0.0%)

connection_channels: 0.0 gb (0.0%)

connection_writers: 0.0 gb (0.0%)

quorum_queue_procs: 0.0 gb (0.0%)

quorum_queue_dlx_procs: 0.0 gb (0.0%)

queue_slave_procs: 0.0 gb (0.0%)

stream_queue_coordinator_procs: 0.0 gb (0.0%)

reserved_unallocated: 0.0 gb (0.0%)
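With five nodes, it helps to compare these breakdowns mechanically. This minimal sketch parses the "category: N gb (P%)" lines printed by rabbitmq-diagnostics memory_breakdown, as in the output above, and ranks the categories. The regular expression is written against this output only and may need adjusting for other units or versions. In all five breakdowns above, binary ranks first at just over 90% of the reported memory.

```python
import re

# Matches lines such as "binary: 7.9682 gb (91.69%)".
LINE_RE = re.compile(r"^\s*([a-z_]+): ([\d.]+) gb \(([\d.]+)%\)\s*$")


def parse_memory_breakdown(text: str) -> dict:
    """Map each category to a (gigabytes, percent) tuple."""
    breakdown = {}
    for line in text.splitlines():
        match = LINE_RE.match(line)
        if match:
            name, gigabytes, percent = match.groups()
            breakdown[name] = (float(gigabytes), float(percent))
    return breakdown


def top_categories(breakdown: dict, n: int = 3) -> list:
    """Return the n largest categories, biggest first."""
    return sorted(breakdown.items(), key=lambda item: item[1][0], reverse=True)[:n]


node_0 = """
binary: 7.9682 gb (91.69%)
other_proc: 0.2033 gb (2.34%)
allocated_unused: 0.1656 gb (1.91%)
other_ets: 0.0909 gb (1.05%)
"""
for name, (gigabytes, percent) in top_categories(parse_memory_breakdown(node_0)):
    print(f"{name}: {gigabytes} gb ({percent}%)")
```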

The diagnostics report is a bit hard to publish, since it contains a lot of customer names which I'm required to keep private.

But in general, when I enable debug logging, I can see many messages like this:

2023-02-21 10:14:22.141661+00:00 <0.1551.1005> osiris: initialising reader. Spec: next

2023-02-21 10:14:25.938101+00:00 <0.11389.988> accepting AMQP connection <0.11389.988> (10.244.47.54:58210 -> 10.244.47.72:5672)

2023-02-21 10:14:25.938311+00:00 <0.11389.988> closing AMQP connection <0.11389.988> (10.244.47.54:58210 -> 10.244.47.72:5672):

2023-02-21 10:14:25.938311+00:00 <0.11389.988> connection_closed_with_no_data_received

2023-02-21 10:14:25.938678+00:00 <0.11391.988> Closing all channels from connection '10.244.47.54:58210 -> 10.244.47.72:5672' because it has been closed

2023-02-21 10:14:26.524878+00:00 <0.12018.988> Authentication using an OAuth 2/JWT token failed: provided token is invalid

2023-02-21 10:14:26.525008+00:00 <0.12018.988> User 'myadmin' failed authenticatation by backend rabbit_auth_backend_oauth2

2023-02-21 10:14:26.525082+00:00 <0.12018.988> User 'myadmin' authenticated successfully by backend rabbit_auth_backend_internal

2023-02-21 10:14:28.529266+00:00 <0.11477.1000> accepting AMQP connection <0.11477.1000> (10.244.48.46:35448 -> 10.244.48.103:5672)

2023-02-21 10:14:28.529421+00:00 <0.11477.1000> closing AMQP connection <0.11477.1000> (10.244.48.46:35448 -> 10.244.48.103:5672):

2023-02-21 10:14:28.529421+00:00 <0.11477.1000> connection_closed_with_no_data_received

2023-02-21 10:14:28.530113+00:00 <0.11481.1000> Closing all channels from connection '10.244.48.46:35448 -> 10.244.48.103:5672' because it has been closed

2023-02-21 10:14:29.214553+00:00 <0.27168.1014> accepting AMQP connection <0.27168.1014> (10.244.49.174:52152 -> 10.244.49.143:5672)

2023-02-21 10:14:29.214708+00:00 <0.27168.1014> closing AMQP connection <0.27168.1014> (10.244.49.174:52152 -> 10.244.49.143:5672):

2023-02-21 10:14:29.214708+00:00 <0.27168.1014> connection_closed_with_no_data_received

2023-02-21 10:14:29.215284+00:00 <0.27173.1014> Closing all channels from connection '10.244.49.174:52152 -> 10.244.49.143:5672' because it has been closed

2023-02-21 10:14:30.101644+00:00 <0.12697.1005> accepting AMQP connection <0.12697.1005> (10.244.45.187:34544 -> 10.244.45.188:5672)

2023-02-21 10:14:30.101801+00:00 <0.12697.1005> closing AMQP connection <0.12697.1005> (10.244.45.187:34544 -> 10.244.45.188:5672):

2023-02-21 10:14:30.101801+00:00 <0.12697.1005> connection_closed_with_no_data_received

2023-02-21 10:14:30.102272+00:00 <0.12700.1005> Closing all channels from connection '10.244.45.187:34544 -> 10.244.45.188:5672' because it has been closed

2023-02-21 10:14:31.094929+00:00 <0.32559.991> accepting AMQP connection <0.32559.991> (10.244.47.233:52442 -> 10.244.47.235:5672)

2023-02-21 10:14:31.095125+00:00 <0.32559.991> closing AMQP connection <0.32559.991> (10.244.47.233:52442 -> 10.244.47.235:5672):

2023-02-21 10:14:31.095125+00:00 <0.32559.991> connection_closed_with_no_data_received

2023-02-21 10:14:31.095624+00:00 <0.32564.991> Closing all channels from connection '10.244.47.233:52442 -> 10.244.47.235:5672' because it has been closed

2023-02-21 10:14:31.533355+00:00 <0.15800.1000> Authentication using an OAuth 2/JWT token failed: provided token is invalid

2023-02-21 10:14:31.533450+00:00 <0.15800.1000> User 'myadmin' failed authenticatation by backend rabbit_auth_backend_oauth2

2023-02-21 10:14:31.533666+00:00 <0.15800.1000> User 'myadmin' authenticated successfully by backend rabbit_auth_backend_internal

2023-02-21 10:14:35.938988+00:00 <0.21267.988> accepting AMQP connection <0.21267.988> (10.244.47.54:44842 -> 10.244.47.72:5672)

2023-02-21 10:14:35.939200+00:00 <0.21267.988> closing AMQP connection <0.21267.988> (10.244.47.54:44842 -> 10.244.47.72:5672):

2023-02-21 10:14:35.939200+00:00 <0.21267.988> connection_closed_with_no_data_received

2023-02-21 10:14:35.939628+00:00 <0.21271.988> Closing all channels from connection '10.244.47.54:44842 -> 10.244.47.72:5672' because it has been closed

2023-02-21 10:14:36.533006+00:00 <0.2245.1015> Authentication using an OAuth 2/JWT token failed: provided token is invalid

2023-02-21 10:14:36.533112+00:00 <0.2245.1015> User 'myadmin' failed authenticatation by backend rabbit_auth_backend_oauth2

2023-02-21 10:14:36.533206+00:00 <0.2245.1015> User 'myadmin' authenticated successfully by backend rabbit_auth_backend_internal

2023-02-21 10:14:38.528889+00:00 <0.23580.1000> accepting AMQP connection <0.23580.1000> (10.244.48.46:48284 -> 10.244.48.103:5672)

2023-02-21 10:14:38.529088+00:00 <0.23580.1000> closing AMQP connection <0.23580.1000> (10.244.48.46:48284 -> 10.244.48.103:5672):

2023-02-21 10:14:38.529088+00:00 <0.23580.1000> connection_closed_with_no_data_received

2023-02-21 10:14:38.529765+00:00 <0.23587.1000> Closing all channels from connection '10.244.48.46:48284 -> 10.244.48.103:5672' because it has been closed

2023-02-21 10:14:39.214338+00:00 <0.5888.1015> accepting AMQP connection <0.5888.1015> (10.244.49.174:37432 -> 10.244.49.143:5672)

2023-02-21 10:14:39.214485+00:00 <0.5888.1015> closing AMQP connection <0.5888.1015> (10.244.49.174:37432 -> 10.244.49.143:5672):

2023-02-21 10:14:39.214485+00:00 <0.5888.1015> connection_closed_with_no_data_received

2023-02-21 10:14:39.214781+00:00 <0.5890.1015> Closing all channels from connection '10.244.49.174:37432 -> 10.244.49.143:5672' because it has been closed

2023-02-21 10:14:40.100060+00:00 <0.23157.1005> accepting AMQP connection <0.23157.1005> (10.244.45.187:50550 -> 10.244.45.188:5672)

2023-02-21 10:14:40.100267+00:00 <0.23157.1005> closing AMQP connection <0.23157.1005> (10.244.45.187:50550 -> 10.244.45.188:5672):

2023-02-21 10:14:40.100267+00:00 <0.23157.1005> connection_closed_with_no_data_received

2023-02-21 10:14:40.100593+00:00 <0.23159.1005> Closing all channels from connection '10.244.45.187:50550 -> 10.244.45.188:5672' because it has been closed

2023-02-21 10:14:41.094479+00:00 <0.11513.992> accepting AMQP connection <0.11513.992> (10.244.47.233:52058 -> 10.244.47.235:5672)

2023-02-21 10:14:41.094620+00:00 <0.11513.992> closing AMQP connection <0.11513.992> (10.244.47.233:52058 -> 10.244.47.235:5672):

2023-02-21 10:14:41.094620+00:00 <0.11513.992> connection_closed_with_no_data_received

2023-02-21 10:14:41.094876+00:00 <0.11132.992> Closing all channels from connection '10.244.47.233:52058 -> 10.244.47.235:5672' because it has been closed

2023-02-21 10:14:41.541248+00:00 <0.23580.1005> Authentication using an OAuth 2/JWT token failed: provided token is invalid

2023-02-21 10:14:41.541367+00:00 <0.23580.1005> User 'myadmin' failed authenticatation by backend rabbit_auth_backend_oauth2

2023-02-21 10:14:41.541464+00:00 <0.23580.1005> User 'myadmin' authenticated successfully by backend rabbit_auth_backend_internal

2023-02-21 10:14:45.938707+00:00 <0.62.989> accepting AMQP connection <0.62.989> (10.244.47.54:35626 -> 10.244.47.72:5672)

2023-02-21 10:14:45.938886+00:00 <0.62.989> closing AMQP connection <0.62.989> (10.244.47.54:35626 -> 10.244.47.72:5672):

2023-02-21 10:14:45.938886+00:00 <0.62.989> connection_closed_with_no_data_received

2023-02-21 10:14:45.939219+00:00 <0.64.989> Closing all channels from connection '10.244.47.54:35626 -> 10.244.47.72:5672' because it has been closed

2023-02-21 10:14:46.094383+00:00 <0.31416.1000> Closing all channels from connection '10.244.48.211:38040 -> 10.244.48.103:15674' because it has been closed

2023-02-21 10:14:46.542348+00:00 <0.18947.992> Authentication using an OAuth 2/JWT token failed: provided token is invalid

2023-02-21 10:14:46.542466+00:00 <0.18947.992> User 'myadmin' failed authenticatation by backend rabbit_auth_backend_oauth2

2023-02-21 10:14:46.542595+00:00 <0.18947.992> User 'myadmin' authenticated successfully by backend rabbit_auth_backend_internal

2023-02-21 10:14:48.529033+00:00 <0.32376.1000> accepting AMQP connection <0.32376.1000> (10.244.48.46:54630 -> 10.244.48.103:5672)

2023-02-21 10:14:48.529211+00:00 <0.32376.1000> closing AMQP connection <0.32376.1000> (10.244.48.46:54630 -> 10.244.48.103:5672):

2023-02-21 10:14:48.529211+00:00 <0.32376.1000> connection_closed_with_no_data_received

2023-02-21 10:14:48.529697+00:00 <0.32379.1000> Closing all channels from connection '10.244.48.46:54630 -> 10.244.48.103:5672' because

Unfortunately, it seems my version doesn't ship with rabbitmq-streams restart_stream:

rabbitmq-cluster-server-4:/$ rabbitmq-streams restart_stream

Command 'restart_stream' not found.

Usage

rabbitmq-streams [--node <node>] [--timeout <timeout>] [--longnames] [--quiet] <command> [<command options>]

Available commands:

Replication:

add_replica Adds a stream queue replica on the given node.

delete_replica Removes a stream queue replica on the given node.

Monitoring, observability and health checks:

stream_status Displays the status of a stream

Policies:

set_stream_retention_policy Sets the retention policy of a stream queue

Use 'rabbitmq-streams help <command>' to learn more about a specific command

I can think of a few things that could trigger this:

- a Kubernetes problem where network connections are terminated before they are closed correctly
- a Kubernetes problem with the readiness check (but since there is no liveness check, I guess this is not the case)
- Web STOMP websocket connections which are not closed correctly (since they keep trying to reconnect if a connection cannot be established)
- or it's something different...


--

*Karl Nilsson*
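In the debug log excerpt quoted above, an AMQP connection is accepted and immediately closed with connection_closed_with_no_data_received roughly every second. Each source address seems to come back about every 10 seconds. That pattern would fit something like a periodic TCP check (compare the readiness-check idea in the list above), but this is an interpretation the thread does not confirm. The following minimal sketch checks the pattern over a longer log. It pairs the "accepting" and "no data" lines by Erlang process id and groups them by source IP. The regular expressions are written against the log format shown here and are assumptions for other versions.

```python
import re
from collections import defaultdict
from datetime import datetime

# "<timestamp> <pid> accepting AMQP connection <pid> (<source ip>:<port> -> ..."
ACCEPT_RE = re.compile(
    r"^(\S+ \S+) <([\d.]+)> accepting AMQP connection <[\d.]+> \(([\d.]+):\d+ -> "
)
# "<timestamp> <pid> connection_closed_with_no_data_received"
NO_DATA_RE = re.compile(r"^\S+ \S+ <([\d.]+)> connection_closed_with_no_data_received")


def empty_connections_by_source(lines):
    """Group the accept times of connections closed without data by source IP."""
    accepted = {}  # Erlang pid -> (accept time, source ip)
    by_source = defaultdict(list)
    for line in lines:
        match = ACCEPT_RE.match(line)
        if match:
            timestamp, pid, source_ip = match.groups()
            accepted[pid] = (datetime.fromisoformat(timestamp), source_ip)
            continue
        match = NO_DATA_RE.match(line)
        if match and match.group(1) in accepted:
            accepted_at, source_ip = accepted.pop(match.group(1))
            by_source[source_ip].append(accepted_at)
    return dict(by_source)


def intervals_seconds(timestamps):
    """Seconds between consecutive timestamps."""
    return [(later - earlier).total_seconds()
            for earlier, later in zip(timestamps, timestamps[1:])]
```

An open log file can be passed directly as lines. Sources with many entries and near-constant intervals are the candidates to match against pod IPs or probe configuration.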

-

Another command that may provide some info:

You only need to run this one on one node.

-

@h0jeZvgoxFepBQ2C, on nodes that are experiencing continuous memory growth, could you please run this and attach the generated Thanks.

-

I've deployed a cluster with idle streams and cannot reproduce this. Initially there were 3 nodes and 100 streams; later I went up to 2500 streams, and then I scaled the cluster to 7 nodes. Memory usage remains stable (all nodes are scraped by Prometheus). There is not much we can do without steps to reproduce.


-

No, it is not (if you add it, it is propagated, but not with the correct type). We will add it.

-

OK, so I tried to delete the vhosts again, but it's not working... In the logs I can only see the following timeouts:

-

You need a full stop after recon:bin_leak(10).

…On Wed, 5 Apr 2023 at 09:55, Tatiana Neuer ***@***.***> wrote:

Just to confirm: you are saying that you see this problem without any client connections, correct? Just declare and wait?

Exactly.

I repeated the steps and waited for the memory usage to stabilize (higher than the watermark), then I ran the command, but it does not print anything (same on the other nodes):

$ kubectl exec --stdin --tty testing-server-0 -- /bin/bash

Defaulted container "rabbitmq" out of: rabbitmq, setup-container (init)

***@***.***:/$ rabbitmq-diagnostics remote_shell

Starting an interactive Erlang shell on node ***@***.*** Press 'Ctrl+G' then 'q' to exit.

Erlang/OTP 25 [erts-13.2] [source] [64-bit] [smp:4:3] [ds:4:3:10] [async-threads:1] [jit:ns]

Eshell V13.2 (abort with ^G)

***@***.***)1> recon:bin_leak(20)

***@***.***)1>

The memory usage did not drop after executing the command.


--

*Karl Nilsson*

-

Is this the same shell session? Try it again.

…On Wed, 5 Apr 2023 at 10:08, Tatiana Neuer ***@***.***> wrote:

Adding the full stop triggers a syntax error:

***@***.***)1> recon:bin_leak(20).

* 2:1: syntax error before: recon

***@***.***)1>


--

*Karl Nilsson*

-

We are having the same issue with version 3.11.5. We already have a non-stream topology running on RabbitMQ. We wanted to store some records and replay them afterwards to train algorithms, so I created a stream and bound it to the already existing fanout exchange. Within a minute, RAM usage skyrocketed and the whole Kubernetes cluster became unstable. I deleted the stream to revert things. In the overview section of the RabbitMQ management UI there were 300k ready messages belonging to the stream, even though I had deleted it. I waited for things to stabilize, but they did not, so I restarted RabbitMQ, and then everything went back to normal.

-

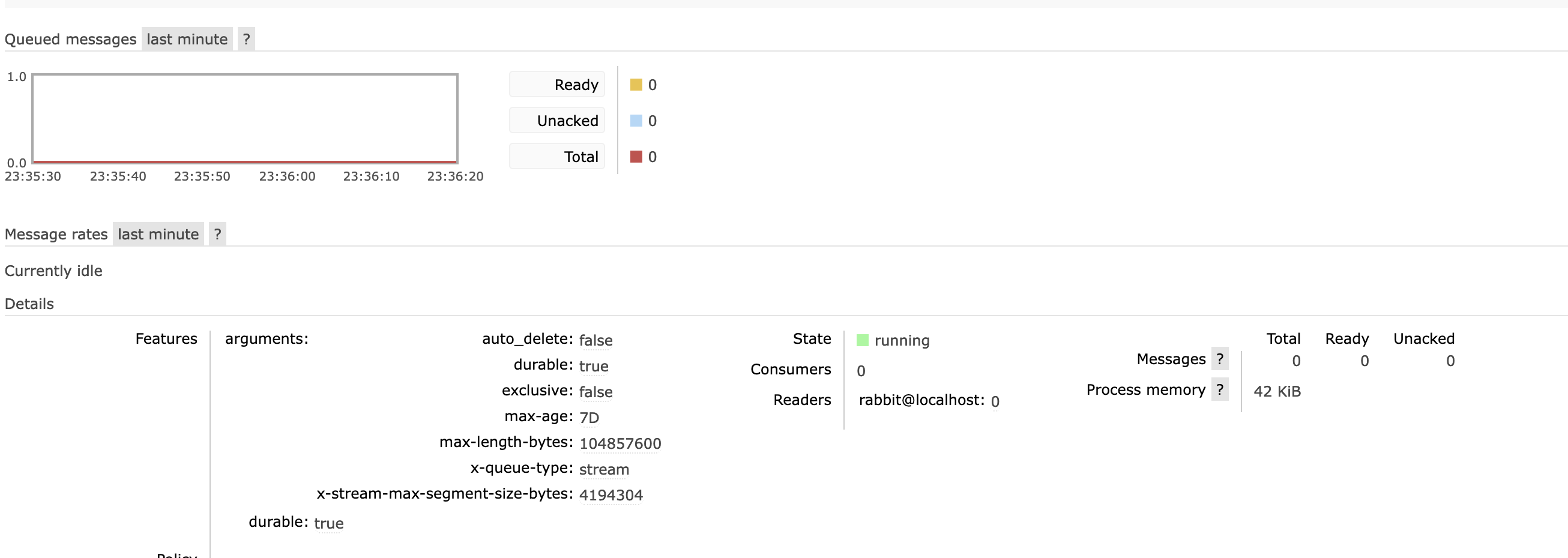

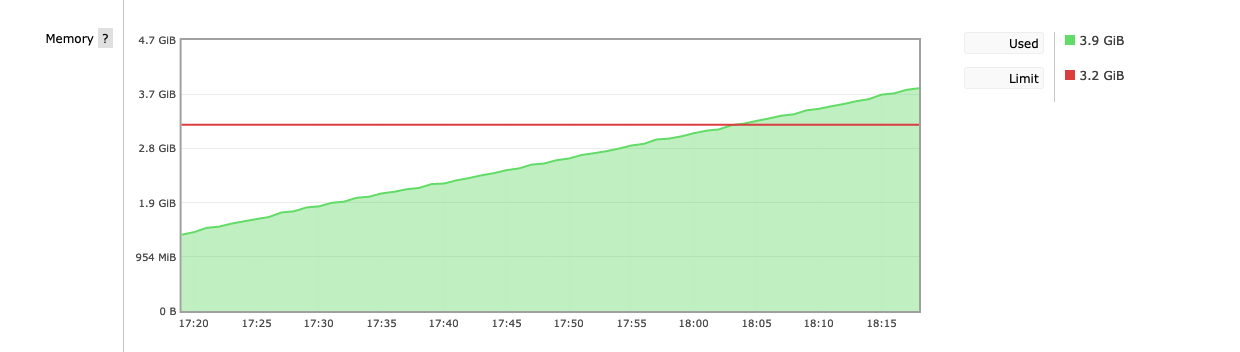

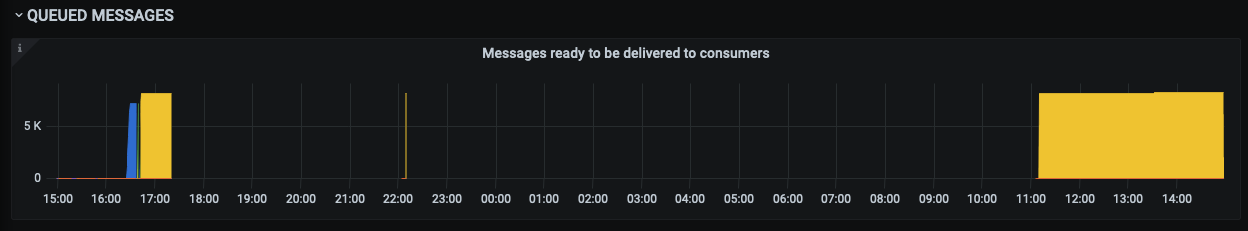

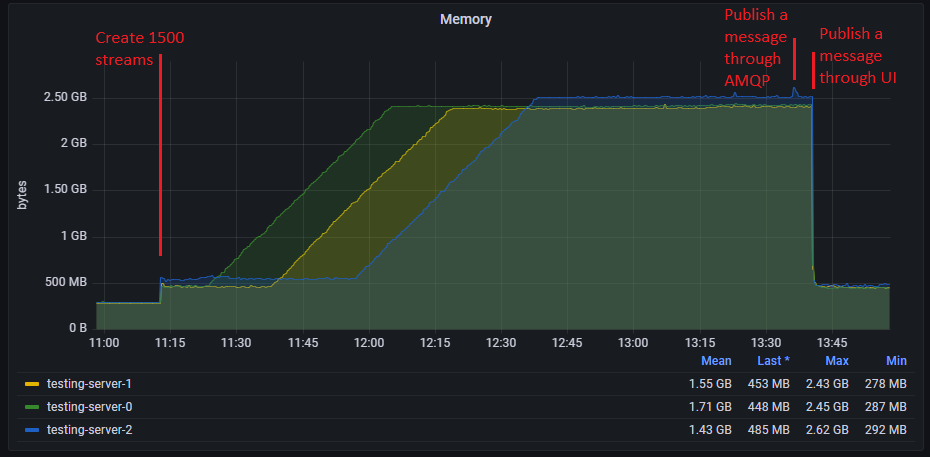

To summarize today's findings from another environment with similar behavior:

-

The findings from this and a couple of similar discussions with Kubernetes users have been briefly documented. This discussion now attracts all kinds of "here is my memory footprint profile" comments that are not related to streams, to the kernel page cache, or even to modern versions (3.8 has been out of support for some 15 months now). New discussions should be started instead, so this one is being closed.

-

Thanks to some research by @Kraego, we now know which specific combination of Kubernetes and cgroup versions exhibits this behavior: upgrading to Kubernetes 1.25 with cgroups v2 should make it go away. See kubernetes/kubernetes#43916 (comment) and https://kubernetes.io/blog/2022/08/31/cgroupv2-ga-1-25/#:~:text=cgroup%20v2%20provides%20a%20unified,has%20graduated%20to%20general%20availability
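The following sketch can be run inside the RabbitMQ container. It shows which cgroup version a node is on, and how much of the container's anonymous plus file-backed memory is page cache rather than process memory. It relies on a common heuristic: a cgroup v2 unified hierarchy exposes cgroup.controllers at its root. It then reads memory.stat, where cgroup v2 reports anon and file, and cgroup v1 (under the memory controller directory) reports rss and cache. The /sys/fs/cgroup mount point is an assumption about the container's layout.

```python
from pathlib import Path

CGROUP_ROOT = Path("/sys/fs/cgroup")  # default mount point; an assumption


def cgroup_version(root: Path = CGROUP_ROOT) -> int:
    """Heuristic: the cgroup v2 unified hierarchy has cgroup.controllers at its root."""
    return 2 if (Path(root) / "cgroup.controllers").exists() else 1


def parse_memory_stat(text: str) -> dict:
    """Parse 'key value' lines from a cgroup memory.stat file."""
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1].isdigit():
            stats[parts[0]] = int(parts[1])
    return stats


def page_cache_share(stats: dict, version: int) -> float:
    """Fraction of anonymous + file-backed memory that is page cache."""
    anon_key, file_key = ("anon", "file") if version == 2 else ("rss", "cache")
    anon, cache = stats.get(anon_key, 0), stats.get(file_key, 0)
    total = anon + cache
    return cache / total if total else 0.0


def report(root: Path = CGROUP_ROOT) -> str:
    version = cgroup_version(root)
    # cgroup v1 keeps the memory files under the memory controller's directory.
    stat_file = Path(root) / ("memory.stat" if version == 2 else "memory/memory.stat")
    share = page_cache_share(parse_memory_stat(stat_file.read_text()), version)
    return f"cgroup v{version}: {share:.0%} of anon+file memory is page cache"
```

A high page-cache share alongside a growing container memory graph points toward the kernel page cache. A low share points toward memory held by the RabbitMQ process itself.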
