Replies: 1 comment 6 replies


Please start by upgrading to a supported version. Lots of issues have been fixed since 3.8/3.9.


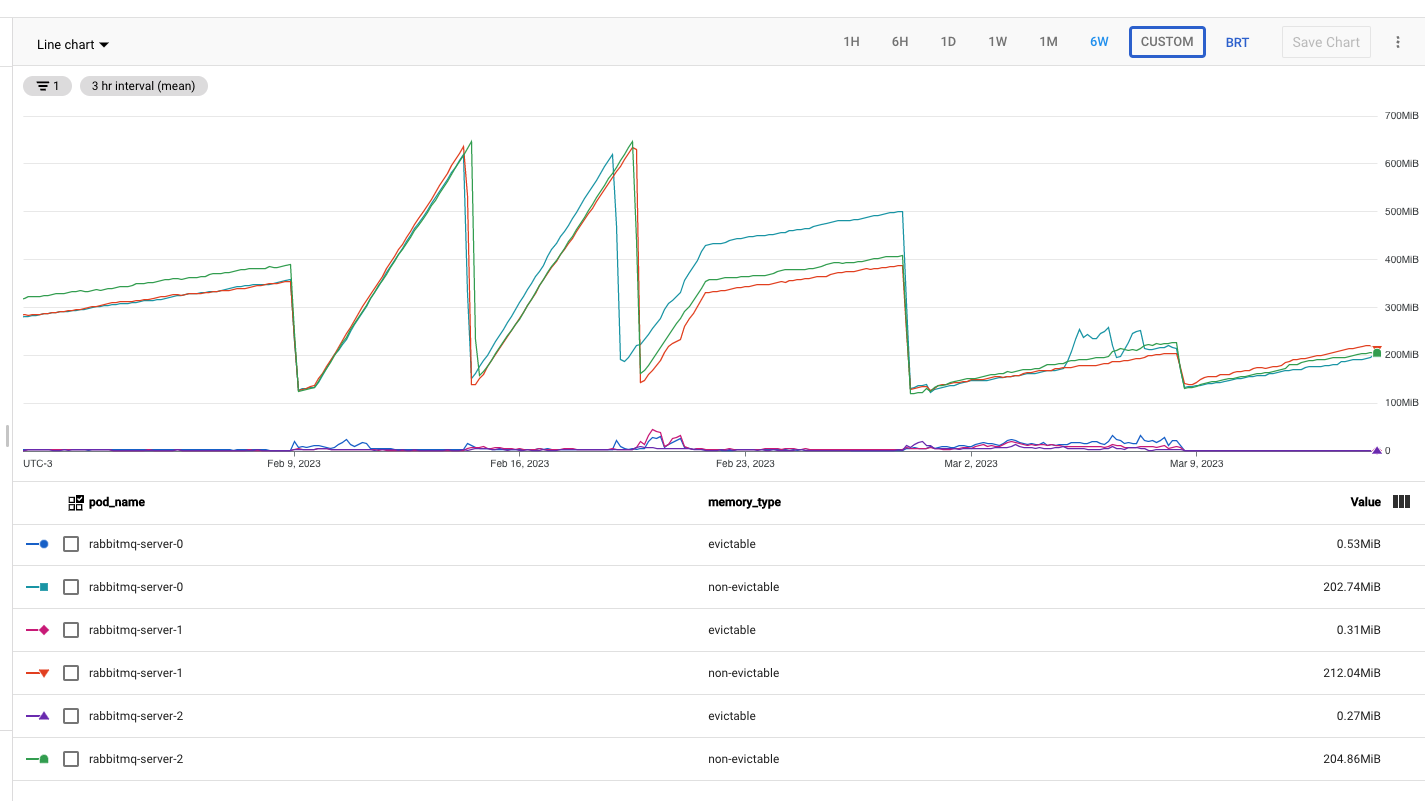

We have been experiencing a continuous increase in the memory usage of the `other_ets` table, which can lead to an OOM issue. We have a cluster of 3 nodes, and every 2 to 3 months we have to perform a restart once usage reaches 50% of the memory available on the server; the restart frees the memory. What could be causing the `other_ets` memory increase? We are using RabbitMQ alongside 5 Celery instances and around 80 MQTT connections. We tried upgrading RabbitMQ from 3.8.12 to 3.11.9, but that did not help.

Logs of memory usage at this moment:

Logs of memory usage on the previous version, 3.8.12 (note: I guess `quorum_ets` also included `other_ets` in the memory report on the previous version):

My suspicion is that MQTT reconnections are the cause of the problem, as our connections are not fully stable and reconnections happen from time to time. We also noticed that when a node was reconnecting every 1 second, memory consumption increased at a faster rate. Is there anything we can do to prevent the increase in memory usage due to the reconnections?
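To compare how fast `other_ets` grows during stable periods versus periods with frequent reconnects, a minimal sketch like the following could be used. It assumes the `other_ets` value is sampled periodically by some external means and passed in as `(timestamp, bytes)` pairs; the function names and the sample figures are hypothetical and are not taken from our actual logs.

```python
from datetime import datetime, timedelta


def growth_rate_bytes_per_day(samples):
    """Least-squares slope of (timestamp, bytes) samples, in bytes per day."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    t0 = samples[0][0]
    # Express each timestamp as fractional days since the first sample.
    xs = [(t - t0).total_seconds() / 86400.0 for t, _ in samples]
    ys = [float(b) for _, b in samples]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    var_x = sum((x - mean_x) ** 2 for x in xs)
    if var_x == 0:
        raise ValueError("samples must span more than one point in time")
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return cov / var_x


def days_until(threshold_bytes, current_bytes, rate_bytes_per_day):
    """Days until the threshold is reached; None if usage is not growing."""
    if rate_bytes_per_day <= 0:
        return None
    return max(0.0, (threshold_bytes - current_bytes) / rate_bytes_per_day)


if __name__ == "__main__":
    # Hypothetical samples: made-up numbers for illustration only.
    start = datetime(2023, 1, 1)
    samples = [
        (start + timedelta(days=d), 1_000_000 + 50_000 * d) for d in range(7)
    ]
    rate = growth_rate_bytes_per_day(samples)
    print(f"growth: {rate:.0f} bytes/day")
    print(f"days to 4 MB: {days_until(4_000_000, samples[-1][1], rate)}")
```

Running this over samples from different time windows would show whether the growth rate really tracks the reconnect frequency, which is what we suspect but have not confirmed.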
