RabbitMQ stream coordinator fails to start after the restore of PVC snapshot backup using Kasten K10 #8060

We cannot suggest anything without full RabbitMQ logs from all nodes.

A node-level backup tool that just stops the node, takes a volume snapshot (or something like that) and starts it again can result in a stream or quorum queue leader re-election. It's also important to understand what exactly this tool does on restore. RabbitMQ nodes assume they can contact their previously known peers, and if that's not the case, they will refuse to boot after a timeout. Specifically, this means that node hostnames must not change.
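One way to check the hostname assumption after a restore is to confirm that every previously known peer still resolves under the same name. The following is a minimal sketch, not something taken from this thread: the StatefulSet name `rabbitmq`, the headless service name `rabbitmq-headless` and the namespace are hypothetical and have to be replaced with the values of the actual deployment. It relies only on the standard Kubernetes DNS pattern for StatefulSet pods.

```python
import socket
from typing import Callable, Iterable, List


def peer_hostnames(statefulset: str, headless_service: str,
                   namespace: str, replicas: int) -> List[str]:
    """Build the stable DNS names Kubernetes assigns to StatefulSet pods."""
    return [
        f"{statefulset}-{i}.{headless_service}.{namespace}.svc.cluster.local"
        for i in range(replicas)
    ]


def unresolved_peers(hostnames: Iterable[str],
                     resolve: Callable = socket.getaddrinfo) -> List[str]:
    """Return the hostnames that cannot be resolved from where this runs."""
    missing = []
    for host in hostnames:
        try:
            resolve(host, None)
        except OSError:  # socket.gaierror is a subclass of OSError
            missing.append(host)
    return missing
```

Run from inside a pod in the same cluster, `unresolved_peers(peer_hostnames("rabbitmq", "rabbitmq-headless", "default", 3))` would list any peer whose name no longer resolves. A resolvable name does not prove that the node is reachable or healthy; it only rules out one reason for a node refusing to boot.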

Bitnami chart versioning does not make it easy to figure out what RabbitMQ version they ship but looks like … I would not speculate further without logs and stream declaration properties (the screenshot only tells us that the stream is durable, but streams are always durable).

This is unlikely to be related to what is happening to streams, but nonetheless: someone reminded me that it is a good idea for such backup tools to put the node into maintenance mode first, before shutting it down.
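The ordering suggested here can be sketched as a pair of command lists for a backup tool's pre- and post-backup hooks. This is only an illustration under stated assumptions: it is not the blueprint used by the original poster, the namespace and pod name are hypothetical, and stopping the node with `rabbitmqctl stop_app` is an assumption about how the hook stops RabbitMQ. `rabbitmq-upgrade drain` and `rabbitmq-upgrade revive` are the CLI commands that put a node into maintenance mode and take it out again.

```python
from typing import List


def kubectl_exec(namespace: str, pod: str, *command: str) -> List[str]:
    """Build a `kubectl exec` argument list for a command inside a pod."""
    return ["kubectl", "exec", "-n", namespace, pod, "--", *command]


def pre_backup_commands(namespace: str, pod: str) -> List[List[str]]:
    """Drain the node (maintenance mode) first, then stop the application."""
    return [
        kubectl_exec(namespace, pod, "rabbitmq-upgrade", "drain"),
        # Assumption: the hook stops RabbitMQ with stop_app, not by killing the pod.
        kubectl_exec(namespace, pod, "rabbitmqctl", "stop_app"),
    ]


def post_backup_commands(namespace: str, pod: str) -> List[List[str]]:
    """Start the application again, then take the node out of maintenance."""
    return [
        kubectl_exec(namespace, pod, "rabbitmqctl", "start_app"),
        # Run in case the node is still marked as being under maintenance.
        kubectl_exec(namespace, pod, "rabbitmq-upgrade", "revive"),
    ]
```

Each list can be passed, one command at a time, to `subprocess.run(cmd, check=True)` so that a failed drain aborts the hook before the node is stopped. Keeping the functions as pure builders makes the ordering easy to review without touching a cluster.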

Describe the bug

We need to back up volume snapshots for RabbitMQ streams, including all their messages, so that we can recover them later.

RabbitMQ is deployed in an AKS cluster through the Bitnami chart (version 11.13.0) in high availability mode with 3 nodes (podAntiAffinityPreset: hard).

Kasten is deployed with version 1.0.31:

https://docs.kasten.io/latest/install/azure/azure.html

According to the RabbitMQ documentation:

https://www.rabbitmq.com/backup.html

which I quote:

We made a custom blueprint with a pre-backup hook and a post-backup hook like this:

What we are trying to achieve is for the RabbitMQ application to stay up and running during the backup of the PersistentVolume, so, as you can see, we stop node 0 of the StatefulSet and restart it after the backup. We back up and restore JUST node 0 of the RabbitMQ application.

That seems to work.

We expected that restoring node 0 would mirror all the stream queues to the other nodes.

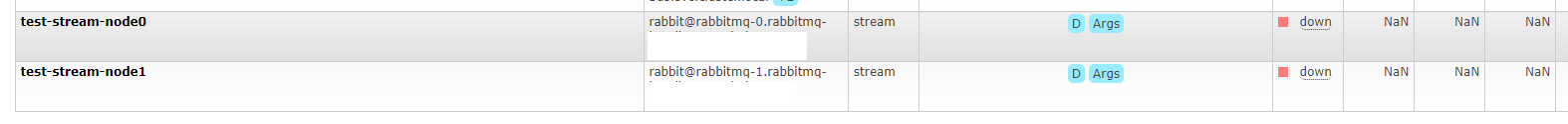

The Kasten restore completes successfully, but the restored stream queues are all in a down state,

as you can see here:

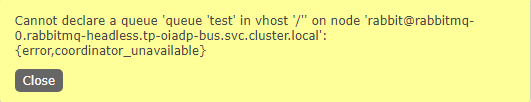

Even when we try to create a new stream, RabbitMQ freezes for a few seconds and then this error is shown:

The logs say:

I would really appreciate your support; any idea would be useful.

Let me know if you need some details.

Thank you all

Reproduction steps

...

Expected behavior

Stream queues should be up and running after the volume is restored.

Additional context

Kubernetes cluster
