|

| 1 | +# Benchmark Runner - Score Sampling |

| 2 | +[](https://github.com/simphotonics/benchmark_runner/actions/workflows/dart.yml) |

| 3 | + |

| 4 | + |

| 5 | +## Introduction |

| 6 | + |

| 7 | +When benchmarking a function, one has to take into consideration that |

| 8 | +the test is not performed on an isolated system that is only handling the |

| 9 | +instructions provided by our piece of code. Instead, the CPU is very likely |

| 10 | +busy performing other tasks before eventually executing the benchmarked code. |

| 11 | +This introduces latency that is typically larger for the first few runs |

| 12 | +and then has a certain degree of randomness. The initial latency |

| 13 | +can be reduced to some extent by introducing a warm-up phase, |

| 14 | +where the first few runs are discarded. |

| 15 | + |

| 16 | +A second factor to consider is the systematic measurement error due to the fact |

| 17 | +that it takes (a small amount of) time to increment loop counters, perform |

| 18 | +loop checks, or access the elapsed time. |

| 19 | + |

| 20 | +The overhead introduced by repeatedly accessing the |

| 21 | +elapsed time can be reduced by averaging the |

| 22 | +benchmark score over many runs. |
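
The warm-up phase and the averaging described above can be sketched as follows. This is a minimal illustration using only `Stopwatch` from `dart:core`, not the package's implementation; the function name `averageMicroseconds` and the parameters `warmUpRuns` and `innerIterations` are hypothetical. The clock is read only once for the whole inner loop, so the cost of accessing the elapsed time is spread over all iterations. The loop-counter overhead remains part of the measurement.

```dart
/// Returns the average run time of [f] in microseconds.
/// Hypothetical helper, not part of benchmark_runner.
double averageMicroseconds(
  void Function() f, {
  int warmUpRuns = 10,
  int innerIterations = 1000,
}) {
  // Warm-up phase: these runs are executed but not timed.
  for (var i = 0; i < warmUpRuns; i++) {
    f();
  }
  // Time the whole loop at once instead of reading the clock per run.
  final watch = Stopwatch()..start();
  for (var i = 0; i < innerIterations; i++) {
    f();
  }
  watch.stop();
  // Dividing two ints with `/` yields a double in Dart.
  return watch.elapsedMicroseconds / innerIterations;
}

void main() {
  var sum = 0;
  final score = averageMicroseconds(() {
    for (var i = 0; i < 100; i++) {
      sum += i;
    }
  });
  print('average: ${score.toStringAsFixed(3)} us (sum: $sum)');
}
```

Increasing `innerIterations` lowers the relative cost of the two clock reads but lengthens the total benchmark run time, which is the trade-off discussed in this section.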

| 23 | + |

| 24 | +The question is how to generate a score sample while minimizing |

| 25 | +systematic errors and keeping the |

| 26 | +total benchmark run times within acceptable limits. |

| 27 | + |

| 28 | + |

| 29 | +## Score Estimate |

| 30 | + |

| 31 | +In a first step, benchmark scores are *estimated* using the |

| 32 | +functions [`estimate`][estimate] |

| 33 | +or [`estimateAsync`][estimateAsync]. |

| 34 | +The function [`BenchmarkHelper.sampleSize`][sampleSize] |

| 35 | +uses the score estimate to determine a suitable sample size and number of inner |

| 36 | +iterations (for short run times, each sample entry is averaged over several runs). |

| 37 | + |

| 38 | +### Default Sampling Method |

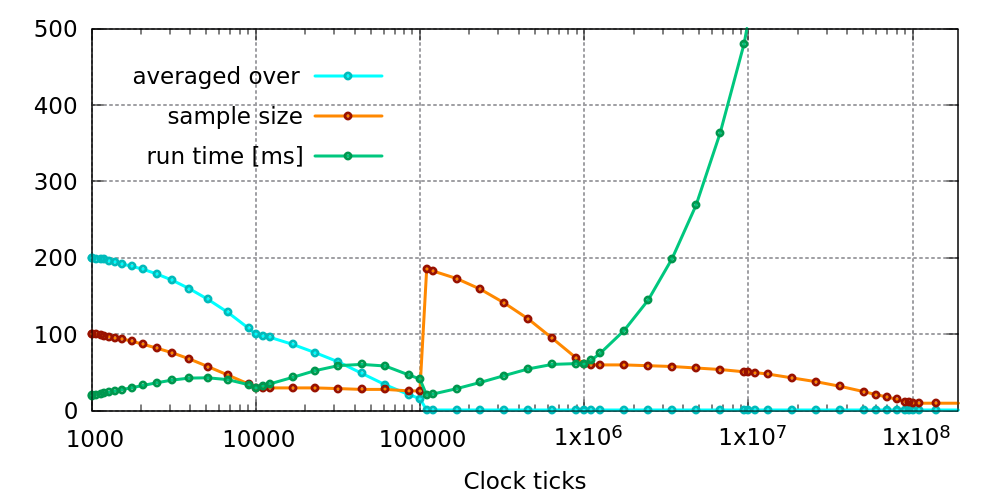

| 39 | +The graph below shows the sample size (orange curve) as calculated by the function |

| 40 | +[`BenchmarkHelper.sampleSize`][sampleSize]. |

| 41 | + |

| 42 | +The green curve shows the lower limit of the total microbenchmark duration |

| 43 | +in microseconds. |

| 44 | + |

| 45 | + |

| 46 | + |

| 47 | +For short run times below 1 microsecond, each score sample is generated |

| 48 | +using the function [`measure`][measure] or its |

| 49 | +asynchronous equivalent [`measureAsync`][measureAsync]. The cyan curve shows |

| 50 | +approximately how many runs each score entry is averaged over. |

| 51 | + |

| 52 | +### Custom Sampling Method |

| 53 | + |

| 54 | +The parameter `sampleSize` of the functions [`benchmark`][benchmark] and |

| 55 | +[`asyncBenchmark`][asyncBenchmark] can be used to specify the length of the score |

| 56 | +sample list and the number of inner iterations used to generate each entry. |

| 57 | + |

| 58 | +To customize the score sampling process, *without* having to specify the parameter |

| 59 | +`sampleSize` for each call of [`benchmark`][benchmark] and |

| 60 | +[`asyncBenchmark`][asyncBenchmark], the static function |

| 61 | +[`BenchmarkHelper.sampleSize`][sampleSize] can be replaced with a custom function: |

| 62 | +```Dart |

| 63 | +/// Generates a sample containing 100 benchmark scores. |

| 64 | +BenchmarkHelper.sampleSize = (int clockTicks) { |

| 65 | +  return (outer: 100, inner: 1); |

| 66 | +}; |

| 67 | +``` |

| 68 | +To restore the default score sampling settings use: |

| 69 | +```Dart |

| 70 | +BenchmarkHelper.sampleSize = BenchmarkHelper.sampleSizeDefault; |

| 71 | +``` |
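
A custom function does not have to return a fixed value. The sketch below assumes the signature shown above (an `int` number of clock ticks in, a record with `outer` and `inner` out) and picks fewer, unaveraged samples for slow functions and more, averaged samples for fast ones. The name `scaledSampleSize`, the `SampleSize` typedef, and all thresholds are hypothetical and chosen only for illustration; they are not the package defaults.

```dart
/// Record shape assumed from the example above (requires Dart 3).
typedef SampleSize = ({int outer, int inner});

/// Hypothetical custom sampling rule based on the estimated run time
/// in clock ticks. Thresholds are illustrative only.
SampleSize scaledSampleSize(int clockTicks) {
  // Very short run times: average each entry over many inner iterations.
  if (clockTicks < 1000) return (outer: 200, inner: 50);
  // Medium run times: fewer entries, light averaging.
  if (clockTicks < 100000) return (outer: 100, inner: 5);
  // Long run times: small sample, no averaging.
  return (outer: 20, inner: 1);
}

void main() {
  for (final ticks in [500, 50000, 5000000]) {
    final size = scaledSampleSize(ticks);
    print('ticks: $ticks, outer: ${size.outer}, inner: ${size.inner}');
  }
}
```

If the signature matches the installed package version, such a function could be assigned to `BenchmarkHelper.sampleSize` in the same way as the closure shown above.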

| 72 | +---- |

| 73 | +The graph shown above may be re-generated using the custom `sampleSize` |

| 74 | +function by copying and amending the file `gnuplot/sample_size.dart` |

| 75 | +and using the command: |

| 76 | +```Console |

| 77 | +dart sample_size.dart |

| 78 | +``` |

| 79 | +The command above launches a process and runs a [`gnuplot`][gnuplot] script. |

| 80 | +For this reason, the program [`gnuplot`][gnuplot] must be installed (with |

| 81 | +the `qt` terminal enabled). |

| 82 | + |

| 83 | +</details> |

| 84 | + |

| 85 | + |

| 86 | +## Features and bugs |

| 87 | + |

| 88 | +Please file feature requests and bugs at the [issue tracker][tracker]. |

| 89 | + |

| 90 | +[tracker]: https://github.com/simphotonics/benchmark_runner/issues |

| 91 | + |

| 92 | +[asyncBenchmark]: https://pub.dev/documentation/benchmark_runner/latest/benchmark_runner/asyncBenchmark.html |

| 93 | + |

| 94 | +[asyncGroup]: https://pub.dev/documentation/benchmark_runner/latest/benchmark_runner/asyncGroup.html |

| 95 | + |

| 96 | +[benchmark_harness]: https://pub.dev/packages/benchmark_harness |

| 97 | + |

| 98 | +[benchmark_runner]: https://pub.dev/packages/benchmark_runner |

| 99 | + |

| 100 | +[benchmark]: https://pub.dev/documentation/benchmark_runner/latest/benchmark_runner/benchmark.html |

| 101 | + |

| 102 | +[ColorProfile]: https://pub.dev/documentation/benchmark_runner/latest/benchmark_runner/ColorProfile.html |

| 103 | + |

| 104 | +[gnuplot]: https://sourceforge.net/projects/gnuplot/ |

| 105 | + |

| 106 | +[group]: https://pub.dev/documentation/benchmark_runner/latest/benchmark_runner/group.html |

| 107 | + |

| 108 | +[measure]: https://pub.dev/documentation/benchmark_runner/latest/benchmark_runner/BenchmarkHelper/measure.html |

| 109 | + |

| 110 | +[measureAsync]: https://pub.dev/documentation/benchmark_runner/latest/benchmark_runner/BenchmarkHelper/measureAsync.html |

| 111 | + |

| 112 | +[sampleSize]: https://pub.dev/documentation/benchmark_runner/latest/benchmark_runner/BenchmarkHelper/sampleSize.html |

| 113 | + |

| 114 | +[estimate]: https://pub.dev/documentation/benchmark_runner/latest/benchmark_runner/BenchmarkHelper/estimate.html |

| 115 | + |

| 116 | +[estimateAsync]: https://pub.dev/documentation/benchmark_runner/latest/benchmark_runner/BenchmarkHelper/estimateUpAsync.html |
