index:only`. If it returns no results, the repository has not been indexed.

-

-### Sourcegraph is making unauthorized requests to the git server

-

-This is normal and happens whenever git is used over HTTP. To avoid unnecessarily sending a password over HTTP, git first

-makes a request without the password included. If a 401 Unauthorized is returned, git sends the request with the password.

-

-More information can be found [here](https://confluence.atlassian.com/bitbucketserverkb/two-401-responses-for-every-git-opperation-938854756.html).

-

-If this behaviour is undesired, the `gitURLType` in the [external service configuration](/admin/code_hosts/github#configuration)

-should be set to `ssh` instead of `http`. This will also require [ssh keys to be set up](/admin/repo/auth#repositories-that-need-http-s-or-ssh-authentication).

diff --git a/docs/admin/updates/grpc/index.mdx b/docs/admin/updates/grpc/index.mdx

deleted file mode 100644

index 0fd599411..000000000

--- a/docs/admin/updates/grpc/index.mdx

+++ /dev/null

@@ -1,115 +0,0 @@

-import { CURRENT_VERSION_STRING_NO_V, CURRENT_VERSION_STRING } from 'src/components/PreCodeBlock'

-

-# Sourcegraph 5.3 gRPC Configuration Guide

-

-## Overview

-

-As part of our continuous effort to enhance performance and reliability, in Sourcegraph 5.3 we’ve fully transitioned to using [gRPC](https://grpc.io/) as the primary communication method for our internal services.

-

-This guide will help you understand this change and its implications for your setup.

-

-## Quick Overview

-

-- **What’s changing?** In Sourcegraph `5.3`, we've transitioned to [gRPC](https://grpc.io/) for internal communication between Sourcegraph services.

-- **Why?** gRPC, a high-performance Remote Procedure Call (RPC) framework by Google, brings about several benefits like a more efficient serialization format, faster speeds, and a better development experience.

-- **Is any action needed?** If you don’t have restrictions on Sourcegraph’s **internal** (service to service) traffic, you shouldn't need to take any action—the change should be invisible. If you do have restrictions, some firewall or security configurations may be necessary. See the ["Who needs to Act"](#who-needs-to-act) section for more details.

-- **Can I disable gRPC if something goes wrong?** In Sourcegraph `5.3.X`, gRPC can no longer be disabled. However, if you run into an issue it is possible to downgrade to Sourcegraph `5.2.X`, which includes a [toggle to enable/disable gRPC](#sourcegraph-52x-only-enabling--disabling-grpc) while you troubleshoot the issue. Contact our customer support team for more information.

-

-## gRPC: A Brief Intro

-

-[gRPC](https://grpc.io/) is an open-source [RPC](https://en.wikipedia.org/wiki/Remote_procedure_call) framework developed by Google. Compared to [REST](https://en.wikipedia.org/wiki/REST), gRPC is faster, more efficient, has built-in backwards compatibility support, and offers a superior development experience.

-

-## Key Changes

-

-### 1. Internal Service Communication

-

-For Sourcegraph version `5.3.X` onwards, our microservices like `repo-updater` and `gitserver` will use mainly gRPC instead of REST for their internal traffic. This affects only communication *between* our services. Interactions you have with Sourcegraph's UI and external APIs remain unchanged.

-

-### 2. Rollout Plan

-

-| Version | gRPC Status |

-|---------------------------------|--------------------------------------------------------------------------|

-| `5.2.X` (Released on October 4th, 2023) | On by default but can be disabled via a feature flag. |

-| `5.3.X` (Releasing Feburary 15th, 2024)| Fully integrated and can't be turned off. Able to temporarily downgrade to `5.2.X` if there are any issues. |

-

-## Preparing for the Change

-

-### Who Needs to Act?

-

-Our use of gRPC only affects traffic **_between_** our microservices (e.x. `searcher` ↔ `gitserver`). Traffic between the Sourcegraph Web UI and the rest of the application is unaffected (e.x. `sourcegraph.example.com` ↔ `frontend`’s GraphQL API).

-

-**If Sourcegraph's internal traffic faces no security restrictions in your environment, no action is required.**

-

-However, if you’ve applied security measures or have firewall restrictions on this traffic, adjustments might be needed to accommodate gRPC communication. The following is a more technical description of the protocol that can help you configure your security settings:

-

-### gRPC Technical Details

-

-- **Protocol Description**: gRPC runs on-top of [HTTP/2](https://en.wikipedia.org/wiki/HTTP/2) (which, in turn, runs on top of [TCP](https://en.wikipedia.org/wiki/Transmission_Control_Protocol). It transfers (binary-encoded, not human-readable plain-text) [Protocol Buffer](https://protobuf.dev/) payloads. Our current gRPC implementation does not use any encryption.

-

-- **List of services**: The following services will now _speak mainly gRPC in addition_ to their previous traffic:

- - [frontend](https://github.com/sourcegraph/deploy-sourcegraph-k8s/blob/main/base/sourcegraph/frontend/sourcegraph-frontend.Service.yaml)

- - [gitserver](https://github.com/sourcegraph/deploy-sourcegraph-k8s/blob/main/base/sourcegraph/gitserver/gitserver.Service.yaml)

- - [searcher](https://github.com/sourcegraph/deploy-sourcegraph-k8s/blob/main/base/sourcegraph/searcher/searcher.StatefulSet.yaml)

- - [zoekt-webserver](https://github.com/sourcegraph/deploy-sourcegraph-k8s/blob/main/base/sourcegraph/indexed-search/indexed-search.StatefulSet.yaml)

- - [zoekt-indexserver](https://github.com/sourcegraph/deploy-sourcegraph-k8s/blob/main/base/sourcegraph/indexed-search/indexed-search.StatefulSet.yaml)

-

-- The following aspects about Sourcegraph’s networking configuration **aren’t changing**:

- - **Ports**: all Sourcegraph services will use the same ports as they were in the **5.1.X** release.

- - **External traffic**: gRPC only affects how Sourcegraph’s microservices communicate amongst themselves - **no new external traffic is sent via gRPC**.

- - **Service dependencies:** each Sourcegraph service will communicate with the same set of services regardless of whether gRPC is enabled.

- - Example: `searcher` will still need to communicate with `gitserver` to fetch repository data. Whether gRPC is enabled doesn’t matter.

-

-### Sourcegraph `5.2.X` only: enabling / disabling GRPC

-

-In the `5.2.x` release, you are able to use the following methods to enable / disable gRPC if a problem occurs.

-

- In the `5.3.X` release, these options are removed and gRPC is always enabled. However, if you run into an issue it is possible to downgrade to Sourcegraph `5.2.X` and use the configuration below to temporarily disable gRPC while you troubleshoot the issue. Contact our customer support team for more assistance with downgrading.

-

-#### All services besides `zoekt-indexserver`

-

-Disabling gRPC on any service that is not `zoekt-indexserver` can be done by one of these options:

-

-##### Option 1: disable via site-configuration

-

-Set the `enableGRPC` experimental feature to `false` in the site configuration file:

-

-```json

-{

- "experimentalFeatures": {

- "enableGRPC": false // disabled

- }

-}

-```

-

-##### Option 2: disable via environment variables

-

-Set the environment variable `SG_FEATURE_FLAG_GRPC="false"` for every service.

-

-#### `zoekt-indexserver` service: disable via environment variable

-

-Set the environment variable `GRPC_ENABLED="false"` on the `zoekt-indexserver` container. (See [indexed-search.StatefulSet.yaml](https://github.com/sourcegraph/deploy-sourcegraph-k8s/blob/main/base/sourcegraph/indexed-search/indexed-search.StatefulSet.yaml) for the configuration):

-

-```yaml

-- name: zoekt-indexserver

- env:

- - name: GRPC_ENABLED

- value: 'false'

- image: docker.io/sourcegraph/search-indexer:{CURRENT_VERSION_NO_V}

-```

-

-_zoekt-indexserver can’t read from Sourcegraph’s site configuration, so we can only use environment variables to communicate this setting._

-

-If any issues arise with gRPC, admins have the option to disable it in version `5.2.X`. This will be phased out in `5.3.X`.

-

-## Monitoring gRPC

-

-To ensure the smooth operation of gRPC, we offer:

-

-- **gRPC Grafana Dashboards**: For every gRPC service, we provide dedicated dashboards. These boards present request and error rates for every method, aiding in performance tracking. See our [dashboard documentation](/admin/observability/dashboards).

-

-

-- **Internal Error Reporter**: For certain errors specifically from gRPC libraries or configurations, we've integrated an "internal error" reporter. Logs prefixed with `grpc.internal.error.reporter` signal issues with our gRPC execution and should be reported to customer support for more assistance.

-

-## Need Help?

-

-For any queries or concerns, reach out to our customer support team. We’re here to assist!

diff --git a/docs/admin/updates/index.mdx b/docs/admin/updates/index.mdx

index 023eb51d2..258510c02 100644

--- a/docs/admin/updates/index.mdx

+++ b/docs/admin/updates/index.mdx

@@ -132,4 +132,3 @@ If your instance has schema drift or unfinished oob migrations you may need to a

- [Upgrading Early Versions](/admin/updates/migrator/upgrading-early-versions)

- [Troubleshooting upgrades](/admin/updates/migrator/troubleshooting-upgrades)

- [Downgrading](/admin/updates/migrator/downgrading)

-- [Sourcegraph 5.2 gRPC Configuration Guide](/admin/updates/grpc/)

diff --git a/docs/admin/validation.mdx b/docs/admin/validation.mdx

index 24bec457f..8abaca66a 100644

--- a/docs/admin/validation.mdx

+++ b/docs/admin/validation.mdx

@@ -2,7 +2,6 @@

Sourcegraph Validation is currently experimental.

-

## Validate Sourcegraph Installation

Installation validation provides a quick way to check that a Sourcegraph installation functions properly after a fresh install

diff --git a/docs/cloud/index.mdx b/docs/cloud/index.mdx

index 9e90562b2..c8e28b2a7 100644

--- a/docs/cloud/index.mdx

+++ b/docs/cloud/index.mdx

@@ -242,7 +242,7 @@ Sourcegraph Cloud instances are single-tenant, limiting exposure to outages and

### Is data safe with Sourcegraph Cloud?

-Sourcegraph Cloud utilizes a single-tenant architecture. Each customer's data is isolated and stored in a dedicated GCP project. Data is [encrypted in transit](https://cloud.google.com/docs/security/encryption-in-transit) and [at rest](https://cloud.google.com/docs/security/encryption/default-encryption) and is backed up daily. Such data includes but is not limited to, customer source code, repository metadata, code host connection configuration, and user profile. Sourcegraph Cloud also has [4 supported regions](#multiple-region-availability) on GCP to meet data sovereignty requirements.

+Sourcegraph Cloud utilizes a single-tenant architecture. Each customer's data is isolated and stored in a dedicated GCP project. Data is [encrypted in transit](https://cloud.google.com/docs/security/encryption-in-transit) and [at rest](https://cloud.google.com/docs/security/encryption/default-encryption) and is backed up daily. Such data includes, but is not limited to, customer source code, repository metadata, code host connection configuration, and user profiles. Sourcegraph Cloud also has [5 supported regions](#multiple-region-availability) on GCP to meet data sovereignty requirements.

Sourcegraph continuously monitors Cloud instances for security vulnerability using manual reviews and automated tools. Third-party auditors regularly perform testing to ensure maximum protection against vulnerabilities and are automatically upgraded to fix any vulnerability in third-party dependencies. In addition, GCP’s managed offering regularly patches any vulnerability in the underlying infrastructure. Any infrastructure changes must pass security checks, which are tested against industry-standard controls.

diff --git a/docs/code_insights/references/common_reasons_code_insights_may_not_match_search_results.mdx b/docs/code_insights/references/common_reasons_code_insights_may_not_match_search_results.mdx

index 76cb28ea9..1f5d147fc 100644

--- a/docs/code_insights/references/common_reasons_code_insights_may_not_match_search_results.mdx

+++ b/docs/code_insights/references/common_reasons_code_insights_may_not_match_search_results.mdx

@@ -14,7 +14,7 @@ Because code insights historical search defaults to `fork:yes` and `archived:yes

All repositories in a historical search are unindexed, but a manual Sourcegraph search only includes indexed repositories. It's possible your manual searches are missing results from unindexed repositories.

-To investigate this, one can compare the list of repositories in the manual search (use a `select:repository` filter) with the list of repositories in the insight `series_points` database table. To see why a repository may not be indexing, refer to [this guide](/admin/troubleshooting#sourcegraph-is-not-returning-results-from-a-repository-unless-repo-is-included).

+To investigate this, one can compare the list of repositories in the manual search (use a `select:repository` filter) with the list of repositories in the insight `series_points` database table. To see why a repository may not be indexing, refer to [this guide](/admin/faq#sourcegraph-is-not-returning-results-from-a-repository-unless-repo-is-included).

## If the chart data point shows *lower* counts than a manual search

diff --git a/docs/pricing/faqs.mdx b/docs/pricing/faqs.mdx

index 5197afd8a..3c740a97f 100644

--- a/docs/pricing/faqs.mdx

+++ b/docs/pricing/faqs.mdx

@@ -1,6 +1,6 @@

# FAQs

-Learn about some of the most commonly asked questions about Sourcegraph.

+Learn about some of the most commonly asked questions about Sourcegraph.

## What's the difference between Free, Enterprise Starter, and Enterprise plans?

@@ -30,7 +30,7 @@ For Enterprise customers, Sourcegraph will not train on your company's data unle

## How are active users counted and billed for Cody?

-This only applies to Cody Enterprise contracts.

+This only applies to Cody Enterprise contracts.

A billable user is one who is signed in to their Enterprise account and actively interacts with the product (e.g., they see suggested autocompletions, run commands or chat with Cody, start new discussions, clear chat history, or copy text from chats, change settings, and more). Simply having Cody installed is not enough to be considered a billable user.

diff --git a/public/llms.txt b/public/llms.txt

index dfe8044ea..97feee713 100644

--- a/public/llms.txt

+++ b/public/llms.txt

@@ -13,6 +13,8 @@ Currently supported versions of Sourcegraph:

| **Release** | **General Availability Date** | **Supported** | **Release Notes** | **Install** |

|--------------|-------------------------------|---------------|--------------------------------------------------------------------|------------------------------------------------------|

+| 6.6 Patch 1 | June 2025 | ✅ | [Notes](https://sourcegraph.com/docs/technical-changelog#v66868) | [Install](https://sourcegraph.com/docs/admin/deploy) |

+| 6.6 Patch 0 | June 2025 | ✅ | [Notes](https://sourcegraph.com/docs/technical-changelog#v660) | [Install](https://sourcegraph.com/docs/admin/deploy) |

| 6.5 Patch 2 | June 2025 | ✅ | [Notes](https://sourcegraph.com/docs/technical-changelog#v652654) | [Install](https://sourcegraph.com/docs/admin/deploy) |

| 6.5 Patch 1 | June 2025 | ✅ | [Notes](https://sourcegraph.com/docs/technical-changelog#v651211) | [Install](https://sourcegraph.com/docs/admin/deploy) |

| 6.5 Patch 0 | June 2025 | ✅ | [Notes](https://sourcegraph.com/docs/technical-changelog#v650) | [Install](https://sourcegraph.com/docs/admin/deploy) |

@@ -521,7 +523,7 @@ Slack Support provides access to creating tickets directly from Slack, allowing

| Role-based access control | - | - | ✓ |

| Analytics | - | Basic | ✓ |

| Audit logs | - | - | ✓ |

-| Guardrails | - | - | Beta |

+| Guardrails (*Deprecated*) | - | - | Beta |

| Indexed code | - | Private | Private |

| Context Filters | - | - | ✓ |

| **Compatibility** | | | |

@@ -538,7 +540,7 @@ Slack Support provides access to creating tickets directly from Slack, allowing

# FAQs

-Learn about some of the most commonly asked questions about Sourcegraph.

+Learn about some of the most commonly asked questions about Sourcegraph.

## What's the difference between Free, Enterprise Starter, and Enterprise plans?

@@ -568,7 +570,7 @@ For Enterprise customers, Sourcegraph will not train on your company's data unle

## How are active users counted and billed for Cody?

-This only applies to Cody Enterprise contracts.

+This only applies to Cody Enterprise contracts.

A billable user is one who is signed in to their Enterprise account and actively interacts with the product (e.g., they see suggested autocompletions, run commands or chat with Cody, start new discussions, clear chat history, or copy text from chats, change settings, and more). Simply having Cody installed is not enough to be considered a billable user.

@@ -702,7 +704,7 @@ Here's a detailed breakdown of features included in the different Enterprise pla

| **AI features** | - Cody AI Assistant | - Cody AI Assistant

- Bring your own LLM key |

| **Code Search features** | - Everything in Enterprise Starter, plus:

- Batch Changes

- Code Insights

- Code Navigation | - Everything in Enterprise Starter, plus:

- Batch Changes

- Code Insights

- Code Navigation |

| **Deployment types** | - Single-tenant Coud | - Self- Hosted |

-| **Compatibility** | - Everything in Enterprise Starter, plus:

- Enterprise admin and security features

- All major code hosts

- Guardrails

- Context Filters | - Everything in Enterprise Starter, plus:

- Enterprise admin and security features

- All major code hosts

- Guardrails

- Context Filters |

+| **Compatibility** | - Everything in Enterprise Starter, plus:

- Enterprise admin and security features

- All major code hosts

- Guardrails (Deprecated)

- Context Filters | - Everything in Enterprise Starter, plus:

- Enterprise admin and security features

- All major code hosts

- Guardrails (Deprecated)

- Context Filters |

| **Support** | - 24x5 support with options like:

- TA support

- Premium Support Offerings

- Forward Deployed Engineer (FDE) | - Enterprise support with options like:

- Dedicated TA support

- Premium Support Offerings

- Forward Deployed Engineer (FDE) |

@@ -741,17 +743,17 @@ The Enterprise Starter plan supports a variety of search-based features like:

| ------------------------------ | --------------------------------------------------------- | ------------------------- |

| Indexed Code Search | Simplified admin experience with UI‑based repo‑management | Support with limited SLAs |

| Indexed Symbol Search | User management | - |

-| Searched‑based code‑navigation | GitHub code host integration | - |

+| Search‑based code‑navigation | Code host integrations (GitHub, GitLab.com, Bitbucket Cloud) | - |

## Limits

Sourcegraph Enterprise Starter offers the following limits:

-- Max 50 users per workspace

+- Max 500 users per workspace

- Max 100 repos per workspace

-- Starts with 5 GB of storage

-- 1 GB storage per seat added

-- 10 GB max total storage

+- Starts with 25 GB of storage

+- 5 GB storage per seat added

+- 50 GB max total storage

## Workspace settings

@@ -767,9 +769,11 @@ After creating a new workspace, you can switch views between your personal and w

## Getting started with workspace

-A workspace admin can invite new members to their workspace using their team member's email address. Once the team member accepts the invitation, they will be added to the workspace and assigned the member role. Next, the member is asked to connect and authorize the code host (GitHub) to access the private repositories indexed in your Sourcegraph account.

+A workspace admin can invite new members to their workspace using their team member's email address. Once the team member accepts the invitation, they will be added to the workspace and assigned the member role.

-If you skip this step, the member won't be able to access any of the private repositories they have access to. However, they can still use the public search via the Sourcegraph code search bar.

+If the workspace includes GitHub repositories, the member is asked to connect and authorize GitHub so they can access the workspace's private GitHub repositories. This authorization step is required only for GitHub; GitLab.com and Bitbucket Cloud repositories need no additional authorization.

+

+Without GitHub authorization, members cannot access private GitHub repositories, but they can still access the workspace's other repositories (GitLab.com, Bitbucket Cloud) and use public search via the Sourcegraph code search bar.

@@ -777,7 +781,12 @@ If you skip this step, the member won't be able to access any of the private rep

From the Repository Management settings, workspace admins can configure various settings for connecting code hosts and indexing repositories in their workspace. You can index up to 100 repos per workspace.

-

+**Repository permissions**:

+

+- **GitHub** provides repository-level permissions that are reflected in Sourcegraph.

+- **GitLab.com** and **Bitbucket Cloud** repositories are accessible to all workspace members regardless of the member's permissions on the external code host.

+

+

From here, you can:

@@ -795,7 +804,7 @@ When you add a new organization, you must authorize access and permission for al

As you add more repos, you get logs for the number of repos added, storage used, and their status. To remove any repo from your workspace, click the repo name that changes the repo status **TO BE REMOVED**. Click the **Save Changes** button to confirm it.

-

+

@@ -6241,6 +6250,8 @@ Site administrators can set the duration of access tokens for users connecting C

## Guardrails

+Guardrails has been deprecated and is no longer recommended for use.

+

Guardrails for public code is only supported on VS Code, JetBrains IDEs extension, and Sourcegraph Web app for Cody Enterprise customers using [Cody Gateway](https://sourcegraph.com/docs/cody/core-concepts/cody-gateway#sourcegraph-cody-gateway). It is not supported for any BYOK (Bring Your Own Key) deployments.

Open source attribution guardrails for public code, commonly called copyright guardrails, reduce the exposure to copyrighted code. This involves implementing a verification mechanism within Cody to ensure that any code generated by the platform does not replicate open source code.

@@ -7766,7 +7777,7 @@ git diff | cody chat -m 'Write a commit message for this diff' -

| Single-repo context | ✅ | ✅ | ✅ | ✅ | ❌ |

| Multi-repo context | ✅ | ✅ | ✅ | ✅ | ❌ |

| Local context | ✅ | ✅ | ✅ | ❌ | ✅ |

-| Guardrails | ✅ | ✅ | ❌ | ✅ | ❌ |

+| Guardrails (*Deprecated*) | ✅ | ✅ | ❌ | ✅ | ❌ |

| Repo-based context filters | ✅ | ✅ | ✅ | ✅ | ✅ |

| **Prompts** | | | | | |

| Access to prompts and Prompt library | ✅ | ✅ | ✅ | ✅ | ❌ |

@@ -9082,86 +9093,117 @@ Since MCP is an open protocol and servers can be created by anyone, your mileage

-

-# Quickstart for code monitoring

+

+# Code monitoring

-## Introduction

+

+ Supported on [Enterprise](/pricing/enterprise) plans.

+

+ Currently available via the Web app.

+

+

-In this tutorial, we will create a new code monitor that monitors new appearances of the word "TODO" in your codebase.

+Stay on top of events in your codebase. Watch your code with code monitors and trigger actions to run automatically in response to events.

-## Creating a code monitor

+Code monitors allow you to keep track of and get notified about changes in your code. Some use cases for code monitors include getting notifications for potential secrets, anti-patterns, or common typos committed to your codebase.

-Prerequisite: Ensure [email notifications](/admin/observability/alerting#email) are configured in site configuration.

+Here are some starting points for your first code monitor:

-1. On your Sourcegraph instance, click the **Code monitoring** menu item at the top right of your page. Alternatively, go to `https://sourcegraph.example.com/code-monitoring` (where sourcegraph.example.com represents your unique Sourcegraph url).

-2. Click the **Create new code monitor** button at the top right of the page.

-3. Fill out the **Name** input with: "TODOs".

-4. Under the **Trigger** section, click **When there are new search results**.

-5. In the **Search query** input, enter the following search query:

-`TODO type:diff patternType:keyword`.

-(Note that `type:` and `patternType:` are required as part of the search query for code monitoring.)

-1. You can click **Preview results** to see all previous additions or removals of TODO to your codebase.

-2. Back in the code monitor form, click **Continue**.

-3. Click **Send email notifications** under the **Actions** section.

-4. Click **Done**.

-5. Click **Create code monitor**.

+**Watch for consumers of deprecated endpoints**

-You should now be on `https://sourcegraph.example.com/code-monitoring`, and be able to see the TODO code monitor on the page.

+```

+f:\.tsx?$ type:diff patterntype:regexp fetch\(['"`]/deprecated-endpoint

+```

-## Sending a test email notification

+If you’re deprecating an API or an endpoint, you may find it useful to set up a code monitor that watches for new consumers. For example, the query above surfaces `fetch()` calls to `/deprecated-endpoint` in TypeScript files. Replace `/deprecated-endpoint` with the actual path of the endpoint being deprecated.

-If you want to preview the email notification alerting you of a new result with TODO, follow these steps:

+**Get notified when a file changes**

-1. In the **Send email notifications** action, click "Send test email".

-1. Within a few minutes, you should receive a test email from Sourcegraph with a preview of the email notification.

+```

+patterntype:regexp repo:^github\.com/sourcegraph/sourcegraph$ file:SourcegraphWebApp\.tsx$ type:diff

+```

-If you want to test receiving an email with a real new result, follow these steps:

+To get notified whenever a given file changes, regardless of the contents of the diff, use a query like the one above: it returns all changes to the `SourcegraphWebApp.tsx` file in the `github.com/sourcegraph/sourcegraph` repository.

-1. In any repository that's on your Sourcegraph instance (for purposes of this tutorial, we recommend a dummy or test repo that's not used), add `TODO` to any file.

-1. Commit the change, and push it to your code host.

-1. Within a few minutes, you should see an email from Sourcegraph with a link to the new result you just pushed.

+**Get notified when a specific function call is added**

-

+```

+repo:^github\.com/sourcegraph/sourcegraph$ type:diff select:commit.diff.added Sprintf

+```

-

-# Code monitoring

+You may want to monitor new additions of a specific function call, such as a deprecated function or one that introduces a security concern. This query notifies you whenever a call to `Sprintf` is added to the `sourcegraph/sourcegraph` repository. Because `select:commit.diff.added` selects only diff lines marked as added (`+`), the monitor still fires when a `Sprintf` call is both added and removed in the same file, since the addition alone matches.

-

- Supported on [Enterprise](/pricing/enterprise) plans.

-

- Currently available via the Web app.

-

-

+Code monitors are made up of two main elements: **Triggers** and **Actions**.

-Keep on top of events in your codebase

+## Triggers

-Watch your code with code monitors and trigger actions to run automatically in response to events.

+A _trigger_ is an event that causes an action to run. Currently, code monitoring supports one kind of trigger: "When new search results are detected" for a particular search query. When creating a code monitor, you are asked to specify a query as part of the trigger.

-## Getting started

+Sourcegraph will run the search query over every new commit for the searched repositories, and when new results for the query are detected, a trigger event is emitted. In response to the trigger event, any _actions_ attached to the code monitor will be executed.

-

-

-

-

+A query used in a "When new search results are detected" trigger must be a `type:commit` or `type:diff` search. This allows Sourcegraph to detect new search results periodically.

-## Questions & Feedback

+## Actions

-We want to hear your feedback! [Share your thoughts](mailto:feedback@sourcegraph.com)

+An _action_ is executed in response to a trigger event. Currently, code monitoring supports three different actions:

-

+### Sending a notification email to the owner of the code monitor

+

+Prerequisite: Ensure [email notifications](/admin/observability/alerting#email) are configured in site configuration.

+

+1. Click the _Code monitoring_ menu item at the top right of your page.

+2. Click the _Create new code monitor_ button at the top right of the page.

+3. Fill out the _Name_ input with: "TODOs".

+4. Under the _Trigger_ section, click _When there are new search results_.

+5. In the _Search query_ input, enter the following search query:

+`TODO type:diff patternType:keyword`.

+(Note that `type:` and `patternType:` are required as part of the search query for code monitoring.)

+6. You can click _Preview results_ to see all previous additions or removals of TODO to your codebase.

+7. Back in the code monitor form, click _Continue_.

+8. Click _Send email notifications_ under the _Actions_ section. You can click "Send test email" to verify that you receive notifications and to learn more about their format.

+9. Click _Done_.

+10. Click _Create code monitor_.

+

+You should now see the TODO code monitor on the page, and you will receive email notifications whenever the trigger fires.

+

+### Sending a Slack message to a channel

+

+You can set up code monitors to send notifications about new matching search results to Slack channels.

+

+#### Usage

-

-# Setting up Webhook notifications

+1. In Sourcegraph, click on the "Code Monitoring" nav item at the top of the page.

+1. Create a new code monitor or edit an existing monitor by clicking on the "Edit" button next to it.

+1. Under actions, select **Send Slack message to channel**.

+1. Paste your webhook URL into the "Webhook URL" field. (See "[Creating a Slack incoming webhook URL](#creating-a-slack-incoming-webhook-url)" below for detailed instructions.)

+1. Click on the "Continue" button, and then the "Save" button.

+

+##### Creating a Slack incoming webhook URL

+

+1. You must have permission to create apps in your organization's Slack workspace.

+1. Go to https://api.slack.com/apps and sign in to your Slack account if necessary.

+1. Click on the "Create an app" button.

+1. Create your app "From scratch".

+1. Give your app a name and select the workspace you want notifications sent to.

+

+1. Once your app is created, click "Incoming Webhooks" in the sidebar, under "Features".

+1. Click the toggle button to activate incoming webhooks.

+1. Scroll to the bottom of the page and click on "Add New Webhook to Workspace".

+1. Select the channel you want notifications sent to, then click on the "Allow" button.

+1. Your webhook URL is now created! Click the copy button to copy it to your clipboard.

+

+

+### Sending a webhook event to an endpoint of your choosing

Webhook notifications provide a way to execute custom responses to a code monitor notification.

They are implemented as a POST request to a URL of your choice. The body of the request is defined

by Sourcegraph, and contains all the information available about the cause of the notification.

-## Prerequisites

+#### Prerequisites

- You must have a service running that can accept the POST request triggered by the webhook notification

-## Creating a webhook receiver

+#### Creating a webhook receiver

A webhook receiver is a service that can accept an HTTP POST request with the contents of the webhook notification.

The receiver must be reachable from the Sourcegraph cluster using the URL that is configured below.

@@ -9179,7 +9221,8 @@ The HTTP POST request sent to the receiver will have a JSON-encoded body with th

- `message`: The matching commit message. Only set if the result is a commit match.

- `matchedMessageRanges`: The character ranges of `message` that matched `query`. Only set if the result is a commit match.

-Example payload:

+

+

```json

{

"monitorDescription": "My test monitor",

@@ -9207,155 +9250,37 @@ Example payload:

}

```
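
As a starting point, here is a minimal receiver sketch built only on Python's standard library. It accepts the POST request, decodes the JSON body, and logs `monitorDescription` from the payload shown above. Everything else (the class name, port, and in-memory list) is an illustrative assumption. A production receiver should also sit behind TLS and verify that requests really come from your Sourcegraph instance, which this sketch does not do.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


class CodeMonitorWebhookHandler(BaseHTTPRequestHandler):
    """Accepts code monitor webhook notifications sent as JSON POST requests."""

    # Payloads received so far; kept in memory purely for illustration.
    received = []

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        raw = self.rfile.read(length)
        try:
            payload = json.loads(raw)
        except ValueError:  # covers invalid JSON and invalid text encoding
            payload = None
        if not isinstance(payload, dict):
            self.send_response(400)
            self.end_headers()
            return

        self.received.append(payload)
        print("Code monitor fired:", payload.get("monitorDescription"))

        # Acknowledge receipt with an empty 204 response.
        self.send_response(204)
        self.end_headers()


def serve(host: str = "0.0.0.0", port: int = 8080) -> None:
    """Run the receiver until interrupted (host and port are placeholders)."""
    HTTPServer((host, port), CodeMonitorWebhookHandler).serve_forever()
```

The URL you configure in the code monitor would then point at this service, for example `http://<receiver-host>:8080/`, and must be reachable from the Sourcegraph cluster.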

-## Configuring a code monitor to send Webhook notifications

-

-1. In Sourcegraph, click on the "Code Monitoring" nav item at the top of the page.

-1. Create a new code monitor or edit an existing monitor by clicking on the "Edit" button next to it.

-1. Go through the standard configuration steps for a code monitor and select action "Call a webhook".

-1. Paste your webhook URL into the "Webhook URL" field.

-1. Click on the "Continue" button, and then the "Save" button.

-

-

-

-

-# Start monitoring your code

-

-This page lists code monitors that are commonly used and can be used across most codebases.

-

-

-## Watch for consumers of deprecated endpoints

-

-```

-f:\.tsx?$ patterntype:regexp fetch\(['"`]/deprecated-endpoint

-```

-

-If you’re deprecating an API or an endpoint, you may find it useful to set up a code monitor watching for new consumers. As an example, the above query will surface fetch() calls to `/deprecated-endpoint` within TypeScript files. Replace `/deprecated-endpoint` with the actual path of the endpoint being deprecated.

-

-## Get notified when a file changes

-

-```

-patterntype:regexp repo:^github\.com/sourcegraph/sourcegraph$ file:SourcegraphWebApp\.tsx$ type:diff

-```

-

-You may want to get notified when a given file is changed, regardless of the diff contents of the change: the above query will return all changes to the `SourcegraphWebApp.tsx` file on the `github.com/sourcegraph/sourcegraph` repo.

-

-## Get notified when a specific function call is added

-

-```

-repo:^github\.com/sourcegraph/sourcegraph$ type:diff select:commit.diff.added Sprintf

-```

-

-You may want to monitor new additions of a specific function call, for example a deprecated function or a function that introduces a security concern. This query will notify you whenever a new addition of `Sprintf` is added to the `sourcegraph/sourcegraph` repository. This query selects all diff additions marked as "+". If a call of `Sprintf` is both added and removed from a file, this query will still notify due to the addition.

-

-

-

-

-# Slack notifications for code monitors

-

-You can set up [code monitors](/code_monitoring) to send notifications about new matching search results to Slack channels.

-

-## Requirements

-

-- You must have permission to create apps in your organization's Slack workspace.

-

-## Usage

-

-1. In Sourcegraph, click on the "Code Monitoring" nav item at the top of the page.

-1. Create a new code monitor or edit an existing monitor by clicking on the "Edit" button next to it.

-1. Go through the standard steps for a code monitor (if it's a new one) and select the action **Send Slack message to channel**.

-1. Paste your webhook URL into the "Webhook URL" field. (See "[Creating a Slack incoming webhook URL](#creating-a-slack-incoming-webhook-url)" below for detailed instructions.)

-1. Click on the "Continue" button, and then the "Save" button.

-

-### Creating a Slack incoming webhook URL

-

-1. Go to https://api.slack.com/apps and sign in to your Slack account if necessary.

-1. Click on the "Create an app" button.

-1. Create your app "From scratch".

-1. Give your app a name and select the workplace you want notifications sent to.

-

-1. Once your app is created, click on the "Incoming Webhooks" in the sidebar, under "Features".

-1. Click the toggle button to activate incoming webhooks.

-1. Scroll to the bottom of the page and click on "Add New Webhook to Workspace".

-1. Select the channel you want notifications sent to, then click on the "Allow" button.

-1. Your webhook URL is now created! Click the copy button to copy it to your clipboard.

-

-

-

-

-

-# How-tos

-

-* [Starting points](/code_monitoring/how-tos/starting_points)

-* [Setting up Slack notifications](/code_monitoring/how-tos/slack)

-* [Setting up Webhook notifications](/code_monitoring/how-tos/webhook)

-

-

-

-

-# Explanations

-

-* [Core concepts](/code_monitoring/explanations/core_concepts)

-* [Best practices](/code_monitoring/explanations/best_practices)

-

-

-

-

-# Core concepts

-

-Code monitors allow you to keep track of and get notified about changes in your code. Some use cases for code monitors include getting notifications for potential secrets, anti-patterns, or common typos committed to your codebase.

-

-Code monitors are made up of two main elements: **Triggers** and **Actions**.

-

-## Triggers

-

-A _trigger_ is an event which causes execution of an action. Currently, code monitoring supports one kind of trigger: "When new search results are detected" for a particular search query. When creating a code monitor, users will be asked to specify a query as part of the trigger.

-

-Sourcegraph will run the search query over every new commit for the searched repositories, and when new results for the query are detected, a trigger event is emitted. In response to the trigger event, any _actions_ attached to the code monitor will be executed.

-

-**Query requirements**

-

-A query used in a "When new search results are detected" trigger must be a diff or commit search. In other words, the query must contain `type:commit` or `type:diff`. This allows Sourcegraph to detect new search results periodically.

-

-## Actions

-

-An _action_ is executed in response to a trigger event. Currently, code monitoring supports three different actions:

-

-* Sending a notification email to the owner of the code monitor

-* Sending a Slack message to a preconfigured channel (Beta)

-* Sending a webhook event to an endpoint of your choosing (Beta)

+

-## Current flow

+### Current flow

To put it all together, a code monitor has a flow similar to the following:

A user creates a code monitor, which consists of:

- * a name for the monitor

- * a trigger, which consists of a search query to run periodically,

- * and an action, which is sending an email, sending a Slack message, or sending a webhook event

+- a name for the monitor

+- a trigger, which consists of a search query to run periodically,

+- and an action, which is sending an email, sending a Slack message, or sending a webhook event

Sourcegraph runs the query periodically over new commits. When new results are detected, a notification will be sent with the configured action. It will either contain a link to the search that provided new results, or if the "Include results" setting is enabled, it will include the result contents.
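
The flow above can be modeled in a few lines. This is an illustrative sketch, not Sourcegraph's implementation: `search_new_commits` stands in for the search that runs over commits since the previous run, `INSTANCE_URL` is a placeholder, and the action is any callable that delivers the notification text (email, Slack, or webhook).

```python
from dataclasses import dataclass
from typing import Callable, List, Optional
from urllib.parse import quote

# Placeholder instance URL, used only to build the "view results" link.
INSTANCE_URL = "https://sourcegraph.example.com"


@dataclass
class CodeMonitor:
    """Illustrative model of a code monitor: a name, a trigger query, and an action."""
    name: str
    query: str                     # trigger: search query run over new commits
    action: Callable[[str], None]  # e.g. send an email, Slack message, or webhook
    include_results: bool = False  # mirrors the "Include results" setting

    def __post_init__(self) -> None:
        # Trigger queries must be commit or diff searches.
        if "type:commit" not in self.query and "type:diff" not in self.query:
            raise ValueError("trigger query must contain type:commit or type:diff")


def notification_text(monitor: CodeMonitor, new_results: List[str]) -> str:
    """Either the result contents (if enabled) or a link to the search."""
    if monitor.include_results:
        return f"{monitor.name}: " + "\n".join(new_results)
    return f"{monitor.name}: {INSTANCE_URL}/search?q={quote(monitor.query)}"


def run_once(monitor: CodeMonitor,
             search_new_commits: Callable[[str], List[str]]) -> Optional[str]:
    """One periodic run: search only new commits, and notify if anything matched."""
    new_results = search_new_commits(monitor.query)
    if not new_results:
        return None
    text = notification_text(monitor, new_results)
    monitor.action(text)
    return text
```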

-

-

-

-# Best practices

+## Best practices

There are some best practices we recommend when creating code monitors.

-## Privacy and visibility

+### Privacy and visibility

-### Do not include confidential information in monitor names

+#### Do not include confidential information in monitor names

Every code monitor has a name that will be shown wherever the monitor is referenced. In notification actions this name is likely to be the only information about the event, so it’s important for identifying what was triggered, but also has to be “safe” to expose in plain text emails.

-### Do not include results when the notification destination is untrusted

+#### Do not include results when the notification destination is untrusted

Each code monitor action has the ability to include the result contents when sending a notification. This is often convenient because it lets you immediately see which results triggered the notification. However, because the result contents include the code that matched the search query, they may contain sensitive information. Care should be taken to only send result contents if the destination is secure.

For example, if sending the results to a Slack channel, every user that can view that channel will also be able to view the notification messages. The channel should be properly restricted to users who should be able to view that code.

-## Scale

+### Scale

Code monitors have been designed to be performant even for large Sourcegraph instances. There are no hard limits on the number of monitors or the volume of code monitored. However, depending on a number of factors such as the number of code monitors, the number of repos monitored, the frequency of commits, and the resources allocated to your instance, it's still possible to hit soft limits. If this happens, your code monitor will continue to work reliably, but it may execute more infrequently.

@@ -10464,7 +10389,7 @@ Because code insights historical search defaults to `fork:yes` and `archived:yes

All repositories in a historical search are unindexed, but a manual Sourcegraph search only includes indexed repositories. It's possible your manual searches are missing results from unindexed repositories.

-To investigate this, one can compare the list of repositories in the manual search (use a `select:repository` filter) with the list of repositories in the insight `series_points` database table. To see why a repository may not be indexing, refer to [this guide](/admin/troubleshooting#sourcegraph-is-not-returning-results-from-a-repository-unless-repo-is-included).

+To investigate this, one can compare the list of repositories in the manual search (use a `select:repository` filter) with the list of repositories in the insight `series_points` database table. To see why a repository may not be indexing, refer to [this guide](/admin/faq#sourcegraph-is-not-returning-results-from-a-repository-unless-repo-is-included).

## If the chart data point shows *lower* counts than a manual search

@@ -16103,25 +16028,35 @@ If the repository contains both a `lsif-java.json` file as well as `*.java`, `*.

-# Private Resources on on-prem data center via Sourcegraph Connect agent

+# Private Resources in On-Prem Data Centers via Sourcegraph Connect Agent

-This feature is in the Experimental stage. Please contact Sourcegraph directly via [preferred contact method](https://about.sourcegraph.com/contact) for more information.

+This feature is in the Experimental stage. [Contact us](https://about.sourcegraph.com/contact) for more information.

-As part of the [Enterprise tier](https://sourcegraph.com/pricing), Sourcegraph Cloud supports connecting private resources on any on-prem private network by running Sourcegraph Connect tunnel agent in customer infrastructure.

+As part of the [Enterprise tier](https://sourcegraph.com/pricing), Sourcegraph Cloud supports connecting to private code hosts and artifact registries in the customer's network by deploying the Sourcegraph Connect tunnel agent inside that network.

## How it works

-Sourcegraph will set up a tunnel server in a customer dedicated GCP project. Customer will start the tunnel agent provided by Sourcegraph with the provided credential. After start, the agent will authenticate and establish a secure connection with Sourcegraph tunnel server.

+Sourcegraph Connect consists of three components:

+

+### Tunnel Clients

+

+Forward proxy clients that let the Sourcegraph Cloud instance's containers reach the customer's private code hosts and artifact registries through the tunnel server.

-Sourcegraph Connect consists of three major components:

+Managed by Sourcegraph, and deployed in the customer's Sourcegraph Cloud instance's VPC.

-Tunnel agent: deployed inside the customer network, which uses its own identity and encrypts traffic between the customer code host and client. Agent can only communicate with permitted customer code hosts inside the customer network. Only agents are allowed to establish secure connections with tunnel server, the server can only accept connections if agent identity is approved.

+### Tunnel Server

-Tunnel server: a centralized broker between client and agent managed by Sourcegraph. Its purpose is to set up mTLS, proxy encrypted traffic between clients and agents and enforce ACL.

+The broker between agents and clients. It authenticates agents and clients, enforces ACLs, sets up mTLS, and proxies encrypted traffic between them.

-Tunnel client: forward proxy clients managed by sourcegraph. Every client has its own identity and it cannot establish a direct connection with the customer agent, and has to go through tunnel server.

+Managed by Sourcegraph, and deployed in the customer's Sourcegraph Cloud instance's VPC.

-[link](https://link.excalidraw.com/readonly/453uvY8infI8wskSecGJ)

+### Tunnel Agents

+

+Deployed by the customer inside their network, agents proxy and encrypt traffic between the customer's private resources and the Sourcegraph Cloud tunnel clients.

+

+Each agent has its own identity. Using the credentials provided to the customer during deployment, the agent authenticates and establishes a secure connection with the tunnel server. Only agents are allowed to establish secure connections with the tunnel server, and the server only accepts a connection if the agent's identity is approved.

+

+Agents can only communicate with permitted code hosts and artifact registries.

+

+[Diagram link](https://link.excalidraw.com/readonly/453uvY8infI8wskSecGJ)

## Steps

### Initiate the process

-Customer should reach out to their account manager to initiate the process. The account manager will work with the customer to collect the required information and initiate the process, including but not limited to:

+The customer reaches out to their account manager to request that this feature be enabled on their Sourcegraph Cloud instance.

-- The DNS name of the private code host, e.g. `gitlab.internal.company.net` or private artifact registry, e.g. `artifactory.internal.company.net`.

-- The port of the private code host, e.g., `443`, `80`, `22`.

-- The type of TLS certificate used by the private resource: either self-signed by an internal private CA or issued by a public CA.

+The account manager collects the required information from the customer, including but not limited to:

-Finally, Sourcegraph will provide the following:

+- The DNS names of the needed private resources (e.g. `gitlab.internal.company.net`, `artifactory.internal.company.net`)

+- The ports of the private resources (e.g. `443`, `80`, `22`)

+- The type of TLS certificates used by the private resources (e.g. self-signed, internal PKI, or issued by a public CA)

-- Instruction to run the agent along with credentials, and endpoint to allowlist egress traffic if needed.

+Sourcegraph provides:

+- The instructions, config file, and credentials to run the agent

+- The tunnel server's static public IPs and ports

### Create the connection

-Customer can follow the provided instructions and install the tunnel agent in the private network. At a high level:

+The customer installs the agent in their private network, following the instructions provided. At a high level:

-- Permit egress to the internet to a set of static IP addresses and corresponding ports to be provided by Sourcegraph.

-- Permit egress to the private resources at the given port.

-- Run the tunnel agent binary or docker images with the provided config files and credentials.

+- Configure internet egress to the provided tunnel server's static public IPs and ports

+- Configure intranet egress to the needed private resources

+- Deploy the tunnel agent via Docker container or binary, with the provided config file and credentials

-### Create the code host connection

+### Configure the code host connection

-Once the connection to private code host is established, the customer can create the [code host connection](/admin/code_hosts/) on their Sourcegraph Cloud instance.

+Once the tunnel is established between the agent and server, the customer can configure the [code host connection](/admin/code_hosts/) on their Sourcegraph Cloud instance.

## FAQ

+### Why TCP over gRPC?

+

+The tunnel between the client and agent is built using TCP over gRPC. gRPC is a high-performance, battle-tested framework with built-in support for mTLS, which provides a trusted, secure connection. TCP and HTTP/2 are widely supported in most customer environments. Compared to traditional VPN solutions, such as OpenVPN, IPSec, and SSH over bastion hosts, gRPC allows us to design our own protocol, and its programmable interface allows us to implement advanced features, such as fine-grained access control per connection and audit logging with rich metadata.

+

### How are connections encrypted? Can anyone else inspect the traffic?

-Connections between the tunnel agent inside customer network and a tunnel server inside customer dedicated Sourcegraph GCP VPC use mTLS. Both agents, server and Sourcegraph clients have their own certificates and encrypt/decrypt traffic over TCP. mTLS enforce that both the client and the agent has to have a private key and present valid signed certificate from a trusted CA, which is not shared and this protects from [on-paths and spoofing attacks](https://www.cloudflare.com/en-gb/learning/access-management/what-is-mutual-tls/).

+Tunnel connections use mTLS between the agent in the customer's network and the clients in the customer's Sourcegraph Cloud instance's VPC. Agents, clients, and the server each have their own certificates and encrypt / decrypt traffic over TCP. mTLS requires agents and clients to each hold a private key, which is never shared, and to present a valid certificate signed by a trusted CA. This protects customers and Sourcegraph from [on-path and spoofing attacks](https://www.cloudflare.com/en-gb/learning/access-management/what-is-mutual-tls/).
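
What makes this "mutual" is that both ends must present a CA-signed certificate, not just the server. The tunnel itself is built on gRPC, so the following is not its code. It is a minimal sketch using Python's `ssl` module to show which settings express that requirement; the file path parameters are hypothetical placeholders for a certificate, its private key, and the trusted CA bundle.

```python
import ssl
from typing import Optional


def mtls_server_context(cert_file: Optional[str] = None,
                        key_file: Optional[str] = None,
                        ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Server-side TLS context that requires a client certificate (mutual TLS)."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # Reject any peer that does not present a certificate signed by a trusted CA.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        ctx.load_cert_chain(cert_file, key_file)  # this side's own identity
    if ca_file:
        ctx.load_verify_locations(ca_file)        # CA(s) trusted to sign peer certs
    return ctx


def mtls_client_context(cert_file: Optional[str] = None,
                        key_file: Optional[str] = None,
                        ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Client-side context: verifies the server and presents its own certificate."""
    # PROTOCOL_TLS_CLIENT enables CERT_REQUIRED and hostname checking by default.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if cert_file and key_file:
        ctx.load_cert_chain(cert_file, key_file)
    if ca_file:
        ctx.load_verify_locations(ca_file)
    return ctx
```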

### How do you authenticate requests?

-Both tunnel clients and agents are assigned an identity corresponding to a GCP Service Account, and they are provided credentials to prove such identity. For tunnel agents, a Service Account key is distributed to the customer. For tunnel clients, it will utilize Workload Identity to prove its identity. They use them to authenticate against tunnel server by sending signed JWT tokens and public key. JWT token contains information about GCP service account credential public key required to validate signature and confirm identity of requestor. The server will then sign the requestor public key and respond with a signed certificate containing GCP Service Account email as a Subject Alternative Name (SAN).

+Both tunnel agents and clients are assigned an identity corresponding to a GCP Service Account, and they are provided credentials to prove this identity. Agents use the Service Account key provided to the customer during deployment, and clients use Workload Identity. They use these credentials to authenticate to the tunnel server by sending a signed JWT and a public key. The JWT identifies the GCP Service Account credential whose public key the server uses to validate the JWT's signature and confirm the requestor's identity. The server then signs the requestor's public key and responds with a signed certificate containing the GCP Service Account email as a Subject Alternative Name (SAN).

-Finally, if the customer NAT Gateway/Exit Gateway has stable CIDRs, we can provision firewall rules to restrict access to the tunnel server from the provided IP ranges only for an added layer of security.

+For an added layer of security, if the customer network's NAT / internet gateway uses public IPs in a stable CIDR range, Sourcegraph can provision firewall rules to restrict access to the tunnel server from the provided IP ranges.

-### How do you enforce authorization to restrict what requests can reach the private code host?

+### How do you enforce authorization to restrict which requests can reach private resources?

-The tunnel server is configured with ACLs. With mTLS every entity in the network has its own identity. The client's identity is used as a source for accessing customer private code hosts, while the agent's identity is used for destination. Tunnel server ensures that only clients with proven identity can communicate with customer tunnel agents.

+With mTLS, every entity in the network has its own identity. The tunnel server is configured with ACLs, using the client's identity as the source, and the agent's identity as the destination. This ensures only clients with a proven identity can communicate with agents.
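
To make the source/destination model concrete, here is a hypothetical sketch of such an ACL check. It assumes, as described above, that each peer's certificate carries its GCP Service Account email as a SAN, and reads that email from the certificate dictionary format returned by Python's `ssl.SSLSocket.getpeercert()`. The ACL representation and the example identities are made up for illustration.

```python
from typing import Dict, FrozenSet, Optional, Tuple

# Hypothetical ACL: allowed (client identity, agent identity) pairs, where each
# identity is a GCP Service Account email carried in a certificate SAN.
Acl = FrozenSet[Tuple[str, str]]


def identity_from_peer_cert(peer_cert: Dict) -> Optional[str]:
    """Return the first email SAN from a getpeercert()-style dictionary."""
    for kind, value in peer_cert.get("subjectAltName", ()):
        if kind == "email":
            return value
    return None


def is_allowed(acl: Acl, client_cert: Dict, agent_cert: Dict) -> bool:
    """Forward traffic only if both identities are proven and the pair is allowed."""
    client = identity_from_peer_cert(client_cert)  # source
    agent = identity_from_peer_cert(agent_cert)    # destination
    if client is None or agent is None:
        return False
    return (client, agent) in acl
```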

-### Do you rotate the encryption keys?

+### How do you manage keys and certificates?

-Encryption keys are short-lived and both tunnel agents and clients have to refresh certificates every 24h. The customer may also manually rotate it by restarting the tunnel agent.

+We utilize GCP Certificate Authority Service (CAS), a managed Public Key Infrastructure (PKI) service. It is responsible for the storage of root and intermediate CA signing keys, and the signing of client certificates. Access to GCP CAS is governed by GCP IAM, and only necessary individuals and services can access CAS, with audit trails in GCP Logging.

-### How do you manage keys or certificates?

+The TLS private keys in the agents and clients only exist in memory, and are never transmitted or shared. Only the public key is sent to the tunnel server, which uses it to issue the signed certificate needed to establish the mTLS connection.

-We utilize GCP Certificate Authority Service (CAS), a managed Public Key Infrastructure (PKI) service. It is responsible for the storage of all signing keys (e.g., root CAs, immediate CAs), and the signing of client certificates. Access to GCP CAS is governed by GCP IAM service and only necessary services or individuals will have access to the service with audit trails in GCP Logging.

+### How often do you rotate the encryption keys?

-The TLS private key on the tunnel agent or tunnel clients only exist in memory, and are never shared with other parties. Only the public key is sent to the tunnel server to issue a signed certificate to establish mTLS connection.

+Encryption keys are short-lived, and both tunnel agents and clients refresh their certificates every 24h. The customer may also manually rotate the agent's certificate by restarting the agent.

### How do you audit access?

-Tunnel server will log all critical operations with sufficient metadata to identify the requester to GCP Logging with a default 30-day retention policy. We will also be monitoring unauthorized access events to watch out for potential attackers.

+The tunnel server logs operations to GCP Logging, with sufficient metadata to identify the requester, and a 30-day retention policy. We also monitor unauthorized access events to watch for potential attacks.

-### Why TCP over gRPC?

+### What if an attacker gains access to the Sourcegraph Cloud instance?

-The tunnel is built using TCP over gRPC. gRPC is a high-performant and battle-tested framework, e.g., built-in support for mTLS for a trusted secure connection. We believe TCP and HTTP/2 are widely supported in majority of environments. Moreover, the simplicity of having a single endpoint for connection between customer environment and their Cloud instance greatly simplifies the work required for customer IT admin. Compared to traditional VPN solutions, such as OpenVPN, IPSec, and SSH over bastion hosts, gRPC allows us to design our own protocol, and the programmable interface allows us to implement advanced features, e.g., fine-grained access control at a per connection level, audit logging with rich metadata.

+If an attacker gains access to the Sourcegraph Cloud instance's containers, this would be a security breach, and would trigger our Incident Response process. However, we have many controls in place to prevent this from happening: Cloud infrastructure access always requires approval, and the Security team is on call to respond to unexpected usage patterns. Learn more in our [security portal](https://security.sourcegraph.com/).

-### How many agents can a customer start?

+Please reach out to us if you have any specific questions regarding our Cloud security posture. We are happy to provide more detail to address your concerns.

-To obtain high availability, customers can start multiple tunnel agents. Each of the agents will use the same GCP Service Account credentials, authenticate with the tunnel server and establish connection to it. Tunnel client will randomly select an available agent to forward the traffic.

+### How do I configure my network for the agent to work?

-### How does the customer configure the network to make the agent work?

+The tunnel agent needs to connect to the tunnel server and to your private resources. Sourcegraph provides dedicated static IPs and ports for the tunnel server. The customer must configure network egress to allow TCP (HTTP/2) traffic to these IPs and ports.

-The customer tunnel agent has to authenticate and establish connection with the tunnel server. Sourcegraph will provide a single dedicated static IP from customer dedicated GCP VPC which is used to connect with the tunnel server. Customer has to configure network egress to allow TCP (HTTP/2) traffic access to this static IP.

+### How can I restrict access to my private resources?

-### How can I restrict access to my private code host connection?

+The customer has full control of their network where they deploy the tunnel agent, and can configure, monitor, and terminate connections at will.

-The customer has full control over the tunnel agent configuration and they can terminate the connection at any time.

-What if the attacker gains access to the frontend?

+We recommend implementing an allowlist to restrict the agent's egress traffic to the IP addresses provided by Sourcegraph and to the specific private resources your Sourcegraph Cloud instance needs to access, and configuring your firewall to alert you when traffic is denied by this allowlist.

-In the event of an attacker gaining access to the Sourcegraph containers, we consider this to be a security breach and we have Incident response processes in place that we will follow. However, we have many controls in place to prevent this from happening where Cloud infrastructure access always requires approval and the Security team is on-call for unexpected usage patterns. You may learn more from our [Security Portal](https://security.sourcegraph.com/).

+If your code hosts or registries use DNS names, the agent needs access to the DNS server configured on its host.
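
Enforce this allowlist in your firewall or network policy. Purely to make the intended rule set concrete, the sketch below expresses the same decision in Python with the standard `ipaddress` module. All addresses and ports are placeholders (203.0.113.0/24 is a documentation range); substitute the tunnel server IPs and ports provided by Sourcegraph, your private resources, and the DNS server used by the agent's host.

```python
import ipaddress
from typing import List, Tuple

# Placeholder allowlist of (network, port) pairs. Replace with real values.
ALLOWLIST: List[Tuple[str, int]] = [
    ("203.0.113.10/32", 443),  # tunnel server IP/port provided by Sourcegraph
    ("10.20.0.0/24", 443),     # private code host over HTTPS
    ("10.20.0.0/24", 22),      # private code host over SSH
    ("10.0.0.2/32", 53),       # DNS server configured on the agent's host
]


def egress_allowed(dest_ip: str, dest_port: int,
                   allowlist: List[Tuple[str, int]] = ALLOWLIST) -> bool:
    """Return True if the agent may open a connection to dest_ip:dest_port."""
    ip = ipaddress.ip_address(dest_ip)
    return any(
        ip in ipaddress.ip_network(network) and dest_port == port
        for network, port in allowlist
    )
```

Everything not matched is denied, which is the behavior you would want the firewall to alert on.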

-Please reach out to us if you have any specific questions regarding our Cloud security posture, and we are happy to provide more detail to address your concerns.

+### How can I harden the tunnel agent deployment?

-### How to harden the tunnel agent deployment?

+The tunnel agent is designed and built with a minimal footprint and attack surface, and is scanned for vulnerabilities.

-We recommend using an allowlist to limit the egress traffic of the agent to IP addresses provided by Sourcegraph and specific private resources you would like to permit access. This will prevent the agent to talk to arbitrary services, and reduce the blast radius in the event of a security event.

+You can:

-### How can I audit the data Sourcegraph has access to in my environment?

+- Deploy the agent on a hardened container platform

+- Store the agent credential and config content in a secrets management system and mount these secrets to the container

+- Forward the agent's logs to your log management system

-The tunnel is secured and authenticated by mTLS over gRPC, and everything is encrypted over transit. If a customer is looking to perform an audit, such as TLS inspection, on the connection between the private resources and Sourcegraph Cloud, we recommend only intercepting and inspecting traffic between the tunnel agent and private resources. The connection between the tunnel agent and Sourcegraph Cloud is using a custom protocol, and the decrypted payload has very little value.

+### How can I inspect the agent's traffic, and audit the data the agent is accessing in my environment?

-### Can I use self-signed TLS certificate for my private resources?

+If a customer needs to inspect and audit traffic, such as performing TLS inspection on the connection between the private resources and Sourcegraph Cloud, we recommend inspecting traffic on the connections between the tunnel agent and internal resources, as this traffic uses the protocols and encryption of the internal resources.

+

+The tunnel from the agent to the server is encrypted and authenticated by mTLS over gRPC, and uses a custom protocol, so the decrypted payload isn't usable for traffic inspection.

+

+### Can I use Internal PKI or self-signed TLS certificates for my private resources?

+

+Yes. Please work with your account team to add the public certificate chain of your internal CAs, and / or your private resources' self-signed certificates, under `experimentalFeatures.tls.external.certificates` in your instance's [site configuration](/admin/config/site_config#experimentalFeatures).

+

+### Is this connection highly available?

-Yes. Please work with your account team to add the certificate chain of your internal CA to [site configuration](/admin/config/site_config#experimentalFeatures) at `experimentalFeatures.tls.external.certificates`

+To achieve high availability, customers can run multiple tunnel agents across their network. Each agent uses the same GCP Service Account credentials, authenticates with the tunnel server, and establishes its own connection to it. Tunnel clients randomly select an available agent to forward traffic through.
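
Conceptually, the selection works like the sketch below: among the agents that currently hold a connection to the tunnel server, pick one at random, and fail only if none are connected. The `AgentConnection` type and `pick_agent` function are hypothetical; they illustrate the behavior described above, not the tunnel client's actual code.

```python
import random
from dataclasses import dataclass
from typing import List, Optional

_rng = random.Random()


@dataclass
class AgentConnection:
    """An agent that has authenticated with the tunnel server (illustrative)."""
    agent_id: str
    connected: bool = True


def pick_agent(agents: List[AgentConnection],
               rng: Optional[random.Random] = None) -> Optional[AgentConnection]:
    """Randomly choose one connected agent; return None if all agents are down."""
    available = [a for a in agents if a.connected]
    if not available:
        return None
    return (rng if rng is not None else _rng).choice(available)
```

Because every agent can serve any request, losing one agent only shrinks the pool; traffic keeps flowing as long as at least one agent stays connected.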

@@ -16571,9 +16522,10 @@ As part of this service you will receive a number of benefits from our team, inc

All of Sourcegraph's features are available on Sourcegraph Cloud instances out-of-the-box, including but not limited to:

- [Cody](/cody)

+- [Deep Search](/deep-search)

- [Server-side Batch Changes](/batch-changes/server-side)

- [Precise code navigation powered by auto-indexing](/code-search/code-navigation/auto_indexing)

-- [Code Monitoring](/code_monitoring/) (including [email delivery](#managed-smtp) of notifications)

+- [Code Monitoring](/code_monitoring/)

- [Code Insights](/code_insights/)

### Access restrictions

@@ -16778,7 +16730,7 @@ Sourcegraph Cloud instances are single-tenant, limiting exposure to outages and

### Is data safe with Sourcegraph Cloud?

-Sourcegraph Cloud utilizes a single-tenant architecture. Each customer's data is isolated and stored in a dedicated GCP project. Data is [encrypted in transit](https://cloud.google.com/docs/security/encryption-in-transit) and [at rest](https://cloud.google.com/docs/security/encryption/default-encryption) and is backed up daily. Such data includes but is not limited to, customer source code, repository metadata, code host connection configuration, and user profile. Sourcegraph Cloud also has [4 supported regions](#multiple-region-availability) on GCP to meet data sovereignty requirements.

+Sourcegraph Cloud utilizes a single-tenant architecture. Each customer's data is isolated and stored in a dedicated GCP project. Data is [encrypted in transit](https://cloud.google.com/docs/security/encryption-in-transit) and [at rest](https://cloud.google.com/docs/security/encryption/default-encryption) and is backed up daily. Such data includes but is not limited to, customer source code, repository metadata, code host connection configuration, and user profile. Sourcegraph Cloud also has [5 supported regions](#multiple-region-availability) on GCP to meet data sovereignty requirements.

Sourcegraph continuously monitors Cloud instances for security vulnerability using manual reviews and automated tools. Third-party auditors regularly perform testing to ensure maximum protection against vulnerabilities and are automatically upgraded to fix any vulnerability in third-party dependencies. In addition, GCP’s managed offering regularly patches any vulnerability in the underlying infrastructure. Any infrastructure changes must pass security checks, which are tested against industry-standard controls.

@@ -21262,6 +21214,43 @@ curl \

+

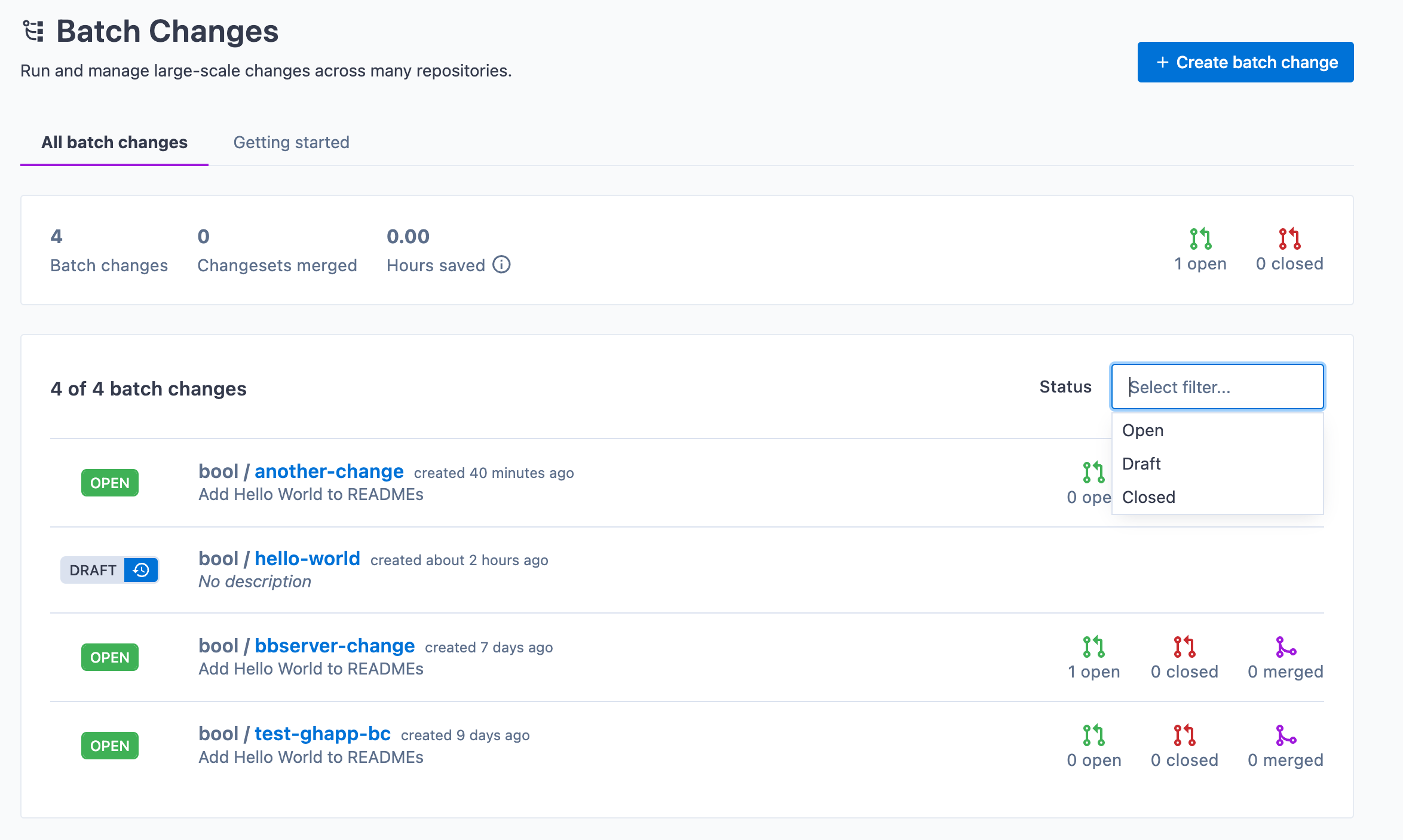

+# Viewing Batch Changes

+

+Learn how to view, search, and filter your Batch Changes.

+

+## Viewing batch changes

+

+You can view a list of Batch Changes by clicking the **Batch Changes** icon in the top navigation bar.

+

+

+

+### Title-based search

+

+You can search through your previously created batch changes by title. This search experience makes it easier to find the batch change you're looking for, especially when you have large volumes of batch changes to monitor.

+

+Start typing the keywords that match the batch change's title, and you will see a list of relevant results.

+

+

+

+## Filtering Batch Changes

+

+You can also use filters to switch between showing all open or closed Batch Changes.

+

+

+

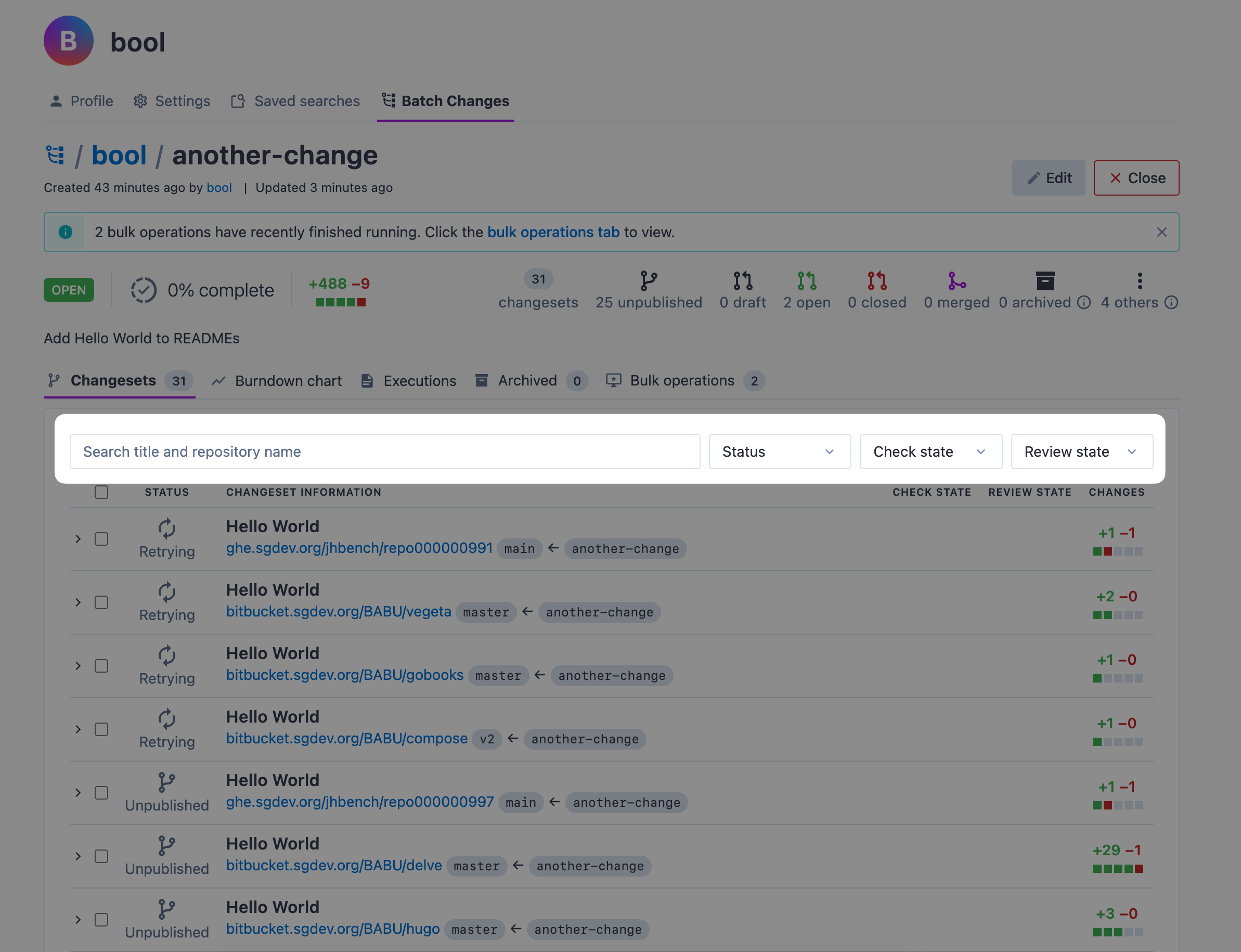

+## Filtering changesets

+

+When viewing a batch change, you can search and filter its list of changesets with the controls at the top of the list.

+

+

+

+## Administration

+

+Once a batch change is open, any Sourcegraph user can view it. However, the namespace determines who can administer it, such as editing or deleting it. When a batch change is created in a user namespace, only that user (and site admins) can administer it. When a batch change is created in an organization namespace, all members of that organization (and site admins) can administer it.

+

+

+

# Updating Go Import Statements using Comby

@@ -23817,14 +23806,91 @@ If the repository containing the workspaces is really large and it's not feasibl

Batch Changes are created by writing a [batch spec](/batch-changes/batch-spec-yaml-reference) and executing that batch spec with the [Sourcegraph CLI](https://github.com/sourcegraph/src-cli) `src`.

+There are two ways of creating a batch change:

+

+1. On your Sourcegraph instance, with [server-side execution](#on-your-sourcegraph-instance)

+2. On your local machine, with the [Sourcegraph CLI](#using-the-sourcegraph-cli)

+

+## On your Sourcegraph instance

+

+Here, you'll learn how to create and run a batch change via server-side execution.

+

+To get started, click the **Batch Changes** icon in the top navigation or navigate to `/batch-changes`.

+

+### Create a batch change

+

+Click the **Create batch change** button on the Batch Changes page, or go to `/batch-changes/create`.

+

+

+

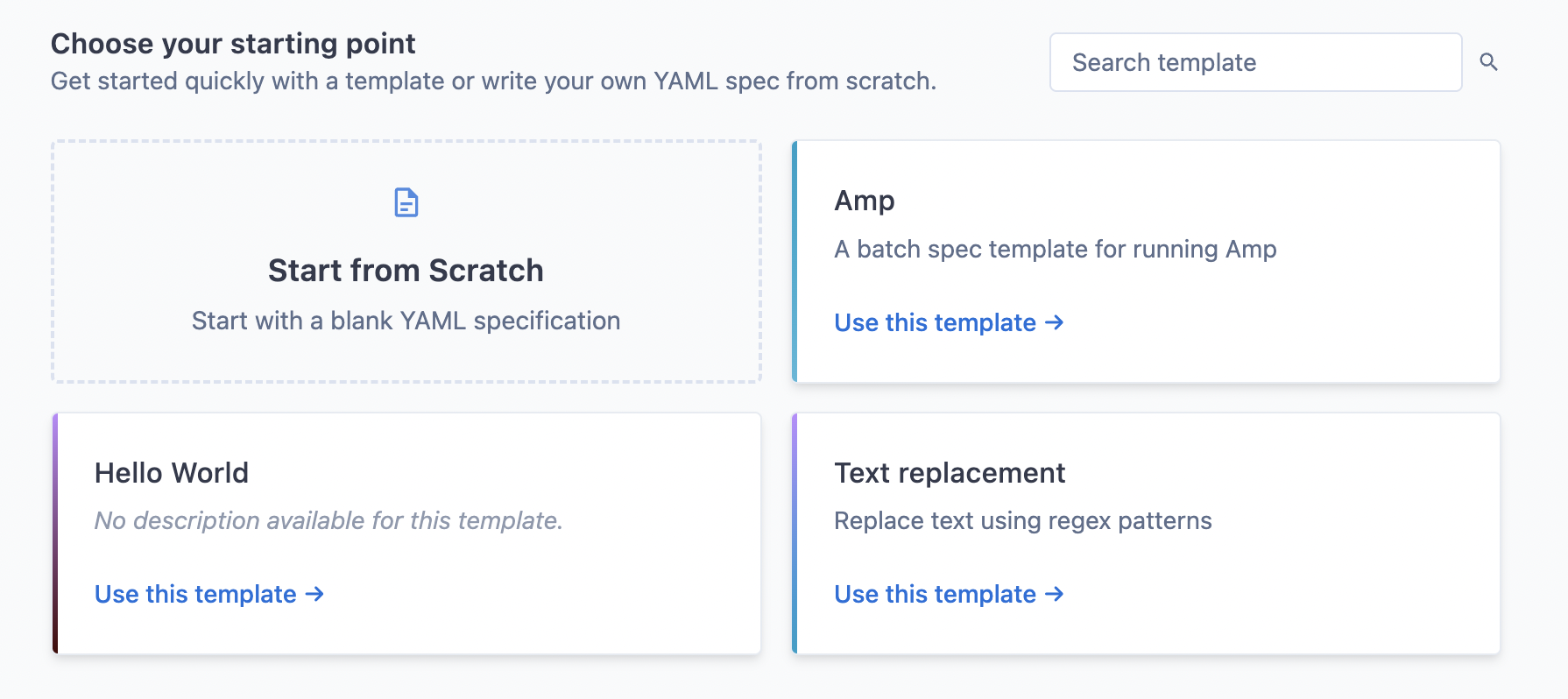

+You will be redirected to a page showing you a list of curated templates.

+

+### Choosing a template

+

+Templates are available in Sourcegraph 6.6 and later.

+

+From the template selection page, you can either:

+

+- **Pick a template** from the list of curated templates that best matches your use case

+- **Click "Start from Scratch"** if you prefer to continue without a template

+

+

+

+Your site admin can curate the list of available templates to match your organization's specific needs and use cases.

+

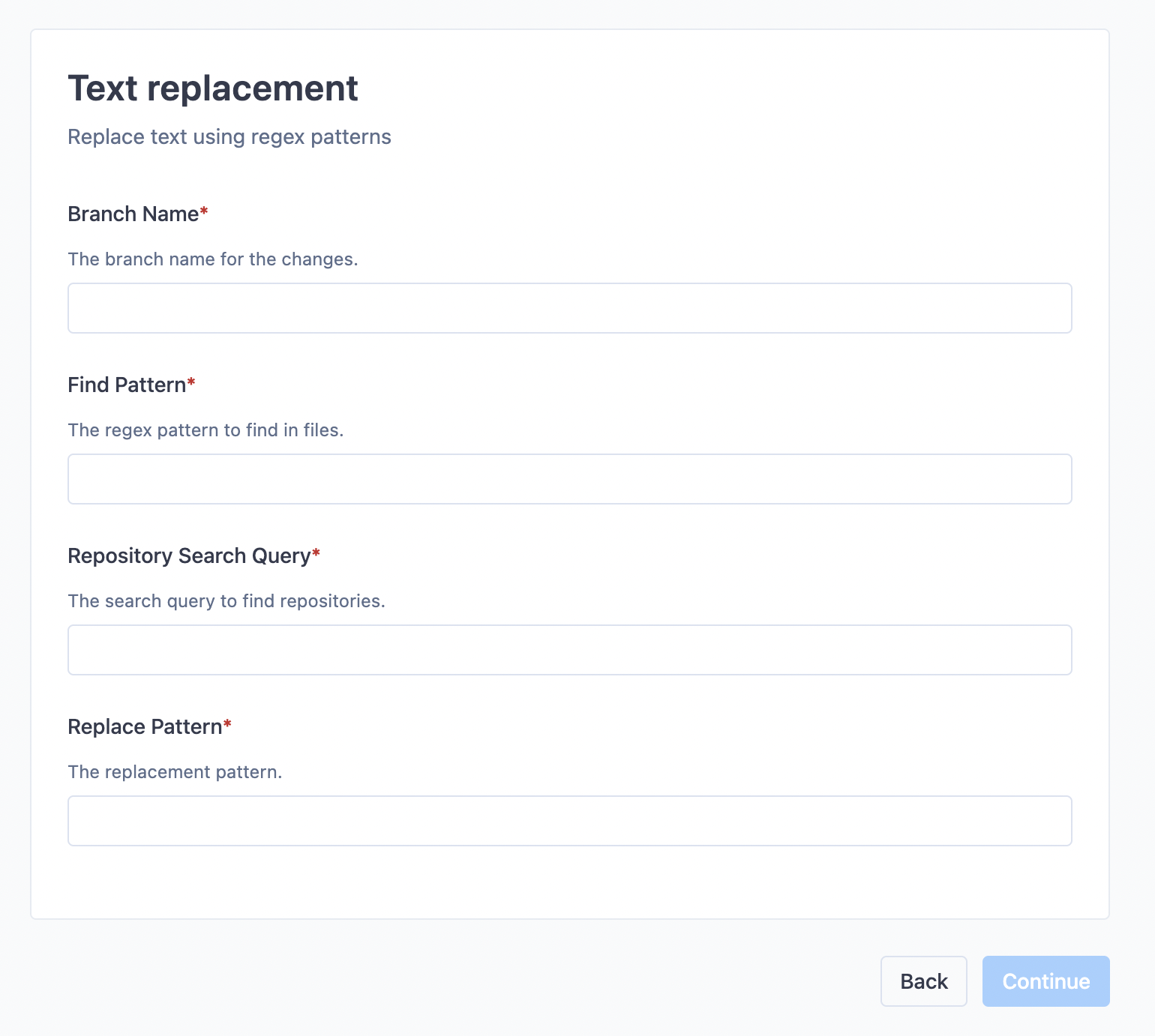

+### Filling out template fields

+

+If you selected a template, you will need to fill out the form fields specific to that template. These fields will customize the batch spec to your specific requirements.

+

+

+

+Each form field is validated against a regular expression. If validation fails, check the field's description to see what kind of value it expects.

+

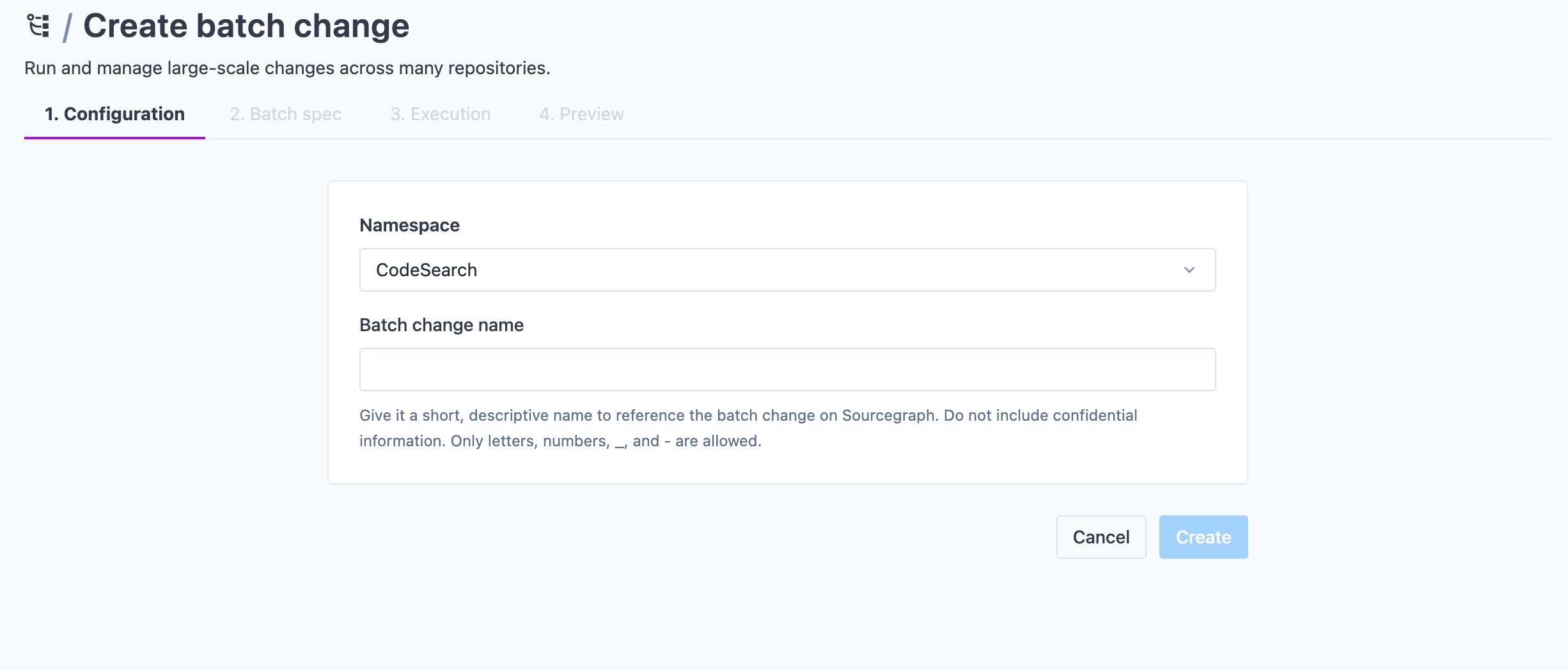

+### Choose a name for your batch change

+

+After you've filled out the template form fields, or clicked "Start from Scratch", you will be prompted to choose a name for your batch change and, optionally, a custom namespace to put it in.

+

+

+

+Once done, click **Create**.

+

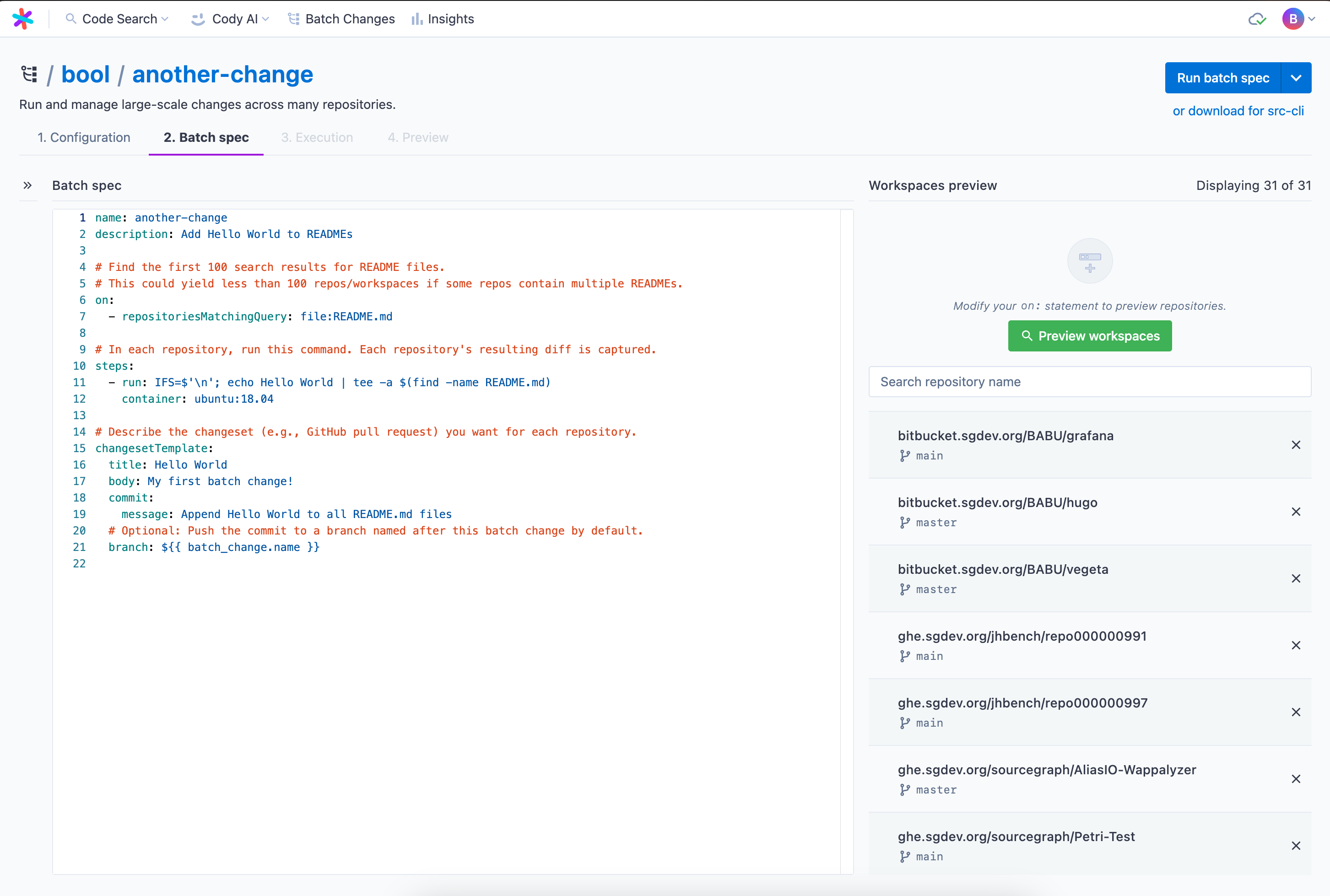

+### Previewing batch spec and workspaces

+

+You can now see the batch spec and run a preview of the affected repositories and workspaces from the right-hand side panel. After resolution, the panel shows all the workspaces in repositories that match the given `on` statements. You can search through them to check that your query matches what you expect before starting execution. You can also exclude individual workspaces from this list.
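+For illustration, here is a minimal sketch of a batch spec whose `on` entries the preview would resolve into workspaces. The search query, repository name, branch name, and container image are assumptions chosen for the example; substitute your own.
+
+```yaml
+name: hello-world
+description: Add Hello World to READMEs
+
+# Repositories to run against; the preview resolves these entries into workspaces.
+on:
+  # Repositories returned by this (illustrative) search query.
+  - repositoriesMatchingQuery: file:README.md count:10
+  # A single repository by name (hypothetical repository).
+  - repository: github.com/example/my-repo
+
+# Runs in each resolved workspace; the resulting diff is captured per workspace.
+steps:
+  - run: echo Hello World | tee -a README.md
+    container: alpine:3
+
+# Describes the changeset (for example, a pull request) created per repository.
+changesetTemplate:
+  title: Hello World
+  body: My first batch change!
+  branch: hello-world
+  commit:
+    message: Append Hello World to README.md
+  published: false # Do not publish to the code host yet
+```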

+

Batch Changes can also be used on [multiple projects within a monorepo](/batch-changes/creating-changesets-per-project-in-monorepos) by using the `workspaces` key in your batch spec.
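+As a sketch, a `workspaces` entry that treats every directory containing a `package.json` as its own workspace might look like the fragment below. The repository name and the `on` query are hypothetical, and `steps` and `changesetTemplate` are omitted; see the [batch spec reference](/batch-changes/batch-spec-yaml-reference) for the full set of options.
+
+```yaml
+on:
+  # Hypothetical monorepo containing several JavaScript projects.
+  - repository: github.com/example/monorepo
+
+workspaces:
+  # Each directory that contains a package.json becomes a separate workspace.
+  - rootAtLocationOf: package.json
+    # Apply this rule only to repositories matching this name or glob.
+    in: github.com/example/monorepo
+    # Fetch only the workspace directory instead of the whole repository.
+    onlyFetchWorkspace: true
+```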

-There are two ways of creating a batch change:

+The library contains examples that you can insert directly into your batch spec if you need inspiration. Your site admin can manage the library of examples.

+

+

+

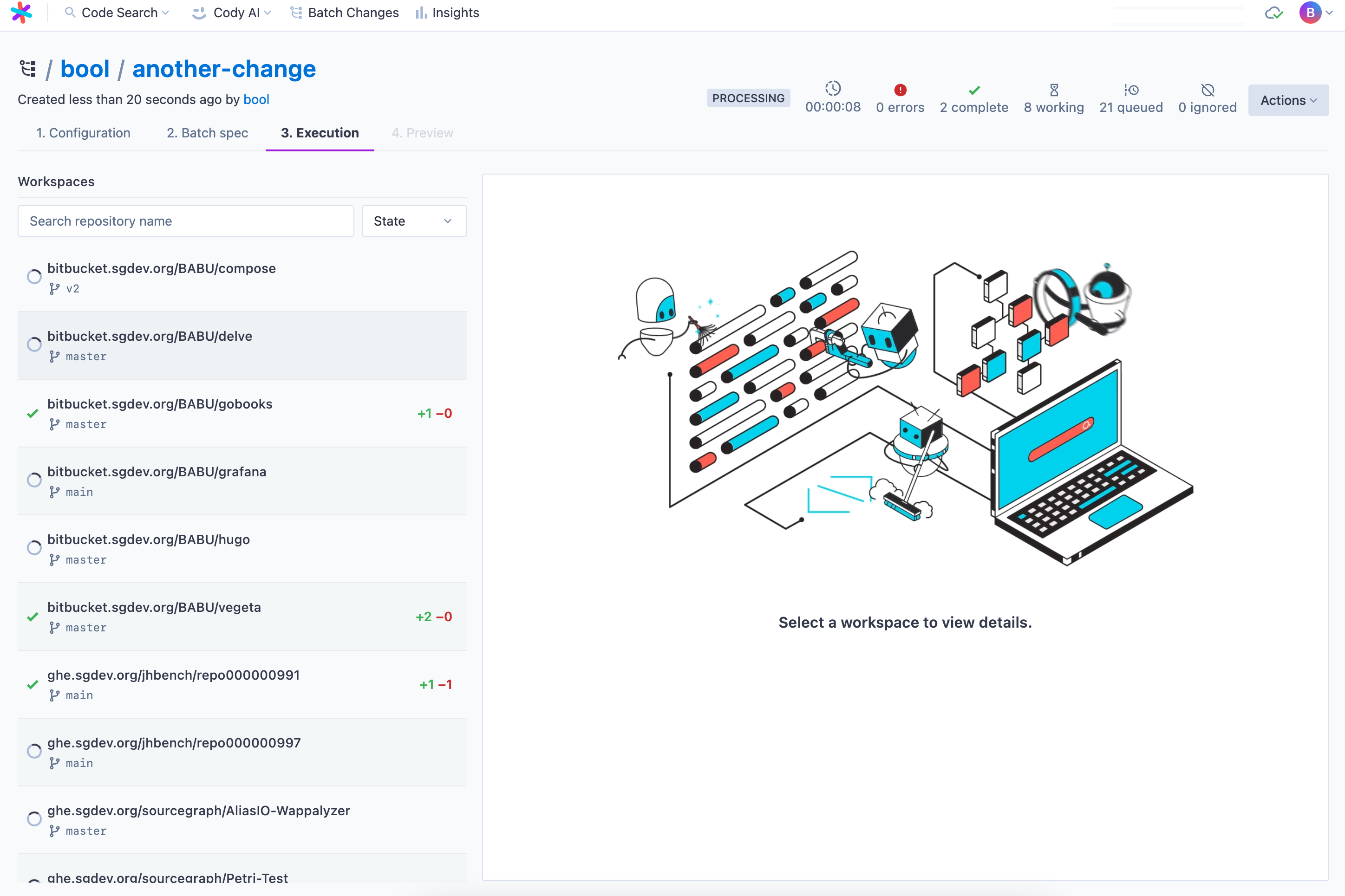

+### Executing your batch spec

+

+When the spec is ready to run, ensure the [preview](/batch-changes/create-a-batch-change#previewing-batch-spec-and-workspaces) is up to date and then click **Run batch spec**. This takes you to the execution screen. On this page, you see:

+

+- Run statistics at the top

+- All the workspaces, including status and diff stat, in the left panel

+- Details for the selected workspace in the right-hand panel, where each step shows:

+ - Logs

+ - Results

+ - Command

+ - Per-step diffs

+ - Output variables

+ - Execution timelines for debugging

+

+Once execution finishes, you can proceed to the batch spec preview, which is the same preview you use for batch changes created with the CLI.
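+The per-step logs, diffs, and output variables shown on the execution screen come from the `steps` in your batch spec. As a hedged sketch, the fragment below defines an output variable in one step and reuses it in the next. The output name `firstLine`, the file names, and the container image are illustrative assumptions.
+
+```yaml
+steps:
+  # Step 1: capture this step's standard output as an output variable.
+  - run: head -n 1 README.md
+    container: alpine:3
+    outputs:
+      # Hypothetical output name; step.stdout holds this step's standard output.
+      firstLine:
+        value: ${{ step.stdout }}
+  # Step 2: reuse the output variable defined by the previous step.
+  - run: echo "${{ outputs.firstLine }}" >> NOTES.md
+    container: alpine:3
+```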

-1. On your local machine, with the [Sourcegraph CLI](#create-a-batch-change-with-the-sourcegraph-cli)

-2. Remotely, with [server-side execution](/batch-changes/server-side)

+

+

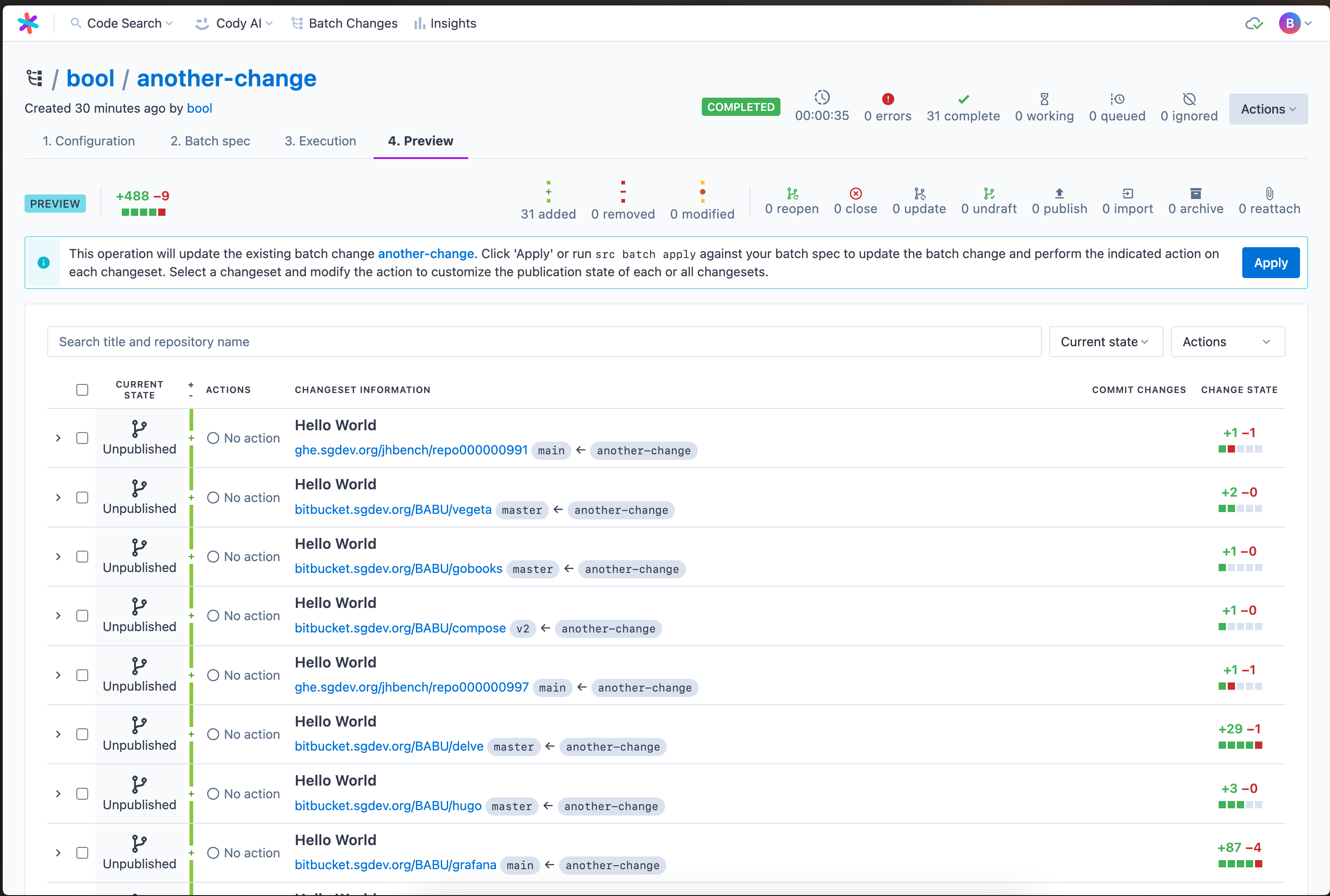

+### Previewing and applying the batch spec

+

+On this page, you can review the proposed changes. Once satisfied, click **Apply**.

+

+Congratulations, you ran your first batch change server-side 🎊

+

+

-## Create a batch change with the Sourcegraph CLI

+## Using the Sourcegraph CLI

This part of the guide will walk you through creating a batch change on your local machine with the Sourcegraph CLI.

@@ -23896,7 +23962,7 @@ src batch preview -f YOUR_BATCH_SPEC.yaml

After you've applied a batch spec, you can [publish changesets](/batch-changes/publishing-changesets) to the code host when you're ready. This will turn the patches into commits, branches, and changesets (such as GitHub pull requests) for others to review and merge.

-You can share the link to your batch change with other users if you want their help. Any user on your Sourcegraph instance can [view it in the batch changes list](/batch-changes/create-a-batch-change#viewing-batch-changes).

+You can share the link to your batch change with other users if you want their help. Any user on your Sourcegraph instance can [view it in the batch changes list](/batch-changes/view-batch-changes).

If a user viewing the batch change lacks read access to a repository in the batch change, they can only see [limited information about the changes to that repository](/batch-changes/permissions-in-batch-changes#repository-permissions-for-batch-changes) (and not the repository name, file paths, or diff).

@@ -23928,91 +23994,6 @@ src batch preview -f your_batch_spec.yaml -namespace

@@ -26900,10 +26881,10 @@ Learn more about how we think about [the ROI of Sourcegraph in our blog](https:/

### Overview metrics

-| **Metric** | **Description** |

-| ------------------------------- | ------------------------------------------------------------------------------------------------------------------------------------ |

-| Percent of code written by Cody | Percentage of code written by Cody out of all code written during the selected time. [Learn more about this metric.](/analytics/pcw) |

-| Lines of code written by Cody | Total lines of code written by Cody during the selected time |

+| **Metric** | **Description** |

+| ---------------------------------- | ------------------------------------------------------------------------------------------------------------------------------------ |

+| Percent of code written by Cody | Percentage of code written by Cody out of all code written during the selected time. [Learn more about this metric.](/analytics/pcw) |

+| Characters of code written by Cody | Total characters of code written by Cody during the selected time |

### User metrics

@@ -27044,9 +27025,9 @@ The Sourcegraph Analytics API is an API that provides programmatic access to you

For Sourcegraph Analytics, you can generate an access token for programmatic access. Tokens are long-lived with an optional expiry and have the same permissions to access instance data as the user who created them.

-### Token management APIs

+### Getting Started

-Token management is currently only available via the Sourcegraph Analytics API. Token management APIs are authenticated via the `cas` session cookie.

+To create an access token, authenticate to the token creation endpoint with your `cas` session cookie. Access tokens are longer-lived than the `cas` cookie, which makes them more suitable for programmatic access to the Sourcegraph Analytics API.

- Sign in to [Sourcegraph Analytics](https://analytics.sourcegraph.com).

- Retrieve your session cookie, `cas`, from your browser's developer tools.

@@ -27433,7 +27414,6 @@ Here is a snapshot of an unhealthy dashboard, where no active instance is runnin

Sourcegraph Validation is currently experimental.

-

## Validate Sourcegraph Installation

Installation validation provides a quick way to check that a Sourcegraph installation functions properly after a fresh install

@@ -27782,126 +27762,6 @@ When that is done, update your DNS records to point to your gateway's external I

-

-# Administration troubleshooting

-

-### Docker Toolbox on Windows: `New state of 'nil' is invalid`

-

-If you are using Docker Toolbox on Windows to run Sourcegraph, you may see an error in the `frontend` log output:

-

-```bash

-frontend |

-frontend |

-frontend |

-frontend | New state of 'nil' is invalid.

-```

-

-After this error, no more `frontend` log output is printed.

-

-This problem is caused by [docker/toolbox#695](https://github.com/docker/toolbox/issues/695#issuecomment-356218801) in Docker Toolbox on Windows. To work around it, set the environment variable `LOGO=false`, as in:

-

-```bash

-docker container run -e LOGO=false ... sourcegraph/server

-```

-

-> WARNING: Running Sourcegraph on Docker Toolbox for Windows is not supported for production deployments.

-

-### Submitting a metrics dump

-

-If you encounter performance or instability issues with Sourcegraph, we may ask you to submit a metrics dump to us. This allows us to inspect the performance and health of various parts of your Sourcegraph instance in the past and can often be the most effective way for us to identify the cause of your issue.

-

-The metrics dump includes non-sensitive aggregate statistics of Sourcegraph like CPU & memory usage, number of successful and error requests between Sourcegraph services, and more. It does NOT contain sensitive information like code, repository names, user names, etc.

-