Significant performance degradation in Kafka JMX metrics on upgrade #7944

Replies: 7 comments 17 replies

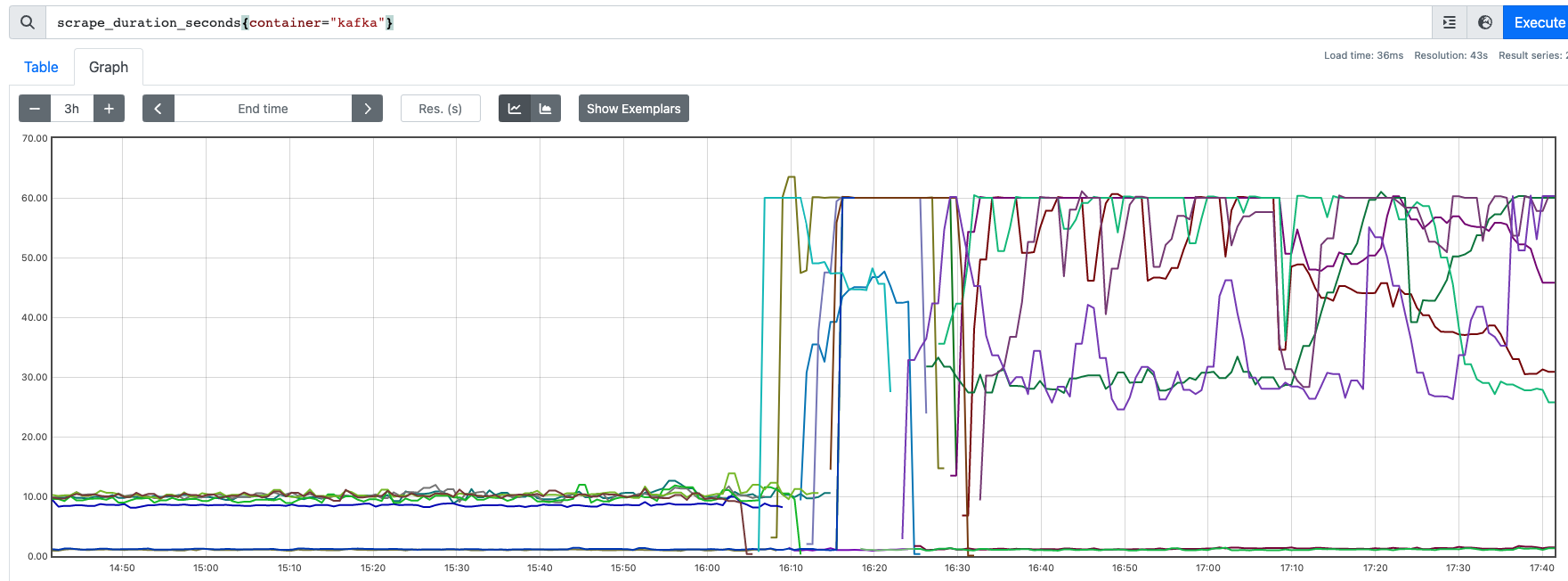

@scholzj apologies, you are right that a legend would have helped. Unfortunately, I can't easily add one retroactively, though I could rerun the test if really needed. To clarify, these are the scrape times in seconds, with each line representing one Kafka broker. No other services (e.g. ZooKeeper) are included. The low values on the left are the brokers running 0.27.1-3.0.0; the high values on the right are 0.32.0-3.3.1. Building with an older JMX Prometheus Exporter sounds like a good test, and I will look into that today. Many thanks.
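One way to get the same per-broker scrape times as numbers rather than a graph is to ask Prometheus for `scrape_duration_seconds` through its HTTP query API (`/api/v1/query`). This is a minimal sketch under assumptions: the Prometheus address and the `job` label value that selects the Kafka brokers are hypothetical and will differ between setups.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical Prometheus address; replace with your own server.
PROMETHEUS_URL = "http://prometheus:9090"


def parse_instant_vector(body: dict) -> dict:
    """Map each target's `instance` label to its value in an instant-query response."""
    if body.get("status") != "success":
        raise RuntimeError(f"Prometheus query failed: {body.get('error')}")
    durations = {}
    for sample in body["data"]["result"]:
        instance = sample["metric"].get("instance", "<unknown>")
        # Each sample value is a [unix_timestamp, "value as string"] pair.
        durations[instance] = float(sample["value"][1])
    return durations


def query_scrape_durations(base_url: str, job: str) -> dict:
    """Return {instance: last scrape duration in seconds} for all targets of `job`."""
    promql = f'scrape_duration_seconds{{job="{job}"}}'
    url = f"{base_url}/api/v1/query?" + urllib.parse.urlencode({"query": promql})
    with urllib.request.urlopen(url, timeout=30) as resp:
        return parse_instant_vector(json.load(resp))
```

For example, `query_scrape_durations(PROMETHEUS_URL, "kafka-brokers")` (where `kafka-brokers` is a hypothetical job name) returns one entry per broker, so the values before and after an upgrade can be tabulated side by side.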

@scholzj thank you very much for your help last week. I didn't go back to an older version of the JMX Prometheus Exporter in the end, but the hint that the metrics were coming from that exporter was very useful. I did some reading of the exporter docs and GitHub issues, and found many reports of people adding …

Just noting that we experienced the same issue when upgrading from Strimzi 0.29.0 with Kafka 3.0.1 to Strimzi 0.33.2 with Kafka 3.3.2 (via Kafka 3.2.0). The difference is not as significant, but this is on a 3-broker cluster with almost no traffic on it. We also see liveness/readiness probe timeouts. I'll also try …

We see the same performance problem after upgrading Strimzi from … I can confirm that the jmx_exporter is the problem. I have built my own image in which I downgraded the Prometheus agent to 0.16.1, and it works with the same scrape time as before. My Dockerfile for the custom image:

Hi @scholzj, does it make sense to ship two versions of the Prometheus agent in a single Docker image (the old one as a stable option, plus the new one) and switch between them via a feature flag?
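The proposal could look roughly like this: both agent jars are shipped in the image, and a flag picks one when the broker's JVM options are assembled. This is a minimal Python sketch of that selection logic only, not a description of how Strimzi's startup scripts actually work; the jar paths and the `JMX_EXPORTER_AGENT` environment variable are hypothetical. It relies on the JMX Prometheus Exporter's `-javaagent:<jar>=<port>:<config>` option format.

```python
import os
from typing import Mapping, Optional

# Hypothetical jar locations for the two bundled exporter versions.
AGENT_JARS = {
    "stable": "/opt/prometheus/jmx_prometheus_javaagent-0.16.1.jar",
    "current": "/opt/prometheus/jmx_prometheus_javaagent-current.jar",
}

# Hypothetical environment variable acting as the feature flag.
FLAG_VAR = "JMX_EXPORTER_AGENT"


def javaagent_option(port: int, config_path: str,
                     env: Optional[Mapping[str, str]] = None) -> str:
    """Build the -javaagent JVM option for the agent selected by the flag.

    Unset or unrecognised flag values fall back to the "current" agent.
    """
    if env is None:
        env = os.environ
    choice = env.get(FLAG_VAR, "current")
    jar = AGENT_JARS.get(choice, AGENT_JARS["current"])
    # The exporter agent takes "<jar>=<port>:<config file>".
    return f"-javaagent:{jar}={port}:{config_path}"
```

Falling back to the default agent for unset or unrecognised values keeps the broker starting if the flag is mistyped; raising an error instead would be the stricter choice.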

Sure, I understand your concern. It seems like there might be a performance issue after upgrading your Kafka clusters. Have you checked whether any specific configurations or settings changed between the versions? Also, have you tried monitoring system resources during these scrapes to see if there is a bottleneck causing the timeouts?

Please use this only for bug reports. For questions or when you need help, you can use GitHub Discussions, our #strimzi Slack channel, or our user mailing list.

Describe the bug

We have recently updated two of our clusters from operator 0.27.1 to 0.32.0, with a Kafka version upgrade from 3.0.0 to 3.3.1. Following the update, we have seen significant performance degradation in the scraping of JMX metrics. Previously, scrapes would average around 10 seconds. Following the upgrade, all scrapes are slower, with many timing out after 60 seconds.
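To check the scrape latency independently of Prometheus, one can time requests against a broker's metrics endpoint directly. This is a minimal Python sketch, assuming the broker exposes the JMX Prometheus Exporter over plain HTTP; the URL below (port 9404, path /metrics) is a hypothetical example of a typical Strimzi setup, and the 60-second default mirrors the timeout described in the report.

```python
import http.client
import statistics
import time
import urllib.request
from typing import List, NamedTuple, Optional

# Hypothetical endpoint: adjust host, port and path to your broker pods.
METRICS_URL = "http://my-cluster-kafka-0.my-cluster-kafka-brokers:9404/metrics"


class ScrapeSummary(NamedTuple):
    mean_s: Optional[float]  # mean duration of successful scrapes
    max_s: Optional[float]   # slowest successful scrape
    failed: int              # scrapes that errored or timed out


def time_scrape(url: str, timeout_s: float = 60.0) -> Optional[float]:
    """Fetch the metrics page once; return elapsed seconds, or None on failure.

    Note: urllib applies timeout_s to each blocking socket operation,
    not to the request as a whole.
    """
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=timeout_s) as resp:
            resp.read()  # read the full body, as a real scrape would
    except (OSError, http.client.HTTPException):
        # URLError, HTTPError and socket timeouts are all OSError subclasses.
        return None
    return time.monotonic() - start


def summarize(samples: List[Optional[float]]) -> ScrapeSummary:
    """Aggregate time_scrape() results into mean, max and failure count."""
    ok = [s for s in samples if s is not None]
    if not ok:
        return ScrapeSummary(None, None, len(samples))
    return ScrapeSummary(statistics.mean(ok), max(ok), len(samples) - len(ok))
```

For example, `summarize([time_scrape(METRICS_URL) for _ in range(5)])` takes five sequential samples; running it against brokers on the old and new versions gives a direct comparison.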

To Reproduce

Steps to reproduce the behavior:

scrape_duration_seconds

Expected behavior

I would expect to see little difference in performance between versions, and certainly not a degradation to the point where scrapes are timing out.

Environment (please complete the following information):

YAML files and logs

YAML Attached

output.yaml.zip
