
README.md (11 additions & 5 deletions)

@@ -4,14 +4,20 @@ An evaluation benchmark 📈 and framework to compare and evolve the quality of


This repository gives developers of LLMs (and other code generation tools) a standardized benchmark and framework to improve real-world usage in the software development domain and provides users of LLMs with metrics and comparisons to check if a given LLM is useful for their tasks.


-

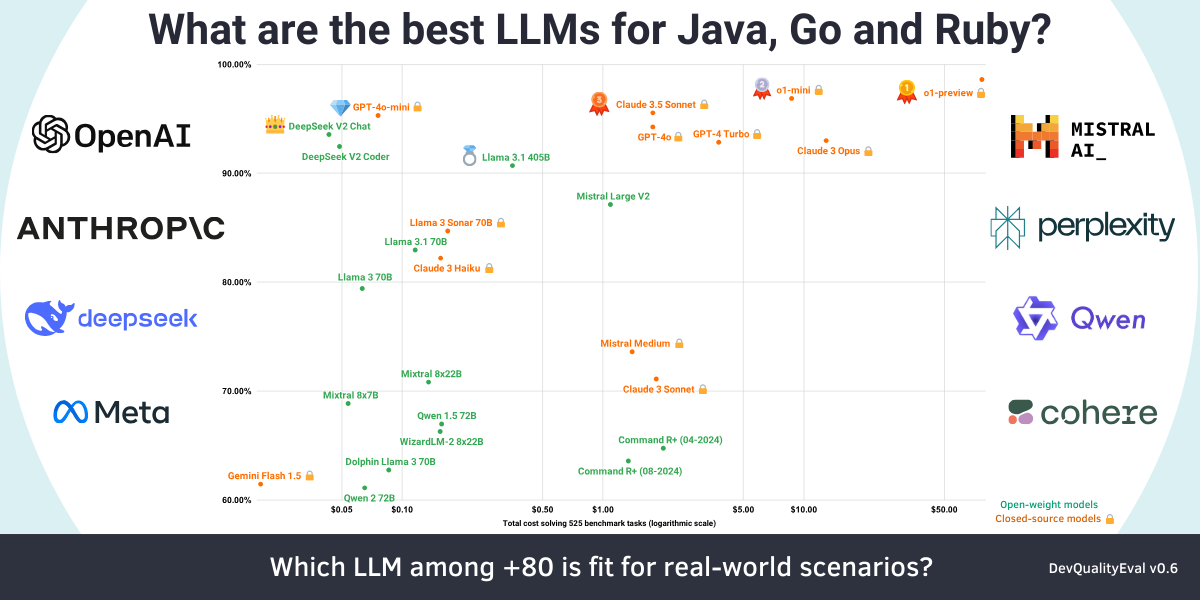

The latest results are discussed in a deep dive: [OpenAI's o1-preview is the king 👑 of code generation but is super slow and expensive](https://symflower.com/en/company/blog/2024/dev-quality-eval-v0.6-o1-preview-is-the-king-of-code-generation-but-is-super-slow-and-expensive/)


+

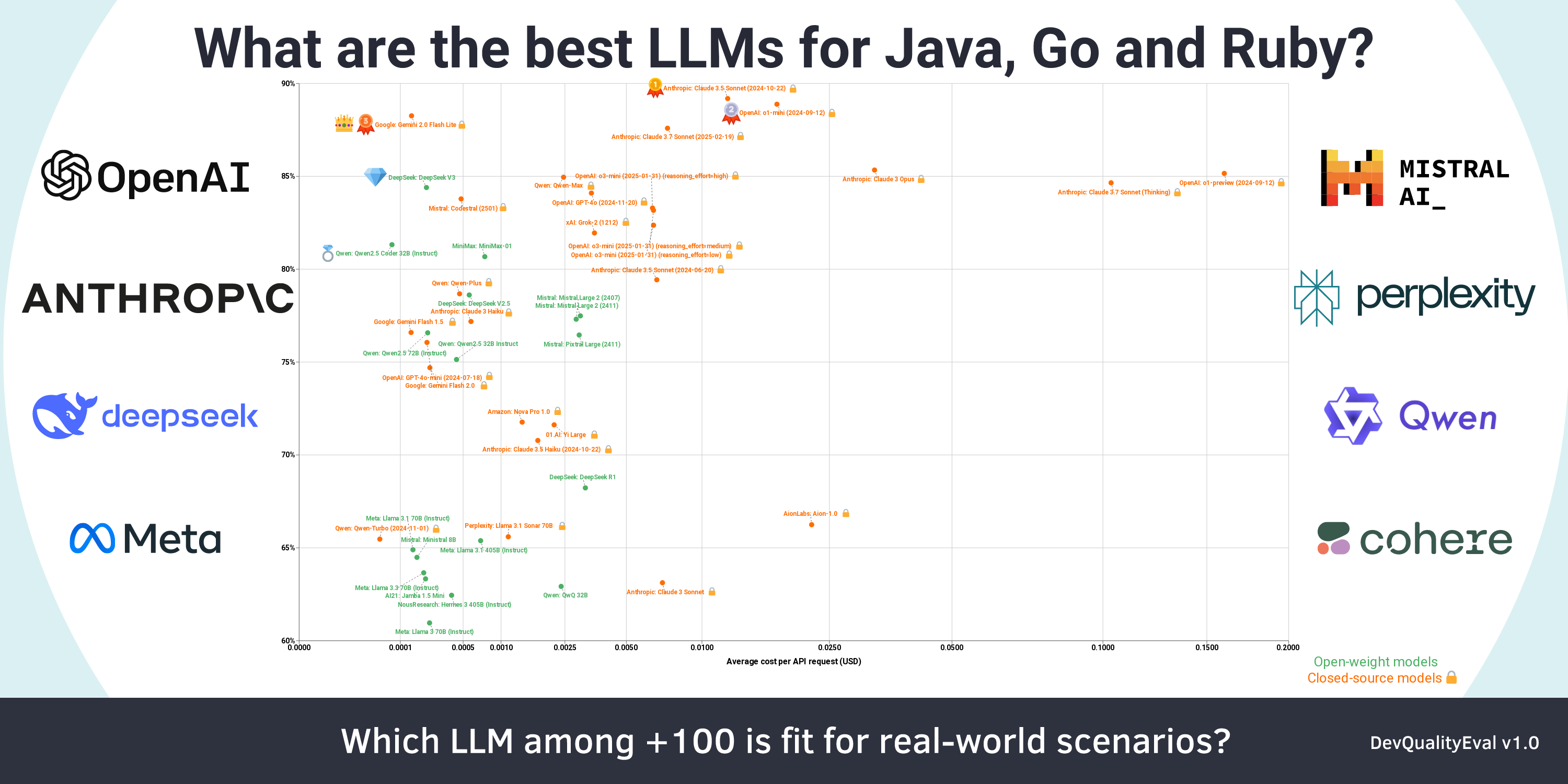

The latest results are discussed in a deep dive: [Anthropic's Claude 3.7 Sonnet is the new king 👑 of code generation (but only with help), and DeepSeek R1 disappoints (Deep dives from the DevQualityEval v1.0)](https://symflower.com/en/company/blog/2025/dev-quality-eval-v1.0-anthropic-s-claude-3.7-sonnet-is-the-king-with-help-and-deepseek-r1-disappoints/)


-


+


-

> [!TIP]


-

> **💰🍻 [Buy us 2 beverages](https://symflower.com/en/products/devqualityeval-leaderboard/) to support us in extending the DevQualityEval project** and gain access to the entire dataset of DevQualityEval logs and results from the latest benchmark run.


+

[](https://buy.stripe.com/5kA3g962hfP0dGMeUX)


-


+

> 💰🍻 With [this purchase](https://buy.stripe.com/5kA3g962hfP0dGMeUX) you are mainly supporting DevQualityEval, but you also receive access via your Google account to the detailed results of DevQualityEval v1.0. This includes access to the Google Sheet document with the leaderboard summary, graphs, and exported metrics.


+


+

Since all deep dives build upon each other, it is worth taking a look at previous dives:


+


+

- [OpenAI's o1-preview is the king 👑 of code generation but is super slow and expensive (Deep dives from the DevQualityEval v0.6)](https://symflower.com/en/company/blog/2024/dev-quality-eval-v0.6-o1-preview-is-the-king-of-code-generation-but-is-super-slow-and-expensive/)

+

- [DeepSeek v2 Coder and Claude 3.5 Sonnet are more cost-effective at code generation than GPT-4o! (Deep dives from the DevQualityEval v0.5.0)](https://symflower.com/en/company/blog/2024/dev-quality-eval-v0.5.0-deepseek-v2-coder-and-claude-3.5-sonnet-beat-gpt-4o-for-cost-effectiveness-in-code-generation/)

+

- [Is Llama-3 better than GPT-4 for generating tests? And other deep dives of the DevQualityEval v0.4.0](https://symflower.com/en/company/blog/2024/dev-quality-eval-v0.4.0-is-llama-3-better-than-gpt-4-for-generating-tests/)

+

- [Can LLMs test a Go function that does nothing?](https://symflower.com/en/company/blog/2024/can-ai-test-a-go-function-that-does-nothing/)
