+> [!NOTE]

+> Looking for the JavaScript/TypeScript version? Check out [Agents SDK JS/TS](https://github.com/openai/openai-agents-js).

+

### Core concepts:

1. [**Agents**](https://openai.github.io/openai-agents-python/agents): LLMs configured with instructions, tools, guardrails, and handoffs

2. [**Handoffs**](https://openai.github.io/openai-agents-python/handoffs/): A specialized tool call used by the Agents SDK for transferring control between agents

3. [**Guardrails**](https://openai.github.io/openai-agents-python/guardrails/): Configurable safety checks for input and output validation

-4. [**Tracing**](https://openai.github.io/openai-agents-python/tracing/): Built-in tracking of agent runs, allowing you to view, debug and optimize your workflows

+4. [**Sessions**](#sessions): Automatic conversation history management across agent runs

+5. [**Tracing**](https://openai.github.io/openai-agents-python/tracing/): Built-in tracking of agent runs, allowing you to view, debug and optimize your workflows

Explore the [examples](examples) directory to see the SDK in action, and read our [documentation](https://openai.github.io/openai-agents-python/) for more details.

@@ -17,14 +21,23 @@ Explore the [examples](examples) directory to see the SDK in action, and read ou

1. Set up your Python environment

-```

+- Option A: Using venv (traditional method)

+

+```bash

python -m venv env

-source env/bin/activate

+source env/bin/activate # On Windows: env\Scripts\activate

```

-2. Install Agents SDK

+- Option B: Using uv (recommended)

+```bash

+uv venv

+source .venv/bin/activate # On Windows: .venv\Scripts\activate

```

+

+2. Install Agents SDK

+

+```bash

pip install openai-agents

```

@@ -47,7 +60,7 @@ print(result.final_output)

(_If running this, ensure you set the `OPENAI_API_KEY` environment variable_)

-(_For Jupyter notebook users, see [hello_world_jupyter.py](examples/basic/hello_world_jupyter.py)_)

+(_For Jupyter notebook users, see [hello_world_jupyter.ipynb](examples/basic/hello_world_jupyter.ipynb)_)

## Handoffs example

@@ -144,6 +157,118 @@ The Agents SDK is designed to be highly flexible, allowing you to model a wide r

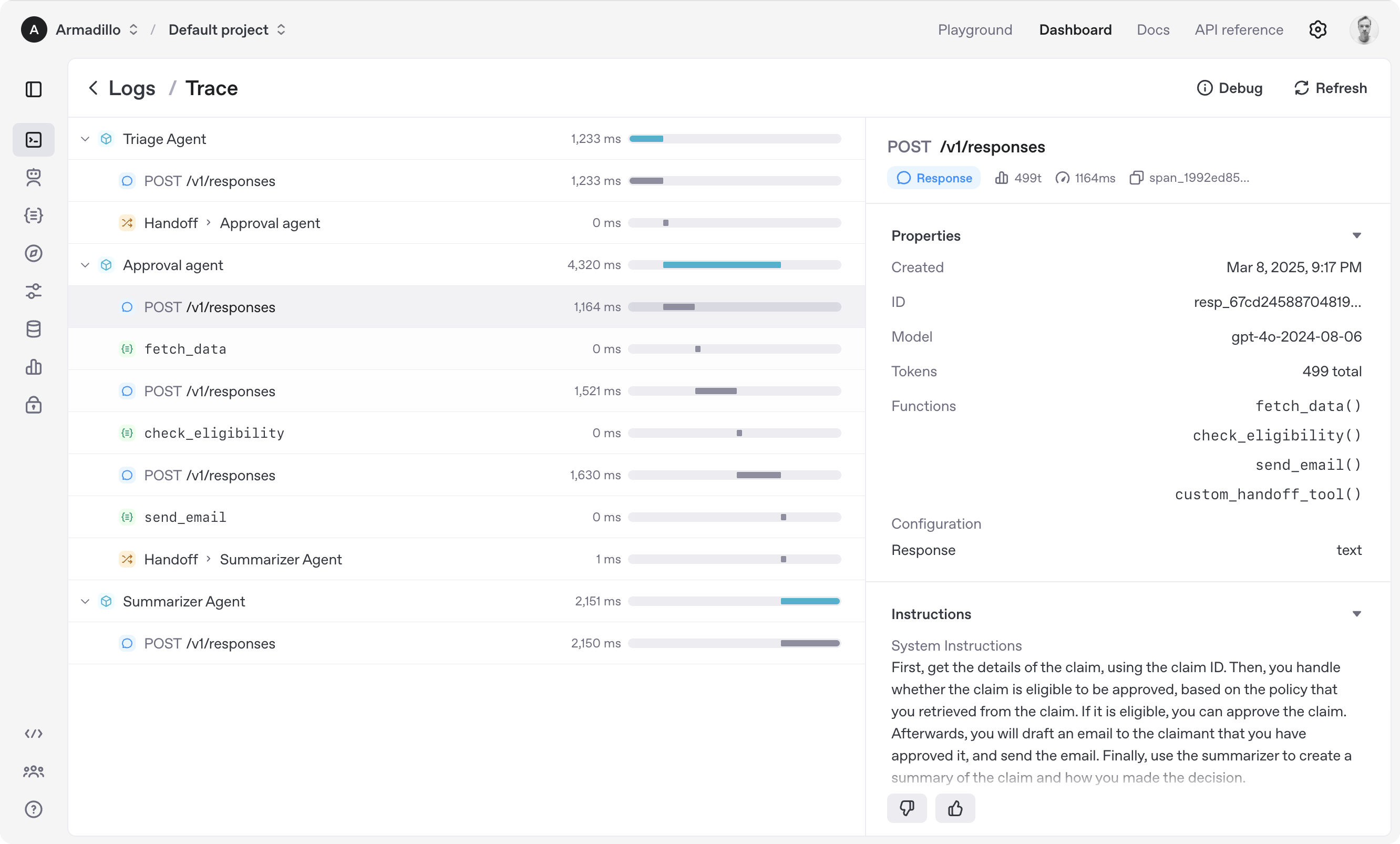

The Agents SDK automatically traces your agent runs, making it easy to track and debug the behavior of your agents. Tracing is extensible by design, supporting custom spans and a wide variety of external destinations, including [Logfire](https://logfire.pydantic.dev/docs/integrations/llms/openai/#openai-agents), [AgentOps](https://docs.agentops.ai/v1/integrations/agentssdk), [Braintrust](https://braintrust.dev/docs/guides/traces/integrations#openai-agents-sdk), [Scorecard](https://docs.scorecard.io/docs/documentation/features/tracing#openai-agents-sdk-integration), and [Keywords AI](https://docs.keywordsai.co/integration/development-frameworks/openai-agent). For more details about how to customize or disable tracing, see [Tracing](http://openai.github.io/openai-agents-python/tracing), which also includes a larger list of [external tracing processors](http://openai.github.io/openai-agents-python/tracing/#external-tracing-processors-list).

+## Long-running agents & human-in-the-loop

+

+You can use the Agents SDK [Temporal](https://temporal.io/) integration to run durable, long-running workflows, including human-in-the-loop tasks. Watch a demo of Temporal and the Agents SDK completing long-running tasks [in this video](https://www.youtube.com/watch?v=fFBZqzT4DD8), and [view the docs here](https://github.com/temporalio/sdk-python/tree/main/temporalio/contrib/openai_agents).

+

+## Sessions

+

+The Agents SDK provides built-in session memory to automatically maintain conversation history across multiple agent runs, eliminating the need to manually handle `.to_input_list()` between turns.

+

+### Quick start

+

+```python

+from agents import Agent, Runner, SQLiteSession

+

+# Create agent

+agent = Agent(

+ name="Assistant",

+ instructions="Reply very concisely.",

+)

+

+# Create a session instance

+session = SQLiteSession("conversation_123")

+

+# First turn

+result = await Runner.run(

+ agent,

+ "What city is the Golden Gate Bridge in?",

+ session=session

+)

+print(result.final_output) # "San Francisco"

+

+# Second turn - agent automatically remembers previous context

+result = await Runner.run(

+ agent,

+ "What state is it in?",

+ session=session

+)

+print(result.final_output) # "California"

+

+# Also works with synchronous runner

+result = Runner.run_sync(

+ agent,

+ "What's the population?",

+ session=session

+)

+print(result.final_output) # "Approximately 39 million"

+```
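Conceptually, a session takes over the bookkeeping you would otherwise do between turns. Before a run, the stored items are prepended to the new input. After the run, the new input and the items it produced are stored for the next turn. The standard-library sketch below models that loop and is a simplified illustration, not the SDK's implementation: `fake_model` and `run_turn` are hypothetical stand-ins for the LLM and the runner, and a plain list plays the role of the session's storage.

```python
def fake_model(messages: list[dict]) -> str:
    """Hypothetical stand-in for the LLM: reports how much context it received."""
    user_turns = sum(1 for m in messages if m["role"] == "user")
    return f"I can see {user_turns} user message(s)"


def run_turn(history: list[dict], user_text: str) -> str:
    """One session-backed turn (simplified model, not the SDK's code)."""
    new_input = [{"role": "user", "content": user_text}]
    # Before the turn: prepend the stored history to the new input.
    reply = fake_model(history + new_input)
    # After the turn: store the new input and the produced output for next time.
    history.extend(new_input + [{"role": "assistant", "content": reply}])
    return reply


history: list[dict] = []  # plays the role of the session's storage
print(run_turn(history, "What city is the Golden Gate Bridge in?"))  # I can see 1 user message(s)
print(run_turn(history, "What state is it in?"))  # I can see 2 user message(s)
print(len(history))  # 4
```

Without a session, the caller has to do this prepend-and-store step by hand on every turn.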

+

+### Session options

+

+- **No memory** (default): No session memory is used when the `session` parameter is omitted

+- **`session: Session = DatabaseSession(...)`**: Use a Session instance to manage conversation history

+

+```python

+from agents import Agent, Runner, SQLiteSession

+

+# Custom SQLite database file

+session = SQLiteSession("user_123", "conversations.db")

+agent = Agent(name="Assistant")

+

+# Different session IDs maintain separate conversation histories

+result1 = await Runner.run(

+ agent,

+ "Hello",

+ session=session

+)

+result2 = await Runner.run(

+ agent,

+ "Hello",

+ session=SQLiteSession("user_456", "conversations.db")

+)

+```
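Several sessions can share one database file because each stored item is keyed by its session ID. The sketch below shows that idea with the standard-library `sqlite3` module. The `ToySQLiteHistory` class, the `messages` table, and its columns are all hypothetical. They are not the schema `SQLiteSession` actually uses, and the methods are synchronous for brevity, whereas the `Session` protocol methods are `async`.

```python
import json
import sqlite3


class ToySQLiteHistory:
    """Hypothetical sketch: per-session history rows in one shared SQLite database."""

    def __init__(self, session_id: str, conn: sqlite3.Connection) -> None:
        self.session_id = session_id
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS messages ("
            " id INTEGER PRIMARY KEY AUTOINCREMENT,"
            " session_id TEXT NOT NULL,"
            " item TEXT NOT NULL)"
        )

    def add_items(self, items: list[dict]) -> None:
        # Every row is tagged with this session's ID.
        self.conn.executemany(
            "INSERT INTO messages (session_id, item) VALUES (?, ?)",
            [(self.session_id, json.dumps(item)) for item in items],
        )
        self.conn.commit()

    def get_items(self) -> list[dict]:
        # Only rows for this session ID are returned, oldest first.
        rows = self.conn.execute(
            "SELECT item FROM messages WHERE session_id = ? ORDER BY id",
            (self.session_id,),
        ).fetchall()
        return [json.loads(row[0]) for row in rows]


conn = sqlite3.connect(":memory:")  # stands in for a file such as "conversations.db"
alice = ToySQLiteHistory("user_123", conn)
bob = ToySQLiteHistory("user_456", conn)
alice.add_items([{"role": "user", "content": "Hello"}])
bob.add_items([{"role": "user", "content": "Hi there"}])
print(alice.get_items())  # [{'role': 'user', 'content': 'Hello'}]
print(len(bob.get_items()))  # 1
```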

+

+### Custom session implementations

+

+You can implement your own session memory by creating a class that follows the `Session` protocol:

+

+```python

+from typing import List

+

+from agents import Agent, Runner

+from agents.memory import Session  # protocol that MyCustomSession satisfies structurally

+

+class MyCustomSession:

+    """Custom session implementation following the Session protocol (in-memory example)."""

+

+    def __init__(self, session_id: str):

+        self.session_id = session_id

+        # Your initialization here; this example simply keeps items in a list

+        self._items: List[dict] = []

+

+    async def get_items(self, limit: int | None = None) -> List[dict]:

+        # Retrieve conversation history for the session (only the latest `limit` items if given)

+        if limit is None:

+            return list(self._items)

+        return self._items[-limit:] if limit > 0 else []

+

+    async def add_items(self, items: List[dict]) -> None:

+        # Store new items for the session

+        self._items.extend(items)

+

+    async def pop_item(self) -> dict | None:

+        # Remove and return the most recent item from the session

+        return self._items.pop() if self._items else None

+

+    async def clear_session(self) -> None:

+        # Clear all items for the session

+        self._items.clear()

+

+# Use your custom session

+agent = Agent(name="Assistant")

+result = await Runner.run(

+    agent,

+    "Hello",

+    session=MyCustomSession("my_session")

+)

+```

+

## Development (only needed if you need to edit the SDK/examples)

0. Ensure you have [`uv`](https://docs.astral.sh/uv/) installed.

@@ -160,10 +285,17 @@ make sync

2. (After making changes) lint/test

+```

+make check # run tests, linter and typechecker

+```

+

+Or to run them individually:

+

```

make tests # run tests

make mypy # run typechecker

make lint # run linter

+make format-check # run style checker

```

## Acknowledgements

@@ -171,6 +303,7 @@ make lint # run linter

We'd like to acknowledge the excellent work of the open-source community, especially:

- [Pydantic](https://docs.pydantic.dev/latest/) (data validation) and [PydanticAI](https://ai.pydantic.dev/) (advanced agent framework)

+- [LiteLLM](https://github.com/BerriAI/litellm) (unified interface for 100+ LLMs)

- [MkDocs](https://github.com/squidfunk/mkdocs-material)

- [Griffe](https://github.com/mkdocstrings/griffe)

- [uv](https://github.com/astral-sh/uv) and [ruff](https://github.com/astral-sh/ruff)

diff --git a/docs/agents.md b/docs/agents.md

index 39d4afd57..5dbd775a6 100644

--- a/docs/agents.md

+++ b/docs/agents.md

@@ -6,6 +6,7 @@ Agents are the core building block in your apps. An agent is a large language mo

The most common properties of an agent you'll configure are:

+- `name`: A required string that identifies your agent.

- `instructions`: also known as a developer message or system prompt.

- `model`: which LLM to use, and optional `model_settings` to configure model tuning parameters like temperature, top_p, etc.

- `tools`: Tools that the agent can use to achieve its tasks.

@@ -15,6 +16,7 @@ from agents import Agent, ModelSettings, function_tool

@function_tool

def get_weather(city: str) -> str:

+    """Returns weather info for the specified city."""

    return f"The weather in {city} is sunny"

agent = Agent(

@@ -32,6 +34,7 @@ Agents are generic on their `context` type. Context is a dependency-injection to

```python

@dataclass

class UserContext:

+    name: str

    uid: str

    is_pro_user: bool

@@ -112,7 +115,7 @@ Sometimes, you want to observe the lifecycle of an agent. For example, you may w

## Guardrails

-Guardrails allow you to run checks/validations on user input, in parallel to the agent running. For example, you could screen the user's input for relevance. Read more in the [guardrails](guardrails.md) documentation.

+Guardrails allow you to run checks/validations on user input in parallel to the agent running, and on the agent's output once it is produced. For example, you could screen the user's input and agent's output for relevance. Read more in the [guardrails](guardrails.md) documentation.
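The asyncio sketch below makes "in parallel" concrete. It starts a stand-in agent task, runs an input check while that task is in flight, and cancels the agent if the check trips. The output is then checked once it exists. Every name here (`fake_agent`, `check_input`, `check_output`, `TripwireTriggered`, `run_with_guardrails`) is hypothetical and used only for illustration. The SDK's real guardrail API is described in the guardrails documentation.

```python
import asyncio
import contextlib


class TripwireTriggered(Exception):
    """Hypothetical stand-in for the SDK's guardrail tripwire exceptions."""


async def fake_agent(user_input: str) -> str:
    # Hypothetical agent: pretends to call a model for 50 ms.
    await asyncio.sleep(0.05)
    return f"Here is the forecast for: {user_input}"


async def check_input(user_input: str) -> bool:
    # Hypothetical relevance check; returns True when the tripwire should fire.
    await asyncio.sleep(0.01)  # a cheap guardrail call that finishes first
    return "weather" not in user_input.lower()


async def check_output(output: str) -> bool:
    # Hypothetical output check; trips on an empty answer.
    return not output.strip()


async def run_with_guardrails(user_input: str) -> str:
    # Start the agent, then run the input check while the agent is in flight.
    agent_task = asyncio.create_task(fake_agent(user_input))
    if await check_input(user_input):
        # Stop the agent early instead of finishing an off-topic request.
        agent_task.cancel()
        with contextlib.suppress(asyncio.CancelledError):
            await agent_task
        raise TripwireTriggered("input guardrail")
    output = await agent_task
    # The output check can only run once the agent has produced its output.
    if await check_output(output):
        raise TripwireTriggered("output guardrail")
    return output


print(asyncio.run(run_with_guardrails("What's the weather in Paris?")))
```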

## Cloning/copying agents

@@ -140,8 +143,103 @@ Supplying a list of tools doesn't always mean the LLM will use a tool. You can f

3. `none`, which requires the LLM to _not_ use a tool.

4. Setting a specific string e.g. `my_tool`, which requires the LLM to use that specific tool.

+```python

+from agents import Agent, Runner, function_tool, ModelSettings

+

+@function_tool

+def get_weather(city: str) -> str:

+ """Returns weather info for the specified city."""

+ return f"The weather in {city} is sunny"

+

+agent = Agent(

+ name="Weather Agent",

+ instructions="Retrieve weather details.",

+ tools=[get_weather],

+ model_settings=ModelSettings(tool_choice="get_weather")

+)

+```

+

+## Tool use behavior

+

+The `tool_use_behavior` parameter in the `Agent` configuration controls how tool outputs are handled:

+- `"run_llm_again"`: The default. Tools are run, and the LLM processes the results to produce a final response.

+- `"stop_on_first_tool"`: The output of the first tool call is used as the final response, without further LLM processing.

+

+```python

+from agents import Agent, Runner, function_tool, ModelSettings

+

+@function_tool

+def get_weather(city: str) -> str:

+ """Returns weather info for the specified city."""

+ return f"The weather in {city} is sunny"

+

+agent = Agent(

+ name="Weather Agent",

+ instructions="Retrieve weather details.",

+ tools=[get_weather],

+ tool_use_behavior="stop_on_first_tool"

+)

+```

+

+- `StopAtTools(stop_at_tool_names=[...])`: Stops if any specified tool is called, using its output as the final response.

+```python

+from agents import Agent, Runner, function_tool

+from agents.agent import StopAtTools

+

+@function_tool

+def get_weather(city: str) -> str:

+ """Returns weather info for the specified city."""

+ return f"The weather in {city} is sunny"

+

+@function_tool

+def sum_numbers(a: int, b: int) -> int:

+ """Adds two numbers."""

+ return a + b

+

+agent = Agent(

+ name="Stop At Tools Agent",

+ instructions="Get weather or sum numbers.",

+ tools=[get_weather, sum_numbers],

+ tool_use_behavior=StopAtTools(stop_at_tool_names=["get_weather"])

+)

+```

+- `ToolsToFinalOutputFunction`: A custom function that processes tool results and decides whether to stop or continue with the LLM.

+

+```python

+from agents import Agent, Runner, function_tool, FunctionToolResult, RunContextWrapper

+from agents.agent import ToolsToFinalOutputResult

+from typing import List, Any

+

+@function_tool

+def get_weather(city: str) -> str:

+ """Returns weather info for the specified city."""

+ return f"The weather in {city} is sunny"

+

+def custom_tool_handler(

+    context: RunContextWrapper[Any],

+    tool_results: List[FunctionToolResult]

+) -> ToolsToFinalOutputResult:

+    """Processes tool results to decide final output."""

+    for result in tool_results:

+        if result.output and "sunny" in result.output:

+            return ToolsToFinalOutputResult(

+                is_final_output=True,

+                final_output=f"Final weather: {result.output}"

+            )

+    return ToolsToFinalOutputResult(

+        is_final_output=False,

+        final_output=None

+    )

+

+agent = Agent(

+ name="Weather Agent",

+ instructions="Retrieve weather details.",

+ tools=[get_weather],

+ tool_use_behavior=custom_tool_handler

+)

+```
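The four options differ only in what happens after tools run. The plain-Python model below does not import the SDK and uses hypothetical names (`ToolResult`, `StopAt`, `final_output_after_tools`). It shows the decision each option makes, given the tool calls from one turn. A return value of `None` means "send the results back to the LLM"; any other value is the final output. It is a simplified sketch of the documented behavior, not the SDK's code.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Union


@dataclass
class ToolResult:
    """Hypothetical stand-in for one tool call's name and output."""
    tool_name: str
    output: str


@dataclass
class StopAt:
    """Hypothetical stand-in for StopAtTools: stop when any listed tool ran."""
    tool_names: list[str]


Behavior = Union[str, StopAt, Callable[[list[ToolResult]], Optional[str]]]


def final_output_after_tools(behavior: Behavior, results: list[ToolResult]) -> Optional[str]:
    """Return the final output, or None to run the LLM again (simplified model)."""
    if behavior == "run_llm_again":
        return None
    if behavior == "stop_on_first_tool":
        return results[0].output
    if isinstance(behavior, StopAt):
        for result in results:
            if result.tool_name in behavior.tool_names:
                return result.output
        return None
    # A custom function makes the decision itself.
    return behavior(results)


def sunny_handler(results: list[ToolResult]) -> Optional[str]:
    # Mirrors the custom handler above: stop only on a sunny forecast.
    for result in results:
        if "sunny" in result.output:
            return f"Final weather: {result.output}"
    return None


results = [ToolResult("sum_numbers", "5"), ToolResult("get_weather", "The weather in Paris is sunny")]
print(final_output_after_tools("run_llm_again", results))  # None
print(final_output_after_tools("stop_on_first_tool", results))  # 5
print(final_output_after_tools(StopAt(["get_weather"]), results))  # The weather in Paris is sunny
print(final_output_after_tools(sunny_handler, results))  # Final weather: The weather in Paris is sunny
```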

+

!!! note

To prevent infinite loops, the framework automatically resets `tool_choice` to "auto" after a tool call. This behavior is configurable via [`agent.reset_tool_choice`][agents.agent.Agent.reset_tool_choice]. The infinite loop occurs because tool results are sent to the LLM, which then generates another tool call because of `tool_choice`, ad infinitum.

- If you want the Agent to completely stop after a tool call (rather than continuing with auto mode), you can set [`Agent.tool_use_behavior="stop_on_first_tool"`] which will directly use the tool output as the final response without further LLM processing.
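The loop the note describes can be reproduced with a toy model that always obeys a forced `tool_choice`. In the sketch below, `fake_llm` and `run` are hypothetical stand-ins, not SDK functions. The `max_turns` cap exists only so that the non-resetting case terminates.

```python
def fake_llm(tool_choice: str) -> str:
    """Hypothetical model: calls a tool when forced to, otherwise answers in text."""
    if tool_choice in ("required", "get_weather"):
        return "tool_call"
    return "text"


def run(tool_choice: str, reset_tool_choice: bool, max_turns: int = 10) -> int:
    """Return how many model turns happen before a text answer (or the turn cap)."""
    for turn in range(1, max_turns + 1):
        if fake_llm(tool_choice) == "text":
            return turn
        # A tool ran; its result goes back to the model for another turn.
        if reset_tool_choice:
            tool_choice = "auto"
    return max_turns


print(run("get_weather", reset_tool_choice=True))   # 2
print(run("get_weather", reset_tool_choice=False))  # 10 (hits the cap)
```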

diff --git a/docs/context.md b/docs/context.md

index 4176ec51f..6e54565e0 100644

--- a/docs/context.md

+++ b/docs/context.md

@@ -38,7 +38,8 @@ class UserInfo: # (1)!

@function_tool

async def fetch_user_age(wrapper: RunContextWrapper[UserInfo]) -> str: # (2)!

- return f"User {wrapper.context.name} is 47 years old"

+ """Fetch the age of the user. Call this function to get user's age information."""

+ return f"The user {wrapper.context.name} is 47 years old"

async def main():

user_info = UserInfo(name="John", uid=123)

diff --git a/docs/examples.md b/docs/examples.md

index 30d602827..ae40fa909 100644

--- a/docs/examples.md

+++ b/docs/examples.md

@@ -40,3 +40,6 @@ Check out a variety of sample implementations of the SDK in the examples section

- **[voice](https://github.com/openai/openai-agents-python/tree/main/examples/voice):**

See examples of voice agents, using our TTS and STT models.

+

+- **[realtime](https://github.com/openai/openai-agents-python/tree/main/examples/realtime):**

+ Examples showing how to build realtime experiences using the SDK.

diff --git a/docs/guardrails.md b/docs/guardrails.md

index 2f0be0f2a..8df904a4c 100644

--- a/docs/guardrails.md

+++ b/docs/guardrails.md

@@ -23,7 +23,7 @@ Input guardrails run in 3 steps:

Output guardrails run in 3 steps:

-1. First, the guardrail receives the same input passed to the agent.

+1. First, the guardrail receives the output produced by the agent.

2. Next, the guardrail function runs to produce a [`GuardrailFunctionOutput`][agents.guardrail.GuardrailFunctionOutput], which is then wrapped in an [`OutputGuardrailResult`][agents.guardrail.OutputGuardrailResult]

3. Finally, we check if [`.tripwire_triggered`][agents.guardrail.GuardrailFunctionOutput.tripwire_triggered] is true. If true, an [`OutputGuardrailTripwireTriggered`][agents.exceptions.OutputGuardrailTripwireTriggered] exception is raised, so you can appropriately respond to the user or handle the exception.
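The three steps can be modeled in plain Python. The sketch below uses hypothetical stand-ins (`ToyGuardrailOutput`, `ToyGuardrailResult`, `ToyTripwireTriggered`) that loosely mirror `GuardrailFunctionOutput`, `OutputGuardrailResult`, and `OutputGuardrailTripwireTriggered`, so it runs without the SDK. The real classes carry more fields, and the real guardrail function also receives the run context and the agent. The `no_math_homework` check is purely illustrative.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class ToyGuardrailOutput:
    """Stand-in for GuardrailFunctionOutput: extra info plus the tripwire flag."""
    output_info: Any
    tripwire_triggered: bool


@dataclass
class ToyGuardrailResult:
    """Stand-in for OutputGuardrailResult: wraps the guardrail function's output."""
    agent_output: Any
    output: ToyGuardrailOutput


class ToyTripwireTriggered(Exception):
    """Stand-in for OutputGuardrailTripwireTriggered."""

    def __init__(self, result: ToyGuardrailResult) -> None:
        super().__init__("Output guardrail tripwire triggered")
        self.result = result


def no_math_homework(agent_output: str) -> ToyGuardrailOutput:
    # Hypothetical check: flag answers that look like solved math homework.
    flagged = "x =" in agent_output
    return ToyGuardrailOutput(output_info={"flagged": flagged}, tripwire_triggered=flagged)


def run_output_guardrail(agent_output: str) -> ToyGuardrailResult:
    # Step 1: the guardrail receives the output produced by the agent.
    # Step 2: the guardrail function runs, and its output is wrapped in a result.
    result = ToyGuardrailResult(agent_output=agent_output, output=no_math_homework(agent_output))
    # Step 3: if the tripwire was triggered, raise so the caller can respond.
    if result.output.tripwire_triggered:
        raise ToyTripwireTriggered(result)
    return result


print(run_output_guardrail("The capital of France is Paris.").output.output_info)  # {'flagged': False}
```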

diff --git a/docs/handoffs.md b/docs/handoffs.md

index 0b868c4af..85707c6b3 100644

--- a/docs/handoffs.md

+++ b/docs/handoffs.md

@@ -36,6 +36,7 @@ The [`handoff()`][agents.handoffs.handoff] function lets you customize things.

- `on_handoff`: A callback function executed when the handoff is invoked. This is useful for things like kicking off some data fetching as soon as you know a handoff is being invoked. This function receives the agent context, and can optionally also receive LLM generated input. The input data is controlled by the `input_type` param.

- `input_type`: The type of input expected by the handoff (optional).

- `input_filter`: This lets you filter the input received by the next agent. See below for more.

+- `is_enabled`: Whether the handoff is enabled. This can be a boolean or a function that returns a boolean, allowing you to dynamically enable or disable the handoff at runtime.
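`is_enabled` may be either a boolean or a function, so whatever evaluates it has to handle both forms. The sketch below shows one way to resolve such a value at runtime. `resolve_is_enabled`, `pro_users_only`, and the plain-dict context are hypothetical. The SDK passes its own context objects and may call the function with additional arguments, so check the `handoff()` API reference for the exact callable signature.

```python
from typing import Callable, Union

# Hypothetical context: a plain dict instead of the SDK's RunContextWrapper.
Context = dict
IsEnabled = Union[bool, Callable[[Context], bool]]


def resolve_is_enabled(is_enabled: IsEnabled, context: Context) -> bool:
    """Evaluate a static flag, or call the function with this run's context."""
    if callable(is_enabled):
        return bool(is_enabled(context))
    return bool(is_enabled)


def pro_users_only(context: Context) -> bool:
    # Example rule: only offer this handoff to pro users.
    return context.get("is_pro_user", False)


handoff_flags = {"billing_agent": True, "priority_support_agent": pro_users_only}
for context in ({"is_pro_user": False}, {"is_pro_user": True}):
    available = [name for name, flag in handoff_flags.items() if resolve_is_enabled(flag, context)]
    print(available)
# ['billing_agent']
# ['billing_agent', 'priority_support_agent']
```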

```python

from agents import Agent, handoff, RunContextWrapper

diff --git a/docs/index.md b/docs/index.md

index 8aef6574e..f8eb7dfec 100644

--- a/docs/index.md

+++ b/docs/index.md

@@ -4,7 +4,8 @@ The [OpenAI Agents SDK](https://github.com/openai/openai-agents-python) enables

- **Agents**, which are LLMs equipped with instructions and tools

- **Handoffs**, which allow agents to delegate to other agents for specific tasks

-- **Guardrails**, which enable the inputs to agents to be validated

+- **Guardrails**, which enable validation of agent inputs and outputs

+- **Sessions**, which automatically maintain conversation history across agent runs

In combination with Python, these primitives are powerful enough to express complex relationships between tools and agents, and allow you to build real-world applications without a steep learning curve. In addition, the SDK comes with built-in **tracing** that lets you visualize and debug your agentic flows, as well as evaluate them and even fine-tune models for your application.

@@ -21,6 +22,7 @@ Here are the main features of the SDK:

- Python-first: Use built-in language features to orchestrate and chain agents, rather than needing to learn new abstractions.

- Handoffs: A powerful feature to coordinate and delegate between multiple agents.

- Guardrails: Run input validations and checks in parallel to your agents, breaking early if the checks fail.

+- Sessions: Automatic conversation history management across agent runs, eliminating manual state handling.

- Function tools: Turn any Python function into a tool, with automatic schema generation and Pydantic-powered validation.

- Tracing: Built-in tracing that lets you visualize, debug and monitor your workflows, as well as use the OpenAI suite of evaluation, fine-tuning and distillation tools.

diff --git a/docs/ja/agents.md b/docs/ja/agents.md

index 828b36355..e6d72075a 100644

--- a/docs/ja/agents.md

+++ b/docs/ja/agents.md

@@ -4,21 +4,23 @@ search:

---

# Agents

-Agents are the main building blocks of your apps. An agent is a large language model (LLM) configured with instructions and tools.

+Agents are the core building block in your apps. An agent is a large language model (LLM) configured with instructions and tools.

## Basic configuration

-The properties you'll most commonly configure on an agent are:

+The agent properties you'll commonly configure are:

-- `instructions`: also known as a developer message or system prompt.

-- `model`: which LLM to use, plus optional `model_settings` that specify model tuning parameters such as temperature and top_p.

-- `tools`: tools the agent can use to accomplish its tasks.

+- `name`: a required string that identifies the agent.

+- `instructions`: also known as a developer message or system prompt.

+- `model`: which LLM to use, with optional tuning parameters such as temperature and top_p set via `model_settings`.

+- `tools`: tools the agent can use to achieve its tasks.

```python

from agents import Agent, ModelSettings, function_tool

@function_tool

def get_weather(city: str) -> str:

+    """Returns weather info for the specified city."""

    return f"The weather in {city} is sunny"

agent = Agent(

@@ -31,11 +33,12 @@ agent = Agent(

## Context

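-Agents are generic over their `context` type. Context is a means of dependency injection: an object you pass to `Runner.run()`. It is passed to every agent, tool, handoff, etc., and holds the dependencies and state for the agent run. Any Python object can be passed as the context.

+Agents are generic on their `context` type. Context is a dependency-injection tool: it's an object you create and pass to `Runner.run()`, which is passed to every agent, tool, handoff, etc., and serves as a grab bag of dependencies and state for the agent run. You can provide any Python object as the context.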

```python

@dataclass

class UserContext:

+    name: str

    uid: str

    is_pro_user: bool

@@ -49,7 +52,7 @@ agent = Agent[UserContext](

## Output types

-By default, agents output plain text (i.e. `str`). If you want the agent to output a specific type, use the `output_type` parameter. A common choice is a [Pydantic](https://docs.pydantic.dev/) object, but any type that can be wrapped in a Pydantic [TypeAdapter](https://docs.pydantic.dev/latest/api/type_adapter/) is supported, e.g. dataclasses, lists, TypedDict, etc.

+By default, agents produce plain text (i.e. `str`) outputs. If you want the agent to produce a particular type of output, you can use the `output_type` parameter. A common choice is to use [Pydantic](https://docs.pydantic.dev/) objects, but we support any type that can be wrapped in a Pydantic [TypeAdapter](https://docs.pydantic.dev/latest/api/type_adapter/) (dataclasses, lists, TypedDict, etc.).

```python

from pydantic import BaseModel

@@ -70,11 +73,11 @@ agent = Agent(

!!! note

- When you pass an `output_type`, the model is instructed to use [structured outputs](https://platform.openai.com/docs/guides/structured-outputs) instead of regular plain-text responses.

+ Passing an `output_type` tells the model to use [structured outputs](https://platform.openai.com/docs/guides/structured-outputs) instead of regular plain-text responses.

## Handoffs

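-Handoffs are sub-agents that the agent can delegate to. If you pass a list of handoffs, the agent can delegate work to them as needed. This enables a powerful pattern for orchestrating modular agents that each specialize in a single task. Read more in the [handoffs](handoffs.md) documentation.

+Handoffs are sub-agents that the agent can delegate to. You provide a list of handoffs, and the agent can choose to delegate to them if relevant. This is a powerful pattern that allows orchestrating modular, specialized agents that excel at a single task. Read more in the [handoffs](handoffs.md) documentation.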

```python

from agents import Agent

@@ -93,9 +96,9 @@ triage_agent = Agent(

)

```

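-## Dynamic instructions

+## Dynamic instructions

-Usually you specify instructions when creating the agent, but you can also provide them dynamically via a function. The function receives the agent and context and must return the prompt. Both synchronous and `async` functions are supported.

+In most cases, you can provide instructions when you create the agent. However, you can also provide dynamic instructions via a function. The function receives the agent and context, and must return the prompt. Both regular and `async` functions are accepted.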

```python

def dynamic_instructions(

@@ -110,17 +113,17 @@ agent = Agent[UserContext](

)

```

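-## Lifecycle events (hooks)

+## Lifecycle events (hooks)

-Sometimes you may want to observe the lifecycle of an agent, for example to log events or to prefetch data when certain events occur. You can hook into the agent lifecycle with the `hooks` property. Subclass the [`AgentHooks`][agents.lifecycle.AgentHooks] class and override the methods you're interested in.

+Sometimes, you want to observe the lifecycle of an agent. For example, you may want to log events, or pre-fetch data when certain events occur. You can hook into the agent lifecycle with the `hooks` property. Subclass the [`AgentHooks`][agents.lifecycle.AgentHooks] class, and override the methods you're interested in.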

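## Guardrails

-Guardrails let you run checks and validations on user input in parallel with the agent's execution. For example, you could screen the user's input for relevance. Read more in the [guardrails](guardrails.md) documentation.

+Guardrails allow you to run checks/validations on user input in parallel to the agent running, and on the agent's output once it is produced. For example, you could screen the user's input and the agent's output for relevance. Read more in the [guardrails](guardrails.md) documentation.

-## Cloning agents

+## Cloning/copying agents

-Using the `clone()` method, you can duplicate an agent and optionally change any properties you like.

+By using the `clone()` method on an agent, you can duplicate the agent and optionally change any properties you like.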

```python

pirate_agent = Agent(

@@ -137,15 +140,109 @@ robot_agent = pirate_agent.clone(

## Forcing tool use

-Passing a list of tools doesn't always mean the LLM will use a tool. You can force tool use by setting [`ModelSettings.tool_choice`][agents.model_settings.ModelSettings.tool_choice]. Valid values are:

+Supplying a list of tools doesn't always mean the LLM will use a tool. You can force tool use by setting [`ModelSettings.tool_choice`][agents.model_settings.ModelSettings.tool_choice]. Valid values are:

-1. `auto`: the LLM decides whether or not to use a tool.

-2. `required`: requires the LLM to use a tool (but the tool is chosen automatically).

-3. `none`: requires the LLM to _not_ use a tool.

-4. A specific string (e.g. `my_tool`): requires the LLM to use that specific tool.

+1. `auto`: allows the LLM to decide whether or not to use a tool.

+2. `required`: requires the LLM to use a tool (but it can intelligently decide which tool).

+3. `none`: requires the LLM to _not_ use a tool.

+4. A specific string (e.g. `my_tool`): requires the LLM to use that specific tool.

-!!! note

+```python

+from agents import Agent, Runner, function_tool, ModelSettings

+

+@function_tool

+def get_weather(city: str) -> str:

+ """Returns weather info for the specified city."""

+ return f"The weather in {city} is sunny"

+

+agent = Agent(

+ name="Weather Agent",

+ instructions="Retrieve weather details.",

+ tools=[get_weather],

+ model_settings=ModelSettings(tool_choice="get_weather")

+)

+```

+

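+## Tool use behavior

+

+The `tool_use_behavior` parameter in the `Agent` configuration controls how tool outputs are handled:

+- `"run_llm_again"`: The default. Tools are run, and the LLM processes the results to produce a final response.

+- `"stop_on_first_tool"`: The output of the first tool call is used directly as the final response, without further LLM processing.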

+

+```python

+from agents import Agent, Runner, function_tool, ModelSettings

+

+@function_tool

+def get_weather(city: str) -> str:

+ """Returns weather info for the specified city."""

+ return f"The weather in {city} is sunny"

+

+agent = Agent(

+ name="Weather Agent",

+ instructions="Retrieve weather details.",

+ tools=[get_weather],

+ tool_use_behavior="stop_on_first_tool"

+)

+```

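- To prevent infinite loops, the framework automatically resets `tool_choice` to "auto" after a tool call. This behavior is configurable via [`agent.reset_tool_choice`][agents.agent.Agent.reset_tool_choice]. The infinite loop happens because tool results are sent to the LLM, which then generates another tool call because of `tool_choice`, and so on.

+- `StopAtTools(stop_at_tool_names=[...])`: Stops if any of the specified tools is called, using its output as the final response.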

+```python

+from agents import Agent, Runner, function_tool

+from agents.agent import StopAtTools

+

+@function_tool

+def get_weather(city: str) -> str:

+ """Returns weather info for the specified city."""

+ return f"The weather in {city} is sunny"

+

+@function_tool

+def sum_numbers(a: int, b: int) -> int:

+ """Adds two numbers."""

+ return a + b

+

+agent = Agent(

+ name="Stop At Tools Agent",

+ instructions="Get weather or sum numbers.",

+ tools=[get_weather, sum_numbers],

+ tool_use_behavior=StopAtTools(stop_at_tool_names=["get_weather"])

+)

+```

+- `ToolsToFinalOutputFunction`: A custom function that processes tool results and decides whether to stop or continue with the LLM.

+

+```python

+from agents import Agent, Runner, function_tool, FunctionToolResult, RunContextWrapper

+from agents.agent import ToolsToFinalOutputResult

+from typing import List, Any

+

+@function_tool

+def get_weather(city: str) -> str:

+ """Returns weather info for the specified city."""

+ return f"The weather in {city} is sunny"

+

+def custom_tool_handler(

+    context: RunContextWrapper[Any],

+    tool_results: List[FunctionToolResult]

+) -> ToolsToFinalOutputResult:

+    """Processes tool results to decide final output."""

+    for result in tool_results:

+        if result.output and "sunny" in result.output:

+            return ToolsToFinalOutputResult(

+                is_final_output=True,

+                final_output=f"Final weather: {result.output}"

+            )

+    return ToolsToFinalOutputResult(

+        is_final_output=False,

+        final_output=None

+    )

+

+agent = Agent(

+ name="Weather Agent",

+ instructions="Retrieve weather details.",

+ tools=[get_weather],

+ tool_use_behavior=custom_tool_handler

+)

+```

+

+!!! note

- If you want the agent to stop completely after a tool call (rather than continuing in auto mode), set [`Agent.tool_use_behavior="stop_on_first_tool"`]. This returns the tool output directly as the final response without further LLM processing.

\ No newline at end of file

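+ To prevent infinite loops, the framework automatically resets `tool_choice` to "auto" after a tool call. This behavior is configurable via [`agent.reset_tool_choice`][agents.agent.Agent.reset_tool_choice]. The infinite loop occurs because tool results are sent to the LLM, which then keeps generating another tool call because of `tool_choice`.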

\ No newline at end of file

diff --git a/docs/ja/config.md b/docs/ja/config.md

index bf76b9fb6..b1b81bfdf 100644

--- a/docs/ja/config.md

+++ b/docs/ja/config.md

@@ -6,7 +6,7 @@ search:

## API keys and clients

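-By default, the SDK looks for the `OPENAI_API_KEY` environment variable, used for LLM requests and tracing, as soon as it is imported. If you can't set that environment variable before your app starts, you can use the [set_default_openai_key()][agents.set_default_openai_key] function to set the key.

+By default, the SDK looks for the `OPENAI_API_KEY` environment variable for LLM requests and tracing as soon as it is imported. If you are unable to set that environment variable before your app starts, you can use the [set_default_openai_key()][agents.set_default_openai_key] function to set the key.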

```python

from agents import set_default_openai_key

@@ -14,7 +14,7 @@ from agents import set_default_openai_key

set_default_openai_key("sk-...")

```

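-You can also configure the OpenAI client to use. By default, the SDK creates an `AsyncOpenAI` instance using the API key from the environment variable or the default key set above. To change this, use the [set_default_openai_client()][agents.set_default_openai_client] function.

+Alternatively, you can also configure an OpenAI client to be used. By default, the SDK creates an `AsyncOpenAI` instance, using the API key from the environment variable or the default key set above. You can change this by using the [set_default_openai_client()][agents.set_default_openai_client] function.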

```python

from openai import AsyncOpenAI

@@ -24,7 +24,7 @@ custom_client = AsyncOpenAI(base_url="...", api_key="...")

set_default_openai_client(custom_client)

```

-You can also customize the OpenAI API that is used. By default, the OpenAI Responses API is used. To switch to the Chat Completions API, use the [set_default_openai_api()][agents.set_default_openai_api] function.

+Finally, you can also customize the OpenAI API that is used. By default, we use the OpenAI Responses API. You can override this to use the Chat Completions API by using the [set_default_openai_api()][agents.set_default_openai_api] function.

```python

from agents import set_default_openai_api

@@ -34,7 +34,7 @@ set_default_openai_api("chat_completions")

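## Tracing

-Tracing is enabled by default. It automatically uses the OpenAI API key described above (i.e. the environment variable or the default key you set). To set the API key used for tracing separately, use the [`set_tracing_export_api_key`][agents.set_tracing_export_api_key] function.

+Tracing is enabled by default. By default, it uses the OpenAI API key from the section above (i.e. the environment variable or the default key you set). You can specifically set the API key used for tracing by using the [`set_tracing_export_api_key`][agents.set_tracing_export_api_key] function.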

```python

from agents import set_tracing_export_api_key

@@ -42,7 +42,7 @@ from agents import set_tracing_export_api_key

set_tracing_export_api_key("sk-...")

```

-To disable tracing entirely, call the [`set_tracing_disabled()`][agents.set_tracing_disabled] function.

+You can also disable tracing entirely by using the [`set_tracing_disabled()`][agents.set_tracing_disabled] function.

```python

from agents import set_tracing_disabled

@@ -50,11 +50,11 @@ from agents import set_tracing_disabled

set_tracing_disabled(True)

```

-## Debug logging

+## Debug logging

- The SDK has two Python loggers without any handlers set. By default, warnings and errors are sent to `stdout`, but other logs are suppressed.

+The SDK has two Python loggers without any handlers set. By default, this means that warnings and errors are sent to `stdout`, while other logs are suppressed.

-To enable verbose logging, use the [`enable_verbose_stdout_logging()`][agents.enable_verbose_stdout_logging] function.

+To enable verbose logging, use the [`enable_verbose_stdout_logging()`][agents.enable_verbose_stdout_logging] function.

```python

from agents import enable_verbose_stdout_logging

@@ -62,7 +62,7 @@ from agents import enable_verbose_stdout_logging

enable_verbose_stdout_logging()

```

-If needed, you can also customize logging by adding handlers, filters, formatters, etc. See the [Python logging guide](https://docs.python.org/3/howto/logging.html) for details.

+Alternatively, you can customize the logs by adding handlers, filters, formatters, etc. You can read more in the [Python logging guide](https://docs.python.org/3/howto/logging.html).

```python

import logging

@@ -81,17 +81,17 @@ logger.setLevel(logging.WARNING)

logger.addHandler(logging.StreamHandler())

```

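-### Sensitive information in logs

+### Sensitive data in logs

-Certain logs may contain sensitive information (for example, user data). If you want to prevent this information from being logged, set the following environment variables.

+Certain logs may contain sensitive data (for example, user data). If you want to keep this data out of the logs, set the following environment variables.

-To disable logging of LLM inputs and outputs:

+To disable logging of LLM inputs and outputs: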

```bash

export OPENAI_AGENTS_DONT_LOG_MODEL_DATA=1

```

-To disable logging of tool inputs and outputs:

+To disable logging of tool inputs and outputs:

```bash

export OPENAI_AGENTS_DONT_LOG_TOOL_DATA=1

diff --git a/docs/ja/context.md b/docs/ja/context.md

index 72c0938cf..3b514dd7a 100644

--- a/docs/ja/context.md

+++ b/docs/ja/context.md

@@ -4,30 +4,30 @@ search:

---

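# Context management

-The word "context" has multiple meanings. Here we mainly discuss two kinds of context.

+Context is an overloaded term. There are two main classes of context you might care about:

-1. Context available locally to your code: data and dependencies you might need when tool functions run, during callbacks like `on_handoff`, in lifecycle hooks, etc.

-2. Context available to LLMs: the data the LLM sees when generating a response.

+1. Context available locally to your code: this is data and dependencies you might need when tool functions run, during callbacks like `on_handoff`, in lifecycle hooks, etc.

+2. Context available to LLMs: this is data the LLM sees when generating a response.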

## ローカルコンテキスト

-ローカルコンテキストは [`RunContextWrapper`][agents.run_context.RunContextWrapper] クラスと、その中の [`context`][agents.run_context.RunContextWrapper.context] プロパティで表現されます。仕組みは次のとおりです。

+これは [`RunContextWrapper`][agents.run_context.RunContextWrapper] クラスと、その中の [`context`][agents.run_context.RunContextWrapper.context] プロパティで表現されます。動作の概要は次のとおりです。

-1. 任意の Python オブジェクトを作成します。一般的なパターンとして dataclass や Pydantic オブジェクトを使用します。

-2. そのオブジェクトを各種 run メソッド(例: `Runner.run(..., **context=whatever** )`)に渡します。

-3. すべてのツール呼び出しやライフサイクルフックには、ラッパーオブジェクト `RunContextWrapper[T]` が渡されます。ここで `T` はコンテキストオブジェクトの型で、`wrapper.context` からアクセスできます。

+1. 任意の Python オブジェクトを作成します。一般的には dataclass や Pydantic オブジェクトを使います。

+2. そのオブジェクトを各種の実行メソッドに渡します(例: `Runner.run(..., **context=whatever**)`)。

+3. すべてのツール呼び出しやライフサイクルフックなどには、ラッパーオブジェクト `RunContextWrapper[T]` が渡されます。ここで `T` はコンテキストオブジェクトの型で、`wrapper.context` からアクセスできます。

-**最重要ポイント**: あるエージェントの実行において、エージェント・ツール関数・ライフサイクルフックなどはすべて同じ _型_ のコンテキストを使用しなければなりません。

+ **最重要** なポイント: 特定のエージェント実行において、すべてのエージェント、ツール関数、ライフサイクルなどは同じ型のコンテキストを使用しなければなりません。

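-You can use the context for things like:

+You can use the context for things like:

-- Data about the run (e.g. a username/uid or other information about the user)

-- Dependencies (e.g. logger objects, data fetchers, etc.)

+- Contextual data for your run (e.g. a username/uid or other information about the user)

+- Dependencies (e.g. logger objects, data fetchers, etc.)

- Helper functions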

-!!! danger "Note"

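+!!! danger "Note"

- The context object is not sent to the LLM. It is purely a local object that you can read from, write to, and call methods on.

+ The context object is **not** sent to the LLM. It is purely a local object that you can read from, write to, and call methods on.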

```python

import asyncio

@@ -42,7 +42,8 @@ class UserInfo: # (1)!

@function_tool

async def fetch_user_age(wrapper: RunContextWrapper[UserInfo]) -> str: # (2)!

- return f"User {wrapper.context.name} is 47 years old"

+ """Fetch the age of the user. Call this function to get user's age information."""

+ return f"The user {wrapper.context.name} is 47 years old"

async def main():

user_info = UserInfo(name="John", uid=123)

@@ -65,17 +66,17 @@ if __name__ == "__main__":

asyncio.run(main())

```

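-1. This is the context object. We've used a dataclass here, but you can use any type.

-2. This is a tool. It takes a `RunContextWrapper[UserInfo]`, and the implementation reads from the context.

-3. We mark the agent with the generic `UserInfo` so that the type checker can catch errors (for example, if we tried to pass a tool that took a different context type).

-4. The context is passed to the `run` function.

-5. The agent correctly calls the tool and gets the age.

+1. This is the context object. We've used a dataclass here, but you can use any type.

+2. This is a tool. You can see it takes a `RunContextWrapper[UserInfo]`. The tool implementation reads from the context.

+3. We mark the agent with the generic `UserInfo`, so that the type checker can catch errors (for example, if we tried to pass a tool that took a different context type).

+4. The context is passed to the `run` function.

+5. The agent correctly calls the tool and gets the age.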
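The local-context pattern can be exercised without the SDK. In this minimal sketch, `ContextWrapper` is a hypothetical stand-in for `RunContextWrapper` that is assumed to expose only a `context` attribute (the real class may carry more), and the `lookups` counter is an illustrative field that is not part of the original example. It shows a tool reading from, and writing to, a purely local object.

```python
from dataclasses import dataclass
from typing import Generic, TypeVar

TContext = TypeVar("TContext")


@dataclass
class ContextWrapper(Generic[TContext]):
    """Hypothetical stand-in for agents.RunContextWrapper (only the `context` field)."""

    context: TContext


@dataclass
class UserInfo:
    name: str
    uid: int
    lookups: int = 0  # illustrative local-only state; never sent to the LLM


def fetch_user_age(wrapper: ContextWrapper[UserInfo]) -> str:
    # Tools may mutate the shared context object as well as read from it.
    wrapper.context.lookups += 1
    return f"The user {wrapper.context.name} is 47 years old"


wrapper = ContextWrapper(UserInfo(name="John", uid=123))
print(fetch_user_age(wrapper))  # The user John is 47 years old
```

Because every tool in a run receives the same wrapper, a change made by one call (here, the `lookups` increment) is visible to the next.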

## Agent/LLM context

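-When an LLM is called, the only data it can see is what is in the conversation history. This means that if you want to make some new data available to the LLM, you must provide it by including it in that history. There are a few ways to do this:

+When an LLM is called, the only data it can see is from the conversation history. This means that if you want to make some new data available to the LLM, you must do it in a way that makes it available in that history. There are a few ways to do this: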

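-1. Add it to the Agent `instructions`, also known as the "system prompt" or "developer message". System prompts can be static strings, or dynamic functions that receive the context and return a string. This is commonly used for information that is always useful (for example, the user's name or the current date).

-2. Add it to the `input` when calling `Runner.run`. This is similar to the `instructions` approach, but lets you place messages lower in the [chain of command](https://cdn.openai.com/spec/model-spec-2024-05-08.html#follow-the-chain-of-command).

-3. Expose it via function tools. This is suited to on-demand context: the LLM calls the tool to fetch the data when it needs it.

-4. Use retrieval or web search. These are special tools that can fetch relevant data from files or databases (retrieval), or from the web (web search). This is useful for "grounding" the response in relevant contextual data.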

\ No newline at end of file

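+1. Add it to the agent `instructions`. This is also known as a "system prompt" or "developer message". System prompts can be static strings, or dynamic functions that receive the context and return a string. This is a good fit for information that is always useful (for example, the user's name or the current date).

+2. Add it to the `input` when calling `Runner.run`. This is similar to the `instructions` approach, but allows you to have messages that are lower in the [chain of command](https://cdn.openai.com/spec/model-spec-2024-05-08.html#follow-the-chain-of-command).

+3. Expose it via function tools. This is useful for _on-demand_ context: the LLM decides when it needs some data, and can call the tool to fetch that data.

+4. Use retrieval or web search. These are special tools that can fetch relevant data from files or databases (retrieval), or from the web (web search). This is useful for "grounding" the response in relevant contextual data.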

\ No newline at end of file
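The first option above (dynamic system prompts) can be sketched in plain Python: a function that builds the prompt from local context. In the SDK such a function receives the run context wrapper and the agent; here `ContextWrapper`, `agent_name`, and `today` are hypothetical simplifications so the example runs without the SDK and stays deterministic.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class UserContext:
    name: str


@dataclass
class ContextWrapper:
    """Hypothetical stand-in for the SDK's RunContextWrapper."""

    context: UserContext


def dynamic_instructions(wrapper: ContextWrapper, agent_name: str, today: date) -> str:
    # Always-useful facts (user name, current date) go into the system prompt.
    return (
        f"You are {agent_name}. The user's name is {wrapper.context.name}. "
        f"Today's date is {today.isoformat()}."
    )


prompt = dynamic_instructions(ContextWrapper(UserContext("John")), "Assistant", date(2025, 1, 2))
print(prompt)
```

In real use `today` would come from `date.today()`; it is a parameter here only so the output is reproducible.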

diff --git a/docs/ja/examples.md b/docs/ja/examples.md

index 00f634ec1..6bb457871 100644

--- a/docs/ja/examples.md

+++ b/docs/ja/examples.md

@@ -4,42 +4,45 @@ search:

---

# Examples

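-Check out a variety of sample implementations of the SDK in the [examples section](https://github.com/openai/openai-agents-python/tree/main/examples) of the repo. The examples are organized into several categories that demonstrate different patterns and capabilities.

-

+Check out a variety of sample implementations of the SDK in the examples section of the [repo](https://github.com/openai/openai-agents-python/tree/main/examples). The examples are organized into several categories that demonstrate different patterns and capabilities.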

## Categories

-- **[agent_patterns](https://github.com/openai/openai-agents-python/tree/main/examples/agent_patterns):**

- Examples in this category illustrate common agent design patterns.

+- **[Agent patterns](https://github.com/openai/openai-agents-python/tree/main/examples/agent_patterns):**

+ Examples in this category illustrate common agent design patterns, such as:

+

+ - Deterministic workflows

+ - Agents as tools

+ - Parallel agent execution

- - Deterministic workflows

- - Agents as tools

- - Parallel agent execution

+- **[Basic](https://github.com/openai/openai-agents-python/tree/main/examples/basic):**

+ These examples showcase foundational capabilities of the SDK, such as:

-- **[basic](https://github.com/openai/openai-agents-python/tree/main/examples/basic):**

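- These examples showcase foundational capabilities of the SDK.

+ - Dynamic system prompts

+ - Streaming outputs

+ - Lifecycle events

- - Dynamic system prompts

- - Streaming outputs

- - Lifecycle events

+- **[Tool examples](https://github.com/openai/openai-agents-python/tree/main/examples/tools):**

+ Learn how to implement OpenAI hosted tools such as web search and file search,

+ and integrate them into your agents.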

-- **[tool examples](https://github.com/openai/openai-agents-python/tree/main/examples/tools):**

- Learn how to implement OpenAI hosted tools such as web search and file search, and integrate them into your agents.

+- **[Model providers](https://github.com/openai/openai-agents-python/tree/main/examples/model_providers):**

+ Explore how to use non-OpenAI models with the SDK.

-- **[model providers](https://github.com/openai/openai-agents-python/tree/main/examples/model_providers):**

- Explore how to use non-OpenAI models with the SDK.

+- **[Handoffs](https://github.com/openai/openai-agents-python/tree/main/examples/handoffs):**

+ See practical examples of agent handoffs.

-- **[handoffs](https://github.com/openai/openai-agents-python/tree/main/examples/handoffs):**

- Examples that demonstrate agent handoffs in practice.

+- **[MCP](https://github.com/openai/openai-agents-python/tree/main/examples/mcp):**

+ Learn how to build agents with MCP.

-- **[mcp](https://github.com/openai/openai-agents-python/tree/main/examples/mcp):**

- Learn how to build agents using MCP.

+- **[Customer service](https://github.com/openai/openai-agents-python/tree/main/examples/customer_service)** and **[research bot](https://github.com/openai/openai-agents-python/tree/main/examples/research_bot):**

+ Two more built-out examples that illustrate real-world applications:

-- **[customer_service](https://github.com/openai/openai-agents-python/tree/main/examples/customer_service)** and **[research_bot](https://github.com/openai/openai-agents-python/tree/main/examples/research_bot):**

- Two extended examples that illustrate more practical use cases.

+ - **customer_service**: Example customer service system for an airline.

+ - **research_bot**: Simple deep research clone.

- - **customer_service**: Example customer service system for an airline

- - **research_bot**: Simple deep research clone

+- **[Voice](https://github.com/openai/openai-agents-python/tree/main/examples/voice):**

+ See examples of voice agents, using our TTS and STT models.

-- **[voice](https://github.com/openai/openai-agents-python/tree/main/examples/voice):**

- See examples of voice agents using TTS and STT models.

\ No newline at end of file

+- **[Realtime](https://github.com/openai/openai-agents-python/tree/main/examples/realtime):**

+ Examples showing how to build realtime experiences using the SDK.

\ No newline at end of file

diff --git a/docs/ja/guardrails.md b/docs/ja/guardrails.md

index e7b02a6ed..e82b7910a 100644

--- a/docs/ja/guardrails.md

+++ b/docs/ja/guardrails.md

@@ -4,44 +4,44 @@ search:

---

# Guardrails

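-Guardrails run _in parallel_ to your agents, checking and validating user input. For example, imagine you have an agent that uses a very smart (and hence slow/expensive) model to help with customer requests. You wouldn't want malicious users to ask the model to help them with their math homework. So, you can run a guardrail with a fast/cheap model. If the guardrail detects malicious usage, it can immediately raise an error, which stops the expensive model from running and saves you time and money.

+Guardrails run _in parallel_ to your agents, enabling you to do checks and validations of user input. For example, imagine you have an agent that uses a very smart (and hence slow/expensive) model to help with customer requests. You wouldn't want malicious users to ask the model to help them with their math homework. So, you can run a guardrail with a fast/cheap model. If the guardrail detects malicious usage, it can immediately raise an error, which stops the expensive model from running and saves you time and money.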

There are two kinds of guardrails:

-1. Input guardrails run on the initial user input

-2. Output guardrails run on the final agent output

+1. Input guardrails run on the initial user input

+2. Output guardrails run on the final agent output

-## Input guardrails

+## Input guardrails

-Input guardrails run in 3 steps:

+Input guardrails run in 3 steps:

-1. First, the guardrail receives the same input passed to the agent.

-2. Next, the guardrail function runs to produce a [`GuardrailFunctionOutput`][agents.guardrail.GuardrailFunctionOutput], which is then wrapped in an [`InputGuardrailResult`][agents.guardrail.InputGuardrailResult].

-3. Finally, we check if [`.tripwire_triggered`][agents.guardrail.GuardrailFunctionOutput.tripwire_triggered] is true. If true, an [`InputGuardrailTripwireTriggered`][agents.exceptions.InputGuardrailTripwireTriggered] exception is raised, so you can appropriately respond to the user or handle the exception.

+1. First, the guardrail receives the same input passed to the agent.

+2. Next, the guardrail function runs to produce a [`GuardrailFunctionOutput`][agents.guardrail.GuardrailFunctionOutput], which is then wrapped in an [`InputGuardrailResult`][agents.guardrail.InputGuardrailResult].

+3. Finally, we check if [`.tripwire_triggered`][agents.guardrail.GuardrailFunctionOutput.tripwire_triggered] is true. If true, an [`InputGuardrailTripwireTriggered`][agents.exceptions.InputGuardrailTripwireTriggered] exception is raised, so you can appropriately respond to the user or handle the exception.

!!! Note

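- Input guardrails are intended to run on user input, so an agent's guardrails only run if the agent is the *first* agent. You might wonder why the `guardrails` property is on the agent instead of being passed to `Runner.run`. It's because guardrails tend to be closely tied to the actual agent: you'd run different guardrails for different agents, so colocating the code improves readability.

+ Input guardrails are intended to run on user input, so an agent's guardrails only run if the agent is the *first* agent. You might wonder why the `guardrails` property is on the agent instead of being passed to `Runner.run`. It's because guardrails tend to be related to the actual agent: you'd typically run different guardrails for different agents, so colocating the code is useful for readability.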
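The three input-guardrail steps can be approximated with plain `asyncio`. This is a minimal sketch, not the SDK's implementation: `GuardrailFunctionOutput` and `InputGuardrailTripwireTriggered` are local stand-ins named after the documented classes, a keyword check replaces the fast guardrail model, and `expensive_agent` is a hypothetical placeholder for the slow model.

```python
import asyncio
from dataclasses import dataclass
from typing import Any


@dataclass
class GuardrailFunctionOutput:
    """Local stand-in with the two documented fields."""

    output_info: Any
    tripwire_triggered: bool


class InputGuardrailTripwireTriggered(Exception):
    """Local stand-in for the SDK exception of the same name."""


async def math_homework_guardrail(user_input: str) -> GuardrailFunctionOutput:
    # Step 2: a cheap check; a keyword heuristic replaces the fast guardrail model.
    is_homework = "solve for x" in user_input.lower()
    return GuardrailFunctionOutput(
        output_info={"is_math_homework": is_homework},
        tripwire_triggered=is_homework,
    )


async def expensive_agent(user_input: str) -> str:
    # Hypothetical placeholder for the slow, expensive model.
    await asyncio.sleep(0.05)
    return f"Answer to: {user_input}"


async def run_with_input_guardrail(user_input: str) -> str:
    # Step 1: the guardrail and the agent receive the same input and run concurrently.
    agent_task = asyncio.create_task(expensive_agent(user_input))
    result = await math_homework_guardrail(user_input)
    # Step 3: if the tripwire fired, stop the expensive work and raise.
    if result.tripwire_triggered:
        agent_task.cancel()
        raise InputGuardrailTripwireTriggered(result.output_info)
    return await agent_task


print(asyncio.run(run_with_input_guardrail("What is the capital of France?")))
```

Cancelling the still-running agent task as soon as the tripwire fires is what saves the cost of the expensive model in this sketch.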

-## Output guardrails

+## Output guardrails

-Output guardrails run in 3 steps:

+Output guardrails run in 3 steps:

-1. First, the guardrail receives the same input passed to the agent.

-2. Next, the guardrail function runs to produce a [`GuardrailFunctionOutput`][agents.guardrail.GuardrailFunctionOutput], which is then wrapped in an [`OutputGuardrailResult`][agents.guardrail.OutputGuardrailResult].

-3. Finally, we check if [`.tripwire_triggered`][agents.guardrail.GuardrailFunctionOutput.tripwire_triggered] is true. If true, an [`OutputGuardrailTripwireTriggered`][agents.exceptions.OutputGuardrailTripwireTriggered] exception is raised, so you can appropriately respond to the user or handle the exception.

+1. First, the guardrail receives the output produced by the agent.

+2. Next, the guardrail function runs to produce a [`GuardrailFunctionOutput`][agents.guardrail.GuardrailFunctionOutput], which is then wrapped in an [`OutputGuardrailResult`][agents.guardrail.OutputGuardrailResult].

+3. Finally, we check if [`.tripwire_triggered`][agents.guardrail.GuardrailFunctionOutput.tripwire_triggered] is true. If true, an [`OutputGuardrailTripwireTriggered`][agents.exceptions.OutputGuardrailTripwireTriggered] exception is raised, so you can appropriately respond to the user or handle the exception.

!!! Note

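- Output guardrails are intended to run on the final agent output, so an agent's guardrails only run if the agent is the *last* agent. As with input guardrails, guardrails are closely tied to the actual agent, so colocating the code improves readability.

+ Output guardrails are intended to run on the final agent output, so an agent's guardrails only run if the agent is the *last* agent. Similar to input guardrails, we do this because guardrails tend to be related to the actual agent: you'd typically run different guardrails for different agents, so colocating the code is useful for readability.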
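Output guardrails differ mainly in what they receive: the agent's output rather than its input. A minimal sketch under the same assumptions as any SDK-free illustration: the classes are local stand-ins named after the documented ones, `MessageOutput` is a hypothetical output type, and a digit-plus-`=` heuristic stands in for a guardrail model.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class MessageOutput:
    """Hypothetical agent output type."""

    response: str


@dataclass
class GuardrailFunctionOutput:
    """Local stand-in with the two documented fields."""

    output_info: Any
    tripwire_triggered: bool


class OutputGuardrailTripwireTriggered(Exception):
    """Local stand-in for the SDK exception of the same name."""


def math_output_guardrail(output: MessageOutput) -> GuardrailFunctionOutput:
    # Step 1: the guardrail receives the agent's output, not the user input.
    text = output.response
    contains_math = "=" in text and any(ch.isdigit() for ch in text)
    # Step 2: produce the guardrail result (a heuristic instead of a guardrail model).
    return GuardrailFunctionOutput(
        output_info={"contains_math": contains_math},
        tripwire_triggered=contains_math,
    )


def finalize(output: MessageOutput) -> MessageOutput:
    result = math_output_guardrail(output)
    # Step 3: a triggered tripwire halts the run instead of returning the output.
    if result.tripwire_triggered:
        raise OutputGuardrailTripwireTriggered(result.output_info)
    return output
```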

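-## Tripwires

+## Tripwires

-If the input or output fails the guardrail, the guardrail can signal this with a tripwire. As soon as we see a guardrail that has triggered its tripwire, we immediately raise a `{Input,Output}GuardrailTripwireTriggered` exception and halt the agent execution.

+If the input or output fails the guardrail, the guardrail can signal this with a tripwire. As soon as we see a guardrail that has triggered its tripwire, we immediately raise a `{Input,Output}GuardrailTripwireTriggered` exception and halt the agent execution.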

## Implementing a guardrail

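-You need to provide a function that receives input and returns a [`GuardrailFunctionOutput`][agents.guardrail.GuardrailFunctionOutput]. In this example, we do this by running an agent under the hood.

+You need to provide a function that receives input and returns a [`GuardrailFunctionOutput`][agents.guardrail.GuardrailFunctionOutput]. In this example, we'll do this by running an agent under the hood.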

```python

from pydantic import BaseModel

@@ -94,12 +94,12 @@ async def main():

print("Math homework guardrail tripped")

```

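-1. We'll use this agent in our guardrail function.

-2. This is the guardrail function that receives the agent's input/context and returns the result.

-3. We can include extra information in the guardrail result.

-4. This is the actual agent that defines the workflow.

+1. We'll use this agent in our guardrail function.

+2. This is the guardrail function that receives the agent's input/context and returns the result.

+3. We can include extra information in the guardrail result.

+4. This is the actual agent that defines the workflow.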

-Output guardrails are similar.

+Output guardrails are similar.

```python

from pydantic import BaseModel

@@ -152,7 +152,7 @@ async def main():

print("Math output guardrail tripped")

```

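-1. This is the actual agent's output type.

-2. This is the guardrail's output type.

-3. This is the guardrail function that receives the agent's output and returns the result.

+1. This is the actual agent's output type.

+2. This is the guardrail's output type.

+3. This is the guardrail function that receives the agent's output and returns the result.

4. This is the actual agent that defines the workflow.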

\ No newline at end of file

diff --git a/docs/ja/handoffs.md b/docs/ja/handoffs.md

index c0e99556e..278405757 100644

--- a/docs/ja/handoffs.md

+++ b/docs/ja/handoffs.md

@@ -4,19 +4,19 @@ search:

---

# Handoffs

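-Handoffs let an agent delegate tasks to another agent. This is particularly useful in scenarios where different agents specialize in distinct areas. For example, a customer support app might have agents that each handle tasks like order status, refunds, FAQs, etc.

+Handoffs allow an agent to delegate tasks to another agent. This is particularly useful in scenarios where different agents specialize in distinct areas. For example, a customer support app might have agents that each specifically handle tasks like order status, refunds, FAQs, etc.

-Handoffs are seen by the LLM as tools. So if there's a handoff to an agent named `Refund Agent`, the tool would be called `transfer_to_refund_agent`.

+Handoffs are represented as tools to the LLM. So if there's a handoff to an agent named `Refund Agent`, the tool would be called `transfer_to_refund_agent`.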
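The mapping from agent name to tool name can be illustrated with a small helper. This is a hypothetical approximation of the SDK's default naming (the `default_handoff_tool_name` function and its exact normalization rules are assumptions): prefix the name with `transfer_to_`, collapse spaces and punctuation into underscores, and lowercase the result.

```python
import re


def default_handoff_tool_name(agent_name: str) -> str:
    """Hypothetical approximation of the SDK's default handoff tool name."""
    raw = f"transfer_to_{agent_name}"
    # Collapse runs of spaces/punctuation into single underscores, then lowercase.
    return re.sub(r"[^0-9A-Za-z]+", "_", raw).strip("_").lower()


print(default_handoff_tool_name("Refund Agent"))  # transfer_to_refund_agent
```

If you need a specific name rather than the derived one, the `tool_name_override` option of `handoff()` exists for that purpose.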

## Creating a handoff

-All agents have a [`handoffs`][agents.agent.Agent.handoffs] param, which can take either an `Agent` directly or a `Handoff` object that customizes the handoff.

+All agents have a [`handoffs`][agents.agent.Agent.handoffs] param, which can either take an `Agent` directly, or a `Handoff` object that customizes the handoff.

-You can create a handoff using the [`handoff()`][agents.handoffs.handoff] function provided by the Agents SDK. This function lets you specify the agent to hand off to, along with optional overrides and input filters.

+You can create a handoff using the [`handoff()`][agents.handoffs.handoff] function provided by the Agents SDK. This function lets you specify the agent to hand off to, along with optional overrides and input filters.

### Basic usage

-Here's an example of creating a simple handoff:

+Here's how you can create a simple handoff:

```python

from agents import Agent, handoff

@@ -28,18 +28,19 @@ refund_agent = Agent(name="Refund agent")

triage_agent = Agent(name="Triage agent", handoffs=[billing_agent, handoff(refund_agent)])

```

-1. You can use the agent directly (as with `billing_agent`), or you can use the `handoff()` function.

+1. You can use the agent directly (as with `billing_agent`), or you can use the `handoff()` function.

### Customizing handoffs via the `handoff()` function

-The [`handoff()`][agents.handoffs.handoff] function lets you customize the handoff in detail.

+The [`handoff()`][agents.handoffs.handoff] function lets you customize things.

-- `agent`: This is the agent to which things will be handed off.

-- `tool_name_override`: By default, `Handoff.default_tool_name()` is used, which resolves to `transfer_to_

+> [!NOTE]

+> Looking for the JavaScript/TypeScript version? Check out [Agents SDK JS/TS](https://github.com/openai/openai-agents-js).

+

### Core concepts:

1. [**Agents**](https://openai.github.io/openai-agents-python/agents): LLMs configured with instructions, tools, guardrails, and handoffs

2. [**Handoffs**](https://openai.github.io/openai-agents-python/handoffs/): A specialized tool call used by the Agents SDK for transferring control between agents

3. [**Guardrails**](https://openai.github.io/openai-agents-python/guardrails/): Configurable safety checks for input and output validation

-4. [**Tracing**](https://openai.github.io/openai-agents-python/tracing/): Built-in tracking of agent runs, allowing you to view, debug and optimize your workflows

+4. [**Sessions**](#sessions): Automatic conversation history management across agent runs

+5. [**Tracing**](https://openai.github.io/openai-agents-python/tracing/): Built-in tracking of agent runs, allowing you to view, debug and optimize your workflows

Explore the [examples](examples) directory to see the SDK in action, and read our [documentation](https://openai.github.io/openai-agents-python/) for more details.

@@ -17,14 +21,23 @@ Explore the [examples](examples) directory to see the SDK in action, and read ou

1. Set up your Python environment

-```

+- Option A: Using venv (traditional method)

+

+```bash

python -m venv env

-source env/bin/activate

+source env/bin/activate # On Windows: env\Scripts\activate

```

-2. Install Agents SDK

+- Option B: Using uv (recommended)

+```bash

+uv venv

+source .venv/bin/activate # On Windows: .venv\Scripts\activate

```

+

+2. Install Agents SDK

+

+```bash

pip install openai-agents

```

@@ -47,7 +60,7 @@ print(result.final_output)

(_If running this, ensure you set the `OPENAI_API_KEY` environment variable_)

-(_For Jupyter notebook users, see [hello_world_jupyter.py](examples/basic/hello_world_jupyter.py)_)

+(_For Jupyter notebook users, see [hello_world_jupyter.ipynb](examples/basic/hello_world_jupyter.ipynb)_)

## Handoffs example

@@ -144,6 +157,118 @@ The Agents SDK is designed to be highly flexible, allowing you to model a wide r

The Agents SDK automatically traces your agent runs, making it easy to track and debug the behavior of your agents. Tracing is extensible by design, supporting custom spans and a wide variety of external destinations, including [Logfire](https://logfire.pydantic.dev/docs/integrations/llms/openai/#openai-agents), [AgentOps](https://docs.agentops.ai/v1/integrations/agentssdk), [Braintrust](https://braintrust.dev/docs/guides/traces/integrations#openai-agents-sdk), [Scorecard](https://docs.scorecard.io/docs/documentation/features/tracing#openai-agents-sdk-integration), and [Keywords AI](https://docs.keywordsai.co/integration/development-frameworks/openai-agent). For more details about how to customize or disable tracing, see [Tracing](http://openai.github.io/openai-agents-python/tracing), which also includes a larger list of [external tracing processors](http://openai.github.io/openai-agents-python/tracing/#external-tracing-processors-list).

+## Long running agents & human-in-the-loop

+

+You can use the Agents SDK [Temporal](https://temporal.io/) integration to run durable, long-running workflows, including human-in-the-loop tasks. View a demo of Temporal and the Agents SDK working in action to complete long-running tasks [in this video](https://www.youtube.com/watch?v=fFBZqzT4DD8), and [view docs here](https://github.com/temporalio/sdk-python/tree/main/temporalio/contrib/openai_agents).

+

+## Sessions

+

+The Agents SDK provides built-in session memory to automatically maintain conversation history across multiple agent runs, eliminating the need to manually handle `.to_input_list()` between turns.

+

+### Quick start

+

+```python

+from agents import Agent, Runner, SQLiteSession

+

+# Create agent

+agent = Agent(

+ name="Assistant",

+ instructions="Reply very concisely.",

+)

+

+# Create a session instance

+session = SQLiteSession("conversation_123")

+

+# First turn

+result = await Runner.run(

+ agent,

+ "What city is the Golden Gate Bridge in?",

+ session=session

+)

+print(result.final_output) # "San Francisco"

+

+# Second turn - agent automatically remembers previous context

+result = await Runner.run(

+ agent,

+ "What state is it in?",

+ session=session

+)

+print(result.final_output) # "California"

+

+# Also works with the synchronous runner (call run_sync from synchronous code, not inside a running event loop)

+result = Runner.run_sync(

+ agent,

+ "What's the population?",

+ session=session

+)

+print(result.final_output) # "Approximately 39 million"

+```

+

+### Session options

+

+- **No memory** (default): No session memory when the `session` parameter is omitted

+- **`session: Session = DatabaseSession(...)`**: Use a Session instance to manage conversation history

+

+```python

+from agents import Agent, Runner, SQLiteSession

+

+# Custom SQLite database file

+session = SQLiteSession("user_123", "conversations.db")

+agent = Agent(name="Assistant")

+

+# Different session IDs maintain separate conversation histories

+result1 = await Runner.run(

+ agent,

+ "Hello",

+ session=session

+)

+result2 = await Runner.run(

+ agent,

+ "Hello",

+ session=SQLiteSession("user_456", "conversations.db")

+)

+```
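To see why two sessions pointing at the same database file keep separate histories, here is a hypothetical sketch using Python's built-in `sqlite3` module. The table layout and the helper names `open_store`, `add_item`, and `get_items` are assumptions for illustration, not `SQLiteSession`'s actual schema or API: rows are keyed by session ID, so reads for one ID never see another ID's items.

```python
import json
import sqlite3


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    # One table holds every session; rows are partitioned by session_id.
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS messages ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "session_id TEXT NOT NULL, "
        "item TEXT NOT NULL)"
    )
    return conn


def add_item(conn: sqlite3.Connection, session_id: str, item: dict) -> None:
    conn.execute(
        "INSERT INTO messages (session_id, item) VALUES (?, ?)",
        (session_id, json.dumps(item)),
    )
    conn.commit()


def get_items(conn: sqlite3.Connection, session_id: str) -> list:
    # Ordering by the autoincrement id preserves conversation order within a session.
    rows = conn.execute(
        "SELECT item FROM messages WHERE session_id = ? ORDER BY id",
        (session_id,),
    ).fetchall()
    return [json.loads(row[0]) for row in rows]


conn = open_store()
add_item(conn, "user_123", {"role": "user", "content": "Hello"})
add_item(conn, "user_456", {"role": "user", "content": "Hello"})
add_item(conn, "user_123", {"role": "user", "content": "What did I just say?"})
```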

+

+### Custom session implementations

+

+You can implement your own session memory by creating a class that follows the `Session` protocol:

+

+```python

+from agents import Agent, Runner

+from agents.memory import Session

+from typing import List

+

+class MyCustomSession:

+ """Custom session implementation following the Session protocol."""

+

+ def __init__(self, session_id: str):

+ self.session_id = session_id

+ # Your initialization here

+

+ async def get_items(self, limit: int | None = None) -> List[dict]:

+ # Retrieve conversation history for the session

+ pass

+

+ async def add_items(self, items: List[dict]) -> None:

+ # Store new items for the session

+ pass

+

+ async def pop_item(self) -> dict | None:

+ # Remove and return the most recent item from the session

+ pass

+

+ async def clear_session(self) -> None:

+ # Clear all items for the session

+ pass

+

+# Use your custom session

+agent = Agent(name="Assistant")

+result = await Runner.run(

+ agent,

+ "Hello",

+ session=MyCustomSession("my_session")

+)

+```
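For testing or prototyping, the four methods can be filled in with a plain Python list. This `InMemorySession` is a hypothetical sketch that follows the same method shapes as `MyCustomSession`; items are plain dicts here, and the assumption that `get_items(limit=N)` returns the most recent N items in chronological order is this sketch's choice.

```python
from __future__ import annotations

import asyncio


class InMemorySession:
    """Hypothetical session that keeps conversation items in a Python list."""

    def __init__(self, session_id: str) -> None:
        self.session_id = session_id
        self._items: list[dict] = []

    async def get_items(self, limit: int | None = None) -> list[dict]:
        # Oldest-first history; with a limit, only the most recent `limit` items.
        if limit is None:
            return list(self._items)
        return self._items[-limit:] if limit > 0 else []

    async def add_items(self, items: list[dict]) -> None:
        self._items.extend(items)

    async def pop_item(self) -> dict | None:
        # Remove and return the newest item, or None when the history is empty.
        return self._items.pop() if self._items else None

    async def clear_session(self) -> None:
        self._items.clear()


async def demo() -> tuple:
    session = InMemorySession("my_session")
    await session.add_items([{"n": 1}, {"n": 2}, {"n": 3}])
    recent = await session.get_items(limit=2)
    popped = await session.pop_item()
    await session.clear_session()
    remaining = await session.get_items()
    return recent, popped, remaining


print(asyncio.run(demo()))
```

An instance would be passed as `session=InMemorySession("my_session")`, just like `MyCustomSession`; unlike a database-backed session, its history is lost when the process exits.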

+

## Development (only needed if you need to edit the SDK/examples)

0. Ensure you have [`uv`](https://docs.astral.sh/uv/) installed.

@@ -160,10 +285,17 @@ make sync

2. (After making changes) lint/test

+```

+make check # run tests, linter and typechecker

+```

+

+Or to run them individually:

+

```

make tests # run tests

make mypy # run typechecker

make lint # run linter

+make format-check # run style checker

```

## Acknowledgements

@@ -171,6 +303,7 @@ make lint # run linter

We'd like to acknowledge the excellent work of the open-source community, especially:

- [Pydantic](https://docs.pydantic.dev/latest/) (data validation) and [PydanticAI](https://ai.pydantic.dev/) (advanced agent framework)

+- [LiteLLM](https://github.com/BerriAI/litellm) (unified interface for 100+ LLMs)

- [MkDocs](https://github.com/squidfunk/mkdocs-material)

- [Griffe](https://github.com/mkdocstrings/griffe)

- [uv](https://github.com/astral-sh/uv) and [ruff](https://github.com/astral-sh/ruff)

diff --git a/docs/agents.md b/docs/agents.md

index 39d4afd57..5dbd775a6 100644

--- a/docs/agents.md

+++ b/docs/agents.md

@@ -6,6 +6,7 @@ Agents are the core building block in your apps. An agent is a large language mo

The most common properties of an agent you'll configure are:

+- `name`: A required string that identifies your agent.

- `instructions`: also known as a developer message or system prompt.

- `model`: which LLM to use, and optional `model_settings` to configure model tuning parameters like temperature, top_p, etc.

- `tools`: Tools that the agent can use to achieve its tasks.

@@ -15,6 +16,7 @@ from agents import Agent, ModelSettings, function_tool

@function_tool

def get_weather(city: str) -> str:

+ """Returns weather info for the specified city."""

return f"The weather in {city} is sunny"

agent = Agent(

@@ -32,6 +34,7 @@ Agents are generic on their `context` type. Context is a dependency-injection to

```python

@dataclass

class UserContext:

+ name: str

uid: str

is_pro_user: bool

@@ -112,7 +115,7 @@ Sometimes, you want to observe the lifecycle of an agent. For example, you may w

## Guardrails

-Guardrails allow you to run checks/validations on user input, in parallel to the agent running. For example, you could screen the user's input for relevance. Read more in the [guardrails](guardrails.md) documentation.

+Guardrails allow you to run checks/validations on user input in parallel to the agent running, and on the agent's output once it is produced. For example, you could screen the user's input and agent's output for relevance. Read more in the [guardrails](guardrails.md) documentation.

## Cloning/copying agents

@@ -140,8 +143,103 @@ Supplying a list of tools doesn't always mean the LLM will use a tool. You can f

3. `none`, which requires the LLM to _not_ use a tool.

4. Setting a specific string e.g. `my_tool`, which requires the LLM to use that specific tool.

+```python

+from agents import Agent, Runner, function_tool, ModelSettings

+

+@function_tool

+def get_weather(city: str) -> str:

+ """Returns weather info for the specified city."""

+ return f"The weather in {city} is sunny"

+

+agent = Agent(

+ name="Weather Agent",

+ instructions="Retrieve weather details.",

+ tools=[get_weather],

+ model_settings=ModelSettings(tool_choice="get_weather")

+)

+```

+

+## Tool Use Behavior

+

+The `tool_use_behavior` parameter in the `Agent` configuration controls how tool outputs are handled:

+- `"run_llm_again"`: The default. Tools are run, and the LLM processes the results to produce a final response.

+- `"stop_on_first_tool"`: The output of the first tool call is used as the final response, without further LLM processing.

+

+```python

+from agents import Agent, Runner, function_tool, ModelSettings

+

+@function_tool

+def get_weather(city: str) -> str:

+ """Returns weather info for the specified city."""

+ return f"The weather in {city} is sunny"

+

+agent = Agent(

+ name="Weather Agent",

+ instructions="Retrieve weather details.",

+ tools=[get_weather],

+ tool_use_behavior="stop_on_first_tool"

+)

+```

+

+- `StopAtTools(stop_at_tool_names=[...])`: Stops if any specified tool is called, using its output as the final response.

+```python

+from agents import Agent, Runner, function_tool

+from agents.agent import StopAtTools

+

+@function_tool

+def get_weather(city: str) -> str:

+ """Returns weather info for the specified city."""

+ return f"The weather in {city} is sunny"

+

+@function_tool

+def sum_numbers(a: int, b: int) -> int:

+ """Adds two numbers."""

+ return a + b

+

+agent = Agent(

+ name="Stop At Stock Agent",

+ instructions="Get weather or sum numbers.",

+ tools=[get_weather, sum_numbers],

+ tool_use_behavior=StopAtTools(stop_at_tool_names=["get_weather"])

+)

+```

+- `ToolsToFinalOutputFunction`: A custom function that processes tool results and decides whether to stop or continue with the LLM.

+

+```python

+from agents import Agent, Runner, function_tool, FunctionToolResult, RunContextWrapper

+from agents.agent import ToolsToFinalOutputResult

+from typing import List, Any

+

+@function_tool

+def get_weather(city: str) -> str:

+ """Returns weather info for the specified city."""

+ return f"The weather in {city} is sunny"

+

+def custom_tool_handler(

+ context: RunContextWrapper[Any],

+ tool_results: List[FunctionToolResult]

+) -> ToolsToFinalOutputResult:

+ """Processes tool results to decide final output."""

+ for result in tool_results:

+ if result.output and "sunny" in result.output:

+ return ToolsToFinalOutputResult(

+ is_final_output=True,

+ final_output=f"Final weather: {result.output}"

+ )

+ return ToolsToFinalOutputResult(

+ is_final_output=False,

+ final_output=None

+ )

+

+agent = Agent(

+ name="Weather Agent",

+ instructions="Retrieve weather details.",

+ tools=[get_weather],

+ tool_use_behavior=custom_tool_handler

+)

+```
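The four `tool_use_behavior` options can be summarized as one decision: after tools run, either produce a final output or send the results back to the LLM. The sketch below models that decision with hypothetical stand-ins (`ToolResult`, `StopAt`, and `final_output_after_tools` are not SDK names), and simplifies the custom function to take only the results and return a string or `None`, whereas the SDK's version also receives the run context and returns a `ToolsToFinalOutputResult`.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Union


@dataclass
class ToolResult:
    """Hypothetical stand-in for a function tool's result."""

    tool_name: str
    output: str


@dataclass
class StopAt:
    """Hypothetical stand-in for StopAtTools(stop_at_tool_names=[...])."""

    tool_names: List[str]


Behavior = Union[str, StopAt, Callable[[List[ToolResult]], Optional[str]]]


def final_output_after_tools(behavior: Behavior, results: List[ToolResult]) -> Optional[str]:
    """Return the final output, or None to send the tool results back to the LLM."""
    if isinstance(behavior, StopAt):
        # Stop only if one of the named tools ran, using its output.
        for result in results:
            if result.tool_name in behavior.tool_names:
                return result.output
        return None
    if callable(behavior):
        # A custom function decides whether to stop.
        return behavior(results)
    if behavior == "run_llm_again":
        return None
    if behavior == "stop_on_first_tool":
        return results[0].output if results else None
    raise ValueError(f"unknown tool_use_behavior: {behavior!r}")


results = [ToolResult("sum_numbers", "3"), ToolResult("get_weather", "The weather in Tokyo is sunny")]
print(final_output_after_tools(StopAt(["get_weather"]), results))
```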

+

!!! note

To prevent infinite loops, the framework automatically resets `tool_choice` to "auto" after a tool call. This behavior is configurable via [`agent.reset_tool_choice`][agents.agent.Agent.reset_tool_choice]. The infinite loop is because tool results are sent to the LLM, which then generates another tool call because of `tool_choice`, ad infinitum.

- If you want the Agent to completely stop after a tool call (rather than continuing with auto mode), you can set [`Agent.tool_use_behavior="stop_on_first_tool"`] which will directly use the tool output as the final response without further LLM processing.
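The infinite loop described in the note can be simulated with a toy model. In this hypothetical sketch, `fake_model` always calls a tool while `tool_choice` forces one, and answers in text once `tool_choice` is `"auto"` and it has seen a tool result; `run_loop` and `max_turns` are illustrative names. Resetting `tool_choice` after the first tool call ends the run; without the reset, the loop only stops at the turn limit.

```python
from __future__ import annotations


def fake_model(tool_choice: str, has_tool_result: bool) -> str:
    """Hypothetical model: obeys a forced tool_choice, otherwise answers once it has a result."""
    if tool_choice != "auto":
        return "tool_call"
    return "final_answer" if has_tool_result else "tool_call"


def run_loop(reset_tool_choice: bool, max_turns: int = 10) -> tuple[str, int]:
    tool_choice = "required"  # force a tool call on the first turn
    has_tool_result = False
    tool_calls = 0
    for _ in range(max_turns):
        if fake_model(tool_choice, has_tool_result) == "final_answer":
            return "final_answer", tool_calls
        tool_calls += 1
        has_tool_result = True  # the tool result is sent back to the model
        if reset_tool_choice:
            tool_choice = "auto"  # reset after a tool call, as the note describes
    return "max_turns_exceeded", tool_calls


print(run_loop(reset_tool_choice=True))   # ('final_answer', 1)
print(run_loop(reset_tool_choice=False))  # ('max_turns_exceeded', 10)
```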

diff --git a/docs/context.md b/docs/context.md

index 4176ec51f..6e54565e0 100644

--- a/docs/context.md

+++ b/docs/context.md

@@ -38,7 +38,8 @@ class UserInfo: # (1)!

@function_tool

async def fetch_user_age(wrapper: RunContextWrapper[UserInfo]) -> str: # (2)!

- return f"User {wrapper.context.name} is 47 years old"

+ """Fetch the age of the user. Call this function to get user's age information."""

+ return f"The user {wrapper.context.name} is 47 years old"

async def main():

user_info = UserInfo(name="John", uid=123)

diff --git a/docs/examples.md b/docs/examples.md

index 30d602827..ae40fa909 100644

--- a/docs/examples.md

+++ b/docs/examples.md

@@ -40,3 +40,6 @@ Check out a variety of sample implementations of the SDK in the examples section

- **[voice](https://github.com/openai/openai-agents-python/tree/main/examples/voice):**

See examples of voice agents, using our TTS and STT models.

+

+- **[realtime](https://github.com/openai/openai-agents-python/tree/main/examples/realtime):**

+ Examples showing how to build realtime experiences using the SDK.

diff --git a/docs/guardrails.md b/docs/guardrails.md

index 2f0be0f2a..8df904a4c 100644

--- a/docs/guardrails.md

+++ b/docs/guardrails.md

@@ -23,7 +23,7 @@ Input guardrails run in 3 steps:

Output guardrails run in 3 steps:

-1. First, the guardrail receives the same input passed to the agent.

+1. First, the guardrail receives the output produced by the agent.

2. Next, the guardrail function runs to produce a [`GuardrailFunctionOutput`][agents.guardrail.GuardrailFunctionOutput], which is then wrapped in an [`OutputGuardrailResult`][agents.guardrail.OutputGuardrailResult]

3. Finally, we check if [`.tripwire_triggered`][agents.guardrail.GuardrailFunctionOutput.tripwire_triggered] is true. If true, an [`OutputGuardrailTripwireTriggered`][agents.exceptions.OutputGuardrailTripwireTriggered] exception is raised, so you can appropriately respond to the user or handle the exception.

diff --git a/docs/handoffs.md b/docs/handoffs.md

index 0b868c4af..85707c6b3 100644

--- a/docs/handoffs.md

+++ b/docs/handoffs.md

@@ -36,6 +36,7 @@ The [`handoff()`][agents.handoffs.handoff] function lets you customize things.

- `on_handoff`: A callback function executed when the handoff is invoked. This is useful for things like kicking off some data fetching as soon as you know a handoff is being invoked. This function receives the agent context, and can optionally also receive LLM generated input. The input data is controlled by the `input_type` param.

- `input_type`: The type of input expected by the handoff (optional).

- `input_filter`: This lets you filter the input received by the next agent. See below for more.

+- `is_enabled`: Whether the handoff is enabled. This can be a boolean or a function that returns a boolean, allowing you to dynamically enable or disable the handoff at runtime.
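The `is_enabled` option accepts either a boolean or a function. The sketch below shows how such a value might be resolved at run time; it is hypothetical throughout: `resolve_enabled` and `enabled_handoffs` are illustrative helpers, the callable here receives only a context dict (the SDK's callable may also receive the agent and may be async), and a disabled handoff is assumed simply not to be offered to the LLM.

```python
from __future__ import annotations

from typing import Any, Callable, Dict, List, Union

# Hypothetical: a handoff's is_enabled is a bool or a callable that returns a bool.
EnabledSpec = Union[bool, Callable[[Any], bool]]


def resolve_enabled(is_enabled: EnabledSpec, context: Any) -> bool:
    # Callables are evaluated at run time; plain booleans are used as-is.
    if callable(is_enabled):
        return bool(is_enabled(context))
    return bool(is_enabled)


def enabled_handoffs(handoffs: Dict[str, EnabledSpec], context: Any) -> List[str]:
    # Only enabled handoffs would be offered to the LLM as tools.
    return [name for name, spec in handoffs.items() if resolve_enabled(spec, context)]


handoffs = {
    "billing_agent": True,
    "refund_agent": lambda ctx: ctx["is_pro_user"],  # only for pro users
    "legacy_agent": False,
}
print(enabled_handoffs(handoffs, {"is_pro_user": False}))  # ['billing_agent']
```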

```python

from agents import Agent, handoff, RunContextWrapper

diff --git a/docs/index.md b/docs/index.md

index 8aef6574e..f8eb7dfec 100644

--- a/docs/index.md

+++ b/docs/index.md

@@ -4,7 +4,8 @@ The [OpenAI Agents SDK](https://github.com/openai/openai-agents-python) enables

- **Agents**, which are LLMs equipped with instructions and tools

- **Handoffs**, which allow agents to delegate to other agents for specific tasks

-- **Guardrails**, which enable the inputs to agents to be validated

+- **Guardrails**, which enable validation of agent inputs and outputs

+- **Sessions**, which automatically maintain conversation history across agent runs

In combination with Python, these primitives are powerful enough to express complex relationships between tools and agents, and allow you to build real-world applications without a steep learning curve. In addition, the SDK comes with built-in **tracing** that lets you visualize and debug your agentic flows, as well as evaluate them and even fine-tune models for your application.

@@ -21,6 +22,7 @@ Here are the main features of the SDK:

- Python-first: Use built-in language features to orchestrate and chain agents, rather than needing to learn new abstractions.

- Handoffs: A powerful feature to coordinate and delegate between multiple agents.

- Guardrails: Run input validations and checks in parallel to your agents, breaking early if the checks fail.

+- Sessions: Automatic conversation history management across agent runs, eliminating manual state handling.

- Function tools: Turn any Python function into a tool, with automatic schema generation and Pydantic-powered validation.

- Tracing: Built-in tracing that lets you visualize, debug and monitor your workflows, as well as use the OpenAI suite of evaluation, fine-tuning and distillation tools.

diff --git a/docs/ja/agents.md b/docs/ja/agents.md

index 828b36355..e6d72075a 100644

--- a/docs/ja/agents.md

+++ b/docs/ja/agents.md

@@ -4,21 +4,23 @@ search:

---

# Agents

-Agents are the core building block in your apps. An agent is a large language model (LLM) configured with instructions and tools.

+Agents are the core building block in your apps. An agent is a large language model (LLM), configured with instructions and tools.

## Basic configuration

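-The most common properties of an agent you'll configure are:

+The most common properties of an agent you'll configure are:

-- `instructions`: also known as a developer message or system prompt.

-- `model`: which LLM to use, and optional `model_settings` to configure model tuning parameters like temperature, top_p, etc.

-- `tools`: Tools that the agent can use to achieve its tasks.

+- `name`: A required string that identifies your agent.

+- `instructions`: also known as a developer message or system prompt.

+- `model`: which LLM to use, and optional `model_settings` to configure model tuning parameters like temperature, top_p, etc.

+- `tools`: Tools that the agent can use to achieve its tasks.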

```python

from agents import Agent, ModelSettings, function_tool

@function_tool

def get_weather(city: str) -> str:

+ """Returns weather info for the specified city."""

return f"The weather in {city} is sunny"

agent = Agent(

@@ -31,11 +33,12 @@ agent = Agent(

## Context

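-Agents are generic over their `context` type. Context is a means of dependency injection: an object you pass to `Runner.run()`. It is passed to every agent, tool, handoff, and so on, and holds the dependencies and state for the agent run in one place. Any Python object can be passed as the context.

+Agents are generic on their `context` type. Context is a dependency-injection tool: an object you create and pass to `Runner.run()`, which is then passed to every agent, tool, handoff, etc., and serves as a grab bag of dependencies and state for the agent run. You can provide any Python object as the context.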

```python

@dataclass

class UserContext:

+    name: str

    uid: str

    is_pro_user: bool

@@ -49,7 +52,7 @@ agent = Agent[UserContext](

## Output types

-By default, agents output plain text (i.e. `str`). If you want output of a specific type, use the `output_type` parameter. [Pydantic](https://docs.pydantic.dev/) objects are the usual choice, but any type that can be wrapped in a Pydantic [TypeAdapter](https://docs.pydantic.dev/latest/api/type_adapter/) works, for example dataclasses, lists, and TypedDict.

+By default, agents produce plain text (i.e. `str`) outputs. If you want the agent to produce a particular type of output, you can use the `output_type` parameter. A common choice is to use [Pydantic](https://docs.pydantic.dev/) objects, but any type that can be wrapped in a Pydantic [TypeAdapter](https://docs.pydantic.dev/latest/api/type_adapter/) (dataclasses, lists, TypedDict, etc.) is supported.

```python

from pydantic import BaseModel

@@ -70,11 +73,11 @@ agent = Agent(

!!! note

-    Passing `output_type` instructs the model to use [structured outputs](https://platform.openai.com/docs/guides/structured-outputs) instead of regular plain-text responses.

+    When you pass an `output_type`, the model is instructed to use [structured outputs](https://platform.openai.com/docs/guides/structured-outputs) instead of regular plain-text responses.

## Handoffs

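-Handoffs are sub-agents that the agent can delegate to. If you pass a list of handoffs, the agent can delegate work to them as needed. This enables a powerful pattern for orchestrating modular agents that each specialize in a single task. See the [handoffs](handoffs.md) documentation for details.

+Handoffs are sub-agents that the agent can delegate to. You provide a list of handoffs, and the agent can choose to delegate to them if relevant. This is a powerful pattern for orchestrating modular, specialized agents that excel at a single task. Read more in the [handoffs](handoffs.md) documentation.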

```python

from agents import Agent

@@ -93,9 +96,9 @@ triage_agent = Agent(

)

```

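-## Dynamic instructions

+## Dynamic instructions

-Usually you specify instructions when you create the agent, but you can also provide them dynamically through a function. That function receives the agent and the context and must return the prompt. Both synchronous and `async` functions are supported.

+In most cases, you can provide instructions when you create the agent. However, you can also provide dynamic instructions via a function. The function receives the agent and the context, and must return the prompt. Both regular and `async` functions are accepted.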

```python

def dynamic_instructions(

@@ -110,17 +113,17 @@ agent = Agent[UserContext](

)

```

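-## Lifecycle events (hooks)

+## Lifecycle events (hooks)

-In some cases, you may want to observe an agent's lifecycle, for example to log events or to prefetch data when certain events occur. You can hook into the agent lifecycle with the `hooks` property. Subclass the [`AgentHooks`][agents.lifecycle.AgentHooks] class and override the methods you're interested in.

+Sometimes you want to observe the lifecycle of an agent. For example, you may want to log events, or pre-fetch data when certain events occur. You can hook into the agent lifecycle with the `hooks` property. Subclass the [`AgentHooks`][agents.lifecycle.AgentHooks] class, and override the methods you're interested in.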

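## Guardrails

-Guardrails let you run checks and validations on user input in parallel with the agent's execution. For example, you can screen the user's input for relevance. See the [guardrails](guardrails.md) documentation for details.

+Guardrails let you run checks/validations on user input in parallel with the agent's execution, and on the agent's output once it has been produced. For example, you can screen the user's input and the agent's output for relevance. Read more in the [guardrails](guardrails.md) documentation.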

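-## Cloning agents

+## Cloning/copying agents

-The `clone()` method lets you duplicate an agent and optionally change any of its properties.

+By using an agent's `clone()` method, you can duplicate the agent and optionally change any properties you like.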

```python

pirate_agent = Agent(

@@ -137,15 +140,109 @@ robot_agent = pirate_agent.clone(

## Forcing tool use

-Passing a list of tools doesn't guarantee that the LLM will use a tool. You can force tool use by setting [`ModelSettings.tool_choice`][agents.model_settings.ModelSettings.tool_choice]. Valid values are:

+Supplying a list of tools doesn't always mean the LLM will use a tool. You can force tool use by setting [`ModelSettings.tool_choice`][agents.model_settings.ModelSettings.tool_choice]. Valid values are:

-1. `auto`: the LLM decides whether to use a tool.

-2. `required`: the LLM must use a tool (which tool is chosen automatically).

-3. `none`: the LLM must not use a tool.

-4. A specific string (e.g. `my_tool`): the LLM must use that specific tool.

+1. `auto`: leaves it to the LLM to decide whether or not to use a tool.

+2. `required`: requires the LLM to use a tool (it can intelligently decide which one).

+3. `none`: requires the LLM to not use a tool.

+4. Setting a specific string (e.g. `my_tool`): requires the LLM to use that specific tool.

-!!! note

+```python

+from agents import Agent, Runner, function_tool, ModelSettings

+

+@function_tool

+def get_weather(city: str) -> str:

+    """Returns weather info for the specified city."""

+    return f"The weather in {city} is sunny"

+

+agent = Agent(

+    name="Weather Agent",

+    instructions="Retrieve weather details.",

+    tools=[get_weather],

+    model_settings=ModelSettings(tool_choice="get_weather")

+)

+```

+

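+## Tool use behavior

+

+The `tool_use_behavior` parameter in the `Agent` configuration controls how tool outputs are handled:

+- `"run_llm_again"`: The default. Tools are run, and the LLM processes the results to produce a final response.

+- `"stop_on_first_tool"`: The output of the first tool call is used directly as the final response, with no further LLM processing.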

+

+```python

+from agents import Agent, Runner, function_tool, ModelSettings

+

+@function_tool

+def get_weather(city: str) -> str:

+    """Returns weather info for the specified city."""

+    return f"The weather in {city} is sunny"

+

+agent = Agent(

+    name="Weather Agent",

+    instructions="Retrieve weather details.",

+    tools=[get_weather],

+    tool_use_behavior="stop_on_first_tool"

+)

+```

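-    To prevent infinite loops, the framework automatically resets `tool_choice` to "auto" after a tool call. This behavior can be configured with [`agent.reset_tool_choice`][agents.agent.Agent.reset_tool_choice]. The infinite loop arises because the tool result is sent to the LLM, and `tool_choice` makes it generate another tool call, over and over.

+- `StopAtTools(stop_at_tool_names=[...])`: Stops as soon as any of the specified tools is called, and uses its output as the final response.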

+```python

+from agents import Agent, Runner, function_tool

+from agents.agent import StopAtTools

+

+@function_tool

+def get_weather(city: str) -> str:

+    """Returns weather info for the specified city."""

+    return f"The weather in {city} is sunny"

+

+@function_tool

+def sum_numbers(a: int, b: int) -> int:

+    """Adds two numbers."""

+    return a + b

+

+agent = Agent(

+    name="Stop At Stock Agent",

+    instructions="Get weather or sum numbers.",

+    tools=[get_weather, sum_numbers],

+    tool_use_behavior=StopAtTools(stop_at_tool_names=["get_weather"])

+)

+```

+- `ToolsToFinalOutputFunction`: A custom function that processes tool results and decides whether to stop or continue with the LLM.

+

+```python

+from agents import Agent, Runner, function_tool, FunctionToolResult, RunContextWrapper

+from agents.agent import ToolsToFinalOutputResult

+from typing import List, Any

+

+@function_tool

+def get_weather(city: str) -> str:

+    """Returns weather info for the specified city."""

+    return f"The weather in {city} is sunny"

+

+def custom_tool_handler(

+    context: RunContextWrapper[Any],

+    tool_results: List[FunctionToolResult]

+) -> ToolsToFinalOutputResult:

+    """Processes tool results to decide final output."""

+    for result in tool_results:

+        if result.output and "sunny" in result.output:

+            return ToolsToFinalOutputResult(

+                is_final_output=True,

+                final_output=f"Final weather: {result.output}"

+            )

+    return ToolsToFinalOutputResult(

+        is_final_output=False,

+        final_output=None

+    )

+

+agent = Agent(

+    name="Weather Agent",

+    instructions="Retrieve weather details.",

+    tools=[get_weather],

+    tool_use_behavior=custom_tool_handler

+)

+```

+

+!!! note

-    If you want the agent to stop completely after a tool call (rather than continuing in auto mode), set [`Agent.tool_use_behavior="stop_on_first_tool"`]. The tool output is then returned directly as the final response, without further LLM processing.

\ No newline at end of file

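+    To prevent infinite loops, the framework automatically resets `tool_choice` to "auto" after a tool call. This behavior is configurable via [`agent.reset_tool_choice`][agents.agent.Agent.reset_tool_choice]. The infinite loop occurs because tool results are sent to the LLM, which, because of `tool_choice`, keeps generating yet another tool call.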

\ No newline at end of file
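The interplay between `tool_choice`, `reset_tool_choice`, and `tool_use_behavior` described in the agents.md changes above can be sketched as a small simulation. This is a toy, pure-Python model that assumes a scripted fake LLM (`fake_llm`), two canned tools, and a hypothetical `StopAtToolNames` class standing in for `StopAtTools`. None of these names are SDK APIs, and this is not how the SDK's runner is implemented; it only mirrors the documented behaviors.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Union


# Toy simulation of the documented tool-use loop. Every name here is hypothetical;
# nothing below is an SDK API.
@dataclass
class ToolResult:
    tool_name: str
    output: str


@dataclass
class StopAtToolNames:
    """Hypothetical stand-in for StopAtTools: stop once one of these tools runs."""

    names: list[str]


# A custom handler returns the final output, or None to let the LLM run again.
Behavior = Union[str, StopAtToolNames, Callable[[list[ToolResult]], Optional[str]]]

TOOLS: dict[str, Callable[[], str]] = {
    "get_weather": lambda: "The weather in Tokyo is sunny",
    "sum_numbers": lambda: "3",
}


def fake_llm(tool_choice: str, turn: int) -> Optional[str]:
    """Scripted 'model': returns the name of a tool to call, or None to answer in text."""
    if tool_choice == "none":
        return None
    if tool_choice in TOOLS:  # a specific tool name forces that tool
        return tool_choice
    if tool_choice == "required":  # must call some tool; this toy always picks get_weather
        return "get_weather"
    # "auto": this toy model only calls a tool on its first turn
    return "get_weather" if turn == 0 else None


def run(
    behavior: Behavior = "run_llm_again",
    tool_choice: str = "auto",
    reset_tool_choice: bool = True,
    max_turns: int = 5,
) -> str:
    for turn in range(max_turns):
        tool = fake_llm(tool_choice, turn)
        if tool is None:
            return f"LLM answer after {turn} tool call(s)"
        result = ToolResult(tool, TOOLS[tool]())
        if behavior == "stop_on_first_tool":
            return result.output  # tool output becomes the final response
        if isinstance(behavior, StopAtToolNames) and tool in behavior.names:
            return result.output
        if callable(behavior):
            final = behavior([result])
            if final is not None:
                return final
        # Otherwise ("run_llm_again"), the result goes back to the LLM for another turn.
        if reset_tool_choice:
            tool_choice = "auto"  # without this, a forced tool_choice would repeat forever
    raise RuntimeError("max_turns exceeded: the LLM kept calling tools")


print(run("run_llm_again"))  # LLM answer after 1 tool call(s)
print(run("stop_on_first_tool"))  # The weather in Tokyo is sunny
print(run("run_llm_again", tool_choice="required"))  # LLM answer after 1 tool call(s)
```

With `tool_choice="required"` and `reset_tool_choice=False`, the toy model calls a tool on every turn and only the turn limit ends the loop, which is the infinite loop the note warns about. With the reset enabled, the second turn runs in `auto` mode and the model answers.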

diff --git a/docs/ja/config.md b/docs/ja/config.md

index bf76b9fb6..b1b81bfdf 100644

--- a/docs/ja/config.md

+++ b/docs/ja/config.md

@@ -6,7 +6,7 @@ search:

## API キーとクライアント

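-By default, the SDK looks for the `OPENAI_API_KEY` environment variable for LLM requests and tracing as soon as it is imported. If you cannot set that environment variable before your app starts, you can set the key with the [set_default_openai_key()][agents.set_default_openai_key] function.

+By default, the SDK looks for the `OPENAI_API_KEY` environment variable for LLM requests and tracing, as soon as it is imported. If you are unable to set that environment variable before your app starts, you can use the [set_default_openai_key()][agents.set_default_openai_key] function to set the key.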

```python

from agents import set_default_openai_key

@@ -14,7 +14,7 @@ from agents import set_default_openai_key

set_default_openai_key("sk-...")

```