PDF Document Layout Analysis for Deployment on Modal.com

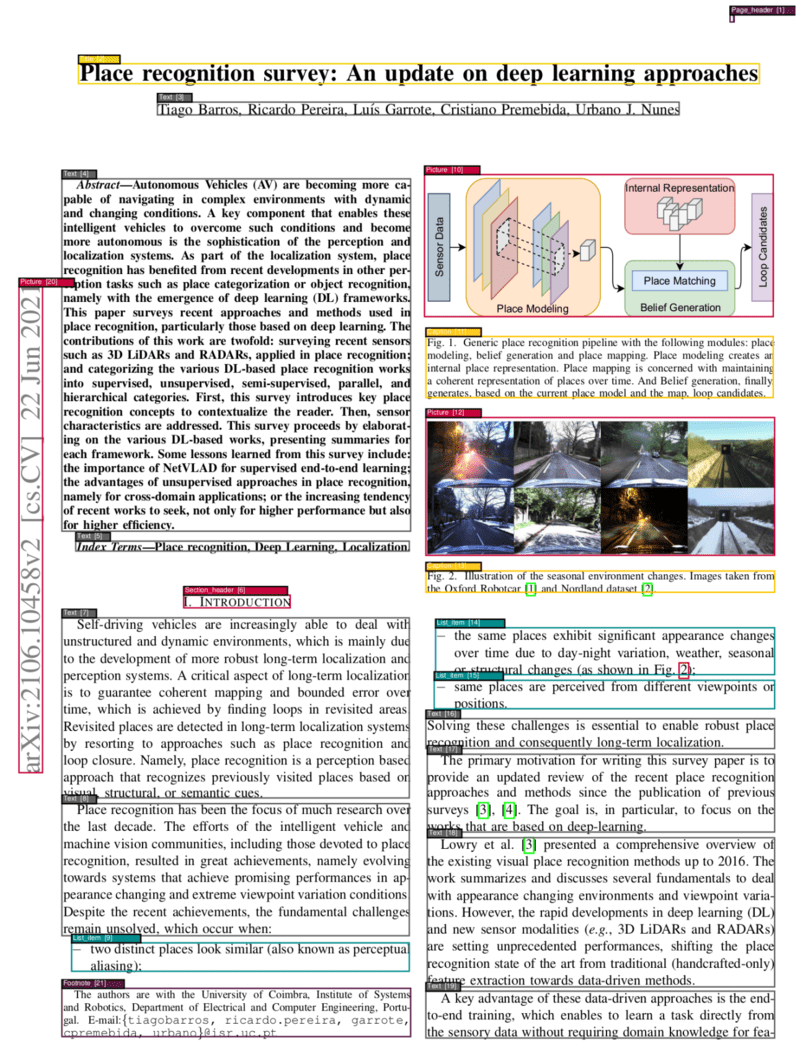

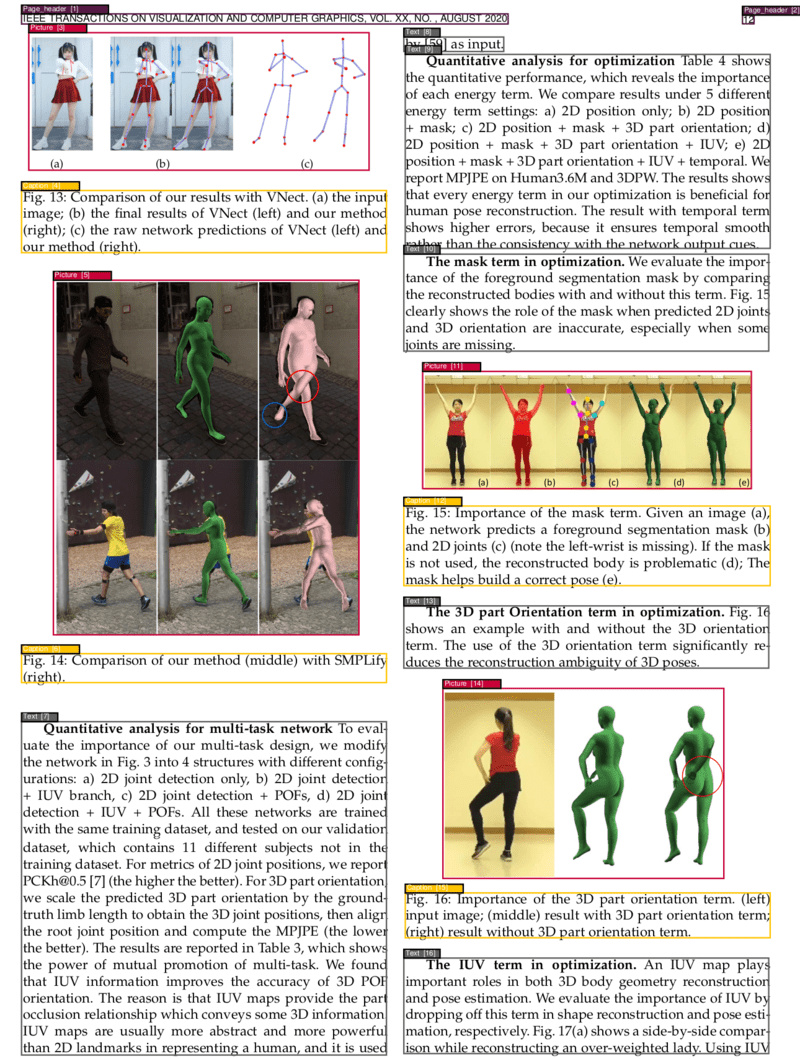

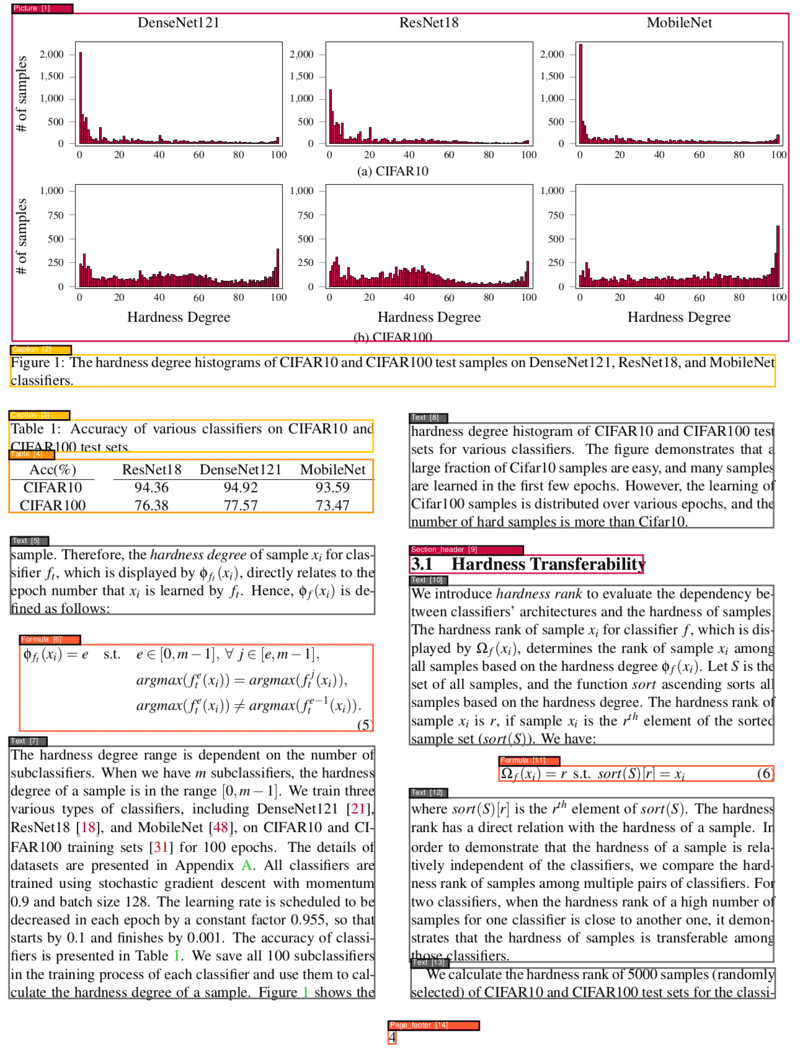

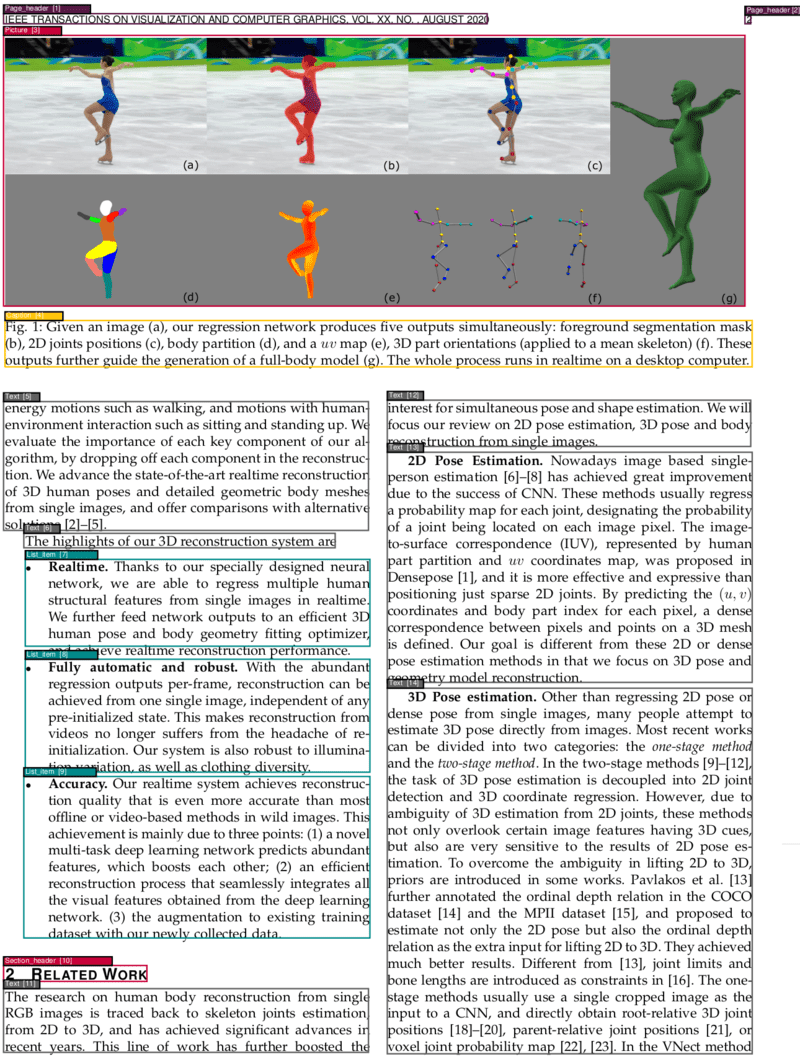

Originally created by Huridocs, this project provides a powerful and flexible PDF analysis service. The service enables OCR, segmentation, and classification of different parts of PDF pages, identifying elements such as texts, titles, pictures, tables, and so on. Additionally, it determines the correct order of these identified elements.

I (chriscarrollsmith) have modified this project to be deployed on Modal.com, a serverless cloud platform.

This service is designed to be deployed on Modal, a serverless cloud platform.

Before deploying the service, Modal tests all the imports locally. That means you'll need to install the dependencies.

You may need to install some system packages first. Here's how to install the full list of packages used by the service (on Ubuntu):

sudo apt-get install -y libgomp1 ffmpeg libsm6 libxext6 pdftohtml git ninja-build g++ qpdf pandoc ocrmypdf

The Python dependencies are installed with uv, so install uv first if you don't already have it. Then run the following commands:

uv sync

uv pip install git+https://github.com/facebookresearch/detectron2.git@70f454304e1a38378200459dd2dbca0f0f4a5ab4

uv pip install pycocotools==2.0.8

To deploy to Modal, you'll need a Modal account. Run `uv run modal setup` to log in with the Modal CLI tool, which will have been installed along with the Python dependencies when you ran `uv sync`.

You'll also need to set an API key to secure your service. Run:

export API_KEY=$(openssl rand -hex 32)

modal secret create pdf-document-secret API_KEY=$API_KEY

echo $API_KEY # Save this key securely!

Then run the following command from the repository's root directory:

uv run modal deploy -m src.modal_deployment_with_auth

Once deployed, Modal will provide a public URL for your service. You will use this URL (we'll refer to it as <YOUR_MODAL_APP_URL>) for all API requests.

Ping the /health endpoint to confirm that the service is running:

curl <YOUR_MODAL_APP_URL>/health

Get the segments from a PDF using the doclaynet (vision) model:

curl -X POST -F "file=@/PATH/TO/PDF/pdf_name.pdf" -H "X-API-Key: $API_KEY" <YOUR_MODAL_APP_URL>/api

To use the fast (LightGBM) model, add the `fast=true` parameter:

curl -X POST -F "file=@/PATH/TO/PDF/pdf_name.pdf" -F "fast=true" -H "X-API-Key: $API_KEY" <YOUR_MODAL_APP_URL>/api

Optionally, OCR the PDF by using the `/api/ocr` endpoint with the `language` parameter. (To check which languages are supported, call the `/api/info` endpoint; more languages can be installed.)

curl -X POST -F "language=en" -F "file=@/PATH/TO/PDF/pdf_name.pdf" -H "X-API-Key: $API_KEY" <YOUR_MODAL_APP_URL>/api/ocr --output ocr_document.pdf

You can stop the running application from the Modal UI or by using the Modal CLI (`modal app stop <app_name>`).
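The curl calls above can also be issued from Python. The following is a minimal sketch using only the standard library, not code from this repository: the helper names (`encode_multipart`, `build_request`, `analyze_pdf`) and the 600-second timeout are assumptions made for illustration. It sends the PDF under the form field `file` and the key in the `X-API-Key` header, as the curl examples do, and assumes the `/api` endpoint answers with JSON.

```python
import json
import os
import urllib.request
import uuid


def encode_multipart(fields, file_name, file_bytes):
    """Encode plain form fields plus one PDF (form name 'file') as multipart/form-data."""
    boundary = uuid.uuid4().hex
    parts = []
    for name, value in fields.items():
        parts.append(
            f'--{boundary}\r\nContent-Disposition: form-data; name="{name}"\r\n\r\n{value}\r\n'.encode()
        )
    file_header = (
        f'--{boundary}\r\n'
        f'Content-Disposition: form-data; name="file"; filename="{file_name}"\r\n'
        'Content-Type: application/pdf\r\n\r\n'
    )
    parts.append(file_header.encode() + file_bytes + b'\r\n')
    parts.append(f'--{boundary}--\r\n'.encode())
    return b''.join(parts), f'multipart/form-data; boundary={boundary}'


def build_request(base_url, api_key, pdf_name, pdf_bytes, endpoint="/api", **fields):
    """Build (but do not send) a POST request; extra fields could be e.g. fast='true'."""
    body, content_type = encode_multipart(fields, pdf_name, pdf_bytes)
    return urllib.request.Request(
        base_url.rstrip("/") + endpoint,
        data=body,
        headers={"Content-Type": content_type, "X-API-Key": api_key},
        method="POST",
    )


def analyze_pdf(base_url, api_key, pdf_path, **fields):
    """Send the PDF to /api and decode the JSON response (timeout is an assumed value)."""
    with open(pdf_path, "rb") as handle:
        pdf_bytes = handle.read()
    request = build_request(base_url, api_key, os.path.basename(pdf_path), pdf_bytes, **fields)
    with urllib.request.urlopen(request, timeout=600) as response:
        return json.load(response)


# Example call (replace the URL with your own deployment's URL):
# segments = analyze_pdf("<YOUR_MODAL_APP_URL>", os.environ["API_KEY"], "pdf_name.pdf", fast="true")
```

Keeping request construction separate from sending makes it possible to inspect the URL, headers, and body without touching the network.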

This service offers two model options:

- VGT (Vision Grid Transformer) - The default visual model, trained on the DocLayNet dataset. Higher accuracy, but requires more resources (GPU recommended).

- Fast (LightGBM) - A non-visual model that uses XML parsing. Faster and CPU-only, with slightly lower accuracy.

For detailed model architecture and training information, see the original repository.

Both models return the same response format: a list of SegmentBox elements, described by this JSON schema:

{
  "type": "array",
  "items": {
    "type": "object",
    "properties": {
      "left": {
        "type": "number",
        "description": "The horizontal position of the element's left edge in points from the page's left edge"
      },
      "top": {
        "type": "number",
        "description": "The vertical position of the element's top edge in points from the page's top edge"
      },
      "width": {
        "type": "number",
        "description": "The width of the element in points"
      },
      "height": {
        "type": "number",
        "description": "The height of the element in points"
      },
      "page_number": {
        "type": "integer",
        "description": "The 1-based page number where this element appears"
      },
      "page_width": {
        "type": "integer",
        "description": "The total width of the page in points"
      },
      "page_height": {
        "type": "integer",
        "description": "The total height of the page in points"
      },
      "text": {
        "type": "string",
        "description": "The textual content extracted from this document element"
      },
      "type": {
        "type": "string",
        "description": "The classification type of this element (e.g., 'Page header')"
      }
    },
    "required": [
      "left", "top", "width", "height",
      "page_number", "page_width", "page_height",
      "text", "type"
    ]
  }
}

The models detect 11 types of document elements:

1. Caption
2. Footnote
3. Formula
4. List item
5. Page footer
6. Page header
7. Picture
8. Section header
9. Table
10. Text
11. Title
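To show how the response format and element types above might be consumed, here is a small sketch that loads the JSON list into typed objects, counts segments per type, and joins the body text while skipping page headers and footers. The names `parse_segments`, `count_by_type`, and `body_text` are hypothetical helpers, not part of the service, and the example assumes the list arrives in reading order, as the service description suggests.

```python
import json
from collections import Counter
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class SegmentBox:
    """Mirrors the nine required properties of the response schema."""
    left: float
    top: float
    width: float
    height: float
    page_number: int
    page_width: int
    page_height: int
    text: str
    type: str


def parse_segments(payload):
    """Turn the JSON response body into SegmentBox objects, ignoring unknown keys."""
    known = {f.name for f in fields(SegmentBox)}
    return [
        SegmentBox(**{key: value for key, value in item.items() if key in known})
        for item in json.loads(payload)
    ]


def count_by_type(segments):
    """Count how many segments of each element type were detected."""
    return Counter(segment.type for segment in segments)


def body_text(segments, skip=("Page header", "Page footer")):
    """Join segment texts in the order received, dropping the skipped types."""
    return "\n".join(s.text for s in segments if s.type not in skip)
```

For example, `count_by_type(segments)["Table"]` gives the number of detected tables, and `body_text(segments)` yields the running text without repeated headers and footers.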

VGT Model Accuracy (mAP on PubLayNet):

- Overall: 0.962

- Text: 0.950, Title: 0.939, List: 0.968, Table: 0.981, Figure: 0.971

Speed (15-page document):

- Fast Model (CPU): 0.42 sec/page

- VGT (GPU): 1.75 sec/page

- VGT (CPU): 13.5 sec/page
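The per-page figures above allow a rough estimate of total processing time for a larger document. The sketch below simply multiplies page count by the listed rate. It assumes the rates measured on a 15-page document scale linearly and it ignores container start-up time, so treat the result as an order-of-magnitude guide only. The dictionary keys are made-up labels.

```python
# Seconds per page, taken from the speed figures listed above.
SECONDS_PER_PAGE = {
    "fast_cpu": 0.42,
    "vgt_gpu": 1.75,
    "vgt_cpu": 13.5,
}


def estimate_seconds(pages, mode):
    """Linear estimate of processing time for a document with the given page count."""
    return pages * SECONDS_PER_PAGE[mode]


# Compare the three configurations for a 200-page document.
for mode in SECONDS_PER_PAGE:
    print(f"{mode}: about {estimate_seconds(200, mode):.0f} s")
```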

- Table/Formula Extraction: Add the `extraction_format=markdown|latex|html` parameter to extract tables and formulas in structured formats.

- OCR Support: Use the `/api/ocr` endpoint with the `language` parameter for text-searchable PDFs.

- Visualization: Use the `/visualize` endpoint to get PDFs with detected segments highlighted.
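If you would rather draw the detected boxes yourself on a rendered page image than call the visualization endpoint, the segment coordinates have to be converted from points to pixels. A PDF point is 1/72 inch, so the scale factor is `dpi / 72`. The sketch below assumes the page was rendered at a known DPI with the image origin at the top-left corner, which matches the schema's `left` and `top` definitions. `points_to_pixels` is a hypothetical helper name.

```python
def points_to_pixels(left, top, width, height, dpi=150):
    """Convert a segment box in PDF points to (x0, y0, x1, y1) pixel corners."""
    scale = dpi / 72.0  # 72 points per inch
    x0 = round(left * scale)
    y0 = round(top * scale)
    x1 = round((left + width) * scale)
    y1 = round((top + height) * scale)
    return x0, y0, x1, y1
```

Scaling the corners rather than the width and height keeps adjacent boxes aligned after rounding.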

For comprehensive documentation on advanced features, model details, implementation specifics, and additional configuration options such as OCR language installation, see the original repository.