🔒 A fast, serverless, and scalable API service for automated OCR and PII redaction on document batches.

Redact exposes a FastAPI HTTPs interface, persists state in Supabase (Postgres + Storage), and offloads compute-heavy redaction workloads to Modal

-

RESTful API: Clear endpoints for submitting and retrieving redaction tasks.

-

Asynchronous Processing: Long-running redaction jobs are executed asynchronously via serverless compute.

-

Persistent Storage: Task metadata stored in Supabase Postgres via SQLAlchemy; documents stored in Supabase Storage.

-

Benchmarked: Includes load testing scripts using Locust and benchmarks for performance analysis.

-

API: FastAPI

-

Database: Supabase Postgres

-

Object Storage: Supabase Storage

-

Compute: Modal (serverless execution)

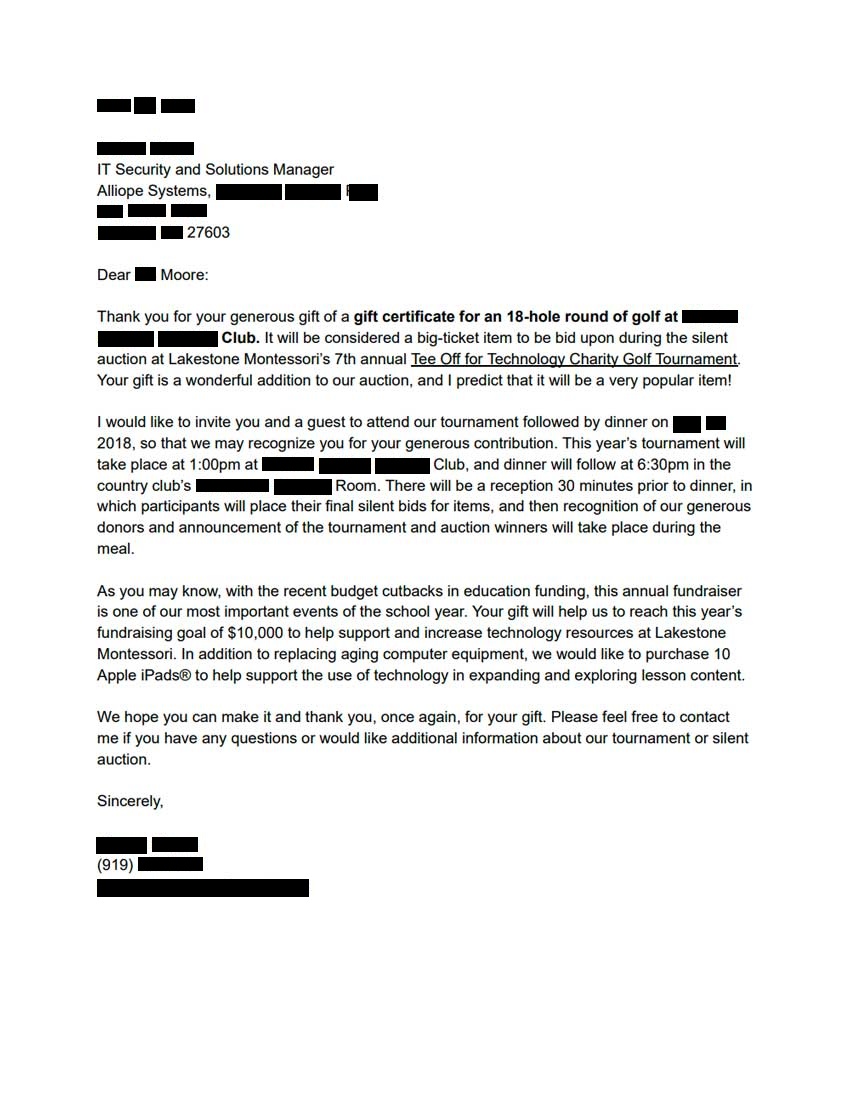

Random image I found on the internet vs. Redacted.

| Endpoint | Operation | Payload Size | Concurrent Users | Requests/sec | Avg Latency (ms) | P95 Latency (ms) | Error Rate | Notes |

|---|---|---|---|---|---|---|---|---|

POST /predict |

Create | 100KB image | 2 | 0.67 | 34.95 | 56 | 0% | Includes file validation, disk write, Inference offshoring |

GET /download/{id} |

GET | N/A | 10 | 3.58 | 10.38 | 32 | 0% | Retrieve processed document from server |

GET /check/{id} |

Read | N/A | 10 | 3.52 | 9.94 | 28 | 0% | Fetches job status from Supabase Postgres |

DELETE /drop/{id} |

Delete | N/A | 10 | 3.47 | 10.43 | 30 | Delete a batch and all related files from DB |

Legend:

- Payload Size: Size of file or JSON sent in the request.

- Concurrent Users: Simulated users (e.g., in Locust).

- Requests/sec: Throughput under load.

- Latency: Time from request to response (P95 = 95th percentile).

To interact with the hosted service as a client, see the client/ directory for example request scripts and helpers.

⚠️ Local execution note

Running the API locally is intended for development and testing only.

Production workloads are designed to run on Modal’s serverless infrastructure.

To run this project locally, you will need:

-

A Supabase project

- Supabase Postgres (used for task metadata)

- Supabase Storage (used for document and redaction artifacts)

-

A Modal account

- Modal must be configured locally (one-time setup)

-

Python 3.10+

Modal must be authenticated on your local machine.

If you have not configured Modal before, run:

modal token setThis will store your credentials locally (e.g. in ~/.modal.toml). Alternatively, you may provide credentials manually via environment variables:

MODAL_TOKEN_ID=...

MODAL_TOKEN_SECRET=...Ensure the Modal app name configured in the project is unique within your Modal account.

Supabase credentials are always required. Modal credentials are optional if Modal is already configured locally. You only need to set your modal app name in the .env file.

Then from ~/redact/ proceed to run:

modal run redact/workers/modalapp.py For a persistent app on modal run:

modal deploy redact/workers/modalapp.py git clone https://github.com/fw7th/redact.git

cd redactpython -m venv venv

source venv/bin/activatepip install -r requirements.txtPlease go over the Development Setup Prerequisites section.

cp .env.example .envuvicorn app.main:app --reloadpytest tests/./benchmark/benchmarkThis project does not use Docker for deployment; serverless execution is handled entirely by Modal.

When the server is running locally, visit:

- http://localhost:8000/docs — Swagger UI

- http://localhost:8000/redoc — ReDoc

These provide interactive documentation of all available endpoints with live testing.

This project is licensed under the MIT License — see the LICENSE file for details.

- GLiNER medium v2.1 was pivotal in the development of the PII redaction module.

@misc{stepanov2024glinermultitaskgeneralistlightweight,

title={GLiNER multi-task: Generalist Lightweight Model for Various Information Extraction Tasks},

author={Ihor Stepanov and Mykhailo Shtopko},

year={2024},

eprint={2406.12925},

archivePrefix={arXiv},

primaryClass={cs.LG},

url={https://arxiv.org/abs/2406.12925},

}- Tesseract v5.5.1 w/ py-tesseract, the Open Source OCR engine, performs text extraction in this project.

@Manual{,

title = {tesseract: Open Source OCR Engine},

author = {Jeroen Ooms},

year = {2026},

note = {R package version 5.2.5},

url = {https://docs.ropensci.org/tesseract/},

}