docs: langfuse on spcaes guide and gradio example #1529

Merged

andrewrreed

merged 30 commits into

huggingface:main

from

jannikmaierhoefer:langfuse-spaces-example

Jan 7, 2025

7496fef

docs: langfuse on spcaes guide and gradio example

jannikmaierhoefer 0fffbdb

edit toctree

jannikmaierhoefer fcc7485

text edit

jannikmaierhoefer 82e3972

edit troubleshooting part

jannikmaierhoefer f024ddc

edit text

jannikmaierhoefer 41dfe8a

update numbers

jannikmaierhoefer ac70405

fix spelling

jannikmaierhoefer 8462e6f

Update docs/hub/spaces-sdks-docker-langfuse.md

jannikmaierhoefer ad18cdd

Update docs/hub/spaces-sdks-docker-langfuse.md

jannikmaierhoefer a0dfd6e

Update docs/hub/spaces-sdks-docker-langfuse.md

jannikmaierhoefer 112e7e9

Update docs/hub/spaces-sdks-docker-langfuse.md

jannikmaierhoefer 35f25b3

Update docs/hub/spaces-sdks-docker-langfuse.md

jannikmaierhoefer 19dc6c0

Update docs/hub/spaces-sdks-docker-langfuse.md

jannikmaierhoefer 737301c

move troubleshoot section to gradio template readme as this is only g…

jannikmaierhoefer 4c74941

Update docs/hub/spaces-sdks-docker-langfuse.md

jannikmaierhoefer 9849195

edit gradio link name

jannikmaierhoefer 9336d5d

Apply suggestions from code review

andrewrreed 192fb20

fix setup steps numbered list formatting

andrewrreed b1a5a3d

Add simple tracing example with HF Serverless API

andrewrreed 0ca2049

remove <tip> for link formatting

andrewrreed d08059e

point "Deploy on HF" to preselected template

andrewrreed 27485c5

Update docs/hub/spaces-sdks-docker-langfuse.md

andrewrreed fc5070c

include note about HF OAuth

andrewrreed 3b7d39a

add note about AUTH_DISABLE_SIGNUP

andrewrreed 5e976ec

fix tip syntax

andrewrreed cb366e6

alt tip syntax

andrewrreed 42262e0

update note

andrewrreed 345cd96

back to [!TIP]

andrewrreed 5b73543

clarify user access

andrewrreed 1e27b68

minor cleanup

andrewrreed

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,86 @@ | ||

| # Langfuse on Spaces | ||

|

|

||

| This guide shows you how to deploy Langfuse on Hugging Face Spaces and start instrumenting your LLM application. This integration helps you experiment with Hugging Face models, manage your prompts in one place, and evaluate model outputs. | ||

|

|

||

| ## What is Langfuse? | ||

|

|

||

| [Langfuse](https://langfuse.com) is an open-source LLM engineering platform that helps teams collaboratively debug, evaluate, and iterate on their LLM applications. | ||

|

|

||

| Key features of Langfuse include LLM tracing to capture the full context of your application's execution flow, prompt management for centralized and collaborative prompt iteration, evaluation metrics to assess output quality, dataset creation for testing and benchmarking, and a playground to experiment with prompts and model configurations. | ||

|

||

|

|

||

|

||

| _This video is a 10 min walkthrough of the Langfuse features:_ | ||

| <iframe width="700" height="394" src="https://www.youtube.com/embed/2E8iTvGo9Hs?si=i_mPeArwkWc5_4EO" title="10 min Walkthrough of Langfuse – Open Source LLM Observability, Evaluation, and Prompt Management" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe> | ||

|

|

||

| ## Why LLM Observability? | ||

|

|

||

| - As language models become more prevalent, understanding their behavior and performance is important. | ||

| - **LLM observability** involves monitoring and understanding the internal states of an LLM application through its outputs. | ||

| - It is essential for addressing challenges such as: | ||

| - **Complex control flows** with repeated or chained calls, making debugging challenging. | ||

| - **Non-deterministic outputs**, adding complexity to consistent quality assessment. | ||

| - **Varied user intents**, requiring deep understanding to improve user experience. | ||

| - Building LLM applications involves intricate workflows, and observability helps in managing these complexities. | ||

|

|

||

| ## Step 1: Set up Langfuse on Spaces | ||

|

|

||

| The Langfuse Hugging Face Space lets you get up and running with a deployed version of Langfuse in just a few clicks. Within a few minutes, you'll have the default Langfuse dashboard deployed and ready to connect to from your local machine. | ||

|

|

||

| <a href="https://huggingface.co/spaces/langfuse/langfuse-template-space"> | ||

| <img src="https://huggingface.co/datasets/huggingface/badges/resolve/main/deploy-to-spaces-lg.svg" /> | ||

| </a> | ||

|

|

||

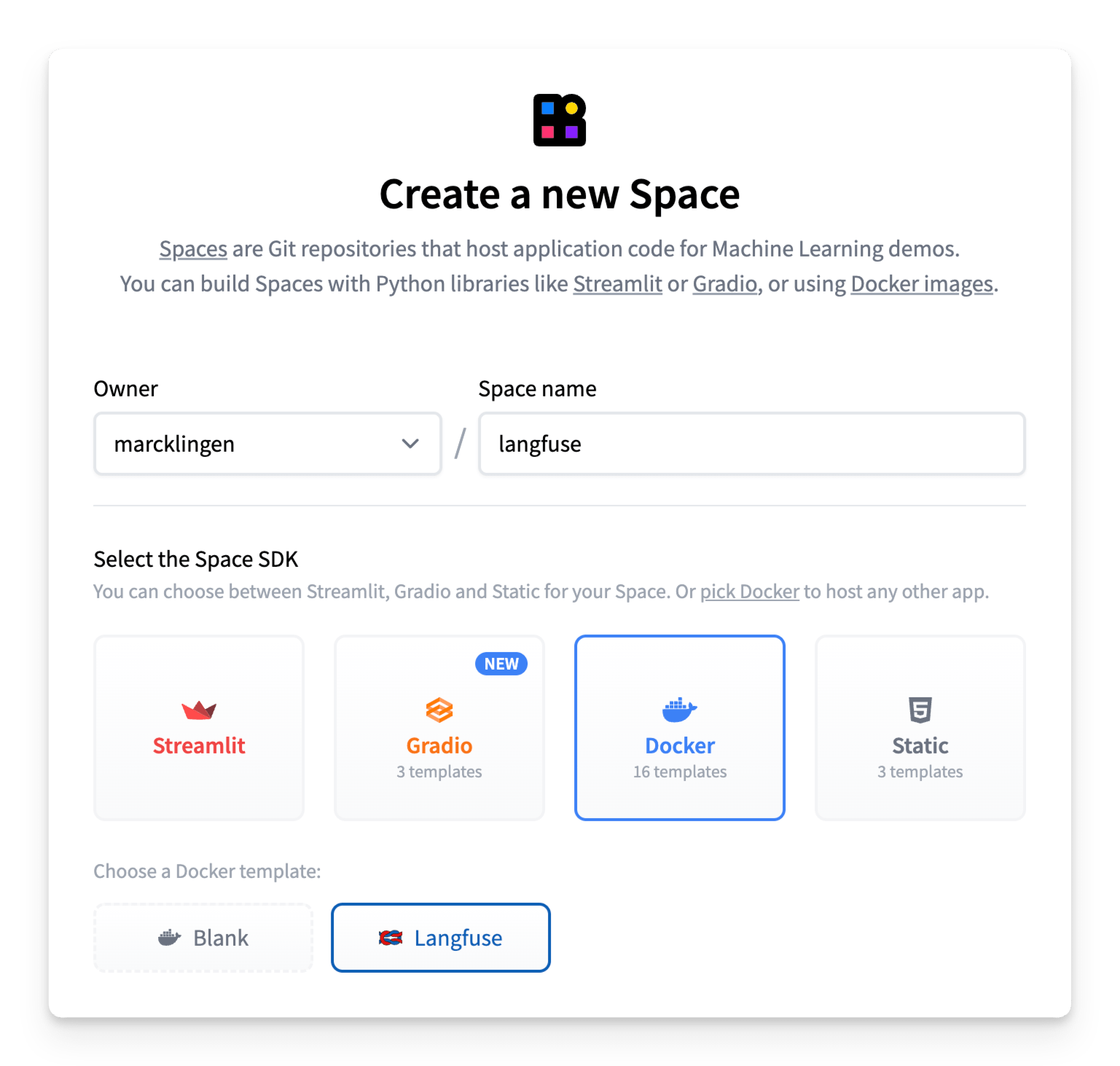

| 1.1. Create a [**new Hugging Face Space**](https://huggingface.co/new-space) | ||

| 1.2. Select **Docker** as the Space SDK | ||

| 1.3. Select **Langfuse** as the Space template | ||

| 1.4. Enable **persistent storage** to ensure your Langfuse data is persisted across restarts | ||

| 1.5. Change the **Environment Variables**: | ||

|

||

| - `NEXTAUTH_SECRET`: Used to validate login session cookies. Generate a secret with at least 256 bits of entropy using `openssl rand -base64 32`. Overwrite the default value for a secure deployment. | ||

|

||

| - `SALT`: Used to salt hashed API keys. Generate a secret with at least 256 bits of entropy using `openssl rand -base64 32`. Overwrite the default value for a secure deployment. | ||

|

||

| - `ENCRYPTION_KEY`: Used to encrypt sensitive data. Must be 256 bits, written as 64 hex characters. Generate it using `openssl rand -hex 32`. Overwrite the default value for a secure deployment. | ||
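If `openssl` is not available, the three values above can also be produced with Python's standard library. This is a minimal sketch rather than part of the Langfuse template: the helper name `generate_langfuse_secrets` is hypothetical, and it assumes that any base64-encoded 32-byte value is acceptable wherever the output of `openssl rand -base64 32` is.

```python
import base64
import secrets


def generate_langfuse_secrets() -> dict[str, str]:
    """Return fresh random values for the three environment variables.

    Hypothetical helper, mirroring the openssl commands in the guide:
    - 32 random bytes, base64-encoded  (like `openssl rand -base64 32`)
    - 32 random bytes, hex-encoded     (like `openssl rand -hex 32`)
    """
    return {
        "NEXTAUTH_SECRET": base64.b64encode(secrets.token_bytes(32)).decode("ascii"),
        "SALT": base64.b64encode(secrets.token_bytes(32)).decode("ascii"),
        # token_hex(32) yields 64 hex characters, i.e. 256 bits.
        "ENCRYPTION_KEY": secrets.token_hex(32),
    }


if __name__ == "__main__":
    for name, value in generate_langfuse_secrets().items():
        print(f"{name}={value}")
```

Paste each printed value into the matching environment variable of the Space, and avoid committing the values to a repository.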

|

||

|

|

||

|

||

|  | ||

|

||

|

|

||

| ## Step 2: Instrument your Code | ||

|

||

|

|

||

| Now that you have Langfuse running, you can start instrumenting your LLM application to capture traces and manage your prompts. | ||

|

|

||

| ### Example: Monitor your Gradio Application | ||

|

|

||

| We created a Gradio template space that shows how to create a simple chat application using a Hugging Face model and trace model calls and user feedback in Langfuse - without leaving Hugging Face. | ||

|

|

||

| <a href="https://huggingface.co/spaces/langfuse/gradio-example-template"> | ||

| <img src="https://huggingface.co/datasets/huggingface/badges/resolve/main/deploy-to-spaces-lg.svg" /> | ||

| </a> | ||

|

|

||

| To get started, clone the [Gradio template space](https://huggingface.co/spaces/langfuse/gradio-example-template) and follow the instructions in the [README](https://huggingface.co/spaces/langfuse/gradio-example-template/blob/main/README.md). | ||

|

|

||

| ### Monitor Any Application | ||

|

|

||

| Langfuse is model-agnostic and can be used to trace any application. Follow the [get-started guide](https://langfuse.com/docs) in the Langfuse documentation to see how you can instrument your code. | ||

|

|

||

| Langfuse maintains native integrations with many popular LLM frameworks, including [Langchain](https://langfuse.com/docs/integrations/langchain/tracing), [LlamaIndex](https://langfuse.com/docs/integrations/llama-index/get-started), and [OpenAI](https://langfuse.com/docs/integrations/openai/python/get-started), and offers Python and JS/TS SDKs to instrument your code. Langfuse also offers API endpoints for ingesting data and has been integrated by other open-source projects such as [Langflow](https://langfuse.com/docs/integrations/langflow), [Dify](https://langfuse.com/docs/integrations/dify), and [Haystack](https://langfuse.com/docs/integrations/haystack/get-started). | ||
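Whichever SDK or integration you choose, it needs the address of your Space and a pair of API keys. The sketch below only prepares that configuration and does not call the Langfuse SDK. It assumes the SDK reads the `LANGFUSE_HOST`, `LANGFUSE_PUBLIC_KEY`, and `LANGFUSE_SECRET_KEY` environment variables (check the Langfuse documentation for your SDK version), and that a Space is reachable at `https://<owner>-<space-name>.hf.space`, a pattern inferred from the example trace link in this guide. The helpers `space_url` and `langfuse_env` and the key values are hypothetical placeholders.

```python
import os
import re


def space_url(owner: str, space_name: str) -> str:
    """Build the assumed direct URL of a Space: https://<owner>-<space>.hf.space."""
    # Assumption: the subdomain is the lowercased "owner-space" with runs of
    # other characters replaced by "-". Verify against your Space's real URL.
    slug = re.sub(r"[^a-z0-9]+", "-", f"{owner}-{space_name}".lower()).strip("-")
    return f"https://{slug}.hf.space"


def langfuse_env(owner: str, space_name: str, public_key: str, secret_key: str) -> dict[str, str]:
    """Collect the environment variables a Langfuse SDK is assumed to read."""
    return {
        "LANGFUSE_HOST": space_url(owner, space_name),
        "LANGFUSE_PUBLIC_KEY": public_key,
        "LANGFUSE_SECRET_KEY": secret_key,
    }


if __name__ == "__main__":
    # Placeholder keys: create real ones in the project settings of your Langfuse Space.
    env = langfuse_env("langfuse", "langfuse-template-space", "pk-lf-placeholder", "sk-lf-placeholder")
    os.environ.update(env)
    print(env["LANGFUSE_HOST"])
```

In a deployed application, prefer setting these values as Space secrets instead of hard-coding them.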

|

|

||

| ## Step 3: View Traces in Langfuse | ||

|

|

||

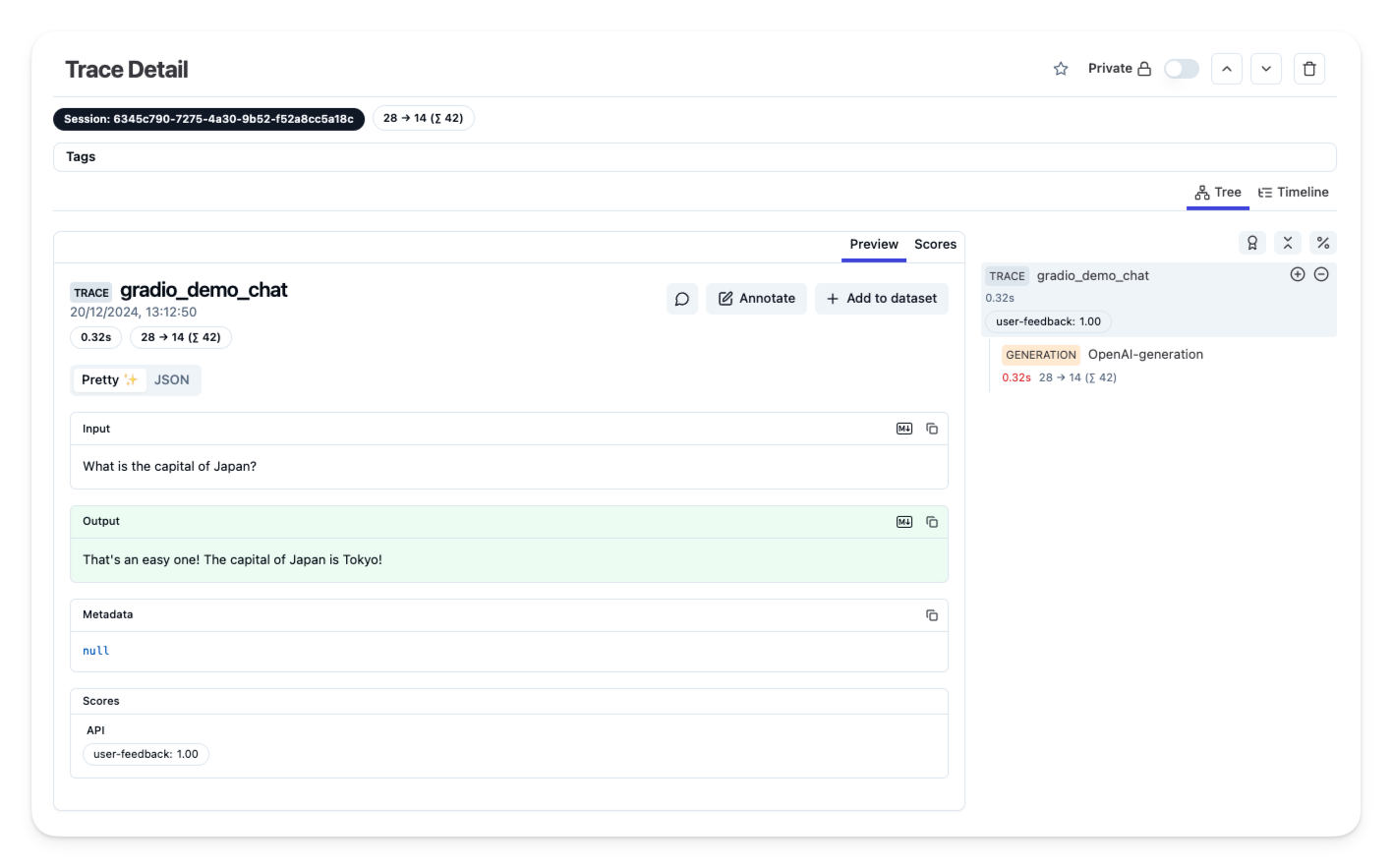

| Once you have instrumented your application and ingested traces or user feedback, you can inspect them in the Langfuse UI. | ||

|

|

||

|  | ||

|

|

||

| _[Example trace in the Langfuse UI](https://langfuse-langfuse-template-space.hf.space/project/cm4r1ajtn000a4co550swodxv/traces/9cdc12fb-71bf-4074-ab0b-0b8d212d839f?timestamp=2024-12-20T12%3A12%3A50.089Z&view=preview)_ | ||

|

|

||

| ## Additional Resources and Support | ||

|

|

||

| - [Langfuse documentation](https://langfuse.com/docs) | ||

| - [Langfuse GitHub repository](https://github.com/langfuse/langfuse) | ||

| - [Langfuse Discord](https://langfuse.com/discord) | ||

| - [Langfuse template Space](https://huggingface.co/spaces/langfuse/langfuse-template-space) | ||

|

|

||

| ## Troubleshooting | ||

|

|

||

| If you encounter issues using the [Langfuse Gradio example template](https://huggingface.co/spaces/langfuse/gradio-example-template): | ||

|

||

|

|

||

| 1. Make sure your app runs locally using `python app.py` | ||

| 2. Check that all required packages are listed in `requirements.txt` | ||

| 3. Check Space logs for any Python errors | ||
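One way to approximate the first two checks is to confirm that every package named in `requirements.txt` is installed in the local environment where `python app.py` works. The following is a rough sketch with hypothetical helper names. It handles simple requirement lines only (name plus optional version specifier or extras) and does not detect packages that the app imports but the file omits.

```python
import re
from importlib import metadata


def requirement_names(text: str) -> list[str]:
    """Extract package names from simple requirements.txt content."""
    names = []
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments
        if not line or line.startswith("-"):  # skip blanks and pip options like -r
            continue
        # Take the leading name; URL-style requirements are not handled here.
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if match:
            names.append(match.group(0))
    return names


def missing_packages(text: str) -> list[str]:
    """Return listed packages that are not installed in this environment."""
    missing = []
    for name in requirement_names(text):
        try:
            metadata.version(name)
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    with open("requirements.txt", encoding="utf-8") as handle:
        print(missing_packages(handle.read()))
```

If a listed package is reported as missing locally yet the app still runs, the entry may be misspelled, which would also make the Space build fail.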

|

|

||

| For more help, start a thread on [GitHub Discussions](https://langfuse.com/discussions) or [open an issue](https://github.com/langfuse/langfuse/issues). | ||

|

||
