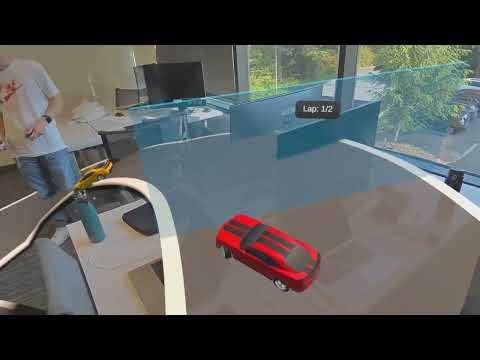

A co-located multiplayer AR racing experience for Meta Quest 3

Demo Video · Dev Log (more technical details)

ARC (Augmented Reality/Remote Control) Car Racing is a multiplayer, co-located augmented reality racing game developed for Meta Quest 3. The game allows players in the same physical space to create and compete on custom, procedurally generated racetracks that adapt to the real-world environment.

Players can collaboratively place checkpoints anywhere in the real world. The system then generates a racetrack that passes through every designated checkpoint, using Meta's Scene API to avoid real-world obstacles such as tables, chairs, and walls.
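To illustrate the idea of a track that passes through every checkpoint, here is a minimal, language-agnostic sketch in Python (the project itself is built in Unity). It assumes a uniform Catmull-Rom spline, chosen only because that curve type interpolates its control points; the README does not say which spline type the game uses. The names `catmull_rom` and `closed_track` are hypothetical, and obstacle avoidance via the Scene API is deliberately left out.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def catmull_rom(p0: Vec3, p1: Vec3, p2: Vec3, p3: Vec3, t: float) -> Vec3:
    """Point on a uniform Catmull-Rom segment between p1 and p2, t in [0, 1]."""
    t2, t3 = t * t, t * t * t
    return tuple(
        0.5 * (2 * b
               + (-a + c) * t
               + (2 * a - 5 * b + 4 * c - d) * t2
               + (-a + 3 * b - 3 * c + d) * t3)
        for a, b, c, d in zip(p0, p1, p2, p3)
    )


def closed_track(checkpoints: Sequence[Vec3], samples_per_segment: int = 8) -> List[Vec3]:
    """Sample a closed loop that visits every checkpoint in order.

    Assumption: checkpoints are already ordered; obstacle avoidance is omitted.
    """
    n = len(checkpoints)
    if n < 3:
        raise ValueError("need at least 3 checkpoints for a closed loop")
    path: List[Vec3] = []
    for i in range(n):
        # Neighbouring checkpoints wrap around so the track closes on itself.
        p0 = checkpoints[(i - 1) % n]
        p1 = checkpoints[i]
        p2 = checkpoints[(i + 1) % n]
        p3 = checkpoints[(i + 2) % n]
        for s in range(samples_per_segment):
            path.append(catmull_rom(p0, p1, p2, p3, s / samples_per_segment))
    return path


# Four checkpoints at different heights, e.g. floor, table top, wall.
checkpoints = [(0.0, 0.0, 0.0), (2.0, 0.8, 0.0), (2.0, 1.5, 2.0), (0.0, 0.0, 2.0)]
track = closed_track(checkpoints)
```

Each segment starts exactly at one checkpoint (`t = 0`), so every checkpoint appears in the sampled path. A real generator would additionally need to reroute segments that intersect scene geometry.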

ARC Car Racing enables easy creation of track designs that would be impossible in the real world. Racetracks can weave through furniture, climb walls, jump across gaps, and span vertical space, creating fun courses without requiring players to manually place every piece of track.

(Click image to go to the video on YouTube)

For more technical details on the procedural race track generation system shown in the video, check out Jenny's dev log.

| Unity 6 | Photon Fusion 2 | Meta XR Building Blocks |
|---|---|---|

- Splines
  - For racetrack generation, a 3D path is stored as a spline, and a mesh is procedurally extruded from the spline knots to form the racetrack.
- Multiplayer Building Blocks
  - Local Matchmaking: joins headsets to the same multiplayer room using Bluetooth discovery.
  - Shared Spatial Anchor Core: uses camera data to create shared points of reference, allowing multiple headsets to understand their relative positions in a shared physical space.
  - Colocation: connects Shared Spatial Anchors to the Photon Fusion multiplayer system to enable colocated experiences.
  - Player Name Tag: adds name tags above other players, useful for determining whether colocation is active.
- Augmented Reality (AR) Capabilities
  - Passthrough: provides a camera feed that lets players see the real world.
  - Occlusion: allows real-world objects, such as other people, to appear in front of virtual objects, creating a realistic sense of depth.
  - MRUK Scene API: provides scene understanding so that virtual objects can interact with elements of the real world.
  - Spatial Audio: allows game sounds to be perceived as coming from different directions as the player moves around the environment.
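
The Splines item above describes extruding a mesh along a spline. As a rough illustration of that technique, the following Python sketch turns a sampled centerline into a flat ribbon of triangles. It is not the project's Unity implementation: the function `extrude_ribbon`, the fixed `up` vector, and the vertex layout are all assumptions made for this example.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _normalize(v: Vec3) -> Vec3:
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        raise ValueError("degenerate direction (zero length)")
    return (v[0] / length, v[1] / length, v[2] / length)


def extrude_ribbon(centerline: Sequence[Vec3], width: float,
                   up: Vec3 = (0.0, 1.0, 0.0)):
    """Build (vertices, triangles) for a flat ribbon along an open polyline.

    Assumption: a single global `up` vector. This breaks where the path runs
    parallel to `up`, so a track that climbs walls needs a per-point up vector.
    """
    n = len(centerline)
    if n < 2:
        raise ValueError("need at least 2 centerline points")
    half = width / 2.0
    vertices: List[Vec3] = []
    for i, p in enumerate(centerline):
        # Tangent from the neighbouring samples (one-sided at the ends).
        tangent = _normalize(_sub(centerline[min(i + 1, n - 1)],
                                  centerline[max(i - 1, 0)]))
        side = _normalize(_cross(up, tangent))
        vertices.append((p[0] - side[0] * half, p[1] - side[1] * half, p[2] - side[2] * half))
        vertices.append((p[0] + side[0] * half, p[1] + side[1] * half, p[2] + side[2] * half))
    triangles: List[Tuple[int, int, int]] = []
    for i in range(n - 1):
        a0, b0, a1, b1 = 2 * i, 2 * i + 1, 2 * i + 2, 2 * i + 3
        # Two triangles per quad between consecutive cross-sections.
        triangles.append((a0, a1, b0))
        triangles.append((b0, a1, b1))
    return vertices, triangles


# A straight 2 m strip, 2 m wide, heading along +z.
verts, tris = extrude_ribbon([(0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.0, 0.0, 2.0)], width=2.0)
```

Each centerline sample contributes one vertex on either edge of the track, and each pair of consecutive samples contributes one quad. Triangle winding order and UVs depend on the target engine and are not handled here.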

In addition to the standard Meta Quest Unity development setup, this project requires a Photon Fusion 2 App ID to enable multiplayer functionality. Follow the instructions here to configure your App ID in Unity.

To use co-located multiplayer with shared spatial anchors, all Meta Quest headsets must be logged into Meta developer accounts within a verified developer organization. Without this, shared spatial anchors will not function and players will not align correctly in the same physical space. See this guide for more details.

To comply with Unity licensing, this repository excludes Unity Asset Store resources that we used, which include:

This project was developed in a team of 4 (Lawrence, Jenny, Karim, Luna) over 8 weeks for CSE 481V: VR Capstone at the University of Washington. We thank the course staff for their guidance and resources.

The following tutorials were fundamental for learning how to develop parts of the system: