Pluggable flow aggregation function functionality added #22

Open: arashkav wants to merge 5 commits into visgl:main from arashkav:flow_agg_plugin_feature

Changes from 1 commit

Commits (5):

- 73ec574 Pluggable flow aggregation function functionality added (arashkav)
- 4b96b73 Updated edge and node aggregation function plugin (arashkav)
- c6e8c75 Some build related errors resolved (arashkav)
- 5e39ffa rebuild issues resolved (arashkav)
- d877776 flowmap.gl configured (arashkav)

    @@ -48,7 +48,12 @@ export interface ClusterIndex<F> {
       aggregateFlows: (
         flows: F[],
         zoom: number,
    -    {getFlowOriginId, getFlowDestId, getFlowMagnitude}: FlowAccessors<F>,
    +    {
    +      getFlowOriginId,
    +      getFlowDestId,
    +      getFlowMagnitude,
    +      getFlowAggFunc,
    +    }: FlowAccessors<F>,
         options?: {
           flowCountsMapReduce?: FlowCountsMapReduce<F>;
         },
    @@ -165,20 +170,24 @@ export function buildIndex<F>(clusterLevels: ClusterLevels): ClusterIndex<F> {
         aggregateFlows: (
           flows,
           zoom,
    -      {getFlowOriginId, getFlowDestId, getFlowMagnitude},
    +      {getFlowOriginId, getFlowDestId, getFlowMagnitude, getFlowAggFunc},
           options = {},
         ) => {
           if (zoom > maxZoom) {
             return flows;
           }
    +      if (!getFlowAggFunc) {
    +        getFlowAggFunc = (flowValues: number[]) =>
    +          flowValues.reduce((a, b) => a + b, 0);
    +      }
           const result: (F | AggregateFlow)[] = [];
           const aggFlowsByKey = new Map<string, AggregateFlow>();
           const makeKey = (origin: string | number, dest: string | number) =>
             `${origin}:${dest}`;
           const {
             flowCountsMapReduce = {
               map: getFlowMagnitude,
    -          reduce: (acc: any, count: number) => (acc || 0) + count,
    +          reduce: getFlowAggFunc,
             },
           } = options;
           for (const flow of flows) {
    @@ -197,13 +206,14 @@ export function buildIndex<F>(clusterLevels: ClusterLevels): ClusterIndex<F> {
                 dest: destCluster,
                 count: flowCountsMapReduce.map(flow),
                 aggregate: true,
    +            values: [flowCountsMapReduce.map(flow)],
               };
               result.push(aggregateFlow);
               aggFlowsByKey.set(key, aggregateFlow);
             } else {
    +          aggregateFlow.values.push(flowCountsMapReduce.map(flow));
               aggregateFlow.count = flowCountsMapReduce.reduce(
    -            aggregateFlow.count,
    -            flowCountsMapReduce.map(flow),
    +            aggregateFlow.values,
               );
             }
           }


Why do you add `values` to flow here? It will likely significantly increase the memory use for the resulting flows data structure.


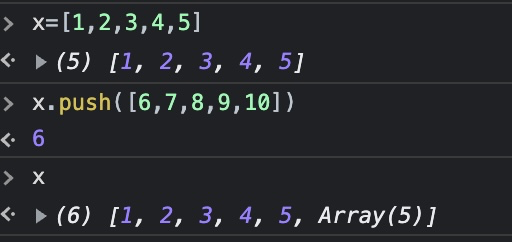

Let me explain why I picked this approach. Previously, you applied a map-reduce approach, which adds each new count to a cluster's running total on every iteration. This works fine when the aggregation function is 'sum'.

The same cannot be achieved if we want an average. For example, say we have three edges with values 10, 5 and 8. If we take the same approach as for sum and average every time the 'reduce' function is called, we get a different number from the real average:

(((10+5)/2) + 8)/2 != (10+5+8)/3
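The inequality above can be checked with a short sketch. The function names `pairwiseAverage` and `trueAverage` are made up for this illustration and are not part of the PR or the library.

```typescript
// Illustrative helper (hypothetical name): averages the accumulator with each
// new value, which is what an incremental acc/value reducer would do.
function pairwiseAverage(values: number[]): number {
  if (values.length === 0) return NaN;
  return values.slice(1).reduce((acc, v) => (acc + v) / 2, values[0]);
}

// Illustrative helper (hypothetical name): the arithmetic mean over all values.
function trueAverage(values: number[]): number {
  return values.reduce((a, b) => a + b, 0) / values.length;
}

const edgeValues = [10, 5, 8];
const running = pairwiseAverage(edgeValues); // ((10 + 5) / 2 + 8) / 2 = 7.75
const exact = trueAverage(edgeValues); // (10 + 5 + 8) / 3, roughly 7.667
```

The pairwise version weights later values more heavily, so the result depends on the order of the inputs, whereas a reducer that sees the whole array does not have this problem.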

This approach, despite your concern, gives the developer full flexibility over which aggregation function to use (e.g. weighted sum, logarithmic, exponential, etc.).

To avoid any performance loss with the normal 'sum' aggregation, I am changing the code to use the previous 'reduce' function (and stop pushing values into the array) if getFlowAggFunc is not defined.

I am also considering replacing the arrays with something that performs better.


Yes, I get that. I was more concerned with the memory footprint of the resulting data structure. In line 209 we add the new `values` property to the `flow` object, so the resulting data structure will keep more data than necessary. It appears to me that `values` is only used as a temporary accumulator for the flow counts, so we don't need to keep it forever.

Another issue with your proposed approach is that the reduce function is called every time a new value is added. This might slow the calculations down unnecessarily, especially if the aggregation function is costly.

Maybe we can instead accumulate the values in a separate temporary map similar to `aggFlowsByKey`, e.g. `aggFlowCountsByKey`. After iterating over all flows we can call `flowCountsMapReduce.reduce` once for each of them and save the results to the flow `count`s. Then we can leave `aggFlowCountsByKey` behind to be garbage collected.

Or alternatively, we can use your accumulation approach, but give the resulting array another pass at the end in which we calculate the averages from the values (calling the agg function only once per flow), add them as counts to the flows and delete the `values` property from the results. Maybe that's simpler.
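The first suggestion in this comment (a temporary map plus a single reduce call per aggregate flow) might look roughly like the sketch below. It is not the code from the PR: the `Flow` and `AggregateFlow` shapes, the `clusterOf` lookup and the function name `aggregateFlowsTwoPass` are simplified assumptions made for illustration, and only the map names `aggFlowsByKey` and `aggFlowCountsByKey` come from the discussion.

```typescript
// Hypothetical, simplified shapes; the real library types are richer.
interface Flow { origin: string; dest: string; count: number; }
interface AggregateFlow { origin: string; dest: string; count: number; aggregate: true; }
type AggFunc = (values: number[]) => number;

function aggregateFlowsTwoPass(
  flows: Flow[],
  clusterOf: (locationId: string) => string, // assumed cluster lookup
  aggFunc: AggFunc = (values) => values.reduce((a, b) => a + b, 0), // default: sum
): AggregateFlow[] {
  const result: AggregateFlow[] = [];
  const aggFlowsByKey = new Map<string, AggregateFlow>();
  // Temporary accumulator; unreachable (and collectable) once this function returns.
  const aggFlowCountsByKey = new Map<string, number[]>();

  // Pass 1: only collect the raw values per origin/dest cluster pair.
  for (const flow of flows) {
    const origin = clusterOf(flow.origin);
    const dest = clusterOf(flow.dest);
    const key = `${origin}:${dest}`;
    let values = aggFlowCountsByKey.get(key);
    if (!values) {
      values = [];
      aggFlowCountsByKey.set(key, values);
      const aggregateFlow: AggregateFlow = { origin, dest, count: 0, aggregate: true };
      aggFlowsByKey.set(key, aggregateFlow);
      result.push(aggregateFlow);
    }
    values.push(flow.count);
  }

  // Pass 2: call the aggregation function exactly once per aggregate flow.
  aggFlowsByKey.forEach((aggregateFlow, key) => {
    aggregateFlow.count = aggFunc(aggFlowCountsByKey.get(key) ?? []);
  });
  return result;
}

// Usage with made-up data: locations are clustered by their first letter.
const sampleFlows: Flow[] = [
  { origin: "a1", dest: "b1", count: 10 },
  { origin: "a2", dest: "b1", count: 5 },
  { origin: "a1", dest: "b2", count: 8 },
  { origin: "c1", dest: "a1", count: 4 },
];
const clusterOf = (id: string) => id.charAt(0).toUpperCase();
const mean: AggFunc = (values) => values.reduce((a, b) => a + b, 0) / values.length;
const summed = aggregateFlowsTwoPass(sampleFlows, clusterOf);
const averaged = aggregateFlowsTwoPass(sampleFlows, clusterOf, mean);
```

Because the value arrays live only in the temporary map, the returned flows carry no `values` property, which addresses the memory concern, and a costly aggregation function runs once per cluster pair rather than once per input flow.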


I made the changes following the proposed approach and added a node aggregation feature as well. Please let me know if they work better now.

To allow weighted aggregation, I added another argument that specifies the weight attribute. For example, say we have a performance metric and want to aggregate it weighted by the volume (flow count):

    getFlowMagnitude: (flow) => flow.metric_1,
    getFlowAggWeight: (flow) => flow.count,
    getFlowAggFunc: (values) =>
      values.reduce((accumulator, curr: any) => accumulator + curr.aggvalue * curr.aggweight, 0) /
      values.reduce((acc, cur: any) => acc + cur.aggweight, 0),

The example app seems quite responsive now, as the memory footprint has dropped dramatically.
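As a standalone illustration of the weighted aggregation described above, here is a minimal sketch. The `WeightedValue` shape is inferred from the `aggvalue` and `aggweight` fields in the snippet and may not match the actual types in the PR. The function name `weightedAverage` and the zero-weight fallback are assumptions.

```typescript
// Assumed shape, inferred from the aggvalue/aggweight fields used in the snippet.
interface WeightedValue { aggvalue: number; aggweight: number; }

// Weighted average: sum(value * weight) / sum(weight), computed in a single pass.
function weightedAverage(values: WeightedValue[]): number {
  let weightedSum = 0;
  let totalWeight = 0;
  for (const { aggvalue, aggweight } of values) {
    weightedSum += aggvalue * aggweight;
    totalWeight += aggweight;
  }
  // Assumption: return 0 when there is no weight at all, instead of NaN.
  return totalWeight === 0 ? 0 : weightedSum / totalWeight;
}

// Made-up data: a metric of 2 observed on 1 trip and a metric of 4 on 3 trips.
const weighted = weightedAverage([
  { aggvalue: 2, aggweight: 1 },
  { aggvalue: 4, aggweight: 3 },
]); // (2 * 1 + 4 * 3) / (1 + 3) = 3.5, versus an unweighted mean of 3
```

A single loop avoids walking the array twice, which may matter when clusters contain many flows. The snippet in the comment computes the same quantity with two `reduce` calls.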