Testing metrics with Tensorboard

This is a multi-output regression problem, thus we can only train/evaluate our models using regression losses/metrics.

With tf.keras, we can use the following built-in losses/metrics:

- Accuracy

- RootMeanSquaredError

- MeanAbsoluteError

- MeanAbsolutePercentageError

- MeanSquaredError

- MeanSquaredLogarithmicError

- CosineSimilarity

- KLDivergence

- LogCoshError

A possible strategy for selecting a set of metrics which can objectively measure a model's performance could be as follows:

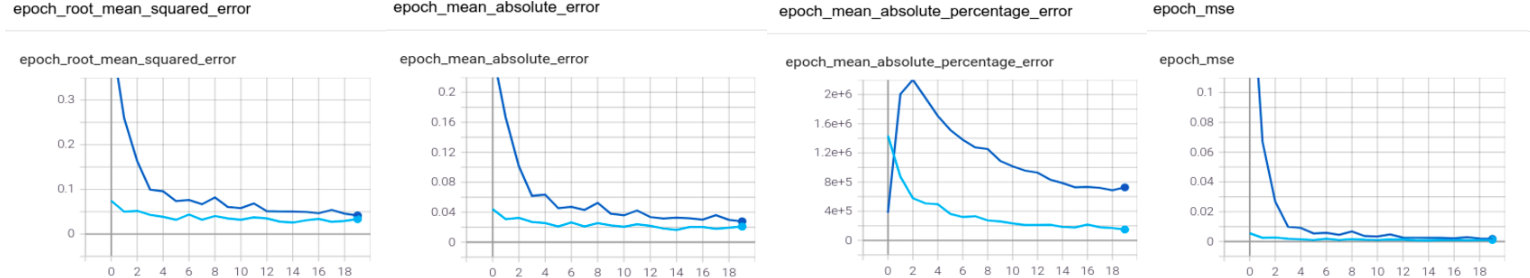

- Plot training and validation error curves of the default DNN model in TensorBoard for all of the above metrics.

- Select the subset of metrics that are not being minimized to a sufficient degree (relative to the others). The idea is to tie the poor performance of the default model to the metrics that actually reflect it. Metrics that already converge to a very low value do not help distinguish models and can be discarded.

Using the above strategy, we have four candidate metrics as follows: RootMeanSquaredError, MeanAbsoluteError, MeanAbsolutePercentageError, and MeanSquaredError.
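For reference, here is a minimal pure-Python sketch of what the four candidate metrics compute. It works on flat lists of targets and predictions (a simplification; multi-output arrays would be flattened first), the function names are our own, and the `eps` guard in `mape` is an assumption of this sketch rather than a statement about the Keras implementation.

```python
import math


def mse(y_true, y_pred):
    """Mean of the squared errors."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Square root of the MSE, in the same units as the targets."""
    return math.sqrt(mse(y_true, y_pred))


def mae(y_true, y_pred):
    """Mean of the absolute errors."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mape(y_true, y_pred, eps=1e-7):
    """Mean absolute error relative to |target|, in percent.

    `eps` avoids division by zero for targets equal to 0 (sketch assumption).
    """
    ratios = (abs(t - p) / max(abs(t), eps) for t, p in zip(y_true, y_pred))
    return 100.0 * sum(ratios) / len(y_true)


# Toy values, chosen only to illustrate the different scales of the metrics.
y_true = [1.0, 2.0, 4.0]
y_pred = [2.0, 2.0, 2.0]
for metric in (mse, rmse, mae, mape):
    print(metric.__name__, metric(y_true, y_pred))
```

Because the four metrics live on different scales (squared units, target units, percent), their absolute values should not be compared with each other, only the same metric across models.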

We can now set any one of these four metrics as our loss function while tracking the values of the other three in TensorBoard.
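A rough sketch of this setup is shown below; it is not the project's actual training code. The helper names (`tracked_metric_names`, `compile_and_fit`, `rmse_loss`), the `logs/metrics_test` directory, the `adam` optimizer, and the 20% validation split are all assumptions made for illustration. As far as we know, `tf.keras.losses` does not ship an RMSE loss class (`RootMeanSquaredError` lives under `tf.keras.metrics`), so the sketch writes that loss by hand.

```python
# Names of the four candidate metrics from the text.
CANDIDATES = [
    "RootMeanSquaredError",
    "MeanAbsoluteError",
    "MeanAbsolutePercentageError",
    "MeanSquaredError",
]


def tracked_metric_names(loss_name):
    """Return the candidate metrics to track alongside the chosen loss."""
    if loss_name not in CANDIDATES:
        raise ValueError(f"unknown candidate: {loss_name}")
    return [name for name in CANDIDATES if name != loss_name]


def compile_and_fit(model, loss_name, x, y, log_dir="logs/metrics_test", epochs=10):
    """Hypothetical helper: use one candidate as the loss, track the other
    three as metrics, and log train/validation curves for TensorBoard."""
    import tensorflow as tf  # lazy import: the helper above needs no TensorFlow

    def rmse_loss(y_true, y_pred):
        # Hand-written RMSE over the batch (an assumption of this sketch).
        y_true = tf.cast(y_true, y_pred.dtype)
        return tf.sqrt(tf.reduce_mean(tf.square(y_true - y_pred)))

    losses = {
        "RootMeanSquaredError": rmse_loss,
        "MeanAbsoluteError": tf.keras.losses.MeanAbsoluteError(),
        "MeanAbsolutePercentageError": tf.keras.losses.MeanAbsolutePercentageError(),
        "MeanSquaredError": tf.keras.losses.MeanSquaredError(),
    }
    # Instantiate the remaining three candidates from tf.keras.metrics.
    metrics = [getattr(tf.keras.metrics, name)() for name in tracked_metric_names(loss_name)]

    model.compile(optimizer="adam", loss=losses[loss_name], metrics=metrics)
    tensorboard = tf.keras.callbacks.TensorBoard(log_dir=log_dir)
    return model.fit(x, y, validation_split=0.2, epochs=epochs, callbacks=[tensorboard])
```

After training, running `tensorboard --logdir logs/metrics_test` should show one train curve and one validation curve for the loss and for each tracked metric.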

We consider Model A objectively better than Model B if it achieves lower values than Model B on a majority of these metrics.
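The majority rule can be sketched as a small comparison over final validation values. The function names and the numbers in the example are made up for illustration; a strict majority is assumed, so a 2-2 split over four metrics counts as a tie.

```python
def count_wins(metrics_a, metrics_b):
    """Count the shared metrics on which each model is strictly lower (better)."""
    shared = metrics_a.keys() & metrics_b.keys()
    wins_a = sum(metrics_a[name] < metrics_b[name] for name in shared)
    wins_b = sum(metrics_b[name] < metrics_a[name] for name in shared)
    return wins_a, wins_b, len(shared)


def better_model(metrics_a, metrics_b):
    """Return "A", "B", or "tie" using a strict majority over shared metrics."""
    wins_a, wins_b, total = count_wins(metrics_a, metrics_b)
    if wins_a > total / 2:
        return "A"
    if wins_b > total / 2:
        return "B"
    return "tie"


# Illustrative (invented) final validation values for two models.
model_a = {"rmse": 0.30, "mae": 0.20, "mape": 12.0, "mse": 0.09}
model_b = {"rmse": 0.35, "mae": 0.25, "mape": 11.0, "mse": 0.1225}
print(better_model(model_a, model_b))
```

Comparing only the final epoch is a simplification; in practice the whole validation curve in TensorBoard is worth inspecting.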

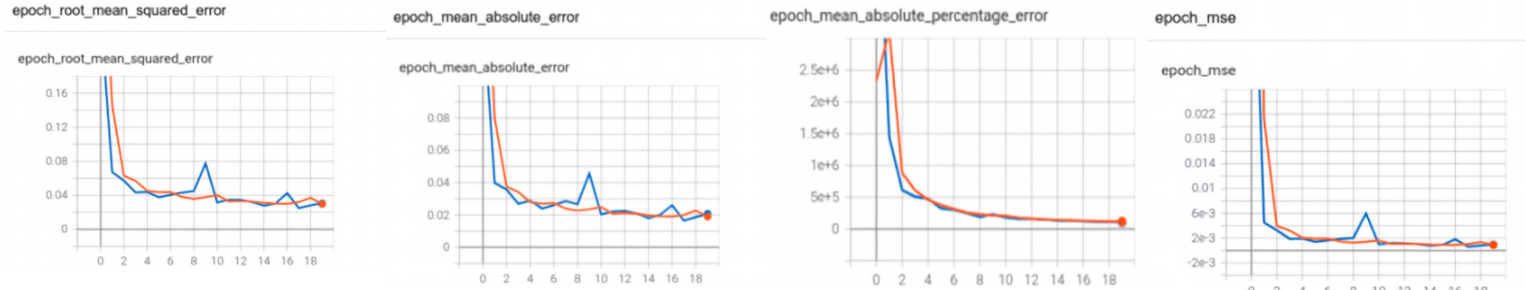

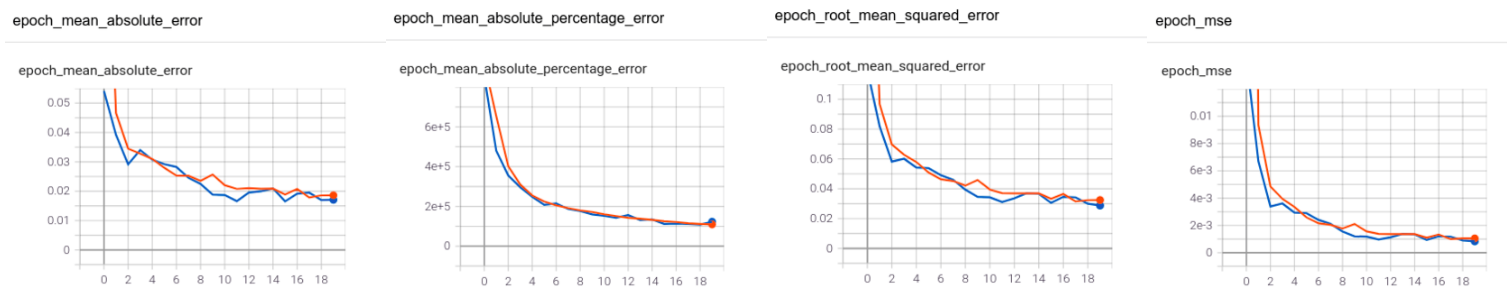

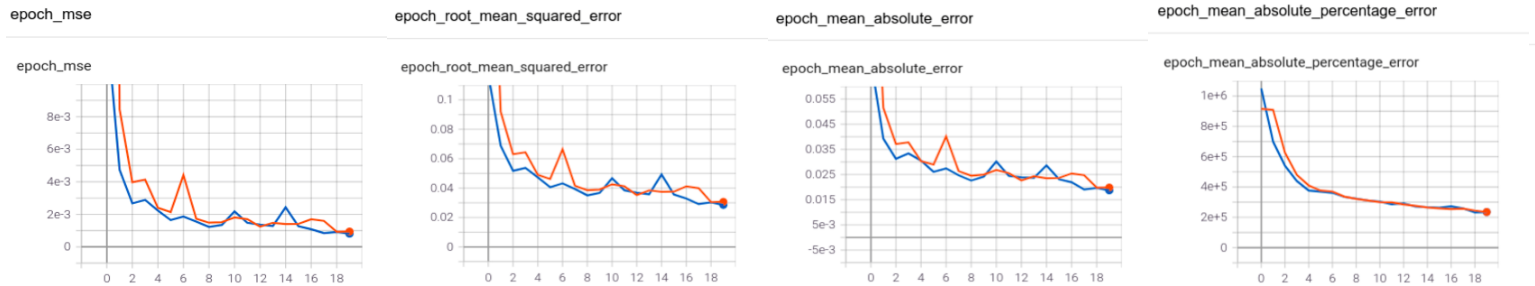

Here's an example of the plots we obtain when setting each of these metrics (excluding MeanAbsolutePercentageError) as our loss function (orange line is train curve, blue line is validation curve):

- RMSE loss:

- MAE loss:

- MSE loss:

The above plots suggest that RootMeanSquaredError, MeanAbsoluteError and MeanSquaredError are all good options for the loss function.

Overall, we recommend setting any one of these three options as the loss while monitoring the curves of the other three candidate metrics (including MeanAbsolutePercentageError) in TensorBoard.

- RootMeanSquaredError or MeanSquaredError is a good and popular choice when we want to minimize the average error.

- MeanAbsoluteError is a good choice when we want to optimize the median error, i.e. there should be as many positive errors as there are negative errors.
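The mean-versus-median distinction can be checked with a toy experiment: for a constant prediction, the value that minimizes the squared error is the mean of the targets, while the value that minimizes the absolute error is their median. The sketch below brute-forces this over integer candidates; the data and the helper name `best_constant` are made up for illustration.

```python
import statistics


def best_constant(targets, loss, candidates):
    """Return the candidate constant prediction with the lowest total loss."""
    return min(candidates, key=lambda c: sum(loss(t - c) for t in targets))


# Skewed toy targets: one large outlier pulls the mean far from the median.
targets = [1, 2, 3, 4, 100]
candidates = range(0, 101)

best_for_mse = best_constant(targets, lambda e: e * e, candidates)
best_for_mae = best_constant(targets, abs, candidates)

print("MSE-optimal constant:", best_for_mse, "mean:", statistics.mean(targets))
print("MAE-optimal constant:", best_for_mae, "median:", statistics.median(targets))
```

The outlier drags the MSE-optimal prediction towards it, whereas the MAE-optimal prediction stays with the bulk of the data, leaving two targets below it and two above.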

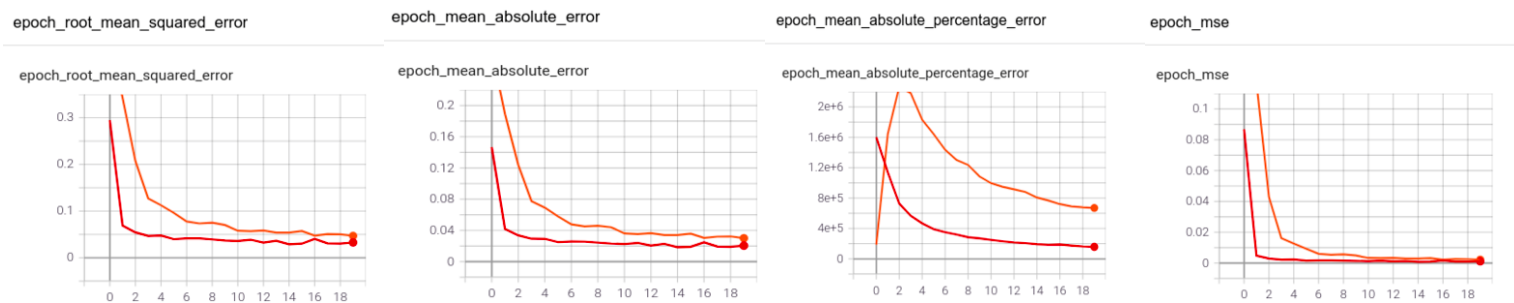

- Comparing train curves of default UNet model trained on 900 events (orange) against the same model trained on 4000 events (red) using RMSE loss:

- Comparing validation curves of default UNet model trained on 900 events (dark blue) against the same model trained on 4000 events (light blue) using RMSE loss:

As we can see from the above plots, an objectively better model produces lower error curves across a majority of the four candidate metrics. Since different metrics measure errors differently, some will be minimized to a greater degree than others and thus show much starker differences in a comparison.