
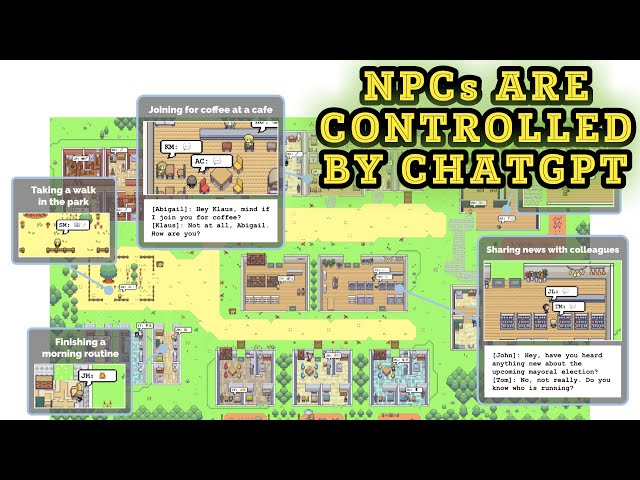

1st ChatGPT Powered NPCs Having SandBox RPG Game Smallville: Generative Agents Interactive Simulacra

Full tutorial link > https://www.youtube.com/watch?v=aIDSmgsT4p8

Discord: https://bit.ly/SECoursesDiscord. So many people have been dreaming of NPC agents powered by ChatGPT. Here is the first model of such games. If I have been of assistance to you and you would like to show your support for my work, please consider becoming a patron on 🥰 https://www.patreon.com/SECourses

This game is like playing The Sims against real people, not NPCs.

Scientific Paper PDF

https://arxiv.org/pdf/2304.03442.pdf

Pre-recorded demo of the game

https://reverie.herokuapp.com/arXiv_Demo/

Technology & Science: News, Tips, Tutorials, Tricks, Best Applications, Guides, Reviews

https://www.youtube.com/playlist?list=PL_pbwdIyffsnkay6X91BWb9rrfLATUMr3

Playlist of StableDiffusion Tutorials, Automatic1111 and Google Colab Guides, DreamBooth, Textual Inversion / Embedding, LoRA, AI Upscaling, Pix2Pix, Img2Img

https://www.youtube.com/playlist?list=PL_pbwdIyffsmclLl0O144nQRnezKlNdx3

00:00:00 Introduction to Smallville, the first-ever RPG game with ChatGPT-powered agents

00:00:48 What is Smallville SandBox RPG Game

00:01:12 The community of the ChatGPT-powered Smallville game

00:02:05 Core concept of the GPT-driven Smallville game

00:02:09 Inter-agent communication

00:03:27 User control

00:03:40 Environmental interaction

00:04:08 Smallville sandbox environment implementation

00:04:23 Example day in the life of ChatGPT-powered agents

00:04:42 Why the game is still far away from being a full-fledged game

00:05:11 Each day a new plan is made

00:05:40 Emerging social behaviors

00:05:56 Information diffusion

00:06:20 Relationship memory

00:06:53 The hardest part of making an RPG game with ChatGPT-powered agents

00:07:25 Workflow of the Smallville game

00:08:12 Generative agent architecture

00:08:59 Memory and retrieval - the core of the game

00:10:10 Memory stream architecture

00:14:02 Reflection memory

00:15:24 Planning and reaction

00:16:53 Reacting and updating plans

00:17:41 From structured world environments to natural language

00:18:12 Human role playing evaluation

00:18:41 The problems of the ChatGPT-powered game

00:19:08 Ethics and societal impact

00:20:14 Demo of the game

CCS CONCEPTS

• Human-centered computing → Interactive systems and tools;

• Computing methodologies → Natural language processing.

KEYWORDS

Human-AI Interaction, agents, generative AI, large language models

Generative Agents: Interactive Simulacra of Human Behavior

Generative agents create believable simulacra of human behavior for interactive applications. In this work, we demonstrate generative agents by populating a sandbox environment, reminiscent of The Sims, with twenty-five agents. Users can observe and intervene as agents plan their days, share news, form relationships, and coordinate group activities.

The Smallville sandbox world, with areas labeled. The root node describes the entire world, children describe areas (e.g., houses, cafe, stores), and leaf nodes describe objects (e.g., table, bookshelf). Agents remember a subgraph reflecting the parts of the world they have seen, in the state that they saw them.
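The world tree in this caption, together with the "there is a stove in the kitchen" rendering mentioned later in the video, can be illustrated with a short Python sketch. This is a minimal illustration under assumptions, not the authors' code. The `Node` class, the sentence template, and the rule that an unseen parent is kept when one of its children was seen are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical world-tree node: the world, an area, or an object."""
    name: str
    children: list[Node] = field(default_factory=list)


def render(node: Node) -> list[str]:
    """Turn every containment edge below `node` into an English sentence."""
    sentences = []
    for child in node.children:
        sentences.append(f"there is a {child.name} in the {node.name}")
        sentences.extend(render(child))  # descend into the child's contents
    return sentences


def remembered_subgraph(node: Node, seen: set[str]) -> Node | None:
    """Keep only the nodes an agent has seen, plus the parents leading to them.

    Keeping unseen parents on the path is an assumption made for this sketch.
    """
    kept = [sub for child in node.children
            if (sub := remembered_subgraph(child, seen)) is not None]
    if node.name in seen or kept:
        return Node(node.name, kept)
    return None


# Illustrative tree, not taken from the actual Smallville map.
house = Node("house", [
    Node("kitchen", [Node("stove")]),
    Node("bedroom", [Node("bed"), Node("desk")]),
])

print(render(house))
print(render(remembered_subgraph(house, {"house", "kitchen", "stove"})))
```

The sketch renders only the remembered subgraph, so each agent is told about the parts of the world it has seen. How the real system keeps that remembered state up to date is not covered here.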

A morning in the life of a generative agent, John Lin. John wakes up around 6 am and completes his morning routine, which includes brushing his teeth, taking a shower, and eating breakfast. He briefly catches up with his wife, Mei, and son, Eddy, before heading out to begin his workday.

At the beginning of the simulation, one agent is initialized with an intent to organize a Valentine’s Day party. Despite many possible points of failure in the ensuing chain of events (agents might not act on that intent, might not remember to tell others, might not remember to show up), the Valentine’s Day party does in fact occur, with a number of agents gathering and interacting.

Generative agent architecture. Agents perceive their environment, and all perceptions are saved in a comprehensive record of the agent’s experiences called the memory stream. Based on their perceptions, the architecture retrieves relevant memories, then uses those retrieved memories to determine an action. These retrieved memories are also used to form longer-term plans, and to create higher-level reflections, which are both entered into the memory stream for future use.
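The loop in this caption can be written as one Python function: perceive, retrieve, write plans and reflections back into the stream, then act. This is a simplified sketch under assumptions. In the paper, planning happens per day and reflection happens periodically, not on every step. The retriever and actor here are hypothetical stand-ins for the scored retrieval and the language-model call, and the names `step`, `last_three`, and `greet_if_customer` are illustrative only.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

Retriever = Callable[[List[str], List[str]], List[str]]
Actor = Callable[[str, List[str]], str]
Synthesizer = Callable[[List[str]], List[str]]


@dataclass
class Agent:
    name: str
    memory_stream: List[str] = field(default_factory=list)


def step(agent: Agent, observations: List[str], retrieve: Retriever, act: Actor,
         plan: Optional[Synthesizer] = None,
         reflect: Optional[Synthesizer] = None) -> str:
    """One pass of the perceive -> retrieve -> (plan, reflect) -> act loop."""
    # Perceive: every observation is appended to the memory stream.
    agent.memory_stream.extend(observations)
    # Retrieve: pick the memories that should condition the next decision.
    retrieved = retrieve(agent.memory_stream, observations)
    # Plans and reflections are written back into the stream for future use.
    for produce in (plan, reflect):
        if produce is not None:
            agent.memory_stream.extend(produce(retrieved))
    # Act: in the real system this is a language-model call.
    return act(agent.name, retrieved)


# Stand-ins for the language-model calls (hypothetical).
def last_three(stream: List[str], _observations: List[str]) -> List[str]:
    return stream[-3:]


def greet_if_customer(name: str, memories: List[str]) -> str:
    if any("customer" in m for m in memories):
        return f"{name} greets the customer"
    return f"{name} keeps working"


isabella = Agent("Isabella Rodriguez")
action = step(isabella, ["desk is idle", "a customer entered the cafe"],
              last_three, greet_if_customer,
              reflect=lambda mems: ["Isabella enjoys chatting with customers"])
print(action)
```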

The memory stream comprises a large number of observations that are relevant and irrelevant to the agent’s current situation. Retrieval identifies a subset of these observations that should be passed to the language model to condition its response to the situation.
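Retrieval can be sketched with the three components the video walks through: recency, importance, and relevance. Each component is min-max normalized and then summed with weights. This is an illustration under assumptions, not the authors' implementation. The decay factor of 0.995 per game hour, the equal weights, and the toy two-dimensional embeddings are assumed values. A real system would obtain embeddings and importance ratings from a model.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Memory:
    description: str
    importance: float        # e.g. a 1-10 rating obtained from the language model
    embedding: list[float]   # e.g. from an embedding model (toy vectors below)
    last_accessed: float     # game time in hours


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def min_max(values: list[float]) -> list[float]:
    lo, hi = min(values), max(values)
    if hi == lo:  # the component cannot tell these memories apart
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def retrieve(memories: list[Memory], query: list[float], now: float, k: int = 3,
             decay: float = 0.995,
             weights: tuple[float, float, float] = (1.0, 1.0, 1.0)) -> list[Memory]:
    if not memories:
        return []
    # Recency: exponential decay over hours since the memory was last accessed.
    recency = min_max([decay ** (now - m.last_accessed) for m in memories])
    importance = min_max([m.importance for m in memories])
    relevance = min_max([cosine(m.embedding, query) for m in memories])
    w_rec, w_imp, w_rel = weights
    scores = [w_rec * r + w_imp * i + w_rel * v
              for r, i, v in zip(recency, importance, relevance)]
    top = sorted(range(len(memories)), key=lambda j: scores[j], reverse=True)[:k]
    for j in top:
        memories[j].last_accessed = now  # retrieving a memory counts as accessing it
    return [memories[j] for j in top]


stream = [
    Memory("ordering decorations for the party", 8, [1.0, 0.0], last_accessed=9.0),
    Memory("desk is idle", 1, [0.0, 1.0], last_accessed=10.0),
    Memory("researching ideas for the party", 7, [0.9, 0.1], last_accessed=8.0),
]
query = [1.0, 0.0]  # toy embedding standing in for the current situation
top = retrieve(stream, query, now=10.0, k=2)
print([m.description for m in top])
```

In this sketch, "desk is idle" is the most recent memory, but it loses on importance and relevance. The two party-related memories are the ones passed to the language model.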

A reflection tree for Klaus Mueller. The agent’s observations of the world, represented in the leaf nodes, are recursively synthesized to derive Klaus’s self-notion that he is highly dedicated to his research.

CONCLUSION

This paper introduces generative agents, interactive computational agents that simulate human behavior. We describe an architecture for generative agents that provides a mechanism for storing a comprehensive record of an agent’s experiences, deepening its understanding of itself and the environment through reflection, and retrieving a compact subset of that information.

00:00:00 Greetings, everyone. Today I am going to introduce you to the first-ever game

00:00:05 built on ChatGPT: Generative Agents: Interactive Simulacra of Human Behavior.

00:00:11 This is a research paper published just 4 days ago by researchers from Stanford

00:00:16 University and Google Research. It actually has a sandbox RPG game engine, and you are

00:00:22 able to watch what is happening or interact with the NPC agents.

00:00:27 In this work, the authors demonstrated generative agents by populating a sandbox environment,

00:00:33 reminiscent of The Sims, with 25 agents. Users can observe and intervene as agents plan

00:00:40 their days, share news, form relationships, and coordinate group activities.

00:00:45 Their sandbox RPG world is named Smallville, with the areas labeled as you are

00:00:51 seeing on the screen. The root node describes the entire world. Children describe areas,

00:00:56 e.g., houses, cafe, stores, and leaf nodes describe objects, e.g., table, bookshelf.

00:01:02 Agents remember a subgraph reflecting the parts of the world they have seen, in the state they

00:01:08 saw them. They describe their agent avatars and communication like this: a community of 25

00:01:14 unique agents inhabits Smallville. Each agent is represented by a simple sprite avatar.

00:01:20 Each agent has a description. If you have used ChatGPT or GPT-4, you already know that you

00:01:27 can make the GPT engine behave as anyone you like. For example, John Lin is a pharmacy

00:01:34 shopkeeper at the Willow Market and Pharmacy who loves to help people. You can tell ChatGPT to

00:01:41 behave like this and communicate with it like that. GPT-4 and ChatGPT are able to do

00:01:48 role-playing really well. John Lin is always looking for ways to make the process of getting medication

00:01:53 easier for his customers. So with this information, you can chat with ChatGPT

00:02:01 as if ChatGPT were this John Lin character. And this is the core concept that the authors of this

00:02:09 paper utilized. Inter-agent communication: the agents interact with the world through their actions

00:02:16 and with each other through natural language, just like you would with ChatGPT or with

00:02:21 another person. At each time step of the sandbox engine, the agents output a natural language

00:02:28 statement describing their current action, such as "Isabella Rodriguez is writing in her journal",

00:02:35 "Isabella Rodriguez is checking her emails", "Isabella Rodriguez is talking with her family on the phone",

00:02:41 and so on. This statement is then translated into concrete movements that affect the sandbox world.

00:02:48 The action is displayed on the sandbox interface as a set of emojis that provide an abstract

00:02:53 representation of the action in the overhead view. Agents are aware of other agents in their local

00:03:00 area, and the generative agent architecture determines whether they walk by or engage in conversation.

00:03:06 Here is a sample from the middle of a conversation between the agents Isabella Rodriguez and Tom

00:03:13 Moreno about the upcoming election. In this sandbox game that the authors have built, users are also able to

00:03:22 control characters (avatars) and communicate with the agents run by ChatGPT. A user running

00:03:28 this simulation can steer it and intervene, either by communicating with an

00:03:34 agent through conversation or by issuing a directive to an agent in the form of an inner

00:03:40 voice. Environmental interaction: agents move around Smallville as one would in a simple video

00:03:47 game, entering and leaving buildings, navigating its map, and approaching other agents. Agent movements

00:03:53 are directed by the generative agent architecture and the sandbox game engine. When the model

00:03:59 dictates that the agent will move to a location, they calculate a walking path to the destination in

00:04:05 the Smallville environment, and the agent begins moving. To build this sandbox environment,

00:04:10 they used the Phaser web game development framework. The visual environment sprites, including agent

00:04:18 avatars, as well as the environment map and collision map that they authored, are imported into Phaser.
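The video says only that a walking path to the destination is calculated on the Smallville map. It does not say which algorithm is used. As an assumption, here is a breadth-first search over a collision grid where `#` marks a blocked tile. The grid format and the `walking_path` name are hypothetical and do not reflect Phaser's collision-map format.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Tile = Tuple[int, int]  # (row, column)


def walking_path(grid: List[str], start: Tile, goal: Tile) -> Optional[List[Tile]]:
    """Shortest 4-connected path from start to goal, or None if unreachable."""
    rows, cols = len(grid), len(grid[0])
    came_from: Dict[Tile, Optional[Tile]] = {start: None}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        if current == goal:
            path = []
            while current is not None:  # walk back through the predecessors
                path.append(current)
                current = came_from[current]
            return path[::-1]
        r, c = current
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] != "#" and nxt not in came_from):
                came_from[nxt] = current
                queue.append(nxt)
    return None


# Toy map: the wall in the middle row forces a detour around the right side.
town = [
    "...",
    "##.",
    "...",
]
print(walking_path(town, (0, 0), (2, 0)))
```

BFS finds shortest paths on an unweighted tile grid. On a map the size of Smallville, A* with a distance heuristic would be a common alternative.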

00:04:23 Starting from a single-paragraph description, agents begin to plan their days. As time passes in

00:04:29 the sandbox world, their behaviors evolve: these agents interact with each other and the world,

00:04:35 build memories and relationships, and coordinate joint activities. However, this game is still far

00:04:42 from being a full-fledged game, because the agents are only able to use their previous day's memory.

00:04:50 The authors explain that they prompt the language model with the agent's summary description (e.g., name,

00:04:57 traits, and a summary of their recent experiences) and a summary of their previous day. So with

00:05:03 this much information, the agent's new day is determined and planned. Each day, a new plan

00:05:12 is made for each agent, based on the previous day's experiences and on the initial characteristics

00:05:19 of the agent. For example, Figure 3 displays the morning routine of the agent John Lin. John

00:05:27 wakes up around 6 am and completes his morning routine, which includes brushing his teeth, taking

00:05:33 a shower, and eating breakfast. He briefly catches up with his wife, Mei, and son, Eddy, before heading

00:05:40 out to begin his workday. Emerging social behaviors: by interacting with each other,

00:05:45 generative agents in Smallville exchange information, form new relationships, and coordinate

00:05:51 joint activities. These social behaviors are emergent rather than preprogrammed. Information

00:05:57 diffusion: as agents notice each other, they may engage in dialogue. As they do so, information

00:06:03 can spread from agent to agent. For instance, in a conversation between Sam and Tom at the grocery

00:06:09 store, Sam tells Tom about his candidacy in the local election. In this way, agents spread

00:06:16 their words and learn new information from other agents. Relationship memory: agents in Smallville

00:06:22 form new relationships over time and remember their interactions with other agents. For example,

00:06:28 Sam does not know Latoya Williams at the start. While taking a walk in Johnson Park, Sam runs into

00:06:35 Latoya, they introduce themselves, and Latoya mentions that she is working on a photography

00:06:40 project. In a later interaction, Sam's exchange with Latoya shows that he remembers their first meeting,

00:06:47 as he asks, "Hi Latoya, how is your project going?" and she replies, "Hi Sam, it is going well." The hardest

00:06:54 part of such a sandbox RPG game is currently the memory, because ChatGPT and GPT-4 are extremely

00:07:03 limited in the amount of context they can hold and remember. Therefore, for such a big game,

00:07:10 you have to maintain your own memory stream and, based on that memory, provide memory information

00:07:18 every time you interact with the GPT agent. So the authors came up with this

00:07:25 workflow for their game: perceive, store in the memory stream, retrieve relevant memories, plan, reflect, and

00:07:33 act. The authors explain this workflow as follows: agents perceive their environment, and all

00:07:39 perceptions are saved in a comprehensive record of the agent's experiences called the memory

00:07:45 stream. Based on their perceptions, the architecture retrieves relevant memories, then uses those

00:07:52 retrieved memories to determine an action. These retrieved memories are also used to form longer-term

00:07:58 plans and to create higher-level reflections, which are both entered into the memory stream for

00:08:04 future use. So it is still not possible to use the GPT engine itself to hold the entire memory of an agent.

00:08:12 Generative agent architecture: their current implementation uses the gpt-3.5-turbo version

00:08:19 of ChatGPT. They expect that the architectural basics of generative agents (memory, planning, and

00:08:26 reflection) will likely remain the same as language models improve. Newer language models (e.g., GPT-4)

00:08:33 will continue to expand the expressivity and performance of the prompts that underpin

00:08:39 generative agents. When they were writing this paper, the GPT-4 API was still invitation-only,

00:08:47 so they used ChatGPT. They would probably have obtained much better results if GPT-4 had been

00:08:54 available when they were working on this project. Memory and retrieval are the most important

00:09:00 core of this sandbox game. The challenge (this is the most important part): creating generative

00:09:06 agents that can simulate human behavior requires reasoning about a set of experiences that is far

00:09:13 larger than what should be described in a prompt, as the full memory stream can distract the model

00:09:19 and does not even fit into the limited context window. So you see, the full record is far larger than

00:09:26 what the model can currently take in at once. To overcome this restriction,

00:09:32 they developed a memory stream that maintains a comprehensive record of the agent's experience.

00:09:39 This is the core of their sandbox game. It is a list of memory objects, where each object

00:09:46 contains a natural language description, a creation timestamp, and a most recent access

00:09:51 timestamp. The most basic element of the memory stream is an observation, which is an event

00:09:57 directly perceived by an agent. Common observations include behaviors performed by the agents

00:10:03 themselves, or behaviors that agents perceive being performed by other agents or non-agent objects.
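The memory object described above has three fields: a natural language description, a creation timestamp, and a most recent access timestamp. These map directly onto a small Python dataclass. In the sketch below, the `kind` tag, the `MemoryStream` class, and the rule that a new memory counts as accessed when it is created are hypothetical additions. The dates are only illustrative.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass
class MemoryObject:
    description: str            # natural language description
    created: datetime           # creation timestamp
    last_accessed: datetime     # most recent access timestamp
    kind: str = "observation"   # hypothetical tag; reflections and plans would be other kinds


class MemoryStream:
    """Append-only list of memory objects (a minimal, hypothetical sketch)."""

    def __init__(self) -> None:
        self.records: List[MemoryObject] = []

    def observe(self, description: str, at: datetime) -> MemoryObject:
        # A new observation counts as accessed at the moment it is created (assumption).
        memory = MemoryObject(description, created=at, last_accessed=at)
        self.records.append(memory)
        return memory

    def mark_accessed(self, memory: MemoryObject, at: datetime) -> None:
        # Retrieval updates only the access time; the creation time never changes.
        memory.last_accessed = at


stream = MemoryStream()
desk = stream.observe("desk is idle", datetime(2023, 2, 13, 6, 0))
stream.observe("bed is idle", datetime(2023, 2, 13, 6, 0))
stream.mark_accessed(desk, datetime(2023, 2, 13, 9, 30))
print([m.description for m in stream.records])
```

Keeping the two timestamps separate is what lets recency be measured from the last access rather than from creation.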

00:10:10 So this is their memory stream. We see the timestamp and the description of each observation,

00:10:16 each experience: "desk is idle", "bed is idle", "closet is idle". Based on this memory stream, agents

00:10:23 plan their next actions. Then the question: "What are you looking forward to the most right now?" Now this is important:

00:10:29 "Isabella Rodriguez is excited to be planning a Valentine's Day party at Hobbs Cafe on

00:10:34 February 14th from 5 p.m. and is eager to invite everyone to attend the party." So they need to

00:10:41 retrieve memories, but not every memory. They need to rank memories by recency,

00:10:49 importance, and relevance. Then, from these retrieved memories, the system asks the ChatGPT

00:10:56 engine what to do next. So you see, each memory gets a score from the engine they developed.

00:11:04 "Ordering decorations for the party" has a score of 2.21. "Researching ideas for the party" has a score of 2.20.

00:11:13 Figure 6 is explained like this: the memory stream comprises a large number of observations

00:11:18 that are relevant and irrelevant to the agent's current situation. Retrieval identifies a subset

00:11:24 of these observations that should be passed to the language model (ChatGPT in this

00:11:30 case) to condition its response to the situation. So the hardest part of their sandbox game was building

00:11:38 the architecture that implements a retrieval function, which takes the agent's current situation

00:11:44 as input and returns a subset of the memory stream to pass on to the language model. This is the

00:11:50 most important part of making such a game or project, because the better you retrieve the

00:11:58 experiences, the better the results you will get from the language model. For this task, they defined

00:12:06 three main components. Recency assigns a higher score to memory objects that were recently

00:12:11 accessed, so that events from a moment ago or this morning are likely to remain in the agent's

00:12:17 attentional sphere. Importance distinguishes mundane from core memories by assigning a higher

00:12:23 score to those memory objects that the agent believes to be important. Again, there are many

00:12:29 possible implementations of an importance score. The authors found that directly asking the language

00:12:36 model to output an integer score is effective. This is really convenient, actually. So they ask

00:12:41 the model like this and get the score from the model itself. The third component is relevance.
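The integer importance rating mentioned just before relevance can be sketched as a prompt plus a parser. The prompt wording below approximates the one printed in the paper and should be checked against it. `complete` is a hypothetical stand-in for whatever function sends a prompt to the language model and returns its text. The clamping and fallback rules are assumptions.

```python
import re
from typing import Callable

IMPORTANCE_PROMPT = (
    "On the scale of 1 to 10, where 1 is purely mundane (e.g., brushing teeth, "
    "making bed) and 10 is extremely poignant (e.g., a break up, college "
    "acceptance), rate the likely poignancy of the following piece of memory.\n"
    "Memory: {memory}\n"
    "Rating:"
)


def rate_importance(memory: str, complete: Callable[[str], str]) -> int:
    """Ask the model for a 1-10 rating and parse the first integer in its reply."""
    reply = complete(IMPORTANCE_PROMPT.format(memory=memory))
    match = re.search(r"\d+", reply)
    if match is None:
        return 1  # unparseable reply: treat the memory as mundane (assumption)
    return max(1, min(10, int(match.group())))  # clamp to the 1-10 scale


# Fake model for illustration: always answers "2".
print(rate_importance("brushing teeth", lambda prompt: " 2"))
```

Because the rating is computed once when the memory is stored, it can be reused for every later retrieval without another model call.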

00:12:48 It assigns a higher score to memory objects that are related to the current situation. What is relevant

00:12:54 depends on the answer to "relevant to what?", so they condition relevance on a query memory. If the query,

00:13:01 for example, is that a student is discussing what to study for a chemistry test with a classmate,

00:13:07 memory objects about their breakfast should have low relevance, whereas memory objects about the

00:13:13 teacher and schoolwork should have high relevance. In their implementation, they use the language

00:13:18 model to generate an embedding vector of the text description of each memory. Then they calculate

00:13:24 relevance as the cosine similarity between the memory's embedding vector and the query memory's

00:13:30 embedding vector. To calculate the final retrieval score, which is the core, as I said,

00:13:36 they normalize the recency, relevance, and importance scores to the range between zero and one

00:13:42 by min-max scaling. The retrieval function scores all memories as a weighted combination of the three

00:13:48 elements: recency, importance, and relevance. Once you build this system, the rest is done by the

00:13:55 language model, ChatGPT. So this is the core of making such a game engine. However, this

00:14:02 is still not perfect or sufficient. Therefore, the authors came up with another type of memory called

00:14:08 reflection. The challenge: generative agents, when equipped with only raw observational memory, struggle to

00:14:15 generalize or make inferences. Actually, this is also because of the limited amount of memory the model

00:14:20 can handle. Consider a scenario in which Klaus Mueller is asked by the user: "If you had to

00:14:27 choose one person of those you know to spend an hour with, who would it be?" With access to only

00:14:33 observational memory, the agent simply chooses the person with whom Klaus has had the most frequent

00:14:40 interactions, and the answer would be Wolfgang, his college dorm neighbor. Unfortunately, Wolfgang and

00:14:46 Klaus only ever see each other in passing and do not have deep interactions. A more desirable response

00:14:53 requires that the agent generalize from memories of Klaus spending hours on a research project

00:14:59 to generate a higher-level reflection. So they developed this approach: reflections are generated

00:15:05 periodically. In their implementation, they generate reflections when the sum of the importance scores

00:15:11 for the latest events perceived by the agents exceeds a certain threshold. In practice, their

00:15:18 agents reflected roughly two or three times a day. Another challenge is planning and reaction.
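The reflection trigger described above fires when the summed importance of the latest events exceeds a threshold, and that fits in a few lines of Python. This is a sketch under assumptions. The threshold of 20, the example events, and the `summarize` stand-in for the language-model call are hypothetical. Because reflections are themselves memories, they can later feed further reflections, which produces a tree like the one shown for Klaus.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ReflectionTrigger:
    """Accumulates importance scores until their sum exceeds a (hypothetical) threshold."""
    threshold: float
    accumulated: float = 0.0
    recent: List[str] = field(default_factory=list)


def observe(trigger: ReflectionTrigger, description: str, importance: float,
            synthesize: Callable[[List[str]], List[str]]) -> List[str]:
    """Record one event; return new reflections if the threshold was exceeded."""
    trigger.accumulated += importance
    trigger.recent.append(description)
    if trigger.accumulated <= trigger.threshold:
        return []
    # In the real system a language model would turn these memories into insights.
    reflections = synthesize(trigger.recent)
    trigger.accumulated = 0.0  # start counting again after reflecting
    trigger.recent.clear()
    return reflections


def summarize(evidence: List[str]) -> List[str]:
    # Stand-in for the language-model call (hypothetical output format).
    return [f"Klaus is dedicated to his research (based on {len(evidence)} memories)"]


trigger = ReflectionTrigger(threshold=20)
events = [
    ("Klaus is reading about gentrification", 6),
    ("Klaus is discussing his research with a classmate", 7),
    ("Klaus is writing his research paper for hours", 8),
]
results = [observe(trigger, text, score, summarize) for text, score in events]
print(results)
```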

00:15:24 While a large language model can generate plausible behavior in response to situational

00:15:30 information, agents need to plan over a longer time horizon to ensure that their sequence of

00:15:37 actions is coherent and believable. If we prompt a language model with Klaus's background, describe the

00:15:44 time, and ask what action he ought to take at the given moment, Klaus would eat lunch at 12 p.m.,

00:15:50 but then again at 12:30 p.m. and 1 p.m., despite having already eaten his lunch twice. To overcome

00:15:58 this problem, at the beginning of each day they use the memory of the previous day and the description of

00:16:05 the agent to plan the entire day at an abstract level. Then they take a top-down

00:16:14 approach and recursively generate more detail for each part of the day. Their

00:16:22 model first generates a rough sketch of the agent's plan for a day, divided into five to eight chunks.

00:16:29 Then these chunks are recursively subdivided into finer chunks, and those are

00:16:37 subdivided again into 5 to 15 minute chunks, e.g., "4:00 p.m.: grab a light snack, such as a piece of fruit, a

00:16:46 granola bar, or some nuts. 4:05 p.m.: take a short walk around his workspace." So this is how

00:16:53 their planning works. Of course, there is also reacting and updating plans.
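The top-down planning described above can be sketched as a recursive decomposition. A day-level chunk is split, then split again, until each item is short enough to act on. In the real system each split is a language-model prompt. Here, `split_evenly` is a hypothetical stand-in that produces hour-long chunks and then 15-minute chunks, and the 15-minute stopping point is an assumption chosen to match the "5 to 15 minute" granularity.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class PlanItem:
    start: int      # minutes since midnight
    minutes: int
    activity: str
    children: List["PlanItem"] = field(default_factory=list)


Decomposer = Callable[[PlanItem], List[PlanItem]]


def refine(item: PlanItem, decompose: Decomposer, finest: int = 15) -> PlanItem:
    """Top-down planning: split a chunk recursively until it is `finest` minutes or shorter."""
    if item.minutes > finest:
        item.children = [refine(c, decompose, finest) for c in decompose(item)]
    return item


def leaves(item: PlanItem) -> List[PlanItem]:
    """The concrete schedule: the finest-grained actions, in order."""
    if not item.children:
        return [item]
    return [leaf for child in item.children for leaf in leaves(child)]


def clock(minute_of_day: int) -> str:
    hour, minute = divmod(minute_of_day, 60)
    return f"{(hour - 1) % 12 + 1}:{minute:02d} {'am' if hour < 12 else 'pm'}"


def split_evenly(item: PlanItem) -> List[PlanItem]:
    # Stand-in for the language model: hour-long chunks first, then 15-minute ones.
    # Assumes chunk lengths divide evenly by the step.
    step = 60 if item.minutes > 60 else 15
    return [PlanItem(item.start + offset, step, f"{item.activity} (part {n + 1})")
            for n, offset in enumerate(range(0, item.minutes, step))]


afternoon = refine(PlanItem(13 * 60, 240, "work on his research"), split_evenly)
schedule = leaves(afternoon)
for action in schedule[:3]:
    print(clock(action.start), action.activity)
```

Planning the whole day before acting avoids the "lunch three times" problem. Each fine-grained action is derived from one coarse chunk, so the chunk appears once in the schedule and is not re-decided at every step.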

00:16:58 Generative agents operate in an action loop where, at each time step, they perceive the world around

00:17:03 them, and those perceived observations are stored in their memory stream. They prompt the language

00:17:10 model (e.g., ChatGPT) with these observations to decide whether the agent should continue with

00:17:17 their existing plan or react. The context summary is generated through two prompts that

00:17:22 retrieve memories via the queries "What is [observer]'s relationship with the [observed entity]?"

00:17:28 and "[Observed entity] is [action status of the observed entity]", and their answers are summarized

00:17:35 together. The output suggests that John could consider asking Eddy about his music composition

00:17:41 project. So how does all of this work? From structured world environments to natural language

00:17:48 and back again: they briefly describe their architecture. To achieve this, they represent

00:17:54 the sandbox environment's areas and objects as a tree data structure, with an edge in the tree

00:18:00 indicating a containment relationship. In the sandbox world, they convert this tree into natural

00:18:05 language to pass to generative agents. For instance, "stove" being a child of "kitchen" is rendered into

00:18:12 "there is a stove in the kitchen". They also compared behaviors: the full architecture, which includes

00:18:19 reflection, planning, and observation, performed the best. They also hired humans to evaluate it.

00:18:27 Human crowd workers role-played the agents in a condition intended to provide a human

00:18:34 baseline. However, they found that the full architecture performed better than the human role-players.

00:18:41 This is just amazing, if you ask me, especially since their system was very limited and

00:18:48 constrained, with only one day of memory and other restrictions. Still, there were a lot of problems. For

00:18:55 example, the famous hallucination problem also occurred in their game. The hallucination problem

00:19:03 is the most severe problem of large language models such as ChatGPT and GPT-4. If you have used

00:19:08 them, you probably already know this. The authors also discussed the ethics and societal impact.

00:19:15 I also find this very important, because one of the biggest risks is people forming

00:19:23 parasocial relationships with generative agents, even when such relationships may not be appropriate.

00:19:29 This is a really important thing to be careful about. Despite being aware that generative

00:19:35 agents are computational entities, users may anthropomorphize them or attach human emotions

00:19:42 to them. To mitigate this risk, they propose two principles. First, generative agents should

00:19:48 explicitly disclose their nature as computational entities. Second, developers of generative agents

00:19:55 must ensure that the agents, or the underlying language models, are value-aligned so that they

00:20:01 do not engage in behaviors that would be inappropriate given the context, e.g., reciprocating

00:20:08 confessions of love. The paper is published on arXiv; the link will be in the description.

00:20:14 Moreover, the authors also published a replay of the simulation, but it is pre-computed, so you won't be able to

00:20:21 play with it. You can pick any character you want and see what they are doing in this sandbox RPG

00:20:28 environment. You see, it starts on Monday, February 13th, at 6 am. The agents are moving

00:20:35 and doing their things. You can click any agent and see their behavior. Their current statuses are

00:20:42 also displayed with these icons. This one is sleeping. This one is taking a shower. This one

00:20:47 is reading a book. This one is drawing something. Let's look at that. When we click, we can see

00:20:53 the action that they are doing. So this is the actual gameplay. It is of course simple, because

00:20:58 this was research, not a full-fledged game, but it is pretty cute. This one is doing

00:21:05 a workout in the beginning. This one is still sleeping. This one is changing clothes. For example,

00:21:11 let's look at this one. Yeah, it looks like the demo is also not working very well, because even though

00:21:17 we click, we are not able to see that agent right now. We are also not able to move around the demo.

00:21:25 Maybe this is because I am using Google Chrome, but I am not sure; it shouldn't be. By the way, when you

00:21:31 click an agent, at the very bottom you see the current action, location, and current conversation

00:21:38 as well. This is a pre-recording, as I said, not live action, and you can't control any agent

00:21:45 in this demo. The link to this demo will also be in the description. The authors still haven't

00:21:51 released any code, so you won't be able to run this on your computer, but this is the beginning of

00:21:59 GPT-powered RPG games, we can say. If you have enjoyed this video, please like and subscribe

00:22:06 so you don't miss any news. I also have excellent tutorials on Stable Diffusion and other

00:22:11 technology topics. If you support us on Patreon or by joining on YouTube, that would help us

00:22:17 tremendously. The links will be in the description and also in the comment section. Hopefully see you

00:22:23 in another awesome video.